Talia Xu

Exploiting Light To Enhance The Endurance and Navigation of Lighter-Than-Air Micro-Drones

Jan 19, 2026

Abstract:Micro-Unmanned Aerial Vehicles (UAVs) are rapidly expanding into tasks ranging from inventory to environmental sensing, yet their short endurance and unreliable navigation in GPS-denied spaces limit deployment. Lighter-Than-Air (LTA) drones offer an energy-efficient alternative: they use a helium envelope to provide buoyancy, which enables near-zero power drain during hovering and much longer operation. LTAs are promising, but their design is complex, and they lack integrated solutions that enable sustained autonomous operation and navigation with simple, low-infrastructure setups. We propose a compact, self-sustaining LTA drone that uses light for both energy harvesting and navigation. Our contributions are threefold: (i) a high-fidelity simulation framework to analyze LTA aerodynamics and select a stable, efficient configuration; (ii) a framework to integrate solar cells on the envelope to provide net-positive energy; and (iii) a point-and-go navigation system with three light-seeking algorithms operating on a single light beacon. Our LTA analysis, together with the integrated solar panels, not only saves energy while flying but also enables sustainable operation, providing 1 minute of flying time for every 4 minutes of energy harvesting under an illuminance of 80 klux. We also demonstrate robust single-beacon navigation towards a light source up to 7 m away, in indoor and outdoor environments, even with moderate winds. The resulting system indicates a plausible path toward persistent, autonomous operation for indoor and outdoor monitoring. More broadly, this work provides a practical pathway for translating the promise of LTA drones into a persistent, self-sustaining aerial system.
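The harvesting figure in the abstract (1 minute of flight per 4 minutes of harvesting at 80 klux) can be turned into a rough flight budget. The sketch below is only arithmetic on that ratio: it assumes the drone strictly alternates between harvesting and flying and that the ratio scales linearly with time under constant illumination. The function names are hypothetical and do not come from the paper.

```python
def flight_duty_cycle(flight_min: float, harvest_min: float) -> float:
    """Fraction of a harvest-then-fly cycle that is spent flying."""
    return flight_min / (flight_min + harvest_min)


def flight_minutes(harvest_total_min: float,
                   flight_min: float = 1.0,
                   harvest_min: float = 4.0) -> float:
    """Flight time earned from a harvesting period.

    Assumes linear scaling of the 1:4 ratio quoted in the abstract,
    which holds only if illumination stays constant.
    """
    return harvest_total_min * flight_min / harvest_min


if __name__ == "__main__":
    print(f"duty cycle: {flight_duty_cycle(1.0, 4.0):.0%}")
    print(f"flight earned from 60 min of harvesting: {flight_minutes(60.0)} min")
```

Under these assumptions the drone flies for one fifth of each cycle, so an hour of harvesting would buy about a quarter of an hour of flight.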

Animal Re-Identification on Microcontrollers

Dec 09, 2025

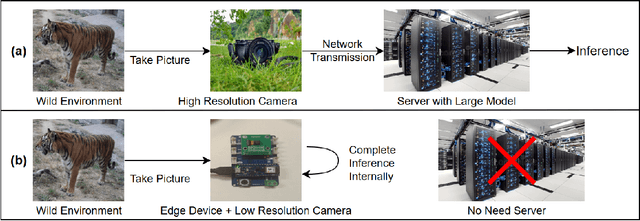

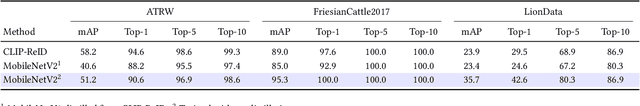

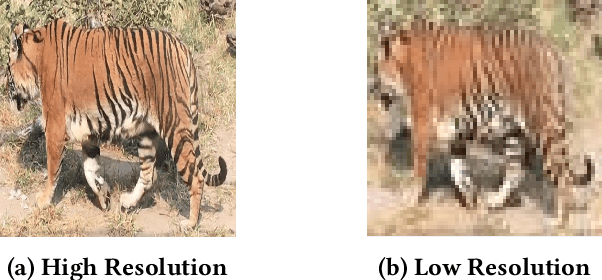

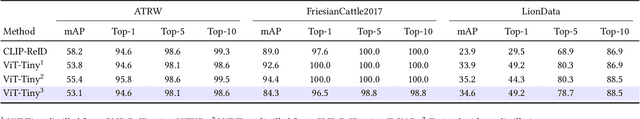

Abstract:Camera-based animal re-identification (Animal Re-ID) can support wildlife monitoring and precision livestock management in large outdoor environments with limited wireless connectivity. In these settings, inference must run directly on collar tags or low-power edge nodes built around microcontrollers (MCUs), yet most Animal Re-ID models are designed for workstations or servers and are too large for devices with small memory and low-resolution inputs. We propose an on-device framework. First, we characterise the gap between state-of-the-art Animal Re-ID models and MCU-class hardware, showing that straightforward knowledge distillation from large teachers offers limited benefit once memory and input resolution are constrained. Second, guided by this analysis, we design a high-accuracy Animal Re-ID architecture by systematically scaling a CNN-based MobileNetV2 backbone for low-resolution inputs. Third, we evaluate the framework with a real-world dataset and introduce a data-efficient fine-tuning strategy to enable fast adaptation with just three images per animal identity at a new site. Across six public Animal Re-ID datasets, our compact model achieves competitive retrieval accuracy while reducing model size by over two orders of magnitude. On a self-collected cattle dataset, the deployed model performs fully on-device inference with only a small accuracy drop and unchanged Top-1 accuracy relative to its cluster version. We demonstrate that practical, adaptable Animal Re-ID is achievable on MCU-class devices, paving the way for scalable deployment in real field environments.
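As a rough illustration of the Top-1 retrieval metric mentioned in the abstract, the sketch below matches each query embedding to its nearest gallery embedding by cosine similarity and counts how often the identities agree. The embeddings, identity labels, and function names are made up for illustration; the abstract does not describe the paper's actual evaluation code or similarity measure.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top1_accuracy(queries, gallery):
    """queries and gallery are lists of (identity, embedding) pairs.

    A query counts as a hit when its most similar gallery entry
    carries the same identity.
    """
    hits = 0
    for query_id, query_emb in queries:
        best_id, _ = max(gallery, key=lambda item: cosine(query_emb, item[1]))
        hits += best_id == query_id
    return hits / len(queries)


# Toy 2-D embeddings (hypothetical); real models emit far longer vectors.
gallery = [("cow_a", [1.0, 0.0]), ("cow_b", [0.0, 1.0])]
queries = [("cow_a", [0.9, 0.1]), ("cow_b", [0.2, 0.8]), ("cow_a", [0.3, 0.7])]

if __name__ == "__main__":
    print(f"Top-1 accuracy: {top1_accuracy(queries, gallery):.2f}")
```

In this toy example the third query is closer to `cow_b` than to its true identity, so two of the three queries are retrieved correctly.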

Firefly: Supporting Drone Localization With Visible Light Communication

Dec 13, 2021

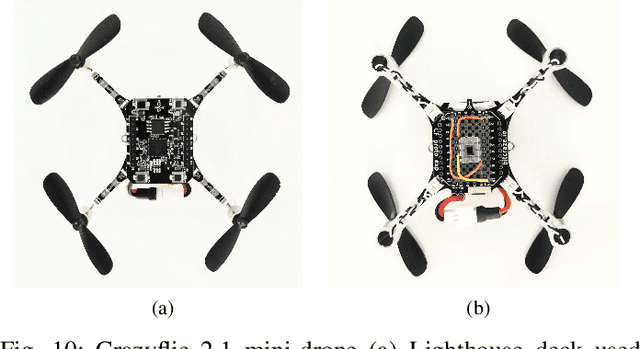

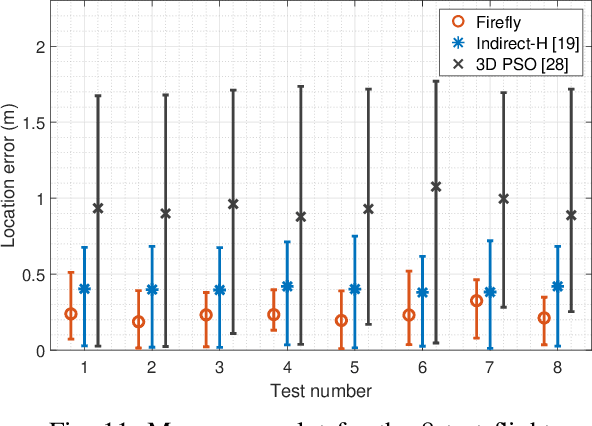

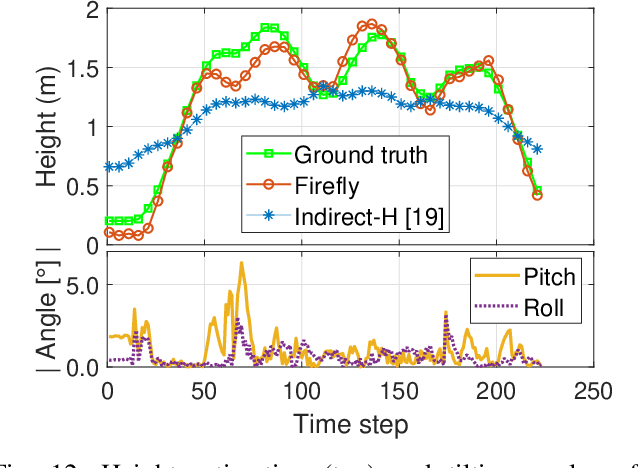

Abstract:Drones are not fully trusted yet. Their reliance on radios and cameras for navigation raises safety and privacy concerns. These systems can fail, causing accidents, or be misused for unauthorized recordings. Considering recent regulations allowing commercial drones to operate only at night, we propose a radically new approach where drones obtain navigation information from artificial lighting. In our system, standard light bulbs modulate their intensity to send beacons, and drones decode this information with a simple photodiode. This optical information is combined with the inertial and altitude sensors in the drones to provide localization without the need for radios, GPS or cameras. Our framework is the first to provide 3D drone localization with light, and we evaluate it with a testbed consisting of four light beacons and a mini-drone. We show that our approach locates the drone within a few decimeters of its actual position and, compared to state-of-the-art positioning methods, reduces the localization error by 42%.
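To make the idea of decoding a light beacon with a photodiode more concrete, here is a minimal sketch. It assumes simple on-off keying, a known number of samples per bit, and samples already aligned to bit boundaries. None of these details are stated in the abstract, so the modulation scheme and the function names are illustrative assumptions rather than the Firefly design.

```python
def decode_ook(samples, samples_per_bit):
    """Recover bits from photodiode readings under assumed on-off keying.

    The threshold is the midpoint between the brightest and dimmest
    sample; each bit is decided by majority vote over its window.
    """
    threshold = (max(samples) + min(samples)) / 2.0
    bits = []
    last_start = len(samples) - samples_per_bit
    for start in range(0, last_start + 1, samples_per_bit):
        window = samples[start:start + samples_per_bit]
        ones = sum(1 for s in window if s > threshold)
        bits.append(1 if 2 * ones > samples_per_bit else 0)
    return bits


def bits_to_int(bits):
    """Interpret a list of bits as an unsigned integer, MSB first."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value


# Hypothetical normalized readings, three samples per bit, with one noisy
# sample (0.3) inside the third bit.
samples = [0.9, 0.8, 0.95, 0.2, 0.1, 0.25, 0.85, 0.3, 0.9, 0.15, 0.2, 0.1]

if __name__ == "__main__":
    bits = decode_ook(samples, samples_per_bit=3)
    print(bits, bits_to_int(bits))
```

The majority vote is what lets the noisy sample in the third window pass without flipping the decoded bit. A real receiver would also need clock recovery and ambient-light compensation, which this sketch leaves out.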
