Yanqiu Huang

T-Graph: Enhancing Sparse-view Camera Pose Estimation by Pairwise Translation Graph

May 02, 2025

Abstract:Sparse-view camera pose estimation, which aims to estimate the 6-Degree-of-Freedom (6-DoF) poses from a limited number of images captured from different viewpoints, is a fundamental yet challenging problem in remote sensing applications. Existing methods often overlook the translation information between each pair of viewpoints, leading to suboptimal performance in sparse-view scenarios. To address this limitation, we introduce T-Graph, a lightweight, plug-and-play module to enhance camera pose estimation in sparse-view settings. T-Graph takes paired image features as input and maps them through a Multilayer Perceptron (MLP). It then constructs a fully connected translation graph, where nodes represent cameras and edges encode their translation relationships. It can be seamlessly integrated into existing models as an additional branch in parallel with the original prediction, maintaining efficiency and ease of use. Furthermore, we introduce two pairwise translation representations, relative-t and pair-t, formulated under different local coordinate systems. While relative-t captures intuitive spatial relationships, pair-t offers a rotation-disentangled alternative. The two representations contribute to enhanced adaptability across diverse application scenarios, further improving our module's robustness. Extensive experiments on two state-of-the-art methods (RelPose++ and Forge) using public datasets (CO3D and IMC PhotoTourism) validate both the effectiveness and generalizability of T-Graph. The results demonstrate consistent improvements across various metrics, notably camera center accuracy, which improves by 1% to 6% from 2 to 8 viewpoints.
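To make the idea of a fully connected translation graph concrete, the following is a minimal sketch that builds such a graph from known camera poses. It is not the T-Graph module itself (which predicts edges from paired image features with an MLP), and it does not reproduce the paper's definitions of relative-t or pair-t, which the abstract does not spell out. It assumes the common world-to-camera convention `x_cam = R @ x_world + t` and uses the standard relative translation `t_ij = t_j - R_j R_i^T t_i`; all function names are hypothetical.

```python
from itertools import permutations

def matvec(R, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(R[r][k] * v[k] for k in range(3)) for r in range(3)]

def matmul(A, B):
    """Multiply two 3x3 matrices stored as lists of rows."""
    return [[sum(A[r][k] * B[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]

def transpose(R):
    return [list(col) for col in zip(*R)]

def relative_translation(R_i, t_i, R_j, t_j):
    """Translation of the transform mapping camera-i coordinates to camera-j
    coordinates, assuming world-to-camera poses (an assumption of this sketch)."""
    R_ij = matmul(R_j, transpose(R_i))
    rotated = matvec(R_ij, t_i)
    return [t_j[k] - rotated[k] for k in range(3)]

def translation_graph(poses):
    """Fully connected directed graph: nodes are camera indices,
    edge (i, j) stores the relative translation t_ij."""
    return {
        (i, j): relative_translation(*poses[i], *poses[j])
        for i, j in permutations(range(len(poses)), 2)
    }

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
ROT_Z90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # 90 degrees about z

# Three illustrative cameras given as (R, t) pairs.
poses = [
    (IDENTITY, [0.0, 0.0, 0.0]),
    (IDENTITY, [1.0, 0.0, 0.0]),
    (ROT_Z90, [0.0, 2.0, 0.0]),
]
graph = translation_graph(poses)
```

With N cameras the graph has N(N-1) directed edges, so the edge count stays small in the 2-to-8-view regime discussed above.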

Machine Learning-based Positioning using Multivariate Time Series Classification for Factory Environments

Aug 22, 2023

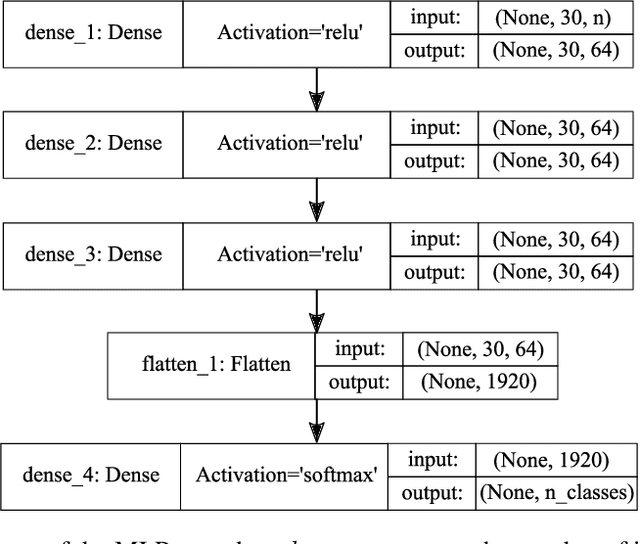

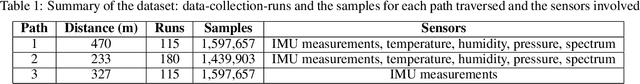

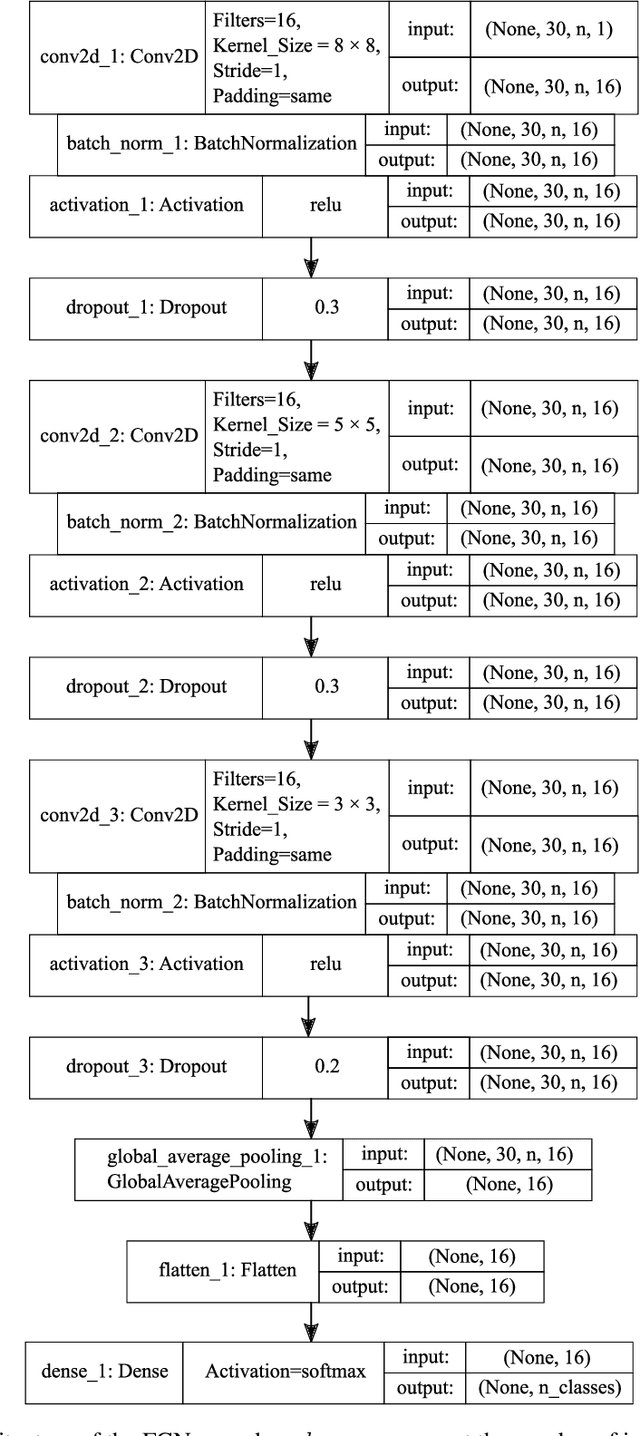

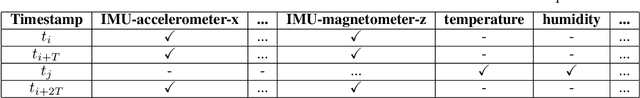

Abstract:Indoor Positioning Systems (IPS) have gained importance in many industrial applications. State-of-the-art solutions rely heavily on external infrastructure and are subject to potential privacy compromises, external information requirements, and assumptions that make them unfavorable for environments demanding privacy and prolonged functionality. In certain environments, deploying supplementary infrastructure for indoor positioning could be infeasible and expensive. Recent developments in machine learning (ML) offer solutions to address these limitations relying only on the data from onboard sensors of IoT devices. However, it is unclear which model fits best considering the resource constraints of IoT devices. This paper presents a machine learning-based indoor positioning system, using motion and ambient sensors, to localize a moving entity in privacy-concerned factory environments. The problem is formulated as a multivariate time series classification (MTSC) problem, and a comparative analysis of different machine learning models is conducted in order to address it. We introduce a novel time series dataset emulating the assembly lines of a factory. This dataset is utilized to assess and compare the selected models in terms of accuracy, memory footprint and inference speed. The results illustrate that all evaluated models can achieve accuracies above 80%. CNN-1D shows the most balanced performance, followed by MLP. DT was found to have the lowest memory footprint and inference latency, indicating its potential for deployment in real-world scenarios.
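As an illustration of how a positioning task can be cast as multivariate time series classification, the sketch below cuts a multichannel sensor stream into fixed-length windows and assigns each window a location label by majority vote. The abstract does not describe the paper's preprocessing, so the windowing scheme, the function name `sliding_windows`, and the toy data are all assumptions made for this example.

```python
from collections import Counter

def sliding_windows(series, labels, window, stride):
    """Split a multivariate series into labeled windows.

    series: list of per-timestep feature lists (one value per sensor channel)
    labels: list of per-timestep class ids (e.g. a zone on the assembly line)
    Returns a list of (window_samples, window_label) pairs.
    """
    samples = []
    for start in range(0, len(series) - window + 1, stride):
        end = start + window
        # Majority vote over the timestep labels inside the window.
        majority = Counter(labels[start:end]).most_common(1)[0][0]
        samples.append((series[start:end], majority))
    return samples

# Toy stream: 6 timesteps, 2 hypothetical channels (e.g. acceleration, light).
series = [[0.1, 5.0], [0.2, 5.1], [0.1, 5.0], [0.9, 2.0], [1.0, 2.1], [0.8, 2.0]]
labels = [0, 0, 0, 1, 1, 1]

samples = sliding_windows(series, labels, window=4, stride=2)
```

Each resulting window is one classification sample that a model such as a 1D CNN, an MLP, or a decision tree could consume after flattening or feature extraction.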

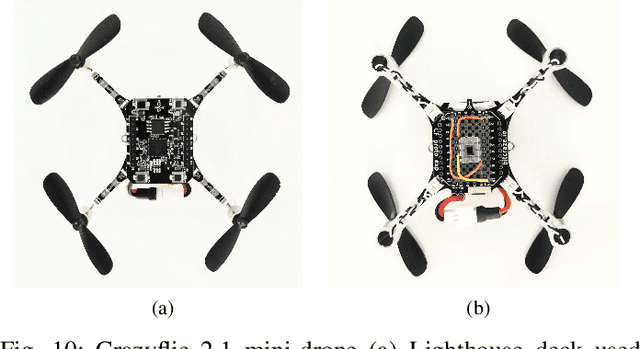

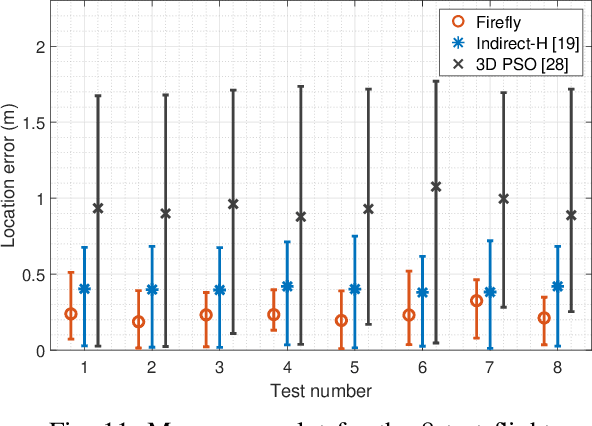

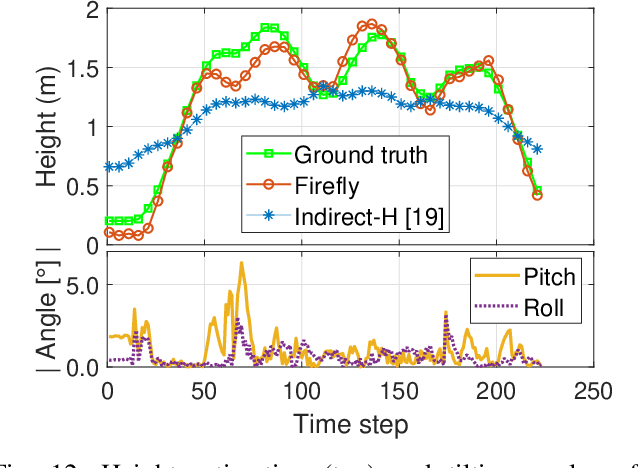

Firefly: Supporting Drone Localization With Visible Light Communication

Dec 13, 2021

Abstract:Drones are not fully trusted yet. Their reliance on radios and cameras for navigation raises safety and privacy concerns. These systems can fail, causing accidents, or be misused for unauthorized recordings. Considering recent regulations allowing commercial drones to operate only at night, we propose a radically new approach where drones obtain navigation information from artificial lighting. In our system, standard light bulbs modulate their intensity to send beacons and drones decode this information with a simple photodiode. This optical information is combined with the inertial and altitude sensors in the drones to provide localization without the need for radios, GPS or cameras. Our framework is the first to provide 3D drone localization with light, and we evaluate it with a testbed consisting of four light beacons and a mini-drone. We show that our approach locates the drone within a few decimeters of the actual position and, compared to state-of-the-art positioning methods, reduces the localization error by 42%.
