Taha H. Rashidi

Pólygamma Data Augmentation to address Non-conjugacy in the Bayesian Estimation of Mixed Multinomial Logit Models

Apr 13, 2019

Abstract: The standard Gibbs sampler for Mixed Multinomial Logit (MMNL) models samples from the conditional densities of the utility parameters using the Metropolis-Hastings (MH) algorithm, because no conjugate prior is available for the logit kernel. To address this non-conjugacy, we propose applying the Pólygamma data augmentation (PG-DA) technique to MMNL estimation. The posterior estimates of the augmented and the default Gibbs samplers are similar in the two-alternative scenario (binary choice), but we encounter empirical identification issues with more alternatives ($J \geq 3$).

Bayesian Estimation of Mixed Multinomial Logit Models: Advances and Simulation-Based Evaluations

Apr 12, 2019

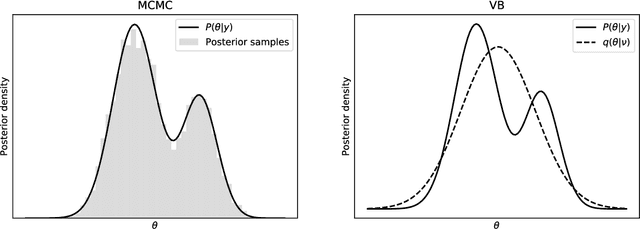

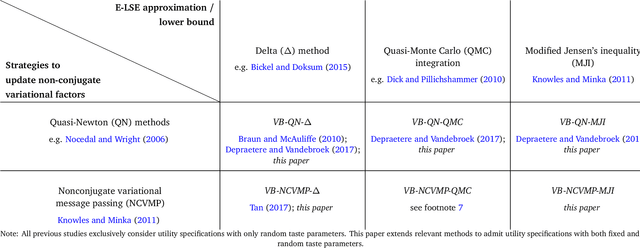

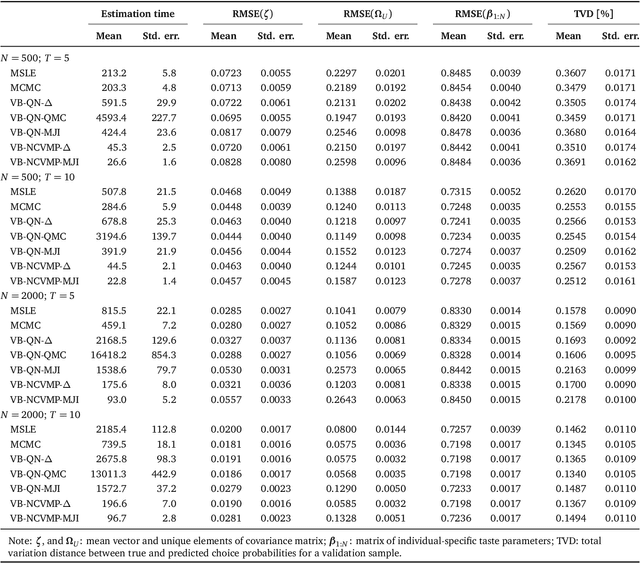

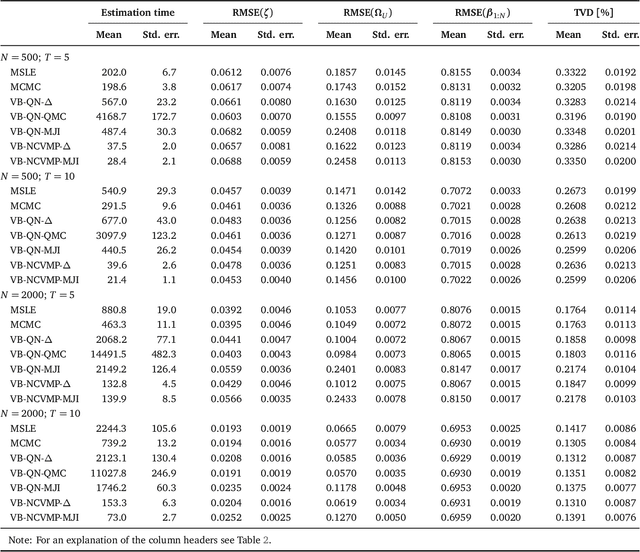

Abstract: Variational Bayes (VB) methods have emerged as a fast and computationally efficient alternative to Markov chain Monte Carlo (MCMC) methods for Bayesian estimation of mixed multinomial logit (MMNL) models. It has been established that VB is substantially faster than MCMC with practically no compromise in predictive accuracy. In this paper, we address two critical gaps concerning the usage and understanding of VB for MMNL. First, extant VB methods are limited to utility specifications involving only individual-specific taste parameters. Second, the finite-sample properties of VB estimators and the relative performance of VB, MCMC and maximum simulated likelihood estimation (MSLE) are not known. To address the former, this study extends several VB methods for MMNL to admit utility specifications including both fixed and random utility parameters. To address the latter, we conduct an extensive simulation-based evaluation to benchmark the extended VB methods against MCMC and MSLE in terms of estimation times, parameter recovery and predictive accuracy. The results suggest that all VB variants perform as well as MCMC and MSLE at prediction and recovery of all model parameters with the exception of the covariance matrix of the multivariate normal mixing distribution. In particular, VB with nonconjugate variational message passing and the delta-method (VB-NCVMP-Delta) is relatively accurate and up to 15 times faster than MCMC and MSLE. On the whole, VB-NCVMP-Delta is most suitable for applications in which fast predictions are paramount, while MCMC should be preferred in applications in which accurate inferences are most important.

A Dirichlet Process Mixture Model of Discrete Choice

Jan 19, 2018

Abstract: We present a mixed multinomial logit (MNL) model, which leverages the truncated stick-breaking process representation of the Dirichlet process as a flexible nonparametric mixing distribution. The proposed model is a Dirichlet process mixture model and accommodates discrete representations of heterogeneity, like a latent class MNL model. Yet, unlike a latent class MNL model, the proposed discrete choice model does not require the analyst to fix the number of mixture components prior to estimation, as the complexity of the discrete mixing distribution is inferred from the evidence. For posterior inference in the proposed Dirichlet process mixture model of discrete choice, we derive an expectation maximisation algorithm. In a simulation study, we demonstrate that the proposed model framework can flexibly capture differently-shaped taste parameter distributions. Furthermore, we empirically validate the model framework in a case study on motorists' route choice preferences and find that the proposed Dirichlet process mixture model of discrete choice outperforms a latent class MNL model and mixed MNL models with common parametric mixing distributions in terms of both in-sample fit and out-of-sample predictive ability. Compared to extant modelling approaches, the proposed discrete choice model substantially abbreviates specification searches, as it relies on less restrictive parametric assumptions and does not require the analyst to specify the complexity of the discrete mixing distribution prior to estimation.
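
As a rough illustration of the truncated stick-breaking representation mentioned in the abstract, the following minimal sketch draws mixture weights for a fixed truncation level. It is not the paper's estimation procedure: the function name `truncated_stick_breaking`, the concentration parameter `alpha`, and the truncation level `K` are assumptions chosen for this example.

```python
import random


def truncated_stick_breaking(alpha, K, rng=None):
    """Draw K mixture weights from a truncated stick-breaking process.

    alpha and K are illustrative inputs (concentration parameter and
    truncation level); they are not taken from the paper.
    """
    rng = rng or random.Random()
    weights = []
    remaining = 1.0  # length of the stick not yet assigned
    for k in range(K):
        # The last component takes whatever is left so the weights sum to one.
        v = 1.0 if k == K - 1 else rng.betavariate(1.0, alpha)
        weights.append(v * remaining)
        remaining *= 1.0 - v
    return weights


weights = truncated_stick_breaking(alpha=1.0, K=10, rng=random.Random(0))
```

Smaller values of `alpha` tend to concentrate the weight on the first few components, which is how such a prior can leave the effective number of mixture components to be inferred rather than fixed in advance.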
