Syed Ali

Attention-based Multi-scale Gated Recurrent Encoder with Novel Correlation Loss for COVID-19 Progression Prediction

Jul 18, 2021

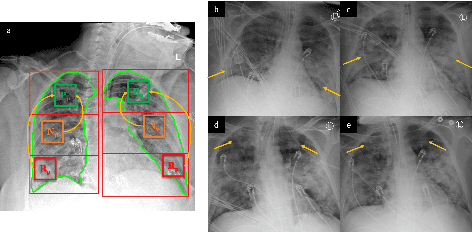

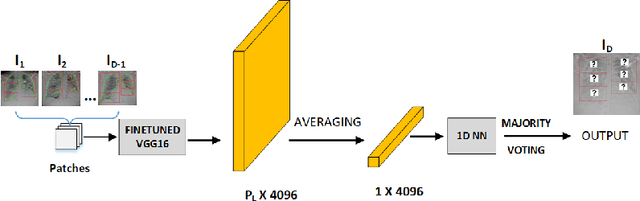

Abstract: COVID-19 image analysis has mostly focused on diagnostic tasks using single-timepoint scans acquired upon disease presentation or admission. We present a deep learning-based approach to predict lung infiltrate progression from serial chest radiographs (CXRs) of COVID-19 patients. Our method first uses convolutional neural networks (CNNs) to extract features from patches within the concerned lung zone, and also from neighboring and remote boundary regions. The framework further incorporates a multi-scale Gated Recurrent Unit (GRU) with a correlation module for effective predictions. The GRU accepts CNN feature vectors from the three different areas as input and generates a fused representation. The correlation module attempts to minimize the correlation loss between the hidden representations of the concerned and neighboring area feature vectors, while maximizing that loss between the hidden representations of the concerned and remote regions. Further, we employ an attention module over the output hidden states of each encoder timepoint to generate a context vector. This vector is used as input to a decoder module to predict patch severity grades at a future timepoint. Finally, we ensemble the patch classification scores to calculate patient-wise grades. Specifically, our framework predicts zone-wise disease severity for a patient on a given day by learning representations from the previous temporal CXRs. Our novel multi-institutional dataset comprises sequential CXR scans from N=93 patients. Our approach outperforms transfer learning and radiomic feature-based baseline approaches on this dataset.
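
The abstract's attention and ensembling steps can be illustrated with a minimal sketch. It is not the authors' implementation: the dot-product scoring against a `query` vector, the mean-based ensembling rule, and the function names `attention_context` and `ensemble_grade` are all assumptions made for illustration, since the abstract does not specify these details.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(hidden_states, query):
    """Weight encoder hidden states (one per timepoint) and sum them.

    Assumption: scores are plain dot products with a query vector;
    the paper's actual scoring function is not given in the abstract.
    """
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)
    ]
    return weights, context


def ensemble_grade(patch_scores):
    """Combine per-patch class scores into one grade.

    Assumption: scores are averaged across patches and the highest
    mean score wins; the abstract only says scores are ensembled.
    """
    n = len(patch_scores)
    num_classes = len(patch_scores[0])
    mean = [sum(p[c] for p in patch_scores) / n for c in range(num_classes)]
    grade = max(range(num_classes), key=lambda c: mean[c])
    return grade, mean


if __name__ == "__main__":
    # Two timepoints with 2-dimensional hidden states (toy values).
    weights, context = attention_context([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
    print(weights, context)
    # Two patches, two severity classes (toy values).
    print(ensemble_grade([[0.2, 0.8], [0.4, 0.6]]))
```

With a zero query every timepoint receives the same weight, so the context vector reduces to the plain average of the hidden states; a learned query would instead emphasize the timepoints most relevant to the prediction.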

Automated detection of oral pre-cancerous tongue lesions using deep learning for early diagnosis of oral cavity cancer

Sep 18, 2019

Abstract: Discovering oral cavity cancer (OCC) at an early stage is an effective way to increase patient survival rate. However, the current initial screening process is done manually and is expensive for the average individual, especially in developing countries. This problem is further compounded by the lack of specialists in such areas. Automating the initial screening process using artificial intelligence (AI) to detect pre-cancerous lesions can prove to be an effective and inexpensive technique that would allow patients to be triaged accordingly to receive appropriate clinical management. In this study, we have applied and evaluated the efficacy of six deep convolutional neural network (DCNN) models using transfer learning for identifying pre-cancerous tongue lesions directly from a small data set of clinically annotated photographic images to diagnose early signs of OCC. A DCNN model based on the VGG19 architecture was able to differentiate between benign and pre-cancerous tongue lesions with a mean classification accuracy of 0.98, sensitivity of 0.89, and specificity of 0.97. Additionally, the ResNet50 DCNN model was able to distinguish between five types of tongue lesions, i.e., hairy tongue, fissured tongue, geographic tongue, strawberry tongue, and oral hairy leukoplakia, with a mean classification accuracy of 0.97. Preliminary results using an (AI+Physician) ensemble model demonstrate that an automated initial screening process of tongue lesions using DCNNs can achieve near-human-level classification performance for diagnosing early signs of OCC in patients.
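
For readers less familiar with the reported metrics, the sketch below shows how accuracy, sensitivity, and specificity follow from binary confusion-matrix counts, treating "pre-cancerous" as the positive class. The function name `screening_metrics` and the counts in the usage example are hypothetical; they are not data from the study.

```python
def screening_metrics(tp, fn, tn, fp):
    """Return (accuracy, sensitivity, specificity) from confusion counts.

    tp: pre-cancerous lesions correctly flagged
    fn: pre-cancerous lesions missed
    tn: benign lesions correctly cleared
    fp: benign lesions incorrectly flagged
    """
    total = tp + fn + tn + fp
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)  # true positive rate
    specificity = tn / (tn + fp)  # true negative rate
    return accuracy, sensitivity, specificity


if __name__ == "__main__":
    # Hypothetical counts chosen only to illustrate the arithmetic.
    acc, sens, spec = screening_metrics(tp=8, fn=2, tn=9, fp=1)
    print(f"accuracy={acc:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
```

Because accuracy pools both classes, it can sit well above sensitivity when benign cases dominate the data set, which is one reason the abstract reports all three figures.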
