Sunil Vadera

Methods for Pruning Deep Neural Networks

Oct 31, 2020

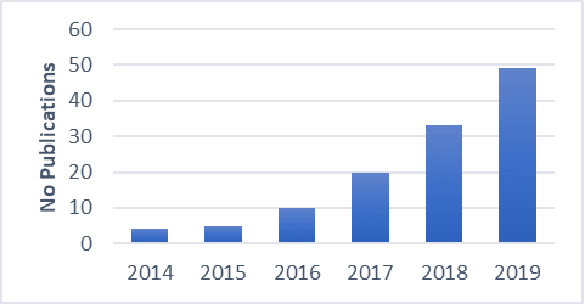

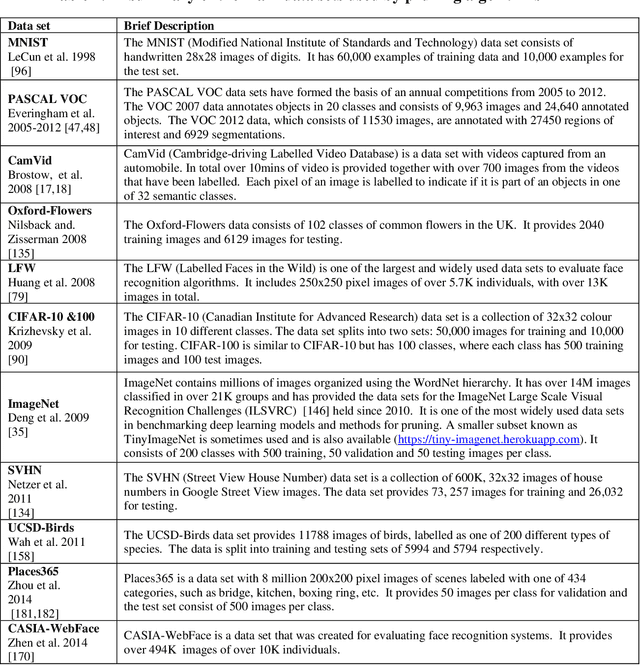

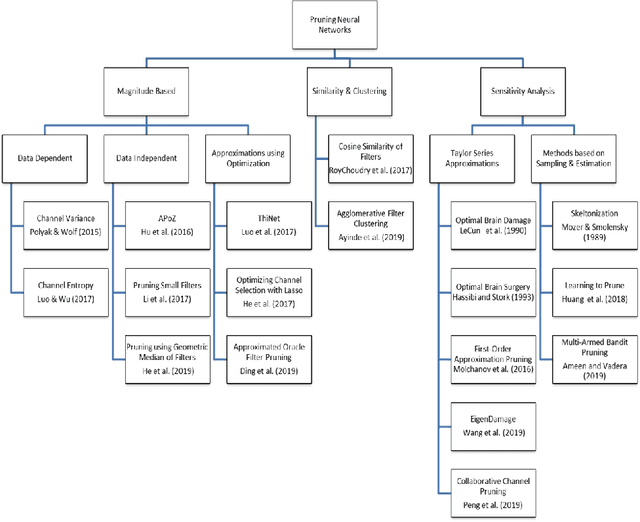

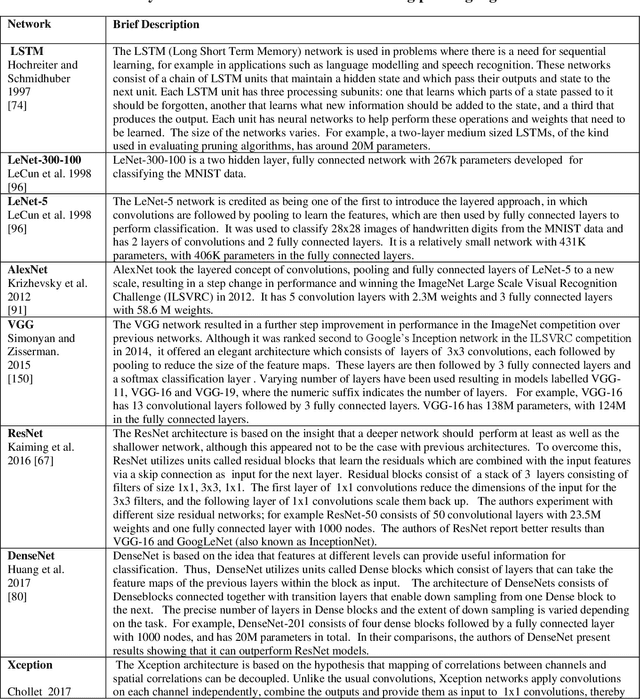

Abstract: This paper presents a survey of methods for pruning deep neural networks, from algorithms first proposed for fully connected networks in the 1990s to recent methods developed for reducing the size of convolutional neural networks. The paper begins by bringing together many different algorithms and categorising them by the underlying approach used. It then focuses on three categories: methods that use magnitude-based pruning, methods that utilise clustering to identify redundancy, and methods that utilise sensitivity analysis. Some of the key influential studies within these categories are presented to illuminate the underlying approaches and the results achieved. Most studies on pruning present results from empirical evaluations, which are scattered across the literature as new architectures, algorithms and data sets have evolved over time. This paper brings together the reported results from some key papers in one place, providing a resource that can be used to quickly compare reported results and to trace studies where specific methods, data sets and architectures have been used.
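As a rough illustration of the first category named in the abstract, magnitude-based pruning, the sketch below zeroes the weights with the smallest absolute values in a single layer. It is a minimal example and not an algorithm taken from the survey: the function name `magnitude_prune`, the `sparsity` parameter, and the nested-list weight layout are all assumptions made for illustration.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude entries set to 0.

    `weights` is a list of rows (a hypothetical layer weight matrix) and
    `sparsity` is the fraction of weights to remove, between 0 and 1.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")

    # Flatten so that weights are ranked across the whole layer.
    flat = [w for row in weights for w in row]
    n_prune = int(len(flat) * sparsity)

    # Positions of the n_prune weights with the smallest absolute value
    # (ties are broken by position, since sorted() is stable).
    order = sorted(range(len(flat)), key=lambda i: abs(flat[i]))
    pruned = set(order[:n_prune])

    # Rebuild the original row structure, zeroing the pruned positions.
    out, k = [], 0
    for row in weights:
        new_row = []
        for w in row:
            new_row.append(0.0 if k in pruned else w)
            k += 1
        out.append(new_row)
    return out


layer = [[0.5, -0.1, 0.8], [0.05, -0.9, 0.2]]
print(magnitude_prune(layer, 0.5))
```

In practice, pruning of this kind is usually followed by fine-tuning the remaining weights, a step this sketch omits.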

A Probabilistic Model For Sensor Validation

Feb 13, 2013

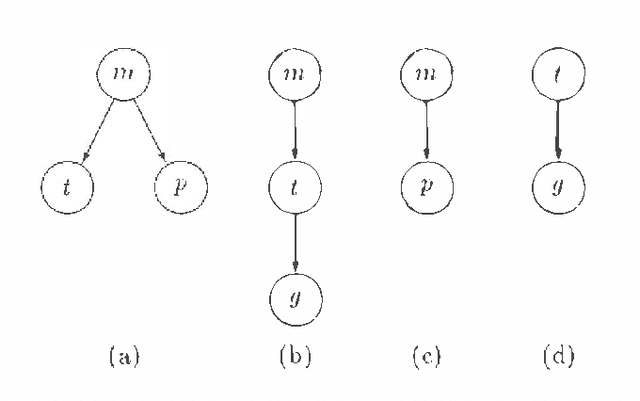

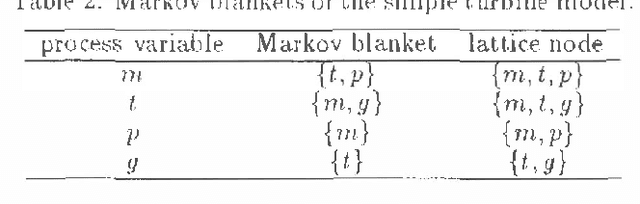

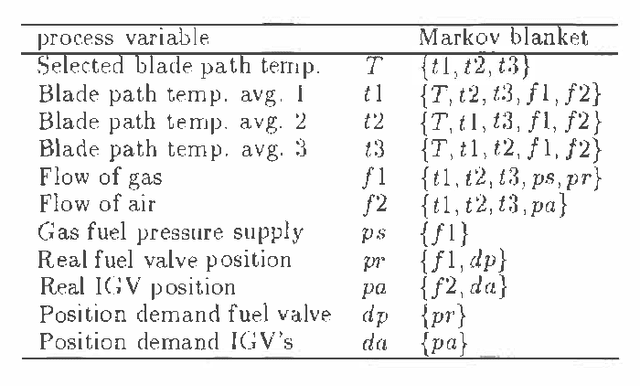

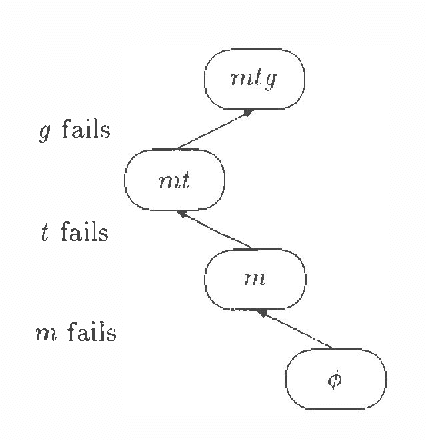

Abstract: The validation of data from sensors has become an important issue in the operation and control of modern industrial plants. One approach is to use knowledge-based techniques to detect inconsistencies in measured data. This article presents a probabilistic model for the detection of such inconsistencies. Based on probability propagation, this method is able to detect the existence of a possible fault among the set of sensors. However, if an error exists, many sensors present an apparent fault due to propagation from the sensor(s) with a real fault. The fault detection mechanism can therefore only tell whether a sensor has a potential fault; it cannot tell whether the fault is real or apparent. The central problem is thus to develop a theory, and then an algorithm, for distinguishing real from apparent faults, given that one or more sensors can fail at the same time. This article presents an approach based on two levels: (i) probabilistic reasoning, to detect a potential fault, and (ii) constraint management, to distinguish the real fault from the apparent ones. The proposed approach is exemplified by applying it to a power plant model.

Any Time Probabilistic Reasoning for Sensor Validation

Jan 30, 2013

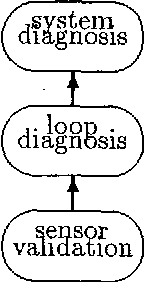

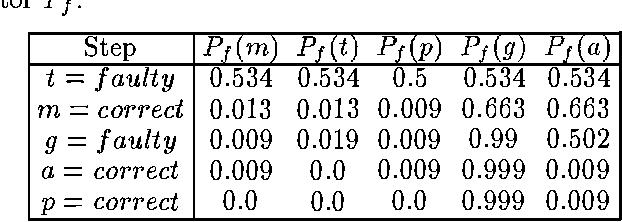

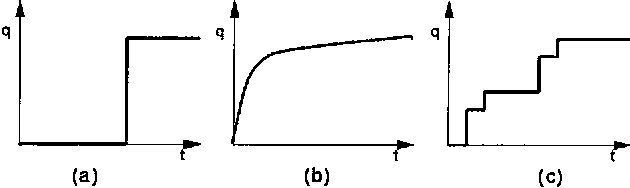

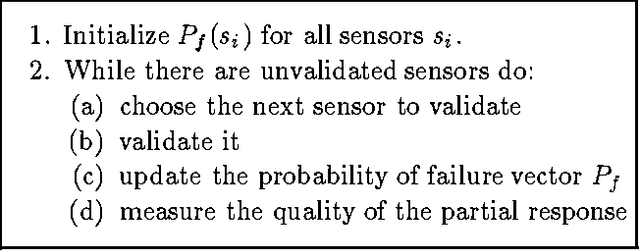

Abstract: For many real-time applications, it is important to validate the information received from the sensors before entering higher levels of reasoning. This paper presents an any time probabilistic algorithm for validating the information provided by sensors. The system consists of two Bayesian network models. The first is a model of the dependencies between sensors and is used to validate each sensor. It provides a list of potentially faulty sensors. To isolate the real faults, a second Bayesian network is used, which relates the potential faults to the real faults. This second model is also used to make the validation algorithm any time, by first validating the sensors that provide more information. To select the next sensor to validate, and to measure the quality of the results at each stage, an entropy function is used. This function captures in a single quantity both the certainty and specificity measures of any time algorithms. Together, the two models constitute a mechanism for validating sensors in an any time fashion, providing at each step the probability of correct/faulty for each sensor and the total quality of the results. The algorithm has been tested in the validation of temperature sensors of a power plant.
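The abstract does not give the exact form of the entropy function, so the following is only a sketch of how an entropy measure over fault probabilities could work, using the standard Shannon entropy of a binary variable. The function names, the sensor labels, and the rule of picking the most uncertain sensor next are assumptions for illustration, not the paper's selection criterion.

```python
import math


def binary_entropy(p):
    """Shannon entropy (in bits) of a sensor that is faulty with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a certain outcome carries no uncertainty
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def total_uncertainty(fault_probs):
    """Sum of per-sensor entropies; lower values mean more certain results."""
    return sum(binary_entropy(p) for p in fault_probs.values())


def pick_most_uncertain(fault_probs):
    """Illustrative heuristic: choose the sensor whose state is least certain."""
    return max(fault_probs, key=lambda name: binary_entropy(fault_probs[name]))


# Hypothetical fault probabilities for three temperature sensors.
probs = {"T1": 0.9, "T2": 0.5, "T3": 0.05}
print(pick_most_uncertain(probs))
print(round(total_uncertainty(probs), 3))
```

A single scalar such as `total_uncertainty` is convenient for an any time algorithm because it can be reported after every step as a measure of how far the validation has progressed.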
