Methods for Pruning Deep Neural Networks

Paper and Code

Oct 31, 2020

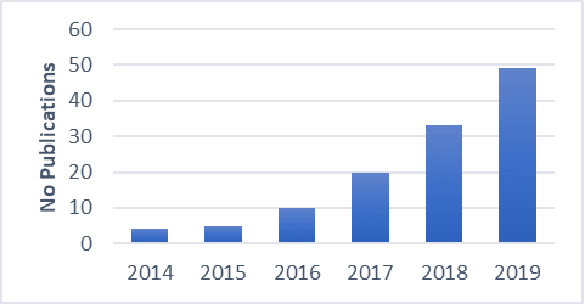

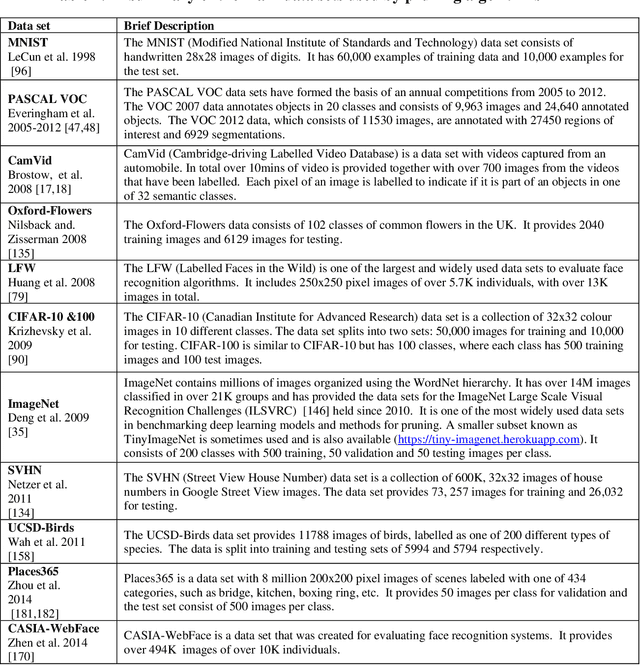

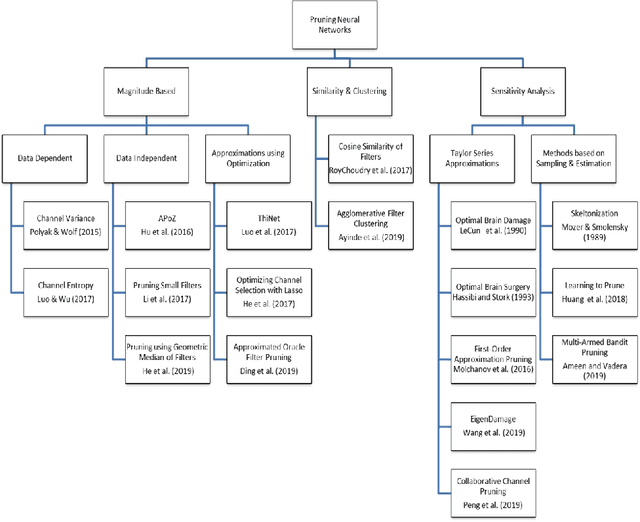

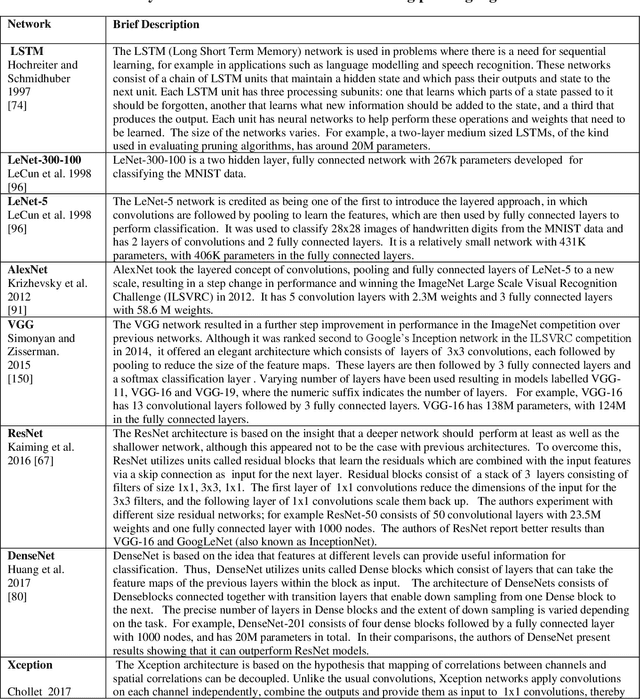

This paper presents a survey of methods for pruning deep neural networks, from algorithms first proposed for fully connected networks in the 1990s to the recent methods developed for reducing the size of convolutional neural networks. The paper begins by bringing together many different algorithms by categorising them based on the underlying approach used. It then focuses on three categories: methods that use magnitude-based pruning, methods that utilise clustering to identify redundancy, and methods that utilise sensitivity analysis. Some of the key influencing studies within these categories are presented to illuminate the underlying approaches and results achieved. Most studies on pruning present results from empirical evaluations, which are distributed in the literature as new architectures, algorithms and data sets have evolved with time. This paper brings together the reported results from some key papers in one place by providing a resource that can be used to quickly compare reported results, and trace studies where specific methods, data sets and architectures have been used.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge