Salem Ameen

Autonomous Mobile Robot Navigation: Tracking problem

Jul 08, 2024

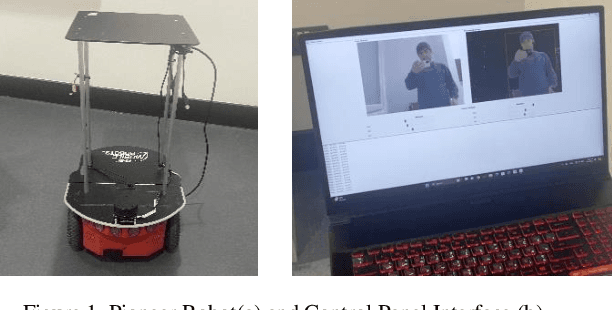

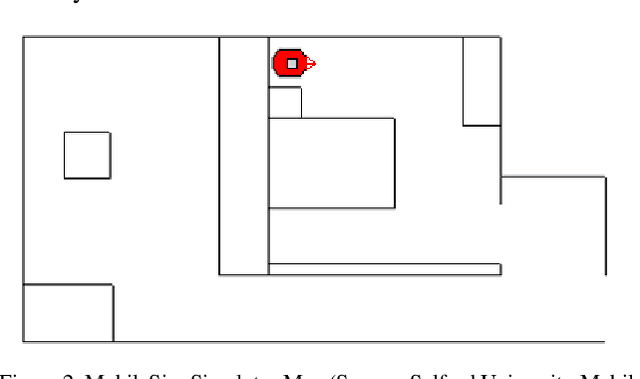

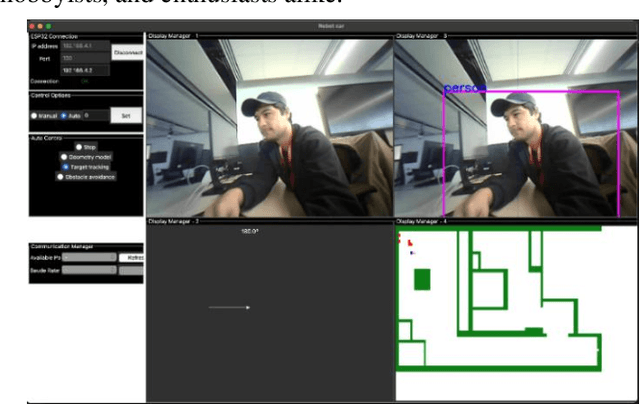

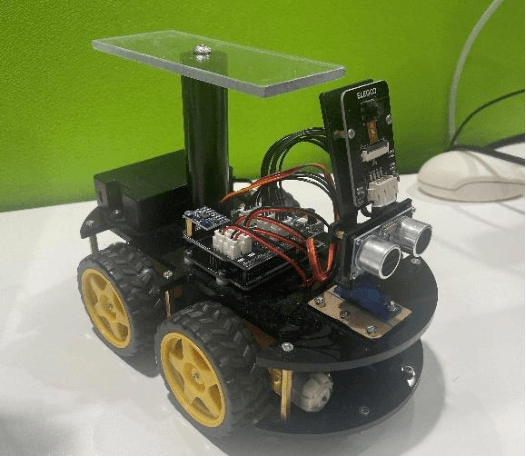

Abstract: This paper presents a study on autonomous robot navigation, focusing on three key behaviors: Odometry, Target Tracking, and Obstacle Avoidance. Each behavior is described in detail, along with experimental setups for simulated and real-world environments. Odometry utilizes wheel encoder data for precise navigation along predefined paths, validated through experiments with a Pioneer robot. Target Tracking employs vision-based techniques for pursuing designated targets while avoiding obstacles, demonstrated on the same platform. Obstacle Avoidance utilizes ultrasonic sensors to navigate cluttered environments safely, validated in both simulated and real-world scenarios. Additionally, the paper extends the project to include an Elegoo robot car, leveraging its features for enhanced experimentation. Through advanced algorithms and experimental validations, this study provides insights into developing robust navigation systems for autonomous robots.
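As a rough illustration of odometry from wheel encoder data, the sketch below applies the standard dead-reckoning update for a differential-drive robot. It is not taken from the paper: the function name `update_pose` and the default values for `ticks_per_rev`, `wheel_radius`, and `wheel_base` are hypothetical placeholders, not the specifications of the Pioneer or Elegoo platforms.

```python
import math


def update_pose(x, y, theta, ticks_left, ticks_right,
                ticks_per_rev=500, wheel_radius=0.0975, wheel_base=0.33):
    """Dead-reckoning pose update for a differential-drive robot.

    ticks_left / ticks_right are encoder counts since the previous update.
    All default geometry values are hypothetical and must be replaced with
    the real robot's parameters.
    """
    # Convert encoder ticks to the distance rolled by each wheel (metres).
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = ticks_left * metres_per_tick
    d_right = ticks_right * metres_per_tick

    # Distance moved by the robot centre and the change in heading.
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base

    # Integrate along the average heading over the step (midpoint rule).
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)

    # Keep the heading in [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi
    return x, y, theta
```

Calling `update_pose` once per control cycle with the latest tick deltas accumulates the pose estimate. Because encoder error also accumulates, estimates of this kind drift over long paths unless corrected by another sensor.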

Deriving Hematological Disease Classes Using Fuzzy Logic and Expert Knowledge: A Comprehensive Machine Learning Approach with CBC Parameters

Jun 18, 2024

Abstract: In the intricate field of medical diagnostics, capturing the subtle manifestations of diseases remains a challenge. Traditional methods, often binary in nature, may not encapsulate the nuanced variances that exist in real-world clinical scenarios. This paper introduces a novel approach by leveraging Fuzzy Logic Rules to derive disease classes based on expert domain knowledge from a medical practitioner. By recognizing that diseases do not always fit into neat categories, and that expert knowledge can guide the fuzzification of these boundaries, our methodology offers a more sophisticated and nuanced diagnostic tool. Using a dataset procured from a prominent hospital, containing detailed patient blood count records, we harness Fuzzy Logic Rules, a computational technique celebrated for its ability to handle ambiguity. This approach, moving through stages of fuzzification, rule application, inference, and ultimately defuzzification, produces refined diagnostic predictions. When combined with the Random Forest classifier, the system adeptly predicts hematological conditions using Complete Blood Count (CBC) parameters. Preliminary results showcase high accuracy levels, underscoring the advantages of integrating fuzzy logic into the diagnostic process. When juxtaposed with traditional diagnostic techniques, it becomes evident that Fuzzy Logic, especially when guided by medical expertise, offers significant advancements in the realm of hematological diagnostics. This paper not only paves the path for enhanced patient care but also beckons a deeper dive into the potentialities of fuzzy logic in various medical diagnostic applications.
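The stages named in the abstract (fuzzification, rule application, inference, defuzzification) can be sketched on a single CBC parameter as follows. This is a toy example and not the authors' rule base: the function names, the triangular membership breakpoints, and the rule output values are all hypothetical and have no clinical validity. Defuzzification here uses a simple weighted average of crisp rule outputs, which is one common choice among several.

```python
def triangular(x, a, b, c):
    """Triangular membership function: 0 at a and c, rising to 1 at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzify_hemoglobin(hgb):
    """Fuzzification: map a hemoglobin value (g/dL) to label memberships.

    The breakpoints are hypothetical, chosen only for illustration.
    """
    return {
        "low": triangular(hgb, 0.0, 8.0, 12.5),
        "normal": triangular(hgb, 11.0, 14.0, 17.0),
        "high": triangular(hgb, 15.5, 19.0, 25.0),
    }


def anemia_score(hgb):
    """Rule application, inference, and weighted-average defuzzification."""
    memberships = fuzzify_hemoglobin(hgb)

    # Hypothetical rules: each label implies a crisp anemia likelihood.
    rule_outputs = {"low": 1.0, "normal": 0.1, "high": 0.0}

    total = sum(memberships.values())
    if total == 0.0:
        return None  # Input lies outside every membership function.

    # Each rule fires with the strength of its label's membership.
    weighted = sum(memberships[k] * rule_outputs[k] for k in memberships)
    return weighted / total
```

A value such as 12.0 g/dL belongs partly to both "low" and "normal", so the score falls between the two rule outputs instead of jumping at a hard threshold. A score of this kind could then serve as a derived class or feature for a downstream classifier such as Random Forest.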

Machine Learning and Optimization Techniques for Solving Inverse Kinematics in a 7-DOF Robotic Arm

Jun 18, 2024

Abstract: As the pace of AI technology continues to accelerate, more tools have become available to researchers to solve longstanding problems. Hybrid approaches available today continue to push the computational limits of efficiency and precision. One such problem is the inverse kinematics of redundant systems. This paper examines the complexities of a 7-degree-of-freedom manipulator and explores 13 optimization techniques to solve it. Additionally, a novel approach is proposed to contribute to the field of algorithmic research. The proposed approach was found to be over 200 times faster than the well-known traditional Particle Swarm Optimization technique. This new method may serve as a new field of search that combines the explorative capabilities of Machine Learning with the exploitative capabilities of numerical methods.

Optimizing Deep Learning Models For Raspberry Pi

Apr 25, 2023

Abstract: Deep learning models have become increasingly popular for a wide range of applications, including computer vision, natural language processing, and speech recognition. However, these models typically require large amounts of computational resources, making them challenging to run on low-power devices such as the Raspberry Pi. One approach to addressing this challenge is to use pruning techniques to reduce the size of the deep learning models. Pruning involves removing unimportant weights and connections from the model, resulting in a smaller and more efficient model. Pruning can be done during training or after the model has been trained. Another approach is to optimize the deep learning models specifically for the Raspberry Pi architecture. This can include optimizing the model's architecture and parameters to take advantage of the Raspberry Pi's hardware capabilities, such as its CPU and GPU. Additionally, the model can be optimized for energy efficiency by minimizing the amount of computation required. Pruning and optimizing deep learning models for the Raspberry Pi can help overcome the computational and energy constraints of low-power devices, making it possible to run deep learning models on a wider range of devices. In the following sections, we will explore these approaches in more detail and discuss their effectiveness for optimizing deep learning models for the Raspberry Pi.
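One simple way to decide which weights are "unimportant" is magnitude-based pruning, where the weights with the smallest absolute values are set to zero after training. The sketch below shows the idea on a flat list of weights. It is an illustrative assumption, not the procedure used in the paper, and the name `magnitude_prune` and its `sparsity` parameter are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|.

    weights:  flat list of floats (e.g. one layer's weights, flattened).
    sparsity: fraction of weights to remove, between 0.0 and 1.0.
    Returns a new list; the input is left unchanged.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")

    n_prune = int(len(weights) * sparsity)

    # Rank weight indices from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])

    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


layer = [0.5, -0.01, 0.2, -0.9, 0.03, 0.0004]
sparse_layer = magnitude_prune(layer, sparsity=0.5)
```

Zeroed weights only save memory and computation on a device like the Raspberry Pi when the model is stored or executed in a form that exploits the sparsity. In practice, pruning is usually followed by fine-tuning to recover lost accuracy.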

Methods for Pruning Deep Neural Networks

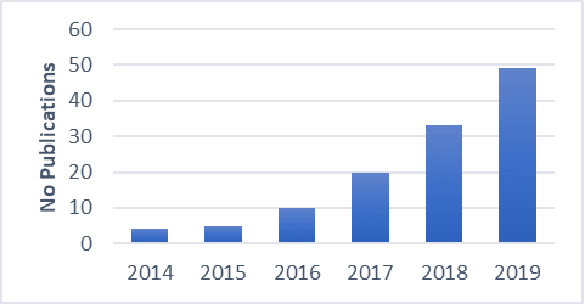

Oct 31, 2020

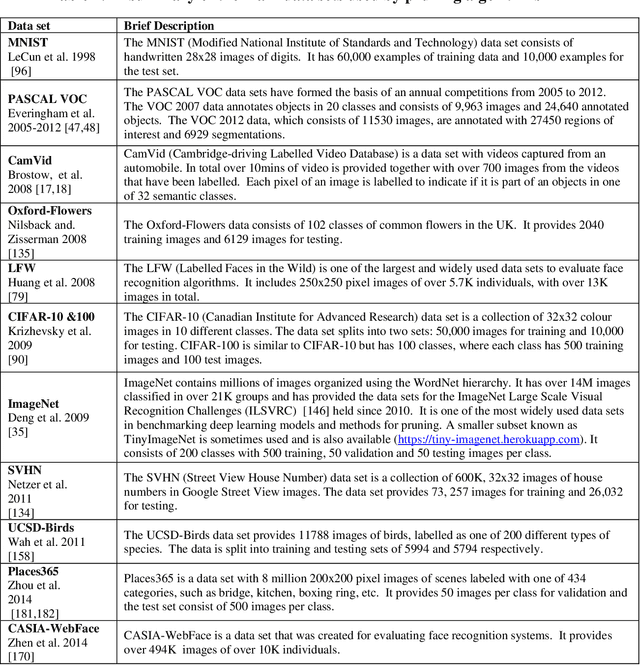

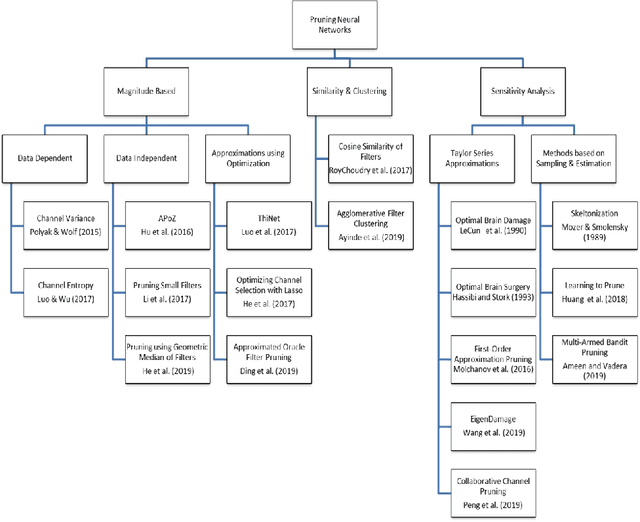

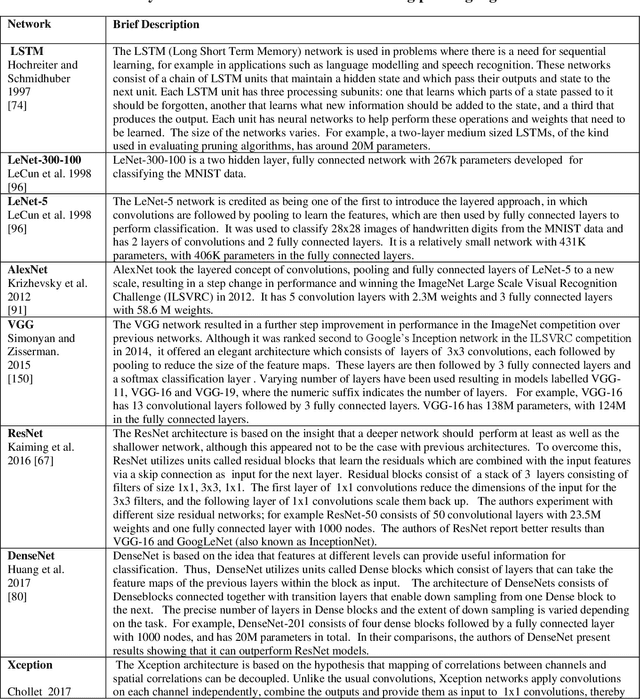

Abstract: This paper presents a survey of methods for pruning deep neural networks, from algorithms first proposed for fully connected networks in the 1990s to the recent methods developed for reducing the size of convolutional neural networks. The paper begins by bringing together many different algorithms by categorising them based on the underlying approach used. It then focuses on three categories: methods that use magnitude-based pruning, methods that utilise clustering to identify redundancy, and methods that utilise sensitivity analysis. Some of the key influencing studies within these categories are presented to illuminate the underlying approaches and results achieved. Most studies on pruning present results from empirical evaluations, which are distributed in the literature as new architectures, algorithms and data sets have evolved with time. This paper brings together the reported results from some key papers in one place by providing a resource that can be used to quickly compare reported results, and trace studies where specific methods, data sets and architectures have been used.