Styliani-Christina Fragkouli

Institute of Applied Biosciences, Centre for Research and Technology Hellas, Thessaloniki, Greece and Department of Biology, National & Kapodistrian University of Athens, Athens, Greece

Open and Sustainable AI: challenges, opportunities and the road ahead in the life sciences

May 22, 2025

Abstract: Artificial intelligence (AI) has recently seen transformative breakthroughs in the life sciences, expanding possibilities for researchers to interpret biological information at an unprecedented capacity, with novel applications and advances being made almost daily. In order to maximise return on the growing investments in AI-based life science research and accelerate this progress, it has become urgent to address the exacerbation of long-standing research challenges arising from the rapid adoption of AI methods. We review the increased erosion of trust in AI research outputs, driven by the issues of poor reusability and reproducibility, and highlight their consequent impact on environmental sustainability. Furthermore, we discuss the fragmented components of the AI ecosystem and lack of guiding pathways to best support Open and Sustainable AI (OSAI) model development. In response, this perspective introduces a practical set of OSAI recommendations directly mapped to over 300 components of the AI ecosystem. Our work connects researchers with relevant AI resources, facilitating the implementation of sustainable, reusable and transparent AI. Built upon life science community consensus and aligned to existing efforts, the outputs of this perspective are designed to aid the future development of policy and structured pathways for guiding AI implementation.

Synthetic data: How could it be used for infectious disease research?

Jul 03, 2024

Abstract: Over the last three to five years, it has become possible to generate machine learning synthetic data for healthcare-related uses. However, concerns have been raised about potential negative factors associated with the possibilities of artificial dataset generation. These include the potential misuse of generative artificial intelligence (AI) in fields such as cybercrime, the use of deepfakes and fake news to deceive or manipulate, and displacement of human jobs across various market sectors. Here, we consider both current and future positive advances and possibilities with synthetic datasets. Synthetic data offers significant benefits, particularly in data privacy, research, balancing datasets and reducing bias in machine learning models. Generative AI (GenAI) is an artificial intelligence genre capable of creating text, images, video or other data using generative models. The recent explosion of interest in GenAI was heralded by the invention and rapid adoption of large language models (LLMs). These computational models are able to achieve general-purpose language generation and other natural language processing tasks and are based on transformer architectures, which made an evolutionary leap from previous neural network architectures. Fuelled by the advent of improved GenAI techniques and wide-scale usage, this is surely the time to consider how synthetic data can be used to advance infectious disease research. In this commentary we aim to create an overview of the current and future position of synthetic data in infectious disease research.

Deep Residual Error and Bag-of-Tricks Learning for Gravitational Wave Surrogate Modeling

Mar 16, 2022

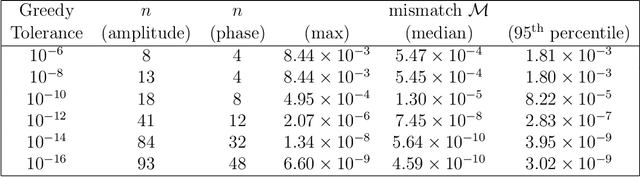

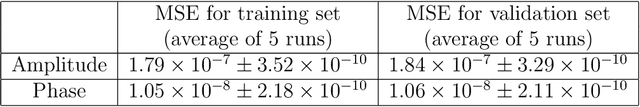

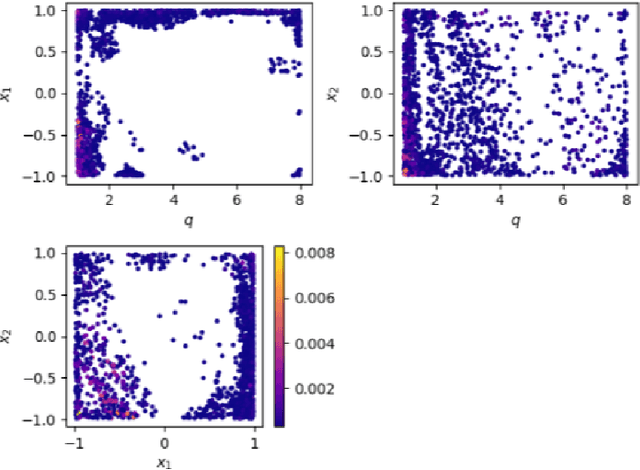

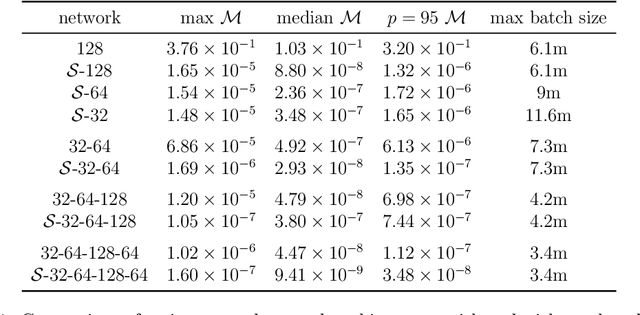

Abstract: Deep learning methods have been employed in gravitational-wave astronomy to accelerate the construction of surrogate waveforms for the inspiral of spin-aligned black hole binaries, among other applications. We demonstrate that the residual error of an artificial neural network that models the coefficients of the surrogate waveform expansion (especially those of the phase of the waveform) has sufficient structure to be learnable by a second network. Adding this second network, we were able to reduce the maximum mismatch for waveforms in a validation set by more than an order of magnitude. We also explored several other ideas for improving the accuracy of the surrogate model, such as the exploitation of similarities between waveforms, the augmentation of the training set, the dissection of the input space, using dedicated networks per output coefficient and output augmentation. In several cases, small improvements can be observed, but the most significant improvement still comes from the addition of a second network that models the residual error. Since the residual error for more general surrogate waveform models (e.g. when eccentricity is included) may also have a specific structure, one can expect our method to be applicable to cases where the gain in accuracy could lead to significant gains in computational time.

Autoencoder-driven Spiral Representation Learning for Gravitational Wave Surrogate Modelling

Jul 09, 2021

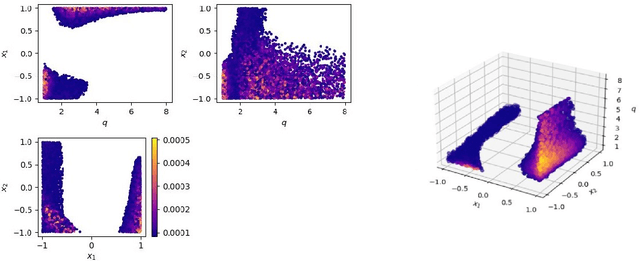

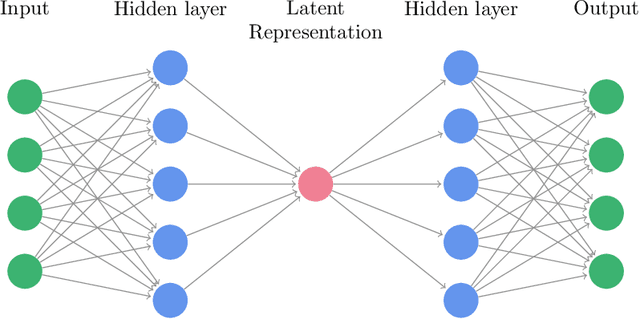

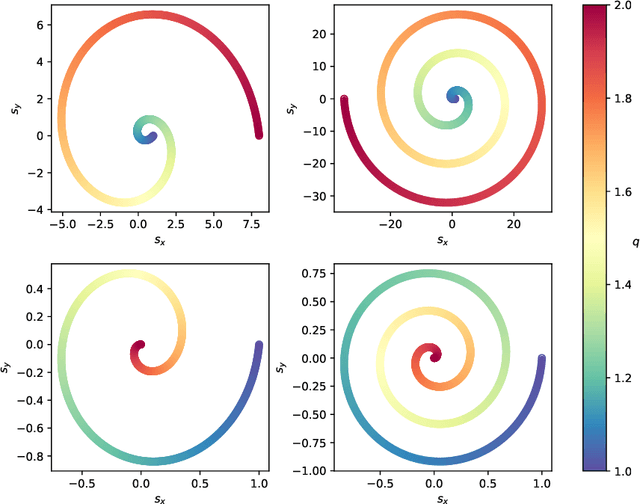

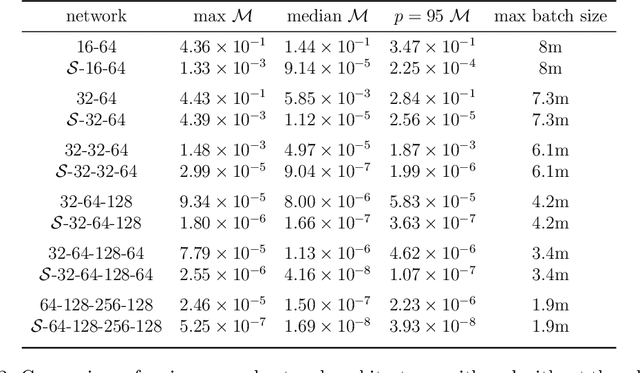

Abstract: Recently, artificial neural networks have been gaining momentum in the field of gravitational wave astronomy, for example in surrogate modelling of computationally expensive waveform models for binary black hole inspiral and merger. Surrogate modelling yields fast and accurate approximations of gravitational waves, and neural networks have been used in the final step of interpolating the coefficients of the surrogate model for arbitrary waveforms outside the training sample. We investigate the existence of underlying structures in the empirical interpolation coefficients using autoencoders. We demonstrate that when the coefficient space is compressed to only two dimensions, a spiral structure appears, wherein the spiral angle is linearly related to the mass ratio. Based on this finding, we design a spiral module with learnable parameters that is used as the first layer in a neural network, which learns to map the input space to the coefficients. The spiral module is evaluated on multiple neural network architectures and consistently achieves a better speed-accuracy trade-off than baseline models. A thorough experimental study is conducted and the final result is a surrogate model which can evaluate millions of input parameters in a single forward pass in under 1 ms on a desktop GPU, while the mismatch between the corresponding generated waveforms and the ground-truth waveforms is better than the compared baseline methods. We anticipate the existence of analogous underlying structures and corresponding computational gains also in the case of spinning black hole binaries.
