Stela Ishitani Silva

Exoplanet Transit Candidate Identification in TESS Full-Frame Images via a Transformer-Based Algorithm

Feb 11, 2025

Abstract:The Transiting Exoplanet Survey Satellite (TESS) is surveying a large fraction of the sky, generating a vast database of photometric time series data that requires thorough analysis to identify exoplanetary transit signals. Automated learning approaches have been successfully applied to identify transit signals. However, most existing methods focus on the classification and validation of candidates, while few efforts have explored new techniques for the search of candidates. To search for new exoplanet transit candidates, we propose an approach to identify exoplanet transit signals without the need for phase folding or assuming periodicity in the transit signals, such as those observed in multi-transit light curves. To achieve this, we implement a new neural network inspired by Transformers to directly process Full Frame Image (FFI) light curves to detect exoplanet transits. Transformers, originally developed for natural language processing, have recently demonstrated significant success in capturing long-range dependencies compared to previous approaches focused on sequential data. This ability allows us to employ multi-head self-attention to identify exoplanet transit signals directly from the complete light curves, combined with background and centroid time series, without requiring prior transit parameters. The network is trained to learn characteristics of the transit signal, like the dip shape, which helps distinguish planetary transits from other variability sources. Our model successfully identified 214 new planetary system candidates, including 122 multi-transit light curves, 88 single-transit and 4 multi-planet systems from TESS sectors 1-26 with a radius > 0.27 $R_{\mathrm{Jupiter}}$, demonstrating its ability to detect transits regardless of their periodicity.
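To make the self-attention step mentioned in the abstract more concrete, here is a minimal sketch of a single scaled dot-product attention head applied to a toy sequence in which each time step carries flux, background, and centroid values. It is not the authors' network: the function names, the toy data, and the identity projection matrices are all assumptions for illustration, and a real model would use several learned heads plus further layers.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is a sequence of T feature vectors (T x d_in); w_q, w_k, w_v are
    d_in x d_k projection matrices. Returns the attended sequence (T x d_k)
    and the T x T attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]          # transpose of K
    scores = matmul(q, k_t)                       # T x T similarity scores
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Hypothetical toy input: [flux, background, centroid offset] per time step,
# with a shallow dip in flux at steps 2 and 3.
toy_sequence = [
    [1.00, 0.10, 0.00],
    [1.00, 0.10, 0.00],
    [0.98, 0.10, 0.00],
    [0.98, 0.10, 0.00],
    [1.00, 0.10, 0.00],
    [1.00, 0.10, 0.00],
]
# Placeholder (untrained) projections: identity matrices, an assumption.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

attended, attention_weights = self_attention(toy_sequence, identity, identity, identity)
```

Each row of `attention_weights` sums to one and records how strongly that time step draws on every other step, which is how attention can relate distant parts of a light curve without phase folding.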

Short-Period Variables in TESS Full-Frame Image Light Curves Identified via Convolutional Neural Networks

Feb 19, 2024

Abstract:The Transiting Exoplanet Survey Satellite (TESS) mission measured light from stars in ~85% of the sky throughout its two-year primary mission, resulting in millions of TESS 30-minute cadence light curves to analyze in the search for transiting exoplanets. To search this vast dataset, we aim to provide an approach that is computationally efficient, produces highly performant predictions, and minimizes the required human search effort. We present a convolutional neural network that we train to identify short-period variables. To make a prediction for a given light curve, our network requires no prior target parameters identified using other methods. Our network performs inference on a TESS 30-minute cadence light curve in ~5ms on a single GPU, enabling large scale archival searches. We present a collection of 14156 short-period variables identified by our network. The majority of our identified variables fall into two prominent populations, one of short-period main sequence binaries and another of Delta Scuti stars. Our neural network model and related code are additionally provided as open-source code for public use and extension.

Identifying Planetary Transit Candidates in TESS Full-Frame Image Light Curves via Convolutional Neural Networks

Jan 26, 2021

Abstract:The Transiting Exoplanet Survey Satellite (TESS) mission measured light from stars in ~75% of the sky throughout its two year primary mission, resulting in millions of TESS 30-minute cadence light curves to analyze in the search for transiting exoplanets. To search this vast data trove for transit signals, we aim to provide an approach that is both computationally efficient and produces highly performant predictions. This approach minimizes the required human search effort. We present a convolutional neural network, which we train to identify planetary transit signals and dismiss false positives. To make a prediction for a given light curve, our network requires no prior transit parameters identified using other methods. Our network performs inference on a TESS 30-minute cadence light curve in ~5ms on a single GPU, enabling large scale archival searches. We present 181 new planet candidates identified by our network, which pass subsequent human vetting designed to rule out false positives. Our neural network model is additionally provided as open-source code for public use and extension.

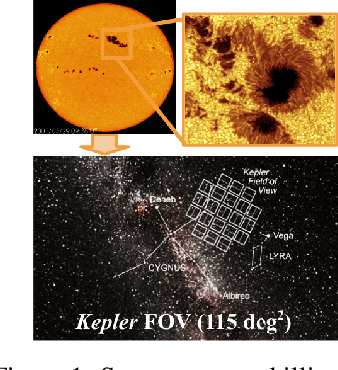

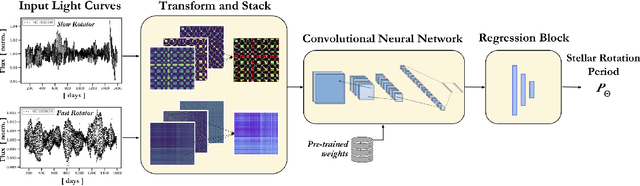

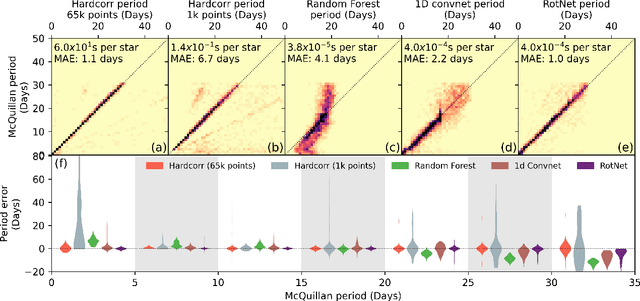

RotNet: Fast and Scalable Estimation of Stellar Rotation Periods Using Convolutional Neural Networks

Dec 04, 2020

Abstract:Magnetic activity in stars manifests as dark spots on their surfaces that modulate the brightness observed by telescopes. These light curves contain important information on stellar rotation. However, the accurate estimation of rotation periods is computationally expensive due to scarce ground truth information, noisy data, and large parameter spaces that lead to degenerate solutions. We harness the power of deep learning and successfully apply Convolutional Neural Networks to regress stellar rotation periods from Kepler light curves. Geometry-preserving time-series to image transformations of the light curves serve as inputs to a ResNet-18 based architecture which is trained through transfer learning. The McQuillan catalog of published rotation periods is used as ansatz to ground truth. We benchmark the performance of our method against a random forest regressor, a 1D CNN, and the Auto-Correlation Function (ACF) - the current standard to estimate rotation periods. Despite limiting our input to fewer data points (1k), our model yields more accurate results and runs 350 times faster than ACF runs on the same number of data points and 10,000 times faster than ACF runs on 65k data points. With only minimal feature engineering our approach has impressive accuracy, motivating the application of deep learning to regress stellar parameters on an even larger scale.
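For readers unfamiliar with the ACF baseline named in the abstract, the following is a simplified sketch of how an autocorrelation function can yield a rotation period: compute the normalized autocorrelation of the flux and take the lag of its first positive local maximum. This is not RotNet and not the benchmark code used by the authors; the function names and the noise-free synthetic light curve are assumptions for illustration, and practical ACF pipelines typically smooth the ACF and handle gaps and noise.

```python
import math

def synthetic_light_curve(period, n_points, amplitude=0.01):
    """Hypothetical spot-modulated flux: a noise-free sinusoid around 1.0."""
    return [1.0 + amplitude * math.sin(2.0 * math.pi * t / period)
            for t in range(n_points)]

def autocorrelation(flux, max_lag):
    """Normalized autocorrelation of the flux for lags 0..max_lag."""
    n = len(flux)
    mean = sum(flux) / n
    centered = [f - mean for f in flux]
    variance = sum(c * c for c in centered)
    acf = []
    for lag in range(max_lag + 1):
        cov = sum(centered[i] * centered[i + lag] for i in range(n - lag))
        acf.append(cov / variance)
    return acf

def estimate_period(flux, cadence, max_lag):
    """Return the lag of the first positive local ACF maximum, in time units.

    Returns None when no such peak exists within max_lag samples.
    """
    acf = autocorrelation(flux, max_lag)
    for lag in range(1, max_lag):
        rising = acf[lag] > acf[lag - 1]
        not_exceeded = acf[lag] >= acf[lag + 1]
        if rising and not_exceeded and acf[lag] > 0:
            return lag * cadence
    return None

flux = synthetic_light_curve(period=25.0, n_points=1000)
print(estimate_period(flux, cadence=1.0, max_lag=100))
```

The nested sum makes the cost grow with both the number of data points and the number of lags, which gives some intuition for why ACF runs slow down on longer light curves.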
