Stefano Carrazza

Benchmarking machine learning models for quantum state classification

Sep 14, 2023

Abstract:Quantum computing is a growing field in which information is processed by two-level quantum states known as qubits. Current physical realizations of qubits require careful calibration, composed of different experiments, because of noise and decoherence phenomena. Among the different characterization experiments, a crucial step is to develop a model to classify the measured state by discriminating the ground state from the excited state. In these proceedings we benchmark multiple classification techniques applied to real quantum devices.
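As a rough illustration of the classification task described above, the following sketch labels a measurement as ground or excited by its distance to two centroids learned from calibration data. It assumes each measurement is summarized as a point in a two-dimensional plane; the nearest-centroid rule, the function names, and the sample points are all illustrative choices, not necessarily among the techniques benchmarked in the proceedings.

```python
import math

def centroid(points):
    """Mean position of a list of 2D calibration shots."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def fit(ground_shots, excited_shots):
    """Return the two centroids learned from labeled calibration data."""
    return centroid(ground_shots), centroid(excited_shots)

def classify(shot, centroids):
    """Return 0 (ground) or 1 (excited): the label of the nearest centroid."""
    d0 = math.dist(shot, centroids[0])
    d1 = math.dist(shot, centroids[1])
    return 0 if d0 <= d1 else 1

# Hypothetical, hand-made calibration shots in arbitrary units.
ground = [(-1.1, 0.1), (-0.9, -0.2), (-1.0, 0.0)]
excited = [(1.0, 0.2), (1.2, -0.1), (0.8, 0.0)]

model = fit(ground, excited)
labels = [classify(s, model) for s in [(-0.7, 0.3), (0.9, -0.4)]]
print(labels)
```

A rule this simple is a natural baseline: any more flexible classifier would be expected to match or beat it on well-separated states.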

Product Jacobi-Theta Boltzmann machines with score matching

Mar 10, 2023

Abstract:The estimation of probability density functions is a nontrivial task that in recent years has been tackled with machine learning techniques. Successful applications can be obtained using models inspired by the Boltzmann machine (BM) architecture. In this manuscript, the product Jacobi-Theta Boltzmann machine (pJTBM) is introduced as a restricted version of the Riemann-Theta Boltzmann machine (RTBM) with a diagonal hidden sector connection matrix. We show that score matching, based on the Fisher divergence, can be used to fit probability densities with the pJTBM more efficiently than with the original RTBM.
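To make the score matching idea concrete, here is a minimal sketch for a one-dimensional Gaussian rather than a pJTBM. It assumes the standard form of the objective, the sample average of 0.5 * psi(x)^2 + d psi / dx, where psi is the derivative of the log-density with respect to x. The toy samples and function name are hypothetical. The useful property on display is that the objective depends only on derivatives of the log-density, so the normalization constant never has to be evaluated.

```python
def score_matching_objective(samples, mean, var):
    """Empirical score-matching objective for a 1D Gaussian model.

    The score is psi(x) = d/dx log p(x) = -(x - mean) / var, and the
    objective is the sample average of 0.5 * psi(x)**2 + d psi / dx.
    """
    total = 0.0
    for x in samples:
        psi = -(x - mean) / var
        dpsi_dx = -1.0 / var
        total += 0.5 * psi * psi + dpsi_dx
    return total / len(samples)

# Hypothetical toy data.
samples = [0.2, 1.9, 1.1, 0.7, 1.4, 0.9, 1.6, 0.2]

# For this model the minimizer is known in closed form:
# the sample mean and the (biased) sample variance.
mu_hat = sum(samples) / len(samples)
var_hat = sum((x - mu_hat) ** 2 for x in samples) / len(samples)

best = score_matching_objective(samples, mu_hat, var_hat)
shifted = score_matching_objective(samples, mu_hat + 0.3, var_hat)
print(best < shifted)
```

For richer models no closed form exists, and the same objective would be minimized numerically over the model parameters.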

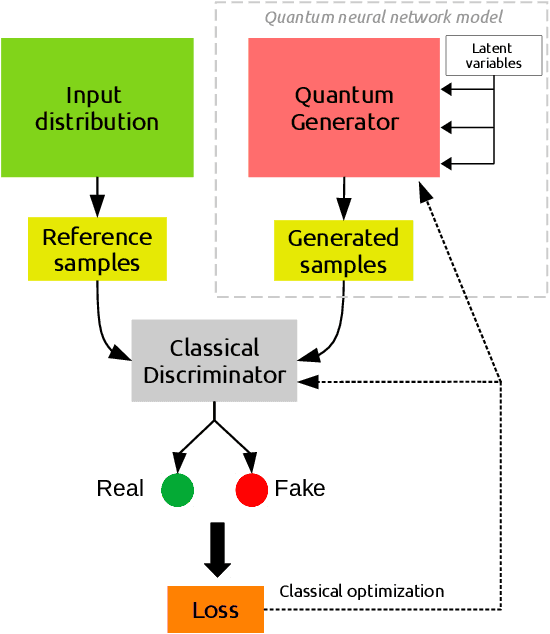

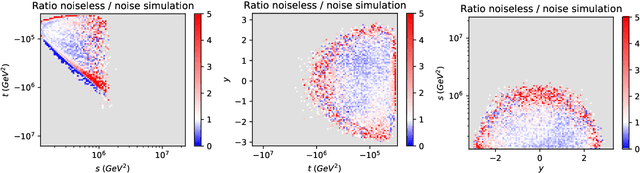

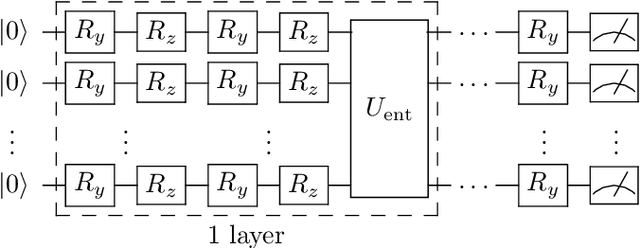

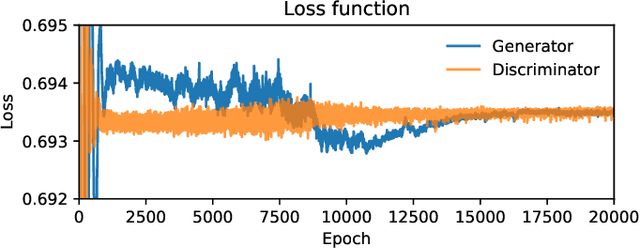

Style-based quantum generative adversarial networks for Monte Carlo events

Oct 13, 2021

Abstract:We propose and assess an alternative quantum generator architecture in the context of generative adversarial learning for Monte Carlo event generation, used to simulate particle physics processes at the Large Hadron Collider (LHC). We validate this methodology by implementing the quantum network on artificial data generated from known underlying distributions. The network is then applied to Monte Carlo-generated datasets of specific LHC scattering processes. The new quantum generator architecture leads to an improvement in state-of-the-art implementations while maintaining shallow-depth networks. Moreover, the quantum generator successfully learns the underlying distribution functions even if trained with small training sample sets; this is particularly interesting for data augmentation applications. We deploy this novel methodology on two different quantum hardware architectures, trapped-ion and superconducting technologies, to test its hardware-independent viability.

A framework for quantitative analysis of Computed Tomography images of viral pneumonitis: radiomic features in COVID and non-COVID patients

Sep 28, 2021

Abstract:Purpose: to optimize a pipeline of clinical data gathering and CT image processing implemented during the COVID-19 pandemic crisis and to develop an artificial intelligence model for differentiating viral pneumonia. Methods: 1028 chest CT images of patients with a positive swab were segmented automatically for lung extraction. A Gaussian model developed in Python was applied to calculate quantitative metrics (QM) describing well-aerated and ill portions of the lungs from the histogram distribution of lung CT numbers in both lungs of each image and in four geometrical subdivisions. Furthermore, radiomic features (RF) of first and second order were extracted from bilateral lungs using PyRadiomic tools. QM and RF were used to develop 4 different Multi-Layer Perceptron (MLP) classifiers to discriminate images of patients with COVID (n=646) and non-COVID (n=382) viral pneumonia. Results: The Gaussian model applied to the lung CT histogram correctly described healthy parenchyma in 94% of the patients. The resulting accuracies of the models for COVID diagnosis were in the range 0.76-0.87, measured as the integral of the receiver operating curve. The best diagnostic performance was associated with the model based on RF of first and second order, with 21 relevant features after LASSO regression and an accuracy of 0.81$\pm$0.02 after 4-fold cross validation. Conclusions: Although these results were obtained with CT images from a single center, a platform for extracting useful quantitative metrics from CT images was developed and optimized. Four artificial intelligence-based models for classifying patients with COVID and non-COVID viral pneumonia were developed and compared, showing overall good diagnostic performance.

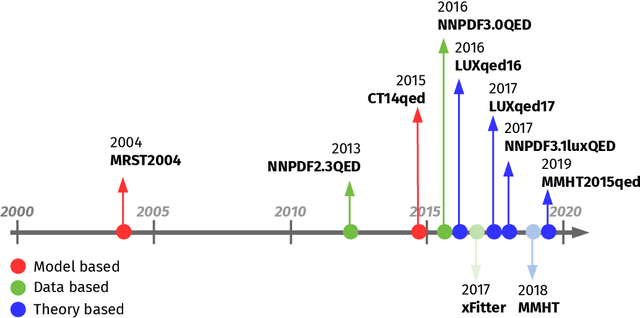

PDFFlow: hardware accelerating parton density access

Dec 15, 2020

Abstract:We present PDFFlow, new software for fast evaluation of parton distribution functions (PDFs) designed for platforms with hardware accelerators. PDFs are essential for the calculation of particle physics observables through Monte Carlo simulation techniques. The evaluation of a generic set of PDFs for quarks and gluons at a given momentum fraction and energy scale requires the implementation of interpolation algorithms, as introduced for the first time by the LHAPDF project. PDFFlow extends and implements these interpolation algorithms using Google's TensorFlow library, making it possible to perform PDF evaluations that take full advantage of multi-threaded CPU and GPU setups. We benchmark the performance of this library on multiple scenarios relevant for the particle physics community.
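The grid interpolation mentioned above can be sketched in a few lines. This is a simplified stand-in, not PDFFlow's or LHAPDF's actual algorithm: it assumes a rectangular grid in momentum fraction x and squared scale q2, and it uses bilinear interpolation in the logarithms of both variables for brevity, where production libraries typically use higher-order schemes. The function names and the toy grid are hypothetical.

```python
import bisect
import math

def interpolate_log_bilinear(x, q2, x_knots, q2_knots, grid):
    """Bilinear interpolation of grid[i][j] in (log x, log q2).

    x_knots and q2_knots are sorted; grid[i][j] is the tabulated value at
    (x_knots[i], q2_knots[j]). The point must lie inside the grid.
    """
    if not (x_knots[0] <= x <= x_knots[-1] and q2_knots[0] <= q2 <= q2_knots[-1]):
        raise ValueError("point outside the interpolation grid")
    # Index of the lower knot of the cell containing the point.
    i = min(bisect.bisect_right(x_knots, x) - 1, len(x_knots) - 2)
    j = min(bisect.bisect_right(q2_knots, q2) - 1, len(q2_knots) - 2)
    # Fractional position inside the cell, measured in log space.
    lx0, lx1 = math.log(x_knots[i]), math.log(x_knots[i + 1])
    lq0, lq1 = math.log(q2_knots[j]), math.log(q2_knots[j + 1])
    tx = (math.log(x) - lx0) / (lx1 - lx0)
    tq = (math.log(q2) - lq0) / (lq1 - lq0)
    low = (1 - tx) * grid[i][j] + tx * grid[i + 1][j]
    high = (1 - tx) * grid[i][j + 1] + tx * grid[i + 1][j + 1]
    return (1 - tq) * low + tq * high

def toy(x, q2):
    """Hypothetical smooth function used only to fill the example grid."""
    return 1.0 + 2.0 * math.log(x) - 0.5 * math.log(q2)

x_knots = [1e-3, 1e-2, 1e-1, 1.0]
q2_knots = [1.0, 10.0, 100.0]
grid = [[toy(x, q2) for q2 in q2_knots] for x in x_knots]

value = interpolate_log_bilinear(0.03, 40.0, x_knots, q2_knots, grid)
print(value, toy(0.03, 40.0))
```

Because each query touches only one grid cell and the cells are independent, evaluating many (x, q2) points at once is the kind of workload that maps well onto vectorized CPU or GPU execution.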

PDFFlow: parton distribution functions on GPU

Sep 14, 2020

Abstract:We present PDFFlow, new software for fast evaluation of parton distribution functions (PDFs) designed for platforms with hardware accelerators. PDFs are essential for the calculation of particle physics observables through Monte Carlo simulation techniques. The evaluation of a generic set of PDFs for quarks and gluons at a given momentum fraction and energy scale requires the implementation of interpolation algorithms, as introduced for the first time by the LHAPDF project. PDFFlow extends and implements these interpolation algorithms using Google's TensorFlow library, providing the capability to perform PDF evaluations that take full advantage of multi-threaded CPU and GPU setups. We benchmark the performance of this library on multiple scenarios relevant for the particle physics community.

Qibo: a framework for quantum simulation with hardware acceleration

Sep 03, 2020

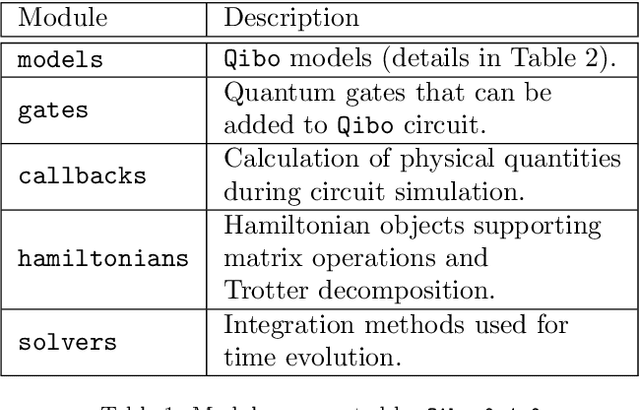

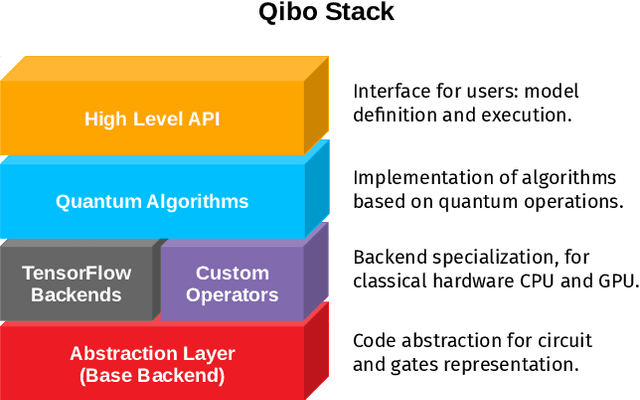

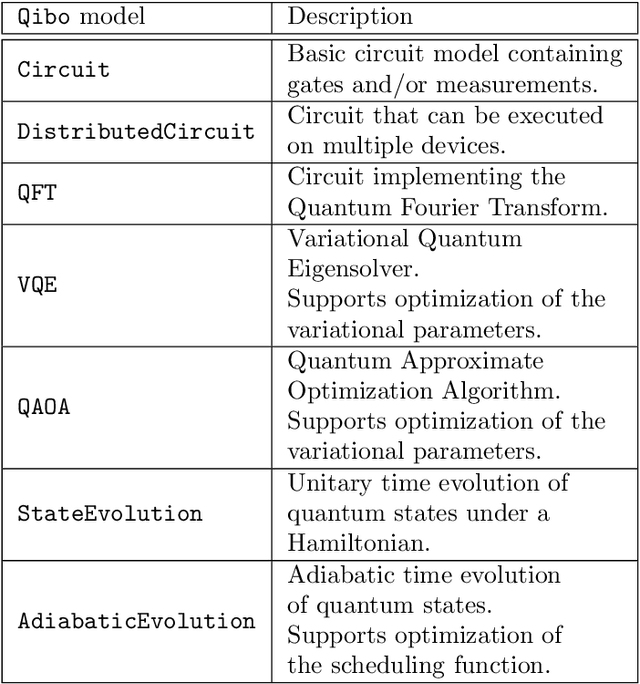

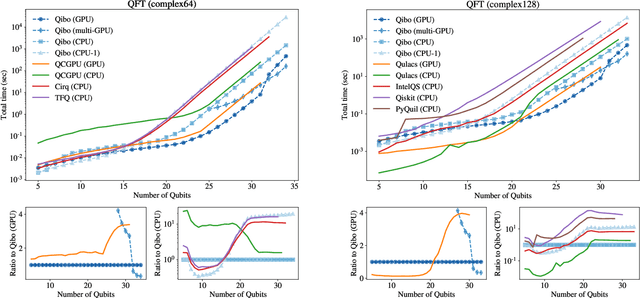

Abstract:We present Qibo, new open-source software for fast evaluation of quantum circuits and adiabatic evolution which takes full advantage of hardware accelerators. The growing interest in quantum computing and the recent developments of quantum hardware devices motivate the development of new advanced computational tools focused on performance and ease of use. In this work we introduce a new quantum simulation framework that enables developers to delegate all complicated aspects of hardware or platform implementation to the library so they can focus on the problem and quantum algorithms at hand. This software is designed from scratch with simulation performance, code simplicity and a user-friendly interface as target goals. It takes advantage of hardware acceleration such as multi-threaded CPU, single-GPU and multi-GPU devices.
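For readers unfamiliar with what a circuit simulator computes, the sketch below applies a Hadamard gate and a CNOT to a two-qubit state vector in plain Python. It does not use Qibo's API; the helper names and the qubit-ordering convention (qubit 0 is the most significant bit) are assumptions made for this example only.

```python
import math

def apply_single_qubit_gate(state, gate, target, nqubits):
    """Apply a 2x2 gate to qubit `target` (qubit 0 = most significant bit)."""
    new_state = list(state)
    bit = 1 << (nqubits - 1 - target)
    for index in range(len(state)):
        if (index & bit) == 0:
            # Pair the amplitude with target bit 0 with its bit-1 partner.
            a, b = state[index], state[index | bit]
            new_state[index] = gate[0][0] * a + gate[0][1] * b
            new_state[index | bit] = gate[1][0] * a + gate[1][1] * b
    return new_state

def apply_cnot(state, control, target, nqubits):
    """Flip qubit `target` on the basis states where `control` is 1."""
    cbit = 1 << (nqubits - 1 - control)
    tbit = 1 << (nqubits - 1 - target)
    new_state = list(state)
    for index in range(len(state)):
        if (index & cbit) and not (index & tbit):
            new_state[index] = state[index | tbit]
            new_state[index | tbit] = state[index]
    return new_state

s = 1.0 / math.sqrt(2.0)
hadamard = [[s, s], [s, -s]]

state = [1.0, 0.0, 0.0, 0.0]          # |00>
state = apply_single_qubit_gate(state, hadamard, 0, 2)
state = apply_cnot(state, 0, 1, 2)    # Bell state (|00> + |11>) / sqrt(2)
probabilities = [abs(a) ** 2 for a in state]
print(probabilities)
```

The state vector has 2^n entries for n qubits and every gate updates all of them, which is why simulation cost grows quickly and hardware acceleration becomes attractive.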

VegasFlow: accelerating Monte Carlo simulation across multiple hardware platforms

Feb 28, 2020

Abstract:We present VegasFlow, new software for fast evaluation of high-dimensional integrals based on Monte Carlo integration techniques, designed for platforms with hardware accelerators. The growing complexity of calculations and simulations in many areas of science has been accompanied by advances in the computational tools that have supported their development. VegasFlow enables developers to delegate all complicated aspects of hardware or platform implementation to the library so they can focus on the problem at hand. This software is inspired by the Vegas algorithm, ubiquitous in the particle physics community as the driver of cross section integration, and based on Google's powerful TensorFlow library. We benchmark the performance of this library on many different consumer and professional grade GPUs and CPUs.
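The basic operation behind this kind of library can be illustrated with plain Monte Carlo integration over the unit hypercube. This sketch is deliberately not the Vegas algorithm, which additionally adapts its sampling grid between iterations to concentrate points where the integrand is large; the function name, integrand, and sample count here are hypothetical.

```python
import math
import random

def mc_integrate(f, dim, n_samples, seed=0):
    """Plain Monte Carlo estimate of the integral of f over [0, 1]^dim.

    Returns (estimate, standard_error).
    """
    rng = random.Random(seed)
    total = 0.0
    total_sq = 0.0
    for _ in range(n_samples):
        value = f([rng.random() for _ in range(dim)])
        total += value
        total_sq += value * value
    mean = total / n_samples
    # Biased sample variance, clipped at zero against rounding noise.
    variance = max(total_sq / n_samples - mean * mean, 0.0)
    # Dividing by (n - 1) gives the usual standard error of the mean.
    return mean, math.sqrt(variance / (n_samples - 1))

# The exact integral of x0 * x1 * x2 over the unit cube is 1/8.
estimate, error = mc_integrate(lambda x: x[0] * x[1] * x[2], dim=3, n_samples=20000)
print(estimate, error)
```

Each sample is evaluated independently of the others, so the loop is trivially parallel; this is the property that makes Monte Carlo integration a good fit for GPUs.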

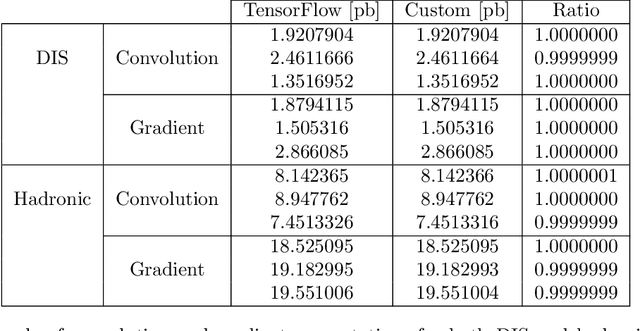

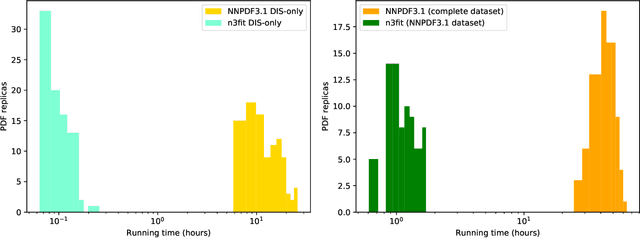

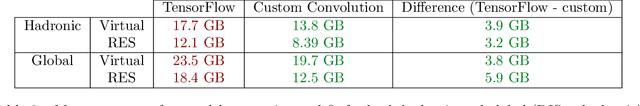

Towards hardware acceleration for parton densities estimation

Sep 23, 2019

Abstract:In these proceedings we describe the computational challenges associated with the determination of parton distribution functions (PDFs). We compare the performance of the convolution of the parton distributions with matrix elements using different hardware instructions. We identify and quantify the most promising data-model configurations for increasing PDF fitting performance when adapting the current code frameworks to hardware accelerators such as graphics processing units.

Lund jet images from generative and cycle-consistent adversarial networks

Sep 03, 2019

Abstract:We introduce a generative model to simulate radiation patterns within a jet using the Lund jet plane. We show that using an appropriate neural network architecture with a stochastic generation of images, it is possible to construct a generative model which retrieves the underlying two-dimensional distribution to within a few percent. We compare our model with several alternative state-of-the-art generative techniques. Finally, we show how a mapping can be created between different categories of jets, and use this method to retroactively change simulation settings or the underlying process on an existing sample. These results provide a framework for significantly reducing simulation times through fast inference of the neural network as well as for data augmentation of physical measurements.
