Stavros Theodorakis

Deeplab - Greece, Taboola.com - Israel

An Incremental Learning framework for Large-scale CTR Prediction

Sep 01, 2022

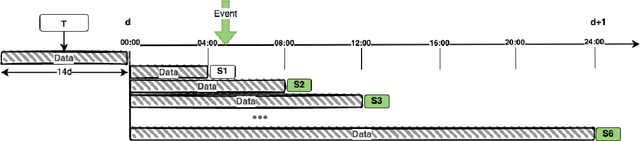

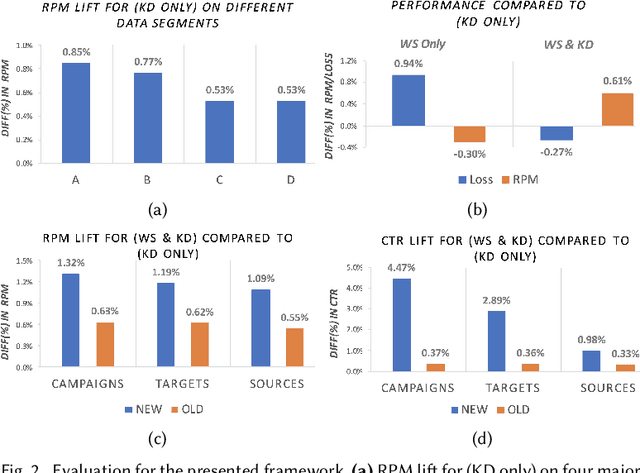

Abstract: In this work, we introduce an incremental learning framework for Click-Through-Rate (CTR) prediction and demonstrate its effectiveness for Taboola's massive-scale recommendation service. Our approach enables rapid capture of emerging trends by warm-starting from previously deployed models and fine-tuning on "fresh" data only. Past knowledge is maintained via a teacher-student paradigm, in which distillation from the teacher mitigates the catastrophic forgetting phenomenon. Our incremental learning framework enables significantly faster training and deployment cycles (12x speedup). We demonstrate a consistent Revenue Per Mille (RPM) lift over multiple traffic segments and a significant CTR increase on newly introduced items.
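As a rough illustration of the teacher-student idea described in this abstract, the sketch below combines a click-label loss on fresh data with a distillation term that pulls the student's predicted CTR toward the teacher's. The function names, the mixing weight `alpha`, and the use of binary cross-entropy for both terms are assumptions made for illustration; the abstract does not specify the loss actually used.

```python
import math


def bce(target, pred, eps=1e-7):
    """Binary cross-entropy between a target in [0, 1] and a predicted probability."""
    pred = min(max(pred, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))


def incremental_loss(label, student_p, teacher_p, alpha=0.5):
    """Hypothetical per-example loss for incremental training.

    label     -- observed click (0 or 1) from the fresh data
    student_p -- CTR predicted by the model being fine-tuned
    teacher_p -- CTR predicted by the previously deployed model
    alpha     -- assumed weight on the distillation term
    """
    hard = bce(label, student_p)      # fit the fresh click labels
    soft = bce(teacher_p, student_p)  # stay close to the teacher's prediction
    return (1.0 - alpha) * hard + alpha * soft
```

With `alpha=0.0` this reduces to ordinary fine-tuning on fresh data; larger values put more weight on retaining the teacher's behaviour.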

Deep density networks and uncertainty in recommender systems

May 06, 2018

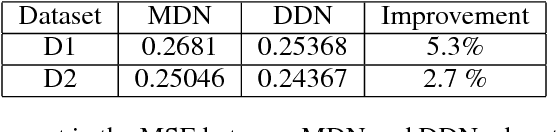

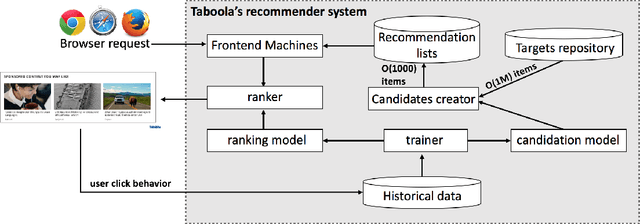

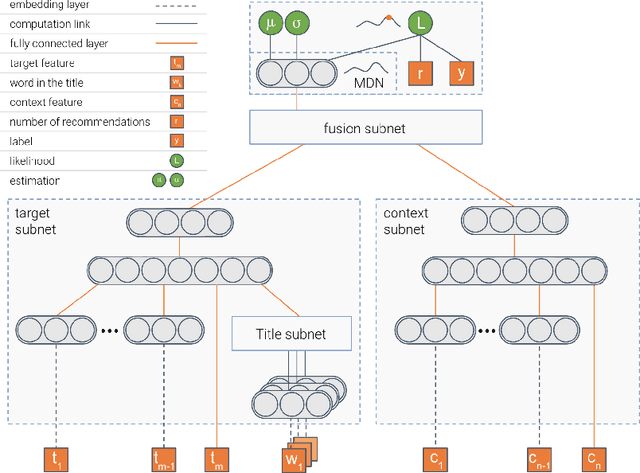

Abstract: Building robust online content recommendation systems requires learning complex interactions between user preferences and content features. The field has evolved rapidly in recent years from traditional multi-armed bandit and collaborative filtering techniques, with new methods employing Deep Learning models to capture non-linearities. Despite progress, the dynamic nature of online recommendations still poses great challenges, such as finding the delicate balance between exploration and exploitation. In this paper we show how uncertainty estimations can be incorporated by employing them in an optimistic exploitation/exploration strategy for more efficient exploration of new recommendations. We provide a novel hybrid deep neural network model, Deep Density Networks (DDN), which integrates content-based deep learning models with a collaborative scheme that is able to robustly model and estimate uncertainty. Finally, we present online and offline results after incorporating DDN into a real-world content recommendation system that serves billions of recommendations per day, and show the benefit of using DDN in practice.
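One common way to turn uncertainty estimates into an optimistic exploration strategy is an upper-confidence-style score: rank each candidate by its predicted CTR plus a multiple of its estimated standard deviation. The sketch below assumes that form; the names `optimistic_score` and `pick_item`, the candidate dictionary layout, and the coefficient `a` are hypothetical and are not taken from the paper.

```python
def optimistic_score(mean_ctr, std_ctr, a=1.0):
    """Predicted CTR plus an exploration bonus proportional to its uncertainty."""
    return mean_ctr + a * std_ctr


def pick_item(candidates, a=1.0):
    """Return the id of the candidate with the highest optimistic score.

    candidates -- list of dicts with keys "id", "mean", "std" (assumed layout)
    a          -- assumed exploration coefficient; 0 means pure exploitation
    """
    best = max(candidates, key=lambda c: optimistic_score(c["mean"], c["std"], a))
    return best["id"]


candidates = [
    {"id": "established", "mean": 0.050, "std": 0.001},
    {"id": "new", "mean": 0.040, "std": 0.020},
]
```

With `a=0` the established item wins on its higher mean; with `a=1` the new item's larger uncertainty gives it the higher score, so it gets explored.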
