Srinath Saikrishnan

University of California, Los Angeles

Improving Generalization and Uncertainty Quantification of Photometric Redshift Models

Jan 23, 2026

Abstract: Accurate redshift estimates are a vital component in understanding galaxy evolution and precision cosmology. In this paper, we explore approaches to increase the applicability of machine learning models for photometric redshift estimation on a broader range of galaxy types. Typical models are trained with ground-truth redshifts from spectroscopy. We test the utility and effectiveness of two approaches for combining spectroscopic redshifts and redshifts derived from multiband ($\sim$35 filters) photometry, which sample different types of galaxies compared to spectroscopic surveys. The two approaches are (1) training on a composite dataset and (2) transfer learning from one dataset to another. We compile photometric redshifts from the COSMOS2020 catalog (TransferZ) to complement an established spectroscopic redshift dataset (GalaxiesML). We use two architectures, deterministic neural networks (NN) and Bayesian neural networks (BNN), and evaluate their performance with respect to the Legacy Survey of Space and Time (LSST) photo-$z$ science requirements. We also use split conformal prediction for calibrating uncertainty estimates and producing prediction intervals for the BNN and NN, respectively. We find that an NN trained on a composite dataset predicts photo-$z$'s that are 4.5 times less biased within the redshift range $0.3<z<1.5$ and 1.1 times less scattered, with a 1.4 times lower outlier rate, than a model trained on only spectroscopic ground truths. We also find that BNNs produce reliable uncertainty estimates, but are sensitive to the different ground truths. This investigation leverages different sources of ground truths to develop models that can accurately predict photo-$z$'s for a broader population of galaxies crucial for surveys such as Euclid and LSST.
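The abstract above mentions split conformal prediction for turning point predictions into prediction intervals. A minimal sketch of the standard absolute-residual variant follows; it is not taken from the paper, and the function name `split_conformal_interval`, the argument names, and the choice of absolute residuals as the conformity score are all assumptions made for illustration.

```python
import math
import numpy as np

def split_conformal_interval(cal_pred, cal_true, test_pred, alpha=0.1):
    """Symmetric prediction intervals targeting (1 - alpha) marginal coverage.

    Hypothetical helper: cal_pred / cal_true are model predictions and
    ground-truth redshifts on a held-out calibration split.
    """
    cal_pred = np.asarray(cal_pred, dtype=float)
    cal_true = np.asarray(cal_true, dtype=float)
    test_pred = np.asarray(test_pred, dtype=float)

    # Conformity score: absolute residual on the calibration set (an assumption).
    scores = np.sort(np.abs(cal_true - cal_pred))
    n = scores.size

    # Finite-sample rank: the ceil((n + 1) * (1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1 - alpha))
    q = scores[k - 1] if k <= n else np.inf  # too few points: interval is unbounded

    return test_pred - q, test_pred + q
```

The half-width `q` is the same for every test object in this variant; methods that scale the score by a per-object uncertainty (for example, a BNN's predicted standard deviation) would give adaptive widths instead.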

Combining datasets with different ground truths using Low-Rank Adaptation to generalize image-based CNN models for photometric redshift prediction

Jan 01, 2026

Abstract: In this work, we demonstrate how Low-Rank Adaptation (LoRA) can be used to combine different galaxy imaging datasets to improve redshift estimation with CNN models for cosmology. LoRA is an established technique for large language models that adds adapter networks to adjust model weights and biases to efficiently fine-tune large base models without retraining. We train a base model using a photometric redshift ground truth dataset, which contains broad galaxy types but is less accurate. We then fine-tune using LoRA on a spectroscopic redshift ground truth dataset. These redshifts are more accurate but limited to bright galaxies and take orders of magnitude more time to obtain, so they are less available for large surveys. Ideally, the combination of the two datasets would yield more accurate models that generalize well. The LoRA model performs better than a traditional transfer learning method, with $\sim$2.5$\times$ less bias and $\sim$2.2$\times$ less scatter. Retraining the model on a combined dataset yields a model that generalizes better than LoRA but at a cost of greater computation time. Our work shows that LoRA is useful for fine-tuning regression models in astrophysics by providing a middle ground between full retraining and no retraining. LoRA shows potential in allowing us to leverage existing pretrained astrophysical models, especially for data-sparse tasks.
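To illustrate the low-rank update at the core of LoRA, here is a minimal NumPy sketch for a single dense layer. It is an illustration under stated assumptions rather than the paper's CNN implementation: the function name `lora_forward`, the layer sizes, the rank `r`, and the scaling `alpha / r` are all hypothetical choices.

```python
import numpy as np

def lora_forward(x, W, b, A, B, alpha=8.0):
    """Dense layer whose frozen weight W receives a low-rank update B @ A.

    W: (d_out, d_in) frozen base weight; A: (r, d_in) and B: (d_out, r)
    are the only trainable matrices. The alpha / r scaling is an assumption.
    """
    r = A.shape[0]
    delta = (alpha / r) * (B @ A)      # same shape as W, rank at most r
    return x @ (W + delta).T + b

rng = np.random.default_rng(0)
d_in, d_out, r = 16, 4, 2              # hypothetical sizes

W = rng.normal(size=(d_out, d_in))     # stands in for a pretrained weight
b = np.zeros(d_out)
A = rng.normal(scale=0.01, size=(r, d_in))
B = np.zeros((d_out, r))               # zero init: adapted layer starts equal to the base layer

x = rng.normal(size=(3, d_in))
y = lora_forward(x, W, b, A, B)        # identical to x @ W.T + b until B is trained
```

With these sizes the adapter holds `A.size + B.size = 40` trainable values against 64 in `W`; the saving grows quickly for larger layers, which is what makes fine-tuning cheaper than full retraining.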

Multi-Modal Masked Autoencoders for Learning Image-Spectrum Associations for Galaxy Evolution and Cosmology

Oct 26, 2025

Abstract: Upcoming surveys will produce billions of galaxy images but comparatively few spectra, motivating models that learn cross-modal representations. We build a dataset of 134,533 galaxy images (HSC-PDR2) and spectra (DESI-DR1) and adapt a Multi-Modal Masked Autoencoder (MMAE) to embed both images and spectra in a shared representation. The MMAE is a transformer-based architecture, which we train by masking 75% of the data and reconstructing missing image and spectral tokens. We use this model to test three applications: spectral and image reconstruction from heavily masked data and redshift regression from images alone. It recovers key physical features, such as galaxy shapes, atomic emission line peaks, and broad continuum slopes, though it struggles with fine image details and line strengths. For redshift regression, the MMAE performs comparably to or better than prior multi-modal models in terms of prediction scatter, even when spectra are missing at test time. These results highlight both the potential and limitations of masked autoencoders in astrophysics and motivate extensions to additional modalities, such as text, for foundation models.
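The 75% masking step described above can be sketched as uniform random token masking. This is only an illustration of the general masked-autoencoder idea; the function name `random_mask`, the token layout, and the uniform sampling strategy are assumptions, and the paper may mask image and spectral tokens differently.

```python
import numpy as np

def random_mask(tokens, mask_ratio=0.75, rng=None):
    """Hide a random fraction of tokens; return visible tokens and a mask.

    tokens: (n_tokens, dim) array. The returned boolean mask has True where
    a token is hidden from the encoder and must be reconstructed.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = tokens.shape[0]
    n_keep = int(round(n * (1 - mask_ratio)))

    # Pick which token positions stay visible, keeping their original order.
    keep_idx = np.sort(rng.permutation(n)[:n_keep])

    mask = np.ones(n, dtype=bool)
    mask[keep_idx] = False
    return tokens[keep_idx], mask
```

An encoder would see only the visible tokens, while a reconstruction loss would typically be evaluated on the positions where `mask` is `True`.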

Using different sources of ground truths and transfer learning to improve the generalization of photometric redshift estimation

Nov 27, 2024

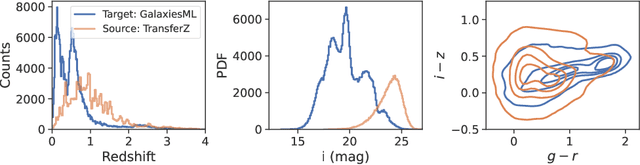

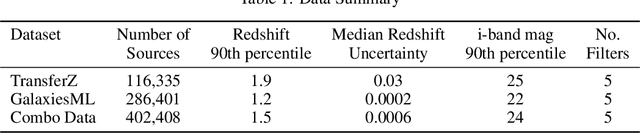

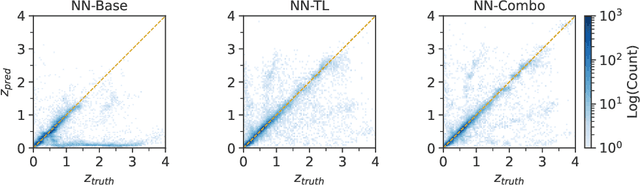

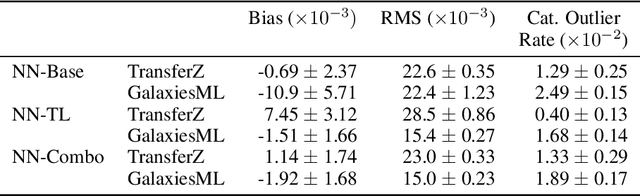

Abstract: In this work, we explore methods to improve galaxy redshift predictions by combining different ground truths. Traditional machine learning models rely on training sets with known spectroscopic redshifts, which are precise but only represent a limited sample of galaxies. To make redshift models more generalizable to the broader galaxy population, we investigate transfer learning and directly combining ground truth redshifts derived from photometry and spectroscopy. We use the COSMOS2020 survey to create a dataset, TransferZ, which includes photometric redshift estimates derived from up to 35 imaging filters using template fitting. This dataset spans a wider range of galaxy types and colors compared to spectroscopic samples, though its redshift estimates are less accurate. We first train a base neural network on TransferZ and then refine it using transfer learning on a dataset of galaxies with more precise spectroscopic redshifts (GalaxiesML). In addition, we train a neural network on a combined dataset of TransferZ and GalaxiesML. Both methods reduce bias by $\sim$5x, RMS error by $\sim$1.5x, and catastrophic outlier rates by 1.3x on GalaxiesML, compared to a baseline trained only on TransferZ. However, we also find a reduction in performance for RMS and bias when evaluated on TransferZ data. Overall, our results demonstrate these approaches can meet cosmological requirements.
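Bias, RMS error, and outlier rate, as quoted above, are usually computed from the normalized residual $\Delta z = (z_\mathrm{pred} - z_\mathrm{true}) / (1 + z_\mathrm{true})$. The sketch below uses one common set of definitions; the function name `photoz_metrics` and the outlier threshold of 0.15 are assumptions, and the papers listed here may define these quantities differently (for example, a catastrophic outlier cut on the unnormalized residual).

```python
import numpy as np

def photoz_metrics(z_pred, z_true, outlier_cut=0.15):
    """Summary statistics of the normalized photo-z residual.

    outlier_cut is an assumed threshold on |dz|; adjust it to the
    definition used by the survey requirement being tested.
    """
    z_pred = np.asarray(z_pred, dtype=float)
    z_true = np.asarray(z_true, dtype=float)

    dz = (z_pred - z_true) / (1.0 + z_true)

    return {
        "bias": float(np.mean(dz)),                 # mean normalized residual
        "rms": float(np.sqrt(np.mean(dz ** 2))),    # root-mean-square residual
        "outlier_rate": float(np.mean(np.abs(dz) > outlier_cut)),
    }
```

Computing the same dictionary for two models on a shared test set lets ratios such as "N times less biased" be read off directly.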
