Soumya Jana

AI-based 3-Lead to 12-Lead ECG Reconstruction: Towards Smartphone-based Public Healthcare

Oct 17, 2024

Abstract: Clinicians generally diagnose cardiovascular diseases (CVDs) using the standard 12-lead electrocardiogram (ECG). However, for smartphone-based public healthcare systems, a reduced 3-lead system may be preferred because of (i) increased portability and (ii) reduced power, storage and bandwidth requirements. Consequently, clinicians require accurate 3-lead to 12-lead ECG reconstruction, which has so far been studied only in the personalized setting. When each device is dedicated to one individual, artificial intelligence (AI) methods such as the temporal long short-term memory (LSTM) network and the further improved spatio-temporal LSTM-UNet combination have proven effective. In contrast, in the smartphone-based public health setting, where a common device is shared by many, developing an AI lead-reconstruction model that caters to the extensive ECG signal variability in the general population appears to be a far greater challenge. Taking a first step in this direction, we observe that the performance improvement achieved by a generative model, specifically a 1D Pix2Pix GAN (generative adversarial network), over LSTM-UNet is encouraging.
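As a point of reference, the standard Pix2Pix objective (Isola et al., 2017) combines a conditional adversarial loss with an L1 reconstruction loss. Reading the condition x as a 3-lead segment and the target y as the corresponding 12-lead segment is an assumption made here for illustration; the abstract does not state the loss used, and the 1D variant may differ in its terms or in the weight λ.

```latex
\mathcal{L}_{\mathrm{cGAN}}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x}\left[\log\left(1 - D(x, G(x))\right)\right]

\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y}\left[\lVert y - G(x) \rVert_1\right]

G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{\mathrm{cGAN}}(G, D) + \lambda\,\mathcal{L}_{L1}(G)
```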

Next-Generation Teleophthalmology: AI-enabled Quality Assessment Aiding Remote Smartphone-based Consultation

Feb 11, 2024

Abstract: Blindness and other eye diseases are a global health concern, particularly in low- and middle-income countries like India. During the COVID-19 pandemic, teleophthalmology became a lifeline, and the Grabi attachment for smartphone-based eye imaging gained wider use. However, the quality of user-captured images often remained inadequate, requiring clinician vetting and causing delays. Against this backdrop, we propose an AI-based quality assessment system that gives instant feedback mimicking clinicians' judgments, and we test it on patient-captured images. Dividing the complex problem hierarchically, we tackle a nontrivial part here and demonstrate a proof of concept.

3-Lead to 12-Lead ECG Reconstruction: A Novel AI-based Spatio-Temporal Method

Aug 12, 2023

Abstract: Diagnosis of cardiovascular diseases usually relies on the widely used standard 12-lead (S12) ECG system. However, such a system can be bulky, too resource-intensive, and too specialized for personalized home-based monitoring. In contrast, clinicians are generally not trained on the proposed alternative, namely the reduced lead (RL) system. This necessitates mapping RL to S12. In this context, to improve upon traditional linear transformation (LT) techniques, artificial intelligence (AI) approaches such as long short-term memory (LSTM) networks, which capture non-linear temporal dependencies, have been suggested. However, LSTM does not adequately interpolate spatially (in 3D). To fill this gap, we propose a combined LSTM-UNet model that also handles the spatial aspects of the problem, and we demonstrate a performance improvement. Evaluated on the PhysioNet PTBDB database, our LSTM-UNet achieved a mean R² of 94.37%, surpassing LSTM by 0.79% and LT by 2.73%. Similarly, on the PhysioNet INCARTDB database, LSTM-UNet achieved a mean R² of 93.91%, outperforming LSTM by 1.78% and LT by 12.17%.
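The mean R² figures can be made concrete with a minimal sketch, assuming the common definition R² = 1 - SS_res / SS_tot computed per reconstructed lead, averaged over leads and reported as a percentage. The paper may compute it differently (for example, as a squared correlation coefficient), and the function names here are hypothetical.

```python
from typing import Sequence


def r_squared(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """R^2 = 1 - SS_res / SS_tot for a single lead (hypothetical helper)."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("signals must be non-empty and of equal length")
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for a constant reference signal")
    return 1.0 - ss_res / ss_tot


def mean_r_squared_percent(actual_leads: Sequence[Sequence[float]],
                           predicted_leads: Sequence[Sequence[float]]) -> float:
    """Average the per-lead R^2 over all reconstructed leads, as a percentage."""
    scores = [r_squared(a, p) for a, p in zip(actual_leads, predicted_leads)]
    return 100.0 * sum(scores) / len(scores)


# Toy example: one lead reconstructed perfectly, one replaced by its mean value.
reference = [[0.0, 1.0, 2.0, 3.0], [1.0, 2.0, 3.0]]
reconstruction = [[0.0, 1.0, 2.0, 3.0], [2.0, 2.0, 2.0]]
print(mean_r_squared_percent(reference, reconstruction))  # 50.0
```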

Diagnostic Quality Assessment of Fundus Photographs: Hierarchical Deep Learning with Clinically Significant Explanations

Feb 18, 2023

Abstract: Fundus photography (FP) remains the primary imaging modality for screening various retinal diseases, including age-related macular degeneration, diabetic retinopathy and glaucoma. FP allows the clinician to examine ocular fundus structures such as the macula, the optic disc (OD) and retinal vessels, whose visibility and clarity in an FP image are central to diagnostic accuracy and hence determine the diagnostic quality (DQ). Images with low DQ, resulting from eye movement, improper illumination or other causes, should be recaptured. However, the technician, often unfamiliar with DQ criteria, initiates recapture only on the basis of expert feedback. The process potentially engages the imaging device multiple times for a single subject, and wastes the time and effort of the ophthalmologist, the technician and the subject. The burden could be prohibitive in teleophthalmology, where obtaining feedback from a remote expert entails additional communication cost and delay. Accordingly, there is a strong need for automated diagnostic quality assessment (DQA), in which an image is immediately assigned a DQ category. In response, motivated by the notional continuum of DQ, we propose a hierarchical deep learning (DL) architecture to distinguish between good, usable and unusable categories. On the public EyeQ dataset, we achieve an accuracy of 89.44%, improving upon existing methods. In addition, using gradient-weighted class activation mapping (Grad-CAM), we generate visual explanations that agree with expert intuition. Future FP cameras equipped with the proposed DQA algorithm could improve the efficacy of teleophthalmology as well as of the traditional system.
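Grad-CAM weights each feature map A^k of a chosen convolutional layer by the spatial average of the gradient of the class score y^c with respect to that map, sums the weighted maps, and applies a ReLU. The sketch below implements only this combination step in plain Python, assuming the activations and gradients have already been extracted from the trained network (for instance, via framework hooks). Upsampling the heatmap to image size and normalizing it are omitted, and the function name is hypothetical.

```python
from typing import List

Map = List[List[float]]  # one H x W feature map


def grad_cam(activations: List[Map], gradients: List[Map]) -> Map:
    """Combine K feature maps A^k with gradients dy^c/dA^k into a Grad-CAM heatmap.

    activations[k][i][j] is A^k_ij from the chosen convolutional layer;
    gradients[k][i][j] is the gradient of the class score y^c w.r.t. A^k_ij.
    """
    height, width = len(activations[0]), len(activations[0][0])
    z = height * width
    # Channel weights alpha_k: global-average-pool the gradient of each map.
    weights = [sum(sum(row) for row in grad) / z for grad in gradients]
    # Weighted sum of the feature maps, then ReLU to keep positive evidence only.
    heatmap = []
    for i in range(height):
        row = []
        for j in range(width):
            value = sum(w * act[i][j] for w, act in zip(weights, activations))
            row.append(max(0.0, value))
        heatmap.append(row)
    return heatmap
```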

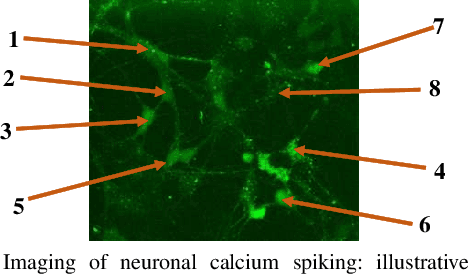

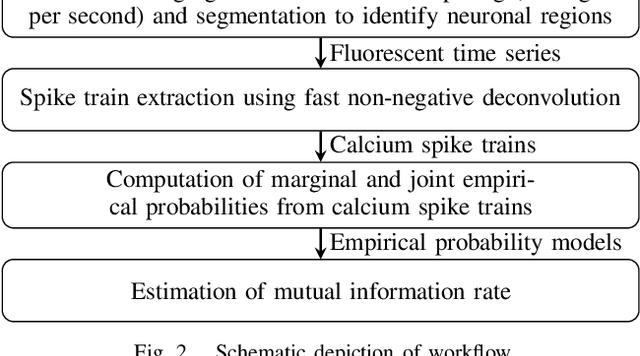

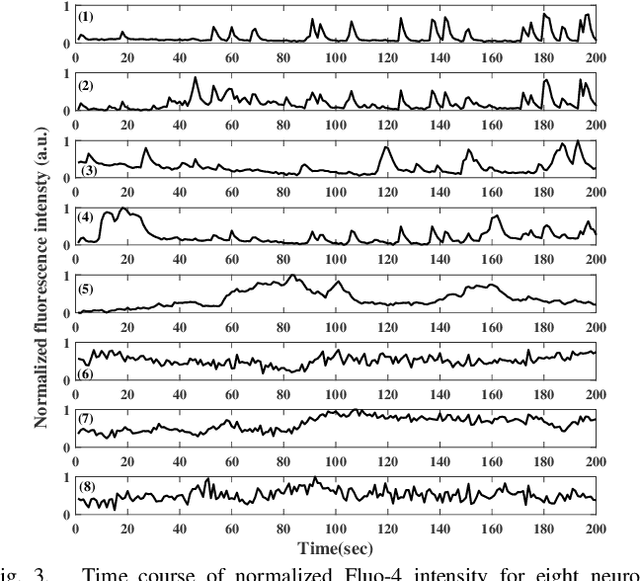

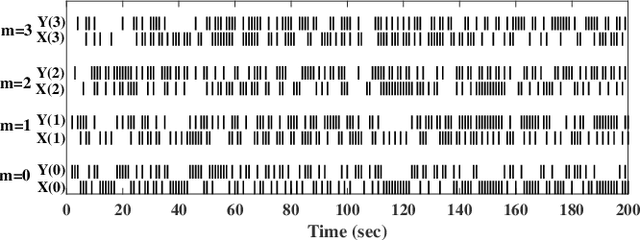

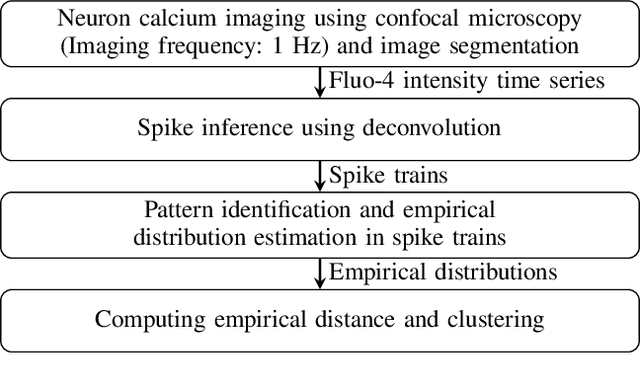

Correlation in Neuronal Calcium Spiking: Quantification based on Empirical Mutual Information Rate

May 07, 2021

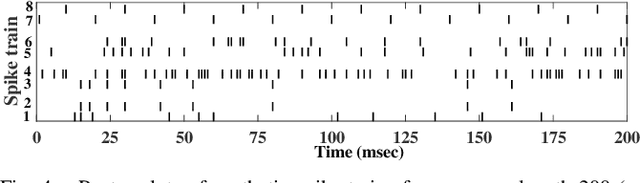

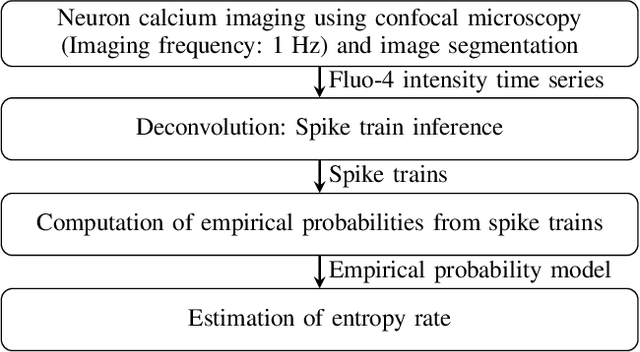

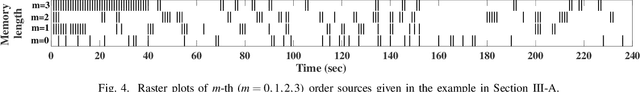

Abstract: Quantifying correlations in neuron populations helps us understand neural coding rules. Such quantification could also reveal how neurons encode information under normal conditions and in diseases such as Alzheimer's and Parkinson's. When neurons communicate by transmitting spikes, the calcium concentration within them inherently changes. Accordingly, calcium spike trains would also be correlated, and they could have heterogeneous memory structures. In this context, estimating the mutual information rate between calcium spike trains assumes primary significance. However, such estimation is difficult with available methods, which require long blocks to converge, overlooking the fact that neuronal information changes within short time windows. Against this backdrop, we propose a faster method that exploits the memory structures in a pair of calcium spike trains to quantify the mutual information shared between them. Our method shows superior performance on example Markov processes as well as on experimental spike trains. Such mutual information rate analysis could be used to identify signatures of neuronal behavior in large populations under normal and abnormal conditions.
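One simple way to estimate a mutual information rate from empirical probabilities uses the identity I = h(X) + h(Y) - h(X, Y), with each entropy rate approximated by the empirical conditional entropy of the next symbol given the previous k symbols. The sketch below follows that route for binary calcium spike trains. It is a generic plug-in estimator written under these assumptions, not necessarily the authors' method, and the context length k and function names are illustrative.

```python
from collections import Counter
from math import log2
from typing import Hashable, Sequence


def conditional_entropy_rate(seq: Sequence[Hashable], k: int) -> float:
    """Plug-in estimate of H(X_t | previous k symbols), in bits per symbol."""
    if len(seq) <= k:
        raise ValueError("sequence must be longer than the context length k")
    context_counts: Counter = Counter()
    joint_counts: Counter = Counter()
    for t in range(k, len(seq)):
        context = tuple(seq[t - k:t])
        context_counts[context] += 1
        joint_counts[(context, seq[t])] += 1
    n = len(seq) - k
    # H(X_t | C) = -sum over (c, x) of p(c, x) * log2 p(x | c)
    return -sum(
        (count / n) * log2(count / context_counts[context])
        for (context, _), count in joint_counts.items()
    )


def mutual_information_rate(x: Sequence[int], y: Sequence[int], k: int) -> float:
    """Estimate the MI rate as h(X) + h(Y) - h(X, Y), all with context length k."""
    if len(x) != len(y):
        raise ValueError("spike trains must have equal length")
    joint = list(zip(x, y))  # the pair process (X_t, Y_t)
    return (conditional_entropy_rate(x, k) + conditional_entropy_rate(y, k)
            - conditional_entropy_rate(joint, k))
```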

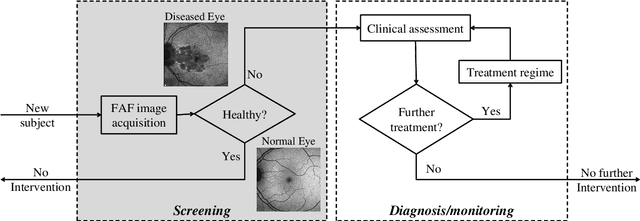

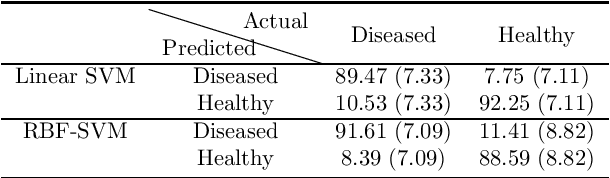

Efficient Screening of Diseased Eyes based on Fundus Autofluorescence Images using Support Vector Machine

Apr 17, 2021

Abstract: A variety of vision ailments are associated with geographic atrophy (GA) in the foveal region of the eye. In current clinical practice, the ophthalmologist manually detects the potential presence of such GA in fundus autofluorescence (FAF) images and hence diagnoses the disease, when relevant. However, given the general scarcity of ophthalmologists relative to the large number of subjects seeking eye care, especially in remote regions, it is imperative to develop methods that direct expert time and effort to medically significant cases. Further, subjects from disadvantaged backgrounds or remote localities, who face considerable economic and physical barriers to consulting trained ophthalmologists, tend to seek medical attention only after being reasonably certain that an adverse condition exists. To serve the interests of both the ophthalmologist and the potential patient, we propose a screening step in which healthy and diseased eyes are algorithmically differentiated with limited input only from optometrists, who are relatively more abundant. Specifically, an optometrist places an early treatment diabetic retinopathy study (ETDRS) grid on each FAF image, based on which sectoral statistics are automatically collected. Using these statistics as features, we propose to classify healthy and diseased eyes with an algorithm trained on available medical records. In this connection, we demonstrate the efficacy of support vector machines (SVMs). Specifically, we consider SVMs with linear as well as radial basis function (RBF) kernels, and observe satisfactory performance from both variants. Of the two, we recommend the RBF kernel in view of its slightly higher classification accuracy (90.55% at a standard training-to-test ratio of 80:20) and practical class-conditional costs.
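The ETDRS grid consists of three concentric circles of 1, 3 and 6 mm diameter centered on the fovea, with the inner and outer rings split into four quadrants, giving nine subfields. The sketch below computes one statistic (the mean intensity) per subfield from a grayscale FAF image, given the grid center placed by the optometrist and an assumed pixels-per-millimetre scale. Which statistics the authors actually collect is not stated here, so the choice of the mean, the quadrant naming by image direction, and all function names are assumptions.

```python
from math import atan2, degrees, hypot
from typing import Dict, List, Optional

# ETDRS grid radii in millimetres (circle diameters 1, 3 and 6 mm).
CENTRAL_R, INNER_R, OUTER_R = 0.5, 1.5, 3.0


def etdrs_sector(dx_mm: float, dy_mm: float) -> Optional[str]:
    """Name of the ETDRS subfield containing an offset from the grid center.

    dy_mm is measured upward (toward the top of the image). Quadrants are named
    by image direction; mapping left/right to nasal/temporal depends on the eye.
    """
    r = hypot(dx_mm, dy_mm)
    if r <= CENTRAL_R:
        return "central"
    if r > OUTER_R:
        return None  # outside the grid
    ring = "inner" if r <= INNER_R else "outer"
    angle = degrees(atan2(dy_mm, dx_mm)) % 360.0  # 0 = right, 90 = up
    if 45.0 <= angle < 135.0:
        quadrant = "up"
    elif 135.0 <= angle < 225.0:
        quadrant = "left"
    elif 225.0 <= angle < 315.0:
        quadrant = "down"
    else:
        quadrant = "right"
    return f"{ring}_{quadrant}"


def sectoral_means(image: List[List[float]], cx: float, cy: float,
                   px_per_mm: float) -> Dict[str, float]:
    """Mean intensity in each ETDRS subfield (a 9-value feature vector)."""
    sums: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for row_idx, row in enumerate(image):
        for col_idx, value in enumerate(row):
            dx = (col_idx - cx) / px_per_mm
            dy = (cy - row_idx) / px_per_mm  # image rows grow downward
            sector = etdrs_sector(dx, dy)
            if sector is None:
                continue
            sums[sector] = sums.get(sector, 0.0) + value
            counts[sector] = counts.get(sector, 0) + 1
    return {name: sums[name] / counts[name] for name in sums}
```

The resulting nine-value vectors could then be passed to an SVM, for example scikit-learn's `SVC(kernel="linear")` or `SVC(kernel="rbf")`, to compare the two kernel choices discussed in the abstract.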

Heterogeneity in Neuronal Calcium Spike Trains based on Empirical Distance

Mar 13, 2021

Abstract: Statistical similarities between neuronal spike trains could reveal significant information about complex underlying processing. In general, similarity between synchronous spike trains is relatively easy to identify. However, similar patterns may also appear asynchronously, and existing methods for identifying them tend to converge slowly and cannot be applied to short sequences. In response, we propose a Hellinger distance measure based on empirical probabilities, which we show to be as accurate as existing techniques, yet faster to converge, for synthetic as well as experimental spike trains. Further, we cluster pairs of neuronal spike trains based on statistical similarities and find two non-overlapping classes, which could indicate functional similarities among neurons. Significantly, our technique detected functional heterogeneity in pairs of neuronal responses with the same performance as existing techniques, while exhibiting faster convergence. We expect the proposed method to facilitate large-scale studies of functional clustering, especially those involving short sequences, which would in turn identify signatures of various diseases in terms of clustering patterns.
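A Hellinger distance between empirical distributions can be sketched as follows, assuming each spike train is summarized by the empirical probabilities of its overlapping binary words of a fixed length. Because such a distribution ignores where a word occurs, two trains containing the same patterns at different times score as similar. The word length and function names are illustrative assumptions, not the paper's parameters.

```python
from collections import Counter
from math import sqrt
from typing import Dict, Sequence, Tuple

Word = Tuple[int, ...]


def word_distribution(spikes: Sequence[int], length: int) -> Dict[Word, float]:
    """Empirical probabilities of overlapping words (blocks) of a given length."""
    words = [tuple(spikes[t:t + length]) for t in range(len(spikes) - length + 1)]
    if not words:
        raise ValueError("spike train is shorter than the word length")
    counts = Counter(words)
    return {w: c / len(words) for w, c in counts.items()}


def hellinger(p: Dict[Word, float], q: Dict[Word, float]) -> float:
    """Hellinger distance between two discrete distributions, in [0, 1]."""
    support = set(p) | set(q)
    total = sum((sqrt(p.get(w, 0.0)) - sqrt(q.get(w, 0.0))) ** 2 for w in support)
    return sqrt(total / 2.0)


def spike_train_distance(x: Sequence[int], y: Sequence[int], length: int = 3) -> float:
    """Compare two spike trains through their word distributions; word positions
    are discarded, so time-shifted (asynchronous) patterns still match."""
    return hellinger(word_distribution(x, length), word_distribution(y, length))
```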

Information Content in Neuronal Calcium Spike Trains: Entropy Rate Estimation based on Empirical Probabilities

Feb 01, 2021

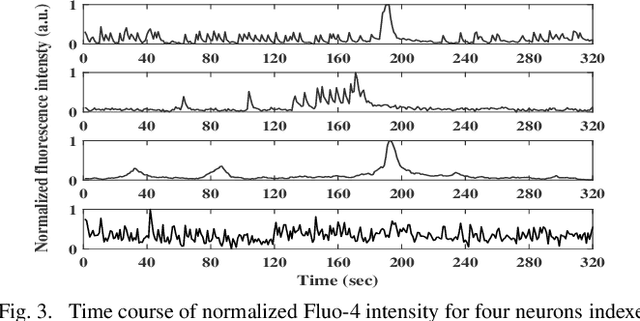

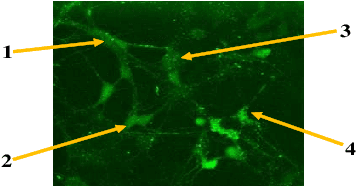

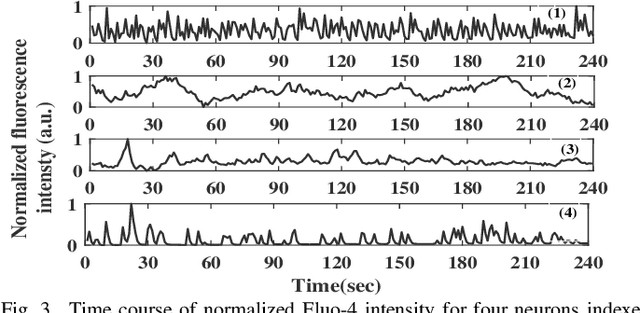

Abstract: Quantifying the information content, and its temporal variation, of intracellular calcium spike trains in neurons helps one understand functions such as memory, learning, and cognition. Such quantification could also reveal pathological signaling perturbations that potentially lead to devastating neurodegenerative conditions, including Parkinson's, Alzheimer's, and Huntington's diseases. Accordingly, estimating the entropy rate, an information-theoretic measure of information content, assumes primary significance. However, such estimation is challenging in the present context because, while the entropy rate is traditionally defined asymptotically for long blocks under the assumption of stationarity, neurons are known to encode information in short intervals, and the associated spike trains often exhibit nonstationarity. Against this backdrop, we propose an entropy rate estimator based on empirical probabilities that operates within windows short enough to ensure approximate stationarity. Specifically, our estimator, parameterized by the length of encoding contexts, attempts to model the underlying memory structures in neuronal spike trains. On an example Markov process, we compared the proposed method with versions of the Lempel-Ziv algorithm and with a stationary distribution method, and found the proposed method to exhibit higher accuracy and faster convergence. In experimentally recorded calcium responses of four hippocampal neurons, the proposed method also showed faster convergence. Significantly, our technique detected structural heterogeneity in the memory of the underlying processes in the responses of these neurons. We believe that the proposed method facilitates large-scale studies of such heterogeneity, which could in turn identify signatures of various diseases in terms of entropy rate estimates.
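A windowed entropy-rate estimator in the spirit of the abstract can be sketched as the empirical conditional entropy of the next spike given the previous k symbols (the encoding context), computed separately in consecutive windows. This is a generic plug-in estimator written under that assumption; the window length, k, and function names are hypothetical, and the comparison against Lempel-Ziv is not reproduced.

```python
from collections import Counter
from math import log2
from typing import List, Sequence


def entropy_rate(window: Sequence[int], k: int) -> float:
    """Plug-in estimate of H(X_t | X_{t-k}, ..., X_{t-1}) in bits per symbol."""
    if len(window) <= k:
        raise ValueError("window must be longer than the context length k")
    contexts: Counter = Counter()
    pairs: Counter = Counter()
    for t in range(k, len(window)):
        ctx = tuple(window[t - k:t])
        contexts[ctx] += 1
        pairs[(ctx, window[t])] += 1
    n = len(window) - k
    return -sum((c / n) * log2(c / contexts[ctx]) for (ctx, _), c in pairs.items())


def windowed_entropy_rates(spikes: Sequence[int], window: int, k: int) -> List[float]:
    """Entropy-rate estimates over consecutive non-overlapping windows, each
    assumed short enough to be treated as approximately stationary."""
    return [entropy_rate(spikes[s:s + window], k)
            for s in range(0, len(spikes) - window + 1, window)]
```

For example, a train that alternates regularly and then switches to a less predictable pattern yields a zero estimate in the first window and a higher one later, the kind of nonstationarity that windowing is meant to expose.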

Dictionary-based Monitoring of Premature Ventricular Contractions: An Ultra-Low-Cost Point-of-Care Service

May 24, 2017

Abstract: While cardiovascular diseases (CVDs) are prevalent across economic strata, the economically disadvantaged population is disproportionately affected because of the high cost of traditional CVD management. Accordingly, developing an ultra-low-cost alternative, affordable even to groups at the bottom of the economic pyramid, has emerged as a societal imperative. Against this backdrop, we propose an inexpensive yet accurate home-based electrocardiogram (ECG) monitoring service. Specifically, we seek to provide point-of-care monitoring of premature ventricular contractions (PVCs), a high frequency of which could indicate the onset of potentially fatal arrhythmia. Note that a traditional telecardiology system acquires the ECG and transmits it without processing to a professional diagnostic centre, nearly achieving the diagnostic accuracy of a bedside setup, albeit at a high bandwidth cost. In this context, we aim to reduce cost without significantly sacrificing reliability. To this end, we develop a dictionary-based algorithm that detects anomalous beats with high sensitivity, so that only those beats are transmitted. We further compress the transmitted beats using class-specific dictionaries, subject to suitable reconstruction/diagnostic fidelity. Such a scheme would not only reduce the overall bandwidth requirement, but also localise anomalous beats, thereby reducing physicians' burden. Finally, we evaluate the performance of the proposed system using Monte Carlo cross validation on the MIT/BIH arrhythmia database. In particular, with a sensitivity target of at most one undetected PVC in one hundred beats, and a percentage root mean squared difference (PRD) below 9% (a clinically acceptable level of fidelity), we achieved about a 99.15% reduction in bandwidth cost, equivalent to 118-fold savings over traditional telecardiology.
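The fidelity and savings figures can be checked with two short formulas: the percentage root-mean-squared difference, PRD = 100 × sqrt(Σ(x - x̂)² / Σx²), and the fold saving 1 / (1 - reduction). The sketch assumes the basic PRD definition without mean removal (some works use a mean-subtracted variant), and the function names are hypothetical. The final line shows that a 99.15% bandwidth reduction corresponds to roughly 118-fold savings, consistent with the figure in the abstract.

```python
from math import sqrt
from typing import Sequence


def prd_percent(original: Sequence[float], reconstructed: Sequence[float]) -> float:
    """Percentage root-mean-squared difference (basic form, no mean removal)."""
    if len(original) != len(reconstructed):
        raise ValueError("beats must have equal length")
    num = sum((x - y) ** 2 for x, y in zip(original, reconstructed))
    den = sum(x ** 2 for x in original)
    return 100.0 * sqrt(num / den)


def fold_savings(reduction_percent: float) -> float:
    """Convert a percentage reduction in transmitted data into an N-fold saving."""
    return 1.0 / (1.0 - reduction_percent / 100.0)


print(round(fold_savings(99.15)))  # 118
```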

Euclidean Auto Calibration of Camera Networks: Baseline Constraint Removes Scale Ambiguity

Oct 06, 2015

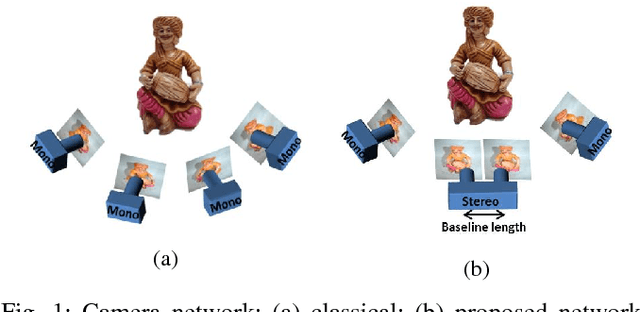

Abstract: Metric auto calibration of a camera network from multiple views has been reported by several authors. The resulting 3D reconstruction recovers shape faithfully, but not scale. However, preserving scale becomes critical in applications such as multi-party telepresence, where multiple 3D scenes need to be fused into a single coordinate system. In this context, we propose a camera network configuration that includes a stereo pair with a known baseline separation, and analytically demonstrate Euclidean auto calibration of such a network under mild conditions. Further, we experimentally validate our theory using a four-camera network. Importantly, our method not only recovers scale but also compares favorably with the well-known Zhang and Pollefeys methods in terms of shape recovery.
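The role of the known baseline can be illustrated with a minimal sketch: a metric reconstruction is correct up to a global scale, so if the two stereo camera centers are reconstructed a distance d apart while their true separation is B, multiplying every reconstructed coordinate by B / d yields Euclidean coordinates. This shows only the final scale-fixing step under that assumption, not the auto-calibration itself, and the function name is hypothetical.

```python
from math import dist
from typing import List, Tuple

Point = Tuple[float, float, float]


def rescale_to_metric(points: List[Point], center_a: Point, center_b: Point,
                      baseline: float) -> List[Point]:
    """Scale an up-to-scale 3D reconstruction so that the reconstructed stereo
    camera centers end up exactly `baseline` apart (hypothetical helper)."""
    reconstructed = dist(center_a, center_b)
    if reconstructed == 0.0:
        raise ValueError("camera centers coincide; the scale is undefined")
    s = baseline / reconstructed  # one global factor removes the scale ambiguity
    return [(s * x, s * y, s * z) for x, y, z in points]


ca, cb = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)  # reconstructed stereo centers
print(rescale_to_metric([ca, cb, (1.0, 1.0, 1.0)], ca, cb, 100.0))
# [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (50.0, 50.0, 50.0)]
```

Passing the camera centers through the same function, as in the example, keeps cameras and scene points in one consistently scaled frame, which is what fusing several reconstructions into a single coordinate system requires.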
