Sophia Abraham

Temporal Egonet Subgraph Transitions

Mar 26, 2023

Abstract: How do we summarize dynamic behavioral interactions? We introduce a possible node-embedding-based solution to this question: temporal egonet subgraph transitions.

Adaptive Autonomy in Human-on-the-Loop Vision-Based Robotics Systems

Mar 28, 2021

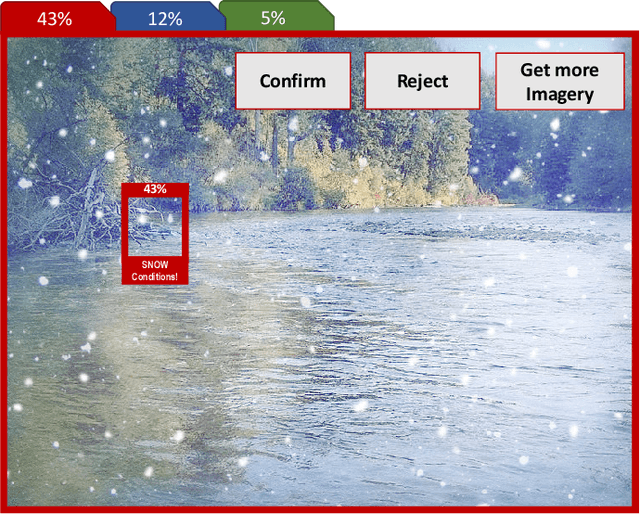

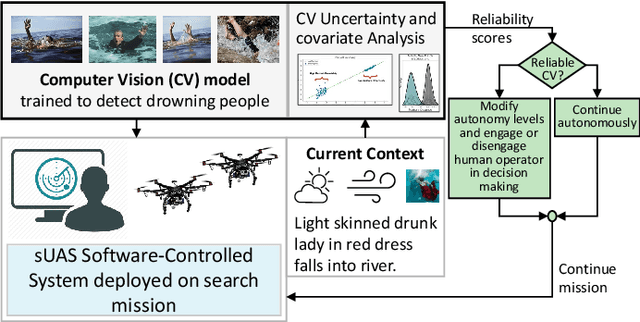

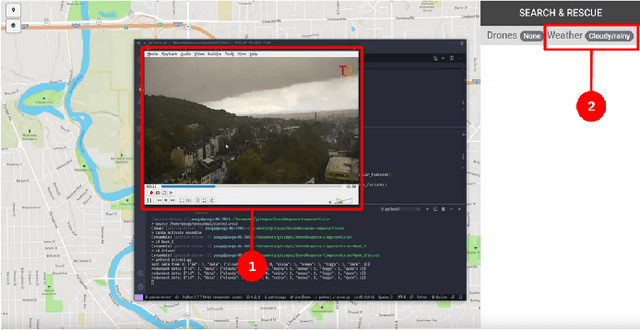

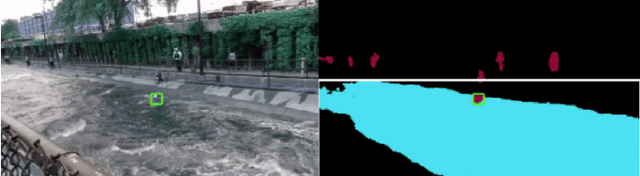

Abstract: Computer vision approaches are widely used by autonomous robotic systems to sense the world around them and to guide their decision making as they perform diverse tasks such as collision avoidance, search and rescue, and object manipulation. High accuracy is critical, particularly for Human-on-the-loop (HoTL) systems where decisions are made autonomously by the system, and humans play only a supervisory role. Failures of the vision model can lead to erroneous decisions with potentially life-or-death consequences. In this paper, we propose a solution based upon adaptive autonomy levels, whereby the system detects loss of reliability of these models and responds by temporarily lowering its own autonomy levels and increasing engagement of the human in the decision-making process. Our solution is applicable for vision-based tasks in which humans have time to react and provide guidance. When implemented, our approach would estimate the reliability of the vision task by considering uncertainty in its model, and by performing covariate analysis to determine when the current operating environment is ill-matched to the model's training data. We provide examples from DroneResponse, in which small Unmanned Aerial Systems are deployed for Emergency Response missions, and show how the vision model's reliability would be used in addition to confidence scores to drive and specify the behavior and adaptation of the system's autonomy. This workshop paper outlines our proposed approach and describes open challenges at the intersection of Computer Vision and Software Engineering for the safe and reliable deployment of vision models in the decision making of autonomous systems.

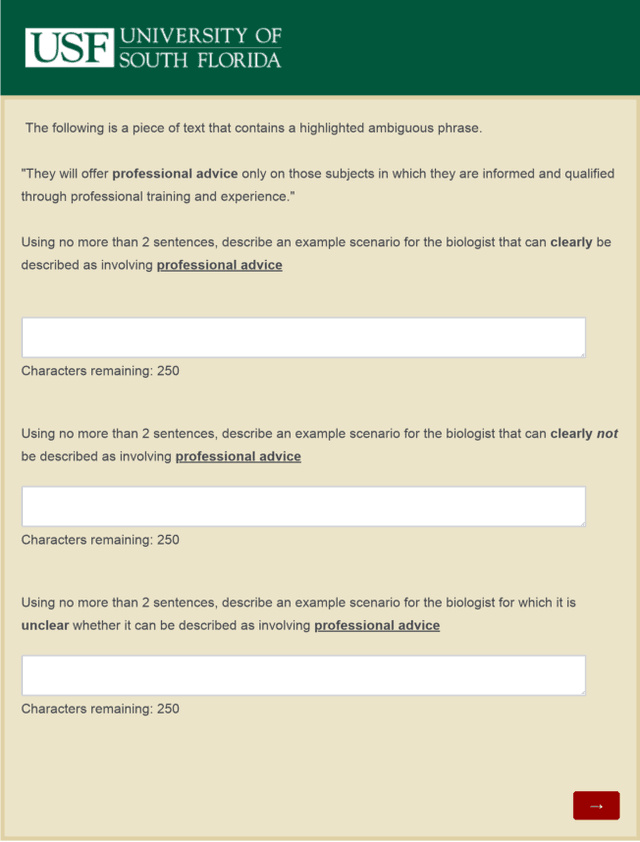

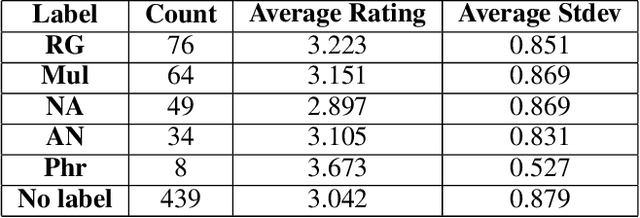

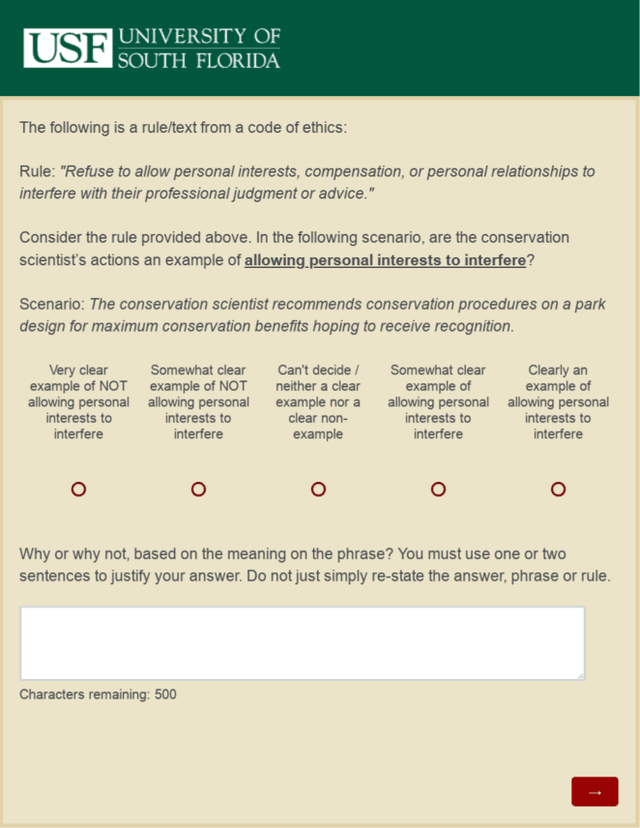

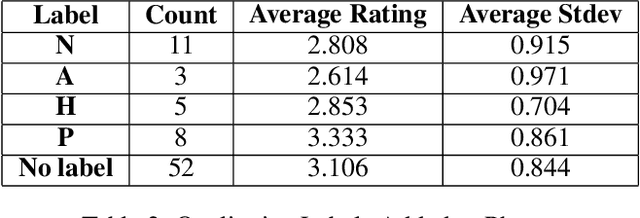

Scenarios and Recommendations for Ethical Interpretive AI

Nov 05, 2019

Abstract: Artificially intelligent systems, given a set of non-trivial ethical rules to follow, will inevitably be faced with scenarios which call into question the scope of those rules. In such cases, human reasoners typically will engage in interpretive reasoning, where interpretive arguments are used to support or attack claims that some rule should be understood a certain way. Artificially intelligent reasoners, however, currently lack the ability to carry out human-like interpretive reasoning, and we argue that bridging this gulf is of tremendous importance to human-centered AI. In order to better understand how future artificial reasoners capable of human-like interpretive reasoning must be developed, we have collected a dataset of ethical rules, scenarios designed to invoke interpretive reasoning, and interpretations of those scenarios. We perform a qualitative analysis of our dataset, and summarize our findings in the form of practical recommendations.
