Soham Shah

EMAFusion: A Self-Optimizing System for Seamless LLM Selection and Integration

Apr 14, 2025

Abstract: While recent advances in large language models (LLMs) have significantly enhanced performance across diverse natural language tasks, the high computational and financial costs associated with their deployment remain substantial barriers. Existing routing strategies partially alleviate this challenge by assigning queries to cheaper or specialized models, but they frequently rely on extensive labeled data or fragile task-specific heuristics. Conversely, fusion techniques aggregate multiple LLM outputs to boost accuracy and robustness, yet they often exacerbate cost and may reinforce shared biases. We introduce EMAFusion, a new framework that self-optimizes for seamless LLM selection and reliable execution for a given query. Specifically, EMAFusion integrates a taxonomy-based router for familiar query types, a learned router for ambiguous inputs, and a cascading approach that progressively escalates from cheaper to more expensive models based on multi-judge confidence evaluations. Through extensive evaluations, we find EMAFusion outperforms the best individual models by over 2.6 percentage points (94.3% vs. 91.7%), while being 4× cheaper than the average cost. EMAFusion further achieves a remarkable 17.1 percentage point improvement over models like GPT-4 at less than 1/20th the cost. Our combined routing approach delivers 94.3% accuracy compared to taxonomy-based (88.1%) and learned model predictor-based (91.7%) methods alone, demonstrating the effectiveness of our unified strategy. Finally, EMAFusion supports flexible cost-accuracy trade-offs, allowing users to balance their budgetary constraints and performance needs.
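The cascading step described in this abstract (escalating from cheaper to more expensive models until a multi-judge confidence check passes) can be illustrated with a minimal sketch. It assumes each model tier is a plain Python callable with a known per-query cost, that each judge returns a confidence in [0, 1], and that judge scores are combined by a simple mean against a fixed threshold. The names Tier, CascadeResult, cascade and threshold are hypothetical; the abstract does not say how EMAFusion aggregates judges or sets its threshold, and the taxonomy-based and learned routers are not modeled here.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Optional, Sequence

# Hypothetical interfaces: a model maps a query to an answer, and a judge
# scores a (query, answer) pair with a confidence in [0, 1].
Model = Callable[[str], str]
Judge = Callable[[str, str], float]


@dataclass
class Tier:
    name: str
    cost: float  # assumed per-query cost, used for ordering and accounting
    model: Model


@dataclass
class CascadeResult:
    answer: str
    tier: str
    confidence: float
    total_cost: float


def cascade(query: str, tiers: Sequence[Tier], judges: Sequence[Judge],
            threshold: float = 0.8) -> CascadeResult:
    """Query tiers from cheapest to most expensive and stop at the first
    answer whose mean judge confidence reaches `threshold`. If no tier is
    confident enough, return the most expensive tier's answer."""
    if not tiers or not judges:
        raise ValueError("cascade needs at least one tier and one judge")
    total_cost = 0.0
    result: Optional[CascadeResult] = None
    for tier in sorted(tiers, key=lambda t: t.cost):
        answer = tier.model(query)
        total_cost += tier.cost
        # Multi-judge evaluation, aggregated here by a simple mean (an assumption).
        confidence = mean(judge(query, answer) for judge in judges)
        result = CascadeResult(answer, tier.name, confidence, total_cost)
        if confidence >= threshold:
            break  # confident enough: skip the more expensive tiers
    assert result is not None  # guaranteed because tiers is non-empty
    return result


if __name__ == "__main__":
    # Toy tiers and judges, purely for illustration.
    small = Tier("small", 1.0, lambda q: "maybe 4")
    large = Tier("large", 20.0, lambda q: "4")
    judges = [
        lambda q, a: 1.0 if a == "4" else 0.3,
        lambda q, a: 0.9 if a.isdigit() else 0.2,
    ]
    print(cascade("What is 2 + 2?", [large, small], judges))
```

In this sketch, raising threshold sends more queries on to the expensive tiers, which is one simple way to expose the kind of cost-accuracy trade-off the abstract mentions.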

Distilling Script Knowledge from Large Language Models for Constrained Language Planning

May 22, 2023

Abstract: In everyday life, humans often plan their actions by following step-by-step instructions in the form of goal-oriented scripts. Previous work has exploited language models (LMs) to plan for abstract goals of stereotypical activities (e.g., "make a cake"), but leaves more specific goals with multi-faceted constraints understudied (e.g., "make a cake for diabetics"). In this paper, we define the task of constrained language planning for the first time. We propose an overgenerate-then-filter approach to improve large language models (LLMs) on this task, and use it to distill a novel constrained language planning dataset, CoScript, which consists of 55,000 scripts. Empirical results demonstrate that our method significantly improves the constrained language planning ability of LLMs, especially on constraint faithfulness. Furthermore, CoScript is demonstrated to be quite effective in endowing smaller LMs with constrained language planning ability.
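The overgenerate-then-filter idea can be sketched generically: sample several candidate scripts for a constrained goal, score each against the constraint, and keep only those that pass. This is a minimal sketch under stated assumptions, not the paper's implementation. The generator is a hypothetical stand-in for an LLM call, and constraint_score, a keyword-overlap proxy for constraint faithfulness, is an assumption; the abstract does not describe how the paper's filter actually scores candidates.

```python
import random
import re
from typing import Callable, List, Sequence

# Hypothetical stand-in for an LLM call: given a prompt and an RNG, return one
# candidate script as a list of steps.
Generator = Callable[[str, random.Random], List[str]]

# Small illustrative stopword list; a real filter would need far more care.
STOPWORDS = {"a", "an", "the", "for", "with", "of", "to", "and", "in"}


def keywords(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def constraint_score(script: Sequence[str], constraint: str) -> float:
    """Fraction of the constraint's keywords mentioned anywhere in the script:
    a crude, hypothetical proxy for constraint faithfulness."""
    wanted = keywords(constraint)
    if not wanted:
        return 1.0
    seen = set().union(*(keywords(step) for step in script))
    return len(wanted & seen) / len(wanted)


def overgenerate_then_filter(goal: str, constraint: str, generate: Generator,
                             k: int = 8, min_score: float = 1.0,
                             seed: int = 0) -> List[List[str]]:
    """Sample k candidate scripts, then keep only those that score at least
    min_score against the constraint, best first."""
    rng = random.Random(seed)
    prompt = f"{goal} ({constraint})"
    candidates = [generate(prompt, rng) for _ in range(k)]  # overgenerate
    scored = [(constraint_score(c, constraint), c) for c in candidates]
    kept = [(s, c) for s, c in scored if s >= min_score]    # filter
    kept.sort(key=lambda sc: sc[0], reverse=True)
    return [c for _, c in kept]


def toy_generator(prompt: str, rng: random.Random) -> List[str]:
    """Hypothetical generator that ignores the constraint about half the time."""
    steps = ["Preheat the oven", "Mix flour and eggs", "Bake for 30 minutes"]
    if rng.random() < 0.5:
        steps.insert(1, "Replace sugar with a sweetener suitable for diabetics")
    return steps


if __name__ == "__main__":
    for script in overgenerate_then_filter("make a cake", "for diabetics", toy_generator):
        print(script)
```

Raising k spends more generation cost for a better chance that some candidates satisfy the constraint, while min_score controls how strict the filter is.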

Embedded Systems and Computer Vision Techniques utilized in Spray Painting Robots: A Review

Oct 02, 2020

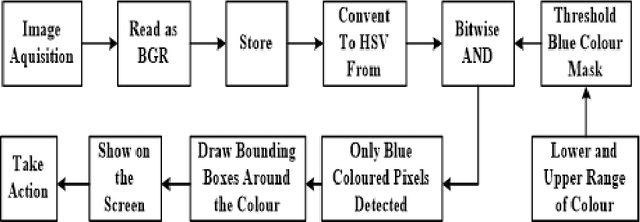

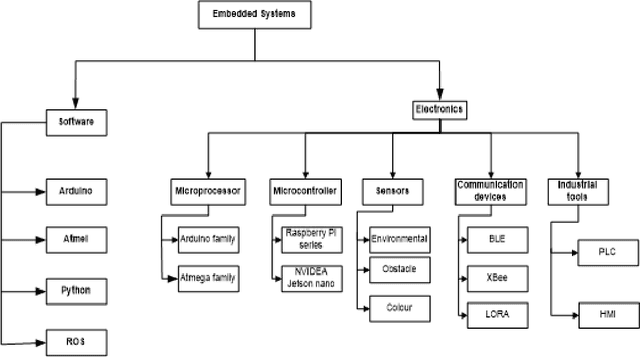

Abstract: The advent of the machine era has reduced human interaction in many tasks, and machines have become far more prevalent over the last decade. The demand for greater effectiveness, durability and reliability in robots has risen just as sharply. This paper covers the embedded-system and computer vision methodologies, techniques and innovations used in spray painting robots. There have been many advances in painting robots for high-rise buildings, wall painting, road marking and similar applications. The review focuses on image processing, computational and computer vision techniques that can be applied to such products to increase their performance significantly. Image analysis, filtering, enhancement, object detection, edge detection, path and localization methods, and the fine-tuning of parameters are discussed in depth for use in developing such products. Dynamic system design is also discussed in detail, since it reduces human interaction, supports environmental sustainability and yields better quality of work. Finally, embedded systems comprising microcontrollers, processors, communication devices, sensors and actuators, and the software that drives them are explained for end-to-end development and for improving the accuracy and precision of spray painting robots.