Sofiane Ouaari

Robust Representation Learning for Privacy-Preserving Machine Learning: A Multi-Objective Autoencoder Approach

Sep 08, 2023

Abstract: Several domains increasingly rely on machine learning in their applications. The resulting heavy dependence on data has led to the emergence of various laws and regulations around data ethics and privacy, and to growing awareness of the need for privacy-preserving machine learning (ppML). Current ppML techniques either rely purely on cryptography, such as homomorphic encryption, or introduce noise into the input, such as differential privacy. The main criticism of these techniques is that they are either too slow or trade off a model's performance for improved confidentiality. To address this performance reduction, we aim to leverage robust representation learning as a way of encoding our data while optimizing the privacy-utility trade-off. Our method centers on training autoencoders in a multi-objective manner and then concatenating the latent and learned features from the encoding part as the encoded form of our data. Such a deep learning-powered encoding can then safely be sent to a third party for intensive training and hyperparameter tuning. With our proposed framework, we can share our data and use third-party tools without the threat of revealing its original form. We empirically validate our results in unimodal and multimodal settings, the latter following a vertical splitting system, and show improved performance over the state of the art.

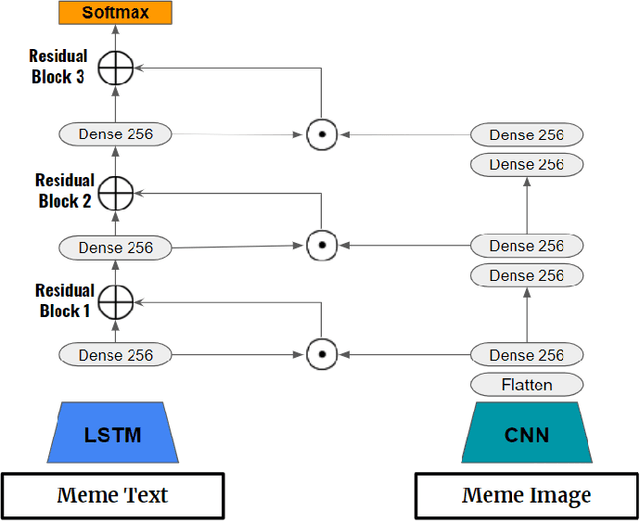

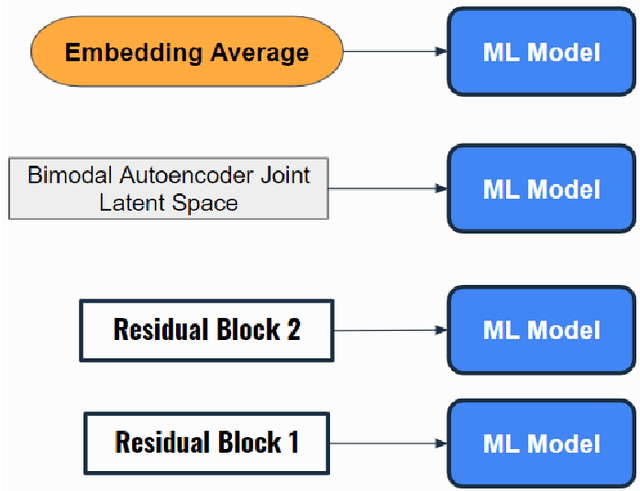

Multimodal Feature Extraction for Memes Sentiment Classification

Jul 07, 2022

Abstract: In this study, we propose feature extraction for multimodal meme classification using deep learning approaches. A meme is usually a photo or video with text, shared by the younger generation on social media platforms, that expresses a culturally relevant idea. Since memes are an efficient way to express emotions and feelings, a classifier that can identify the sentiment behind a meme is important. To make the learning process more efficient, reduce the likelihood of overfitting, and improve the generalizability of the model, one needs a good approach for joint feature extraction from all modalities. In this work, we propose to use different multimodal neural network approaches for multimodal feature extraction and to use the extracted features to train a classifier that identifies the sentiment in a meme.
