Anti-bullying Adaptive Cruise Control: A proactive right-of-way protection approach

Dec 14, 2024

Abstract: Current Adaptive Cruise Control (ACC) systems are vulnerable to "road bullying" such as cut-ins. This paper proposes an Anti-bullying Adaptive Cruise Control (AACC) approach with proactive right-of-way protection ability. It has the following features: i) enhanced capability to prevent bullying from cut-ins; ii) optimal but not unsafe; iii) adaptive to various driving styles of cut-in vehicles; iv) capable of real-time field implementation. The proposed approach can identify other road users' driving styles online and conduct game-based motion planning for right-of-way protection. A detailed investigation of the simulation results shows that the proposed approach can prevent bullying from cut-ins and adapt to different cut-in vehicles' driving styles. The proposed approach is capable of enhancing travel efficiency by up to 29.55% under different cut-in gaps and can strengthen driving safety compared with the current ACC controller. The proposed approach is flexible and robust against traffic congestion levels. It can improve mobility by up to 11.93% and robustness by 8.74% in traffic flow. Furthermore, the proposed approach can support real-time field implementation by ensuring a computation time of less than 50 milliseconds.

Accelerating the Evolution of Personalized Automated Lane Change through Lesson Learning

May 13, 2024

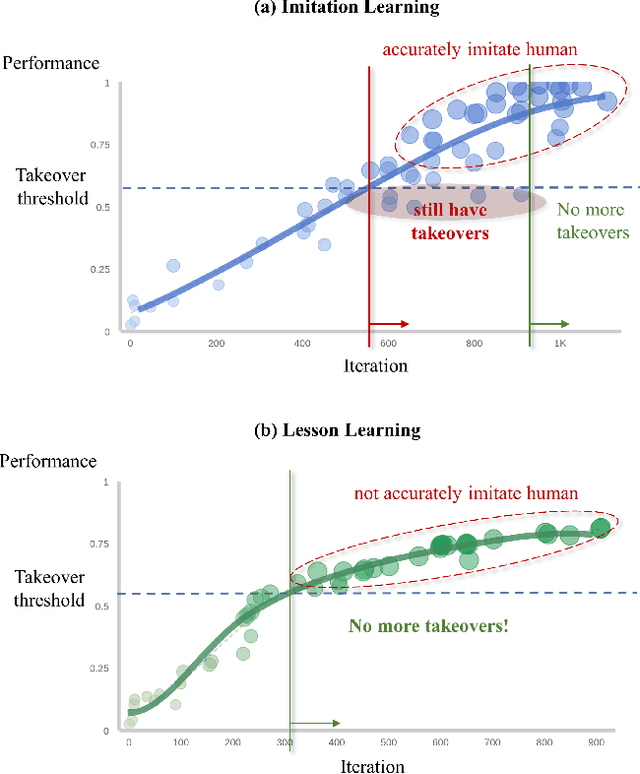

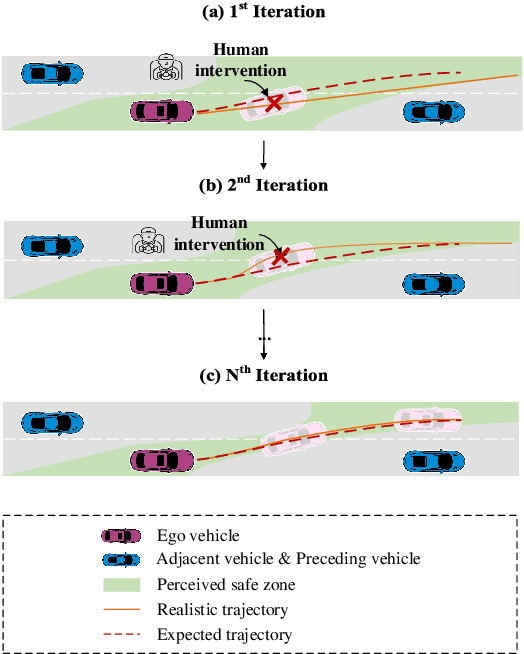

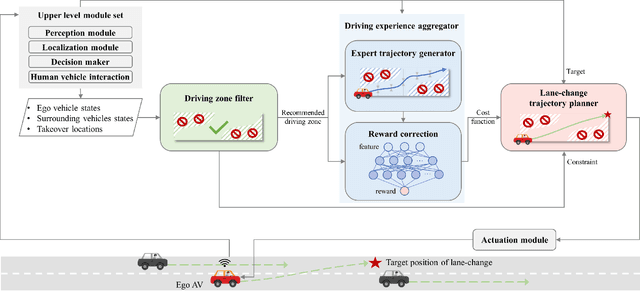

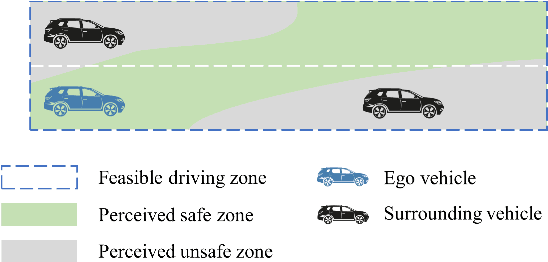

Abstract: Personalization is crucial for the widespread adoption of advanced driver assistance systems. To match each user's preference, online evolution capability is a must. However, conventional evolution methods learn from naturalistic driving data, which requires a lot of computing power and cannot be applied online. To address this challenge, this paper proposes a lesson learning approach: learning from the driver's takeover interventions. By leveraging online takeover data, the driving zone is generated using Gaussian discriminant analysis to ensure perceived safety. Real-time corrections to trajectory planning rewards are enacted through apprenticeship learning. Guided by the objective of optimizing rewards within the constraints of the driving zone, this approach employs model predictive control for trajectory planning. This lesson learning framework is highlighted for its faster evolution capability, adeptness at accumulating experience, assurance of perceived safety, and computational efficiency. Simulation results demonstrate that the proposed system consistently achieves successful customization without further takeover interventions. Accumulated experience yields a 24% enhancement in evolution efficiency. The average number of learning iterations is only 13.8. The average computation time is 0.08 seconds.
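To make the Gaussian discriminant analysis step more concrete, here is a minimal sketch of how takeover data could be turned into an accept/reject boundary. It is not the authors' implementation. The single feature (a lane-change gap in seconds), the sample values, the shared-variance assumption, and the names `fit_gda` and `posterior_accept` are all hypothetical and chosen only for illustration.

```python
import math

def fit_gda(samples, labels):
    """Fit a two-class, 1-D Gaussian discriminant model with a shared variance."""
    groups = {0: [], 1: []}
    for x, y in zip(samples, labels):
        groups[y].append(x)
    n = len(samples)
    priors = {c: len(xs) / n for c, xs in groups.items()}
    means = {c: sum(xs) / len(xs) for c, xs in groups.items()}
    # Pooled variance: squared deviation of each sample from its own class mean.
    var = sum((x - means[y]) ** 2 for x, y in zip(samples, labels)) / n
    return priors, means, var

def posterior_accept(x, priors, means, var):
    """Posterior probability of class 1 (driver accepts) at feature value x."""
    def weighted_density(c):
        norm = math.sqrt(2 * math.pi * var)
        return priors[c] * math.exp(-(x - means[c]) ** 2 / (2 * var)) / norm
    p0, p1 = weighted_density(0), weighted_density(1)
    return p1 / (p0 + p1)

# Hypothetical data: gap in seconds; label 0 = driver took over, 1 = no takeover.
gaps = [0.8, 1.0, 1.2, 1.4, 2.0, 2.2, 2.4, 2.6]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
priors, means, var = fit_gda(gaps, labels)

# Gaps with a high posterior would fall inside the (hypothetical) driving zone.
for gap in (1.0, 1.7, 2.5):
    print(gap, round(posterior_accept(gap, priors, means, var), 3))
```

In this toy setup the boundary sits midway between the two class means because the priors are equal and the variance is shared. A real driving zone would presumably use several features and per-driver data.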
