Snehal Walunj

Enhancing Object Detection with Hybrid dataset in Manufacturing Environments: Comparing Federated Learning to Conventional Techniques

Aug 16, 2024

Abstract: Federated Learning (FL) has garnered significant attention in manufacturing for its robust model development and privacy-preserving capabilities. This paper contributes to research on the robustness of FL models in object detection by presenting a comparative study with conventional techniques, using a hybrid dataset for small object detection. Our findings demonstrate the superior performance of FL over centralized training models and different deep learning techniques when evaluated on test data recorded in a different environment, with varying object viewpoints, lighting conditions, and cluttered backgrounds, among other factors. These results highlight the potential of FL for achieving robust global models that perform efficiently even in unseen environments. The study provides valuable insights for deploying resilient object detection models in manufacturing environments.
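As a rough illustration of how a global model can be formed in FL without pooling raw data, the sketch below averages client weights in proportion to each client's sample count (the common federated averaging scheme). The abstract does not state which aggregation rule the paper uses, so this is an assumption, and `federated_average` and the toy weight layout are hypothetical names chosen for the example.

```python
from typing import Dict, List, Tuple

# One client update: (named weight vectors, number of local training samples).
ClientUpdate = Tuple[Dict[str, List[float]], int]


def federated_average(client_updates: List[ClientUpdate]) -> Dict[str, List[float]]:
    """Sample-weighted average of client weights (assumed aggregation rule)."""
    total_samples = sum(n for _, n in client_updates)
    if total_samples <= 0:
        raise ValueError("at least one client must report training samples")

    # Start from zeros with the same layout as the first client's weights.
    first_weights, _ = client_updates[0]
    global_weights = {name: [0.0] * len(values) for name, values in first_weights.items()}

    for weights, n in client_updates:
        share = n / total_samples  # this client's contribution to the global model
        for name, values in weights.items():
            for i, value in enumerate(values):
                global_weights[name][i] += share * value
    return global_weights


# Two hypothetical clients; only weights and sample counts leave each site.
updates = [
    ({"w": [1.0, 2.0]}, 1),
    ({"w": [3.0, 4.0]}, 3),
]
global_model = federated_average(updates)
```

Only model parameters and sample counts are exchanged here, which is the privacy-preserving property the abstract refers to.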

Enhancing Object Detection Performance for Small Objects through Synthetic Data Generation and Proportional Class-Balancing Technique: A Comparative Study in Industrial Scenarios

Jan 29, 2024

Abstract: Object Detection (OD) has proven to be a significant computer vision method for extracting localized class information and has multiple applications in industry. Although many state-of-the-art (SOTA) OD models perform well on medium and large objects, they tend to underperform on small objects. In most industrial use cases, it is difficult to collect and annotate data for small objects, as doing so is time-consuming and prone to human error. Additionally, such datasets are likely to be unbalanced, which often results in inefficient model convergence. To tackle this challenge, this study presents a novel approach that injects additional data points to improve the performance of OD models. With synthetic data generation, the difficulties of collecting and annotating small-object data can be minimized and a dataset with a balanced class distribution can be created. This paper discusses the effects of a simple proportional class-balancing technique that enables better anchor matching in OD models. The performance of the SOTA OD models YOLOv5, YOLOv7, and SSD was compared for combinations of real and synthetic datasets within an industrial use case.
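One simple way to picture the balancing step is to compute how many synthetic samples each class needs so that all classes reach the same count. The abstract does not define the paper's proportional class-balancing rule, so the sketch below is an assumed interpretation; `synthetic_samples_needed` and the class names are hypothetical.

```python
from typing import Dict, Optional


def synthetic_samples_needed(
    real_counts: Dict[str, int],
    target_per_class: Optional[int] = None,
) -> Dict[str, int]:
    """Number of synthetic samples to add per class to reach a common target.

    If no target is given, the largest real class sets the target
    (an assumption; the paper may use a different rule).
    """
    if not real_counts:
        return {}
    target = max(real_counts.values()) if target_per_class is None else target_per_class
    # Classes already at or above the target get no synthetic samples.
    return {label: max(0, target - count) for label, count in real_counts.items()}


# Hypothetical annotation counts from a real industrial dataset.
real_counts = {"screw": 120, "nut": 40, "washer": 15}
to_generate = synthetic_samples_needed(real_counts)
```

Under this assumption, the under-represented classes receive the most synthetic data, so the combined real and synthetic dataset ends up with an even class distribution.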

Small Object Detection for Near Real-Time Egocentric Perception in a Manual Assembly Scenario

Jun 11, 2021

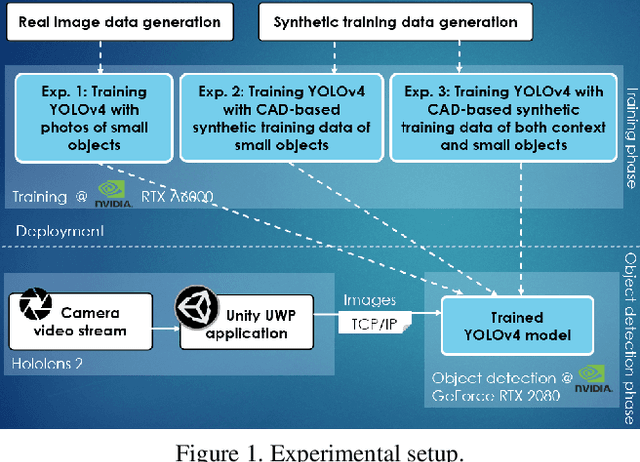

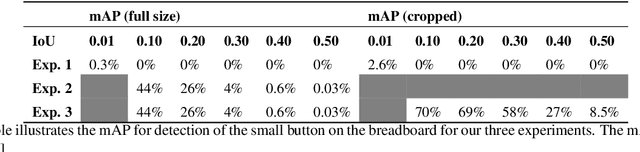

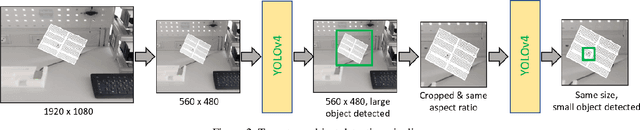

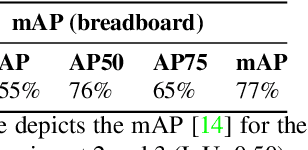

Abstract: Detecting small objects in video streams of head-worn augmented reality devices in near real-time is a huge challenge: training data is typically scarce, the input video stream can be of limited quality, and small objects are notoriously hard to detect. In industrial scenarios, however, it is often possible to leverage contextual knowledge for the detection of small objects. Furthermore, CAD data of objects are typically available and can be used to generate synthetic training data. We describe a near real-time small object detection pipeline for egocentric perception in a manual assembly scenario: we generate a training data set based on CAD data and realistic backgrounds in Unity. We then train a YOLOv4 model for a two-stage detection process: first, the context is recognized, then the small object of interest is detected. We evaluate our pipeline on the augmented reality device Microsoft HoloLens 2.
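The two-stage idea can be sketched as follows: a first detector finds the context region, the frame is cropped to that region, and a second detector searches the crop for the small object, with boxes mapped back to full-frame coordinates. This is a minimal sketch under assumptions, not the authors' pipeline: the detectors are passed in as plain callables (stand-ins for trained YOLOv4 models), the frame is a nested list of pixels, and all names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]        # (x_min, y_min, x_max, y_max) in pixels
Detection = Tuple[str, float, Box]     # (label, confidence, box)
Frame = Sequence[Sequence[int]]        # rows of pixel values
Detector = Callable[[Frame], List[Detection]]


def crop(frame: Frame, box: Box) -> List[Sequence[int]]:
    """Cut the region described by box out of the frame."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in frame[y0:y1]]


def two_stage_detect(
    frame: Frame,
    detect_context: Detector,
    detect_small_object: Detector,
) -> List[Detection]:
    """Stage 1 finds context regions; stage 2 looks for small objects inside them."""
    results: List[Detection] = []
    for _label, _score, ctx_box in detect_context(frame):
        offset_x, offset_y = ctx_box[0], ctx_box[1]
        for label, score, (x0, y0, x1, y1) in detect_small_object(crop(frame, ctx_box)):
            # Shift crop-relative boxes back into full-frame coordinates.
            full_box = (x0 + offset_x, y0 + offset_y, x1 + offset_x, y1 + offset_y)
            results.append((label, score, full_box))
    return results


# Stub detectors standing in for trained models.
def fake_context_detector(frame: Frame) -> List[Detection]:
    return [("panel", 0.9, (2, 3, 8, 9))]


def fake_small_object_detector(region: Frame) -> List[Detection]:
    return [("button", 0.8, (1, 1, 3, 3))]


frame = [[0] * 10 for _ in range(10)]
detections = two_stage_detect(frame, fake_context_detector, fake_small_object_detector)
```

Restricting the second stage to the context crop means the small object occupies a larger share of the detector's input, which is one plausible reason such a cascade helps with small objects.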
