Small Object Detection for Near Real-Time Egocentric Perception in a Manual Assembly Scenario

Paper and Code

Jun 11, 2021

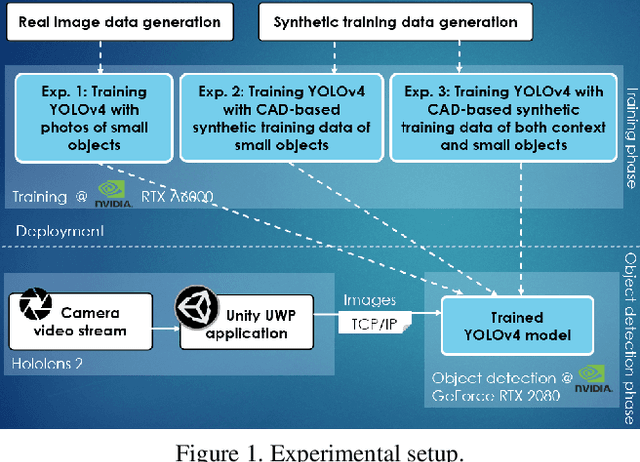

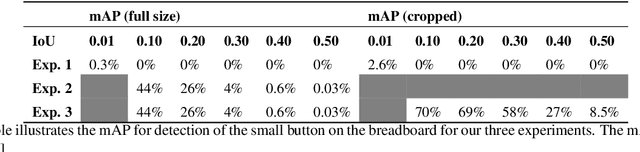

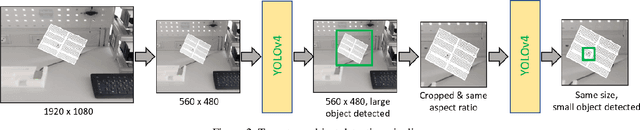

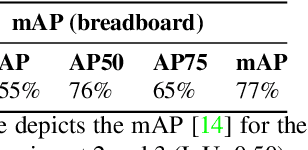

Detecting small objects in video streams of head-worn augmented reality devices in near real-time is a huge challenge: training data is typically scarce, the input video stream can be of limited quality, and small objects are notoriously hard to detect. In industrial scenarios, however, it is often possible to leverage contextual knowledge for the detection of small objects. Furthermore, CAD data of objects are typically available and can be used to generate synthetic training data. We describe a near real-time small object detection pipeline for egocentric perception in a manual assembly scenario: We generate a training data set based on CAD data and realistic backgrounds in Unity. We then train a YOLOv4 model for a two-stage detection process: First, the context is recognized, then the small object of interest is detected. We evaluate our pipeline on the augmented reality device Microsoft Hololens 2.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge