Sizai Hou

DeDe: Detecting Backdoor Samples for SSL Encoders via Decoders

Nov 25, 2024

Abstract:Self-supervised learning (SSL) is pervasively used to train high-quality upstream encoders on large amounts of unlabeled data. However, it has been found susceptible to backdoor attacks that pollute only a small portion of the training data. The victim encoders mismatch triggered inputs with target embeddings, e.g., match a triggered cat input to an airplane embedding, so that downstream tasks misbehave when the trigger is activated. Emerging backdoor attacks pose serious threats to different SSL paradigms such as contrastive learning and CLIP, while little research has been devoted to defending against such attacks. Moreover, the existing defenses fall short in detecting advanced stealthy backdoors. To address these limitations, we propose a novel detection mechanism, DeDe, which detects the activation of the backdoor mapping from the co-occurrence of the victim encoder and triggered inputs. Specifically, DeDe trains a decoder for the SSL encoder on an auxiliary dataset (which can be out-of-distribution or even slightly poisoned), such that for any triggered input that is mapped to the target embedding, the decoder outputs an image significantly different from the input. We empirically evaluate DeDe on both contrastive learning and CLIP models against various types of backdoor attacks, and demonstrate its superior performance over SOTA detection methods in both upstream detection performance and the ability to prevent backdoors in downstream tasks.
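The detection idea in this abstract (flag an input when the decoder's output differs strongly from it) can be illustrated with a minimal sketch. The abstract does not say how the difference is measured or thresholded, so the mean squared error, the fixed `threshold`, and the function names below are assumptions made for illustration only, not the authors' method. Images are represented as flat lists of pixel values, and the reconstructions are assumed to come from some already trained decoder applied to the encoder's embeddings.

```python
def reconstruction_error(image, reconstruction):
    """Mean squared error between two equally sized, non-empty flat pixel lists."""
    if not image or len(image) != len(reconstruction):
        raise ValueError("image and reconstruction must be non-empty and equal in length")
    return sum((a - b) ** 2 for a, b in zip(image, reconstruction)) / len(image)


def flag_suspicious(images, reconstructions, threshold):
    """Return one boolean per input: True when the reconstruction error exceeds threshold.

    Assumption: a large gap between an input and its decoded embedding is
    treated as a sign that the input activated a backdoor mapping.
    """
    return [
        reconstruction_error(x, r) > threshold
        for x, r in zip(images, reconstructions)
    ]


# Hypothetical toy data: the second reconstruction is far from its input.
images = [[0.0, 0.0], [1.0, 1.0]]
reconstructions = [[0.0, 0.1], [0.0, 0.0]]
flags = flag_suspicious(images, reconstructions, threshold=0.1)
```

In practice the threshold would have to be calibrated on data believed to be clean; the abstract gives no details on that step.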

Leveraging Label Information for Stealthy Data Stealing in Vertical Federated Learning

Apr 30, 2024

Abstract:We develop DMAVFL, a novel attack strategy that evades current detection mechanisms. The key idea is to integrate a discriminator with an auxiliary classifier that takes full advantage of the label information (which was completely ignored in previous attacks): on the one hand, label information helps to better characterize the embeddings of samples from distinct classes, yielding improved reconstruction performance; on the other hand, computing malicious gradients with label information better mimics honest training, making the malicious gradients indistinguishable from the honest ones and the attack much stealthier. Our comprehensive experiments demonstrate that DMAVFL significantly outperforms existing attacks and successfully circumvents SOTA defenses against malicious attacks. Additional ablation studies and evaluations on other defenses further underscore the robustness and effectiveness of DMAVFL.

Secure Federated Clustering

May 31, 2022

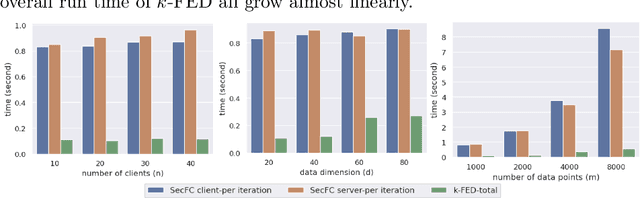

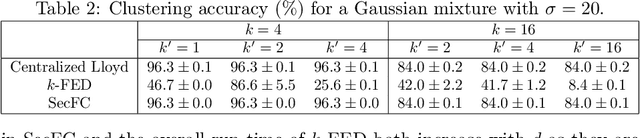

Abstract:We consider a foundational unsupervised learning task of $k$-means data clustering, in a federated learning (FL) setting consisting of a central server and many distributed clients. We develop SecFC, a secure federated clustering algorithm that simultaneously achieves 1) universal performance: no performance loss compared with clustering over centralized data, regardless of the data distribution across clients; 2) data privacy: each client's private data and the cluster centers are not leaked to other clients or the server. In SecFC, the clients perform Lagrange encoding on their local data and share the coded data in an information-theoretically private manner; then, leveraging the algebraic structure of the coding, the FL network exactly executes Lloyd's $k$-means heuristic over the coded data to obtain the final clustering. Experimental results on synthetic and real datasets demonstrate the universally superior performance of SecFC for different data distributions across clients, and its computational practicality for various combinations of system parameters. Finally, we propose an extension of SecFC to further provide membership privacy for all data points.
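For reference, Lloyd's $k$-means heuristic named in this abstract alternates an assignment step and a center-update step. The sketch below is the plain, centralized, non-secure version on raw points; it does not include the Lagrange encoding or any privacy mechanism of SecFC. The function names, the iteration cap, and the handling of empty clusters are illustrative assumptions.

```python
from math import dist


def assign(points, centers):
    """Index of the nearest center (Euclidean distance) for each point."""
    return [
        min(range(len(centers)), key=lambda j: dist(p, centers[j]))
        for p in points
    ]


def lloyd_kmeans(points, centers, iterations=10):
    """Plain Lloyd's heuristic; returns (centers, assignments)."""
    centers = [tuple(float(v) for v in c) for c in centers]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest center.
        labels = assign(points, centers)
        # Update step: each center moves to the mean of its members.
        new_centers = []
        for j, old in enumerate(centers):
            members = [p for p, a in zip(points, labels) if a == j]
            if members:
                new_centers.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members))
                )
            else:
                new_centers.append(old)  # assumption: leave an empty cluster's center in place
        if new_centers == centers:
            break  # centers stopped moving, so the heuristic has converged
        centers = new_centers
    # Recompute labels so they always match the returned centers.
    return centers, assign(points, centers)


# Hypothetical toy data: two well-separated groups in the plane.
points = [(0, 0), (0, 1), (10, 10), (10, 11)]
final_centers, labels = lloyd_kmeans(points, centers=[(0, 0), (10, 10)])
```

On this toy input the two groups are recovered after one update. According to the abstract, SecFC executes the same heuristic exactly, but over coded data rather than raw points.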
