Sindhu Ghanta

A Study of Data Augmentation Techniques to Overcome Data Scarcity in Wound Classification using Deep Learning

Nov 04, 2024

Abstract: Chronic wounds are a significant burden on individuals and the healthcare system, affecting millions of people and incurring high costs. Wound classification using deep learning techniques is a promising approach for faster diagnosis and treatment initiation. However, the lack of high-quality data to train ML models is a major obstacle to realizing the potential of ML in wound care. In fact, data limitations are the biggest challenge in studies using medical or forensic imaging today. We study data augmentation techniques that can be used to overcome these data scarcity limitations and unlock the potential of deep-learning-based solutions. We explore a range of data augmentation techniques, from geometric transformations of wound images to advanced GANs, to enrich and expand datasets. Using the Keras, TensorFlow, and Pandas libraries, we implemented data augmentation techniques that can generate realistic wound images. We show that geometric data augmentation can improve classification performance, measured by F1 score, by up to 11% on top of state-of-the-art models across several key classes of wounds. Our experiments with GAN-based augmentation demonstrate the viability of using DE-GANs to generate wound images with richer variations. Our study and results show that data augmentation is a valuable privacy-preserving tool with huge potential to overcome data scarcity limitations, and we believe it will be part of any real-world ML-based wound care system.
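
The geometric augmentation described above can be illustrated with a minimal, library-free sketch. This is not the authors' Keras/TensorFlow pipeline. It assumes a grayscale image stored as a list of pixel rows, and the helper names (`hflip`, `vflip`, `rot90`, `augment`) are hypothetical. It also assumes the wound class does not depend on orientation, so that each transform preserves the label and one labeled image yields several training examples.

```python
from typing import List

# Hypothetical image representation: a list of rows of grayscale pixel values.
Image = List[List[int]]


def hflip(img: Image) -> Image:
    """Mirror the image left-to-right."""
    return [list(reversed(row)) for row in img]


def vflip(img: Image) -> Image:
    """Mirror the image top-to-bottom."""
    return [list(row) for row in reversed(img)]


def rot90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    # Reverse the row order, then transpose: row i of the result is
    # column i of the input read from bottom to top.
    return [list(col) for col in zip(*img[::-1])]


def augment(img: Image) -> List[Image]:
    """Return the original image plus label-preserving geometric variants."""
    variants = [img, hflip(img), vflip(img)]
    rotated = img
    for _ in range(3):  # rotations by 90, 180 and 270 degrees
        rotated = rot90(rotated)
        variants.append(rotated)
    return variants
```

For example, `augment([[1, 2], [3, 4]])` returns six images: the original, two mirror images, and rotations by 90, 180 and 270 degrees. In Keras, the same idea is usually expressed with preprocessing layers such as `RandomFlip` and `RandomRotation`, which apply random transforms on the fly during training.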

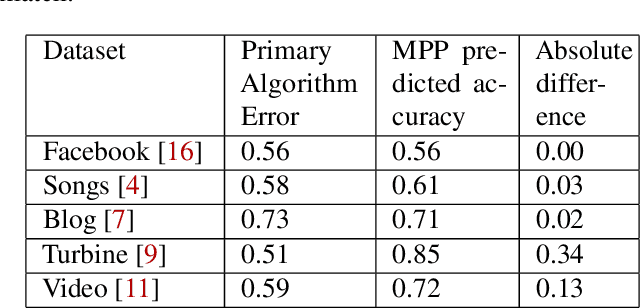

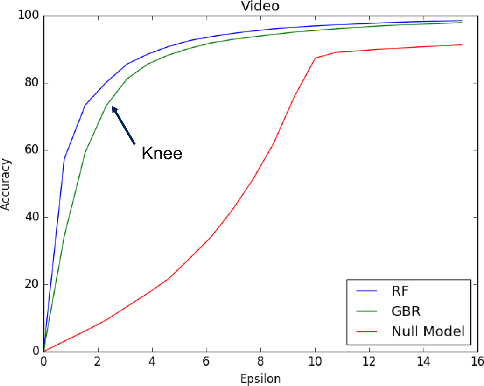

MPP: Model Performance Predictor

Feb 22, 2019

Abstract: Operations, which involves monitoring and managing real-time prediction quality, is a key challenge in deploying machine learning pipelines. Typically, metrics such as accuracy and RMSE are used to track the performance of models in deployment. However, these metrics cannot be calculated in production because labels are absent. We propose using an ML algorithm, the Model Performance Predictor (MPP), to track the performance of models in deployment. We argue that an ensemble of such metrics can be used to create a score representing prediction quality in production. This in turn facilitates the formulation and customization of ML alerts that an operations team can escalate to the data science team. Such a score automates monitoring and enables ML deployments at scale.
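
The sketch below shows one way to estimate model quality without production labels. The abstract does not say how MPP works internally, so this is a hypothetical stand-in and not the paper's algorithm. An auxiliary model learns from a labeled validation set how often the primary model is correct at each confidence level. It then reports the expected accuracy of an unlabeled production batch. The class name, the confidence-binning scheme and the 0.8 alert threshold are all assumptions.

```python
from typing import List, Sequence


class BinnedPerformancePredictor:
    """Hypothetical stand-in for an MPP-style auxiliary model.

    Learns, from labeled validation data, how often the primary model is
    correct as a function of its confidence, then scores unlabeled
    production batches with the expected accuracy.
    """

    def __init__(self, n_bins: int = 10) -> None:
        self.n_bins = n_bins
        self.bin_accuracy: List[float] = []

    def _bin(self, confidence: float) -> int:
        # Map a confidence in [0, 1] to a bin index; 1.0 goes in the last bin.
        return min(int(confidence * self.n_bins), self.n_bins - 1)

    def fit(self, confidences: Sequence[float],
            correct: Sequence[bool]) -> "BinnedPerformancePredictor":
        hits = [0] * self.n_bins
        totals = [0] * self.n_bins
        for c, ok in zip(confidences, correct):
            b = self._bin(c)
            totals[b] += 1
            hits[b] += int(ok)
        # Observed accuracy per bin; empty bins fall back to the bin midpoint.
        self.bin_accuracy = [
            hits[b] / totals[b] if totals[b] else (b + 0.5) / self.n_bins
            for b in range(self.n_bins)
        ]
        return self

    def score(self, confidences: Sequence[float]) -> float:
        """Expected accuracy of an unlabeled batch (no ground truth needed)."""
        if not confidences:
            raise ValueError("empty batch")
        total = sum(self.bin_accuracy[self._bin(c)] for c in confidences)
        return total / len(confidences)


def should_alert(score: float, threshold: float = 0.8) -> bool:
    """Raise an ML alert when predicted quality drops below the threshold."""
    return score < threshold
```

In this design, labeled data is needed only for the validation set passed to `fit`. After that, `score` runs on production confidences alone, and `should_alert` turns the score into the kind of alert an operations team could escalate.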

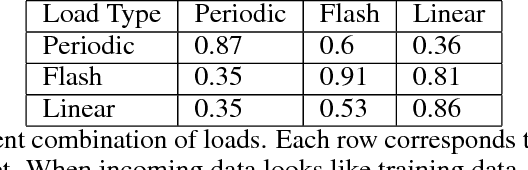

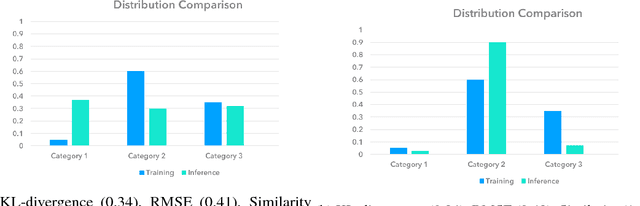

ML Health: Fitness Tracking for Production Models

Feb 07, 2019

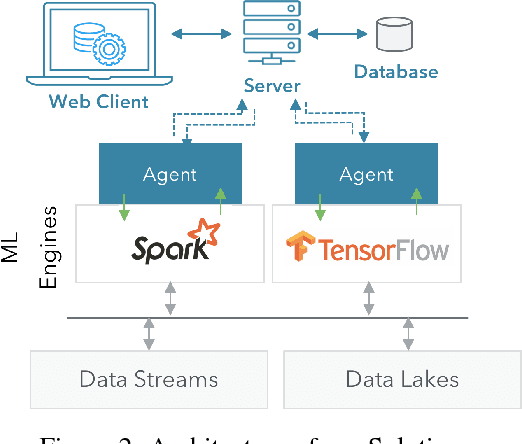

Abstract: Deploying machine learning (ML) algorithms in production for extended periods of time has uncovered new challenges, such as monitoring and managing a model's real-time prediction quality in the absence of labels. Yet such tracking is imperative to prevent catastrophic business outcomes resulting from incorrect predictions. The scale of these deployments makes manual monitoring prohibitive, so automated techniques to track quality and raise alerts are essential. We present a framework, ML Health, for tracking potential drops in the predictive performance of ML models in the absence of labels. The framework employs diagnostic methods to generate alerts for further investigation. We develop one such method to monitor potential problems when production data patterns do not match training data distributions. We demonstrate that our method performs better than standard "distance metrics", such as RMSE, KL divergence, and Wasserstein distance, at detecting issues with mismatched data sets. Finally, we present a working system that incorporates the ML Health approach to monitor and manage ML deployments within a realistic, full production ML lifecycle.
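
The standard "distance metrics" that the abstract uses as baselines can be computed as below. This sketch does not reproduce the ML Health method itself, which the abstract does not specify. Assuming a single numeric feature and fixed histogram bin edges, it shows how KL divergence and the 1-D Wasserstein distance quantify a mismatch between training and production data. The function names and the smoothing constant `eps` are illustrative choices.

```python
import bisect
import math
from typing import List, Sequence


def histogram(values: Sequence[float], edges: Sequence[float],
              eps: float = 1e-6) -> List[float]:
    """Normalized histogram over fixed bin edges, smoothed so no bin is zero.

    Values outside [edges[0], edges[-1]] are ignored; the last bin is closed.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        if edges[0] <= v <= edges[-1]:
            i = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
            counts[i] += 1
    total = sum(counts) + eps * len(counts)
    return [(c + eps) / total for c in counts]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for discrete distributions; q must be positive where p is."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def wasserstein_1d(a: Sequence[float], b: Sequence[float]) -> float:
    """Earth mover's distance between two equal-size 1-D samples."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal sample sizes")
    # For equal-size empirical distributions, the optimal transport plan
    # matches sorted values pairwise.
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)
```

A large KL divergence or Wasserstein distance indicates that production values have drifted away from the training distribution. KL divergence depends on the chosen bin edges and needs smoothing to stay finite. The Wasserstein distance works directly on the sorted samples, and SciPy provides a general version as `scipy.stats.wasserstein_distance`.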
