Simone Gasparini

IRIT-REVA

Lunar-G2R: Geometry-to-Reflectance Learning for High-Fidelity Lunar BRDF Estimation

Jan 15, 2026

Abstract: We address the problem of estimating realistic, spatially varying reflectance for complex planetary surfaces such as the lunar regolith, which is critical for high-fidelity rendering and vision-based navigation. Existing lunar rendering pipelines rely on simplified or spatially uniform BRDF models whose parameters are difficult to estimate and fail to capture local reflectance variations, limiting photometric realism. We propose Lunar-G2R, a geometry-to-reflectance learning framework that predicts spatially varying BRDF parameters directly from a lunar digital elevation model (DEM), without requiring multi-view imagery, controlled illumination, or dedicated reflectance-capture hardware at inference time. The method leverages a U-Net trained with differentiable rendering to minimize photometric discrepancies between real orbital images and physically based renderings under known viewing and illumination geometry. Experiments on a geographically held-out region of the Tycho crater show that our approach reduces photometric error by 38% compared to a state-of-the-art baseline, while achieving higher PSNR and SSIM and improved perceptual similarity, capturing fine-scale reflectance variations absent from spatially uniform models. To our knowledge, this is the first method to infer a spatially varying reflectance model directly from terrain geometry.

ROI-GS: Interest-based Local Quality 3D Gaussian Splatting

Oct 02, 2025

Abstract: We tackle the challenge of efficiently reconstructing 3D scenes with high detail on objects of interest. Existing 3D Gaussian Splatting (3DGS) methods allocate resources uniformly across the scene, which limits the fine detail available in Regions Of Interest (ROIs) and inflates model size. We propose ROI-GS, an object-aware framework that enhances local details through object-guided camera selection, targeted object training, and seamless integration of high-fidelity object-of-interest reconstructions into the global scene. Our method prioritizes higher-resolution details on chosen objects while maintaining real-time performance. Experiments show that ROI-GS significantly improves local quality (up to 2.96 dB PSNR), while reducing overall model size by $\approx 17\%$ relative to the baseline and achieving faster training for a scene with a single object of interest, outperforming existing methods.
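For readers unfamiliar with the PSNR figure quoted above, the following is a minimal sketch of the standard peak signal-to-noise ratio computation. It is not taken from the ROI-GS code; the function name `psnr` and the assumption that images are floating-point arrays in the range [0, 1] are illustrative choices.

```python
import numpy as np

def psnr(reference, rendered, max_value=1.0):
    """Standard PSNR in dB between two images of identical shape.

    Assumes pixel values lie in [0, max_value]; this range is an
    illustrative assumption, not something stated in the abstract.
    """
    reference = np.asarray(reference, dtype=np.float64)
    rendered = np.asarray(rendered, dtype=np.float64)
    mse = np.mean((reference - rendered) ** 2)  # mean squared error
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

# Example: a constant error of 0.1 on a [0, 1] image gives MSE = 0.01.
reference = np.zeros((4, 4, 3))
rendered = np.full((4, 4, 3), 0.1)
example_psnr = psnr(reference, rendered)
```

Because PSNR is logarithmic in the mean squared error, a gain of 2.96 dB at a fixed peak value corresponds to the mean squared error shrinking by a factor of 10^0.296, or roughly 2.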

ROI-NeRFs: Hi-Fi Visualization of Objects of Interest within a Scene by NeRFs Composition

Feb 18, 2025

Abstract: Efficient and accurate 3D reconstruction is essential for applications in cultural heritage. This study addresses the challenge of visualizing objects within large-scale scenes at a high level of detail (LOD) using Neural Radiance Fields (NeRFs). The aim is to improve the visual fidelity of chosen objects while keeping computation efficient by spending detail only on relevant content. The proposed ROI-NeRFs framework divides the scene into a Scene NeRF, which represents the overall scene at moderate detail, and multiple ROI NeRFs that focus on user-defined objects of interest. During the decomposition phase, an object-focused camera selection module automatically groups the relevant cameras for training each NeRF. In the composition phase, a Ray-level Compositional Rendering technique combines information from the Scene NeRF and ROI NeRFs, allowing simultaneous multi-object rendering composition. Quantitative and qualitative experiments conducted on two real-world datasets, including one on a complex eighteenth-century cultural heritage room, demonstrate superior performance compared to baseline methods, improving LOD for object regions and minimizing artifacts without significantly increasing inference time.

A Note on Geometric Calibration of Multiple Cameras and Projectors

Oct 24, 2024

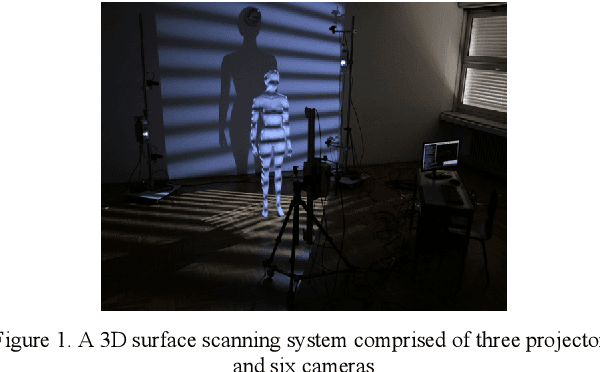

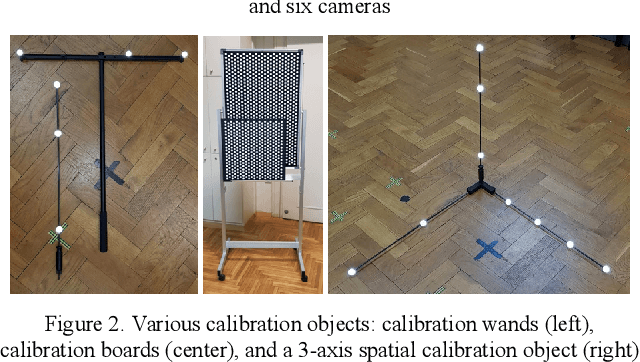

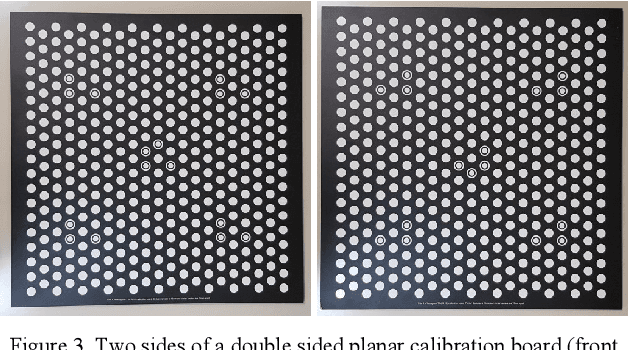

Abstract: Geometric calibration of cameras and projectors is an essential step that must be performed before any imaging system can be used. There are many well-known geometric calibration methods for systems composed of multiple cameras, but simultaneous geometric calibration of multiple projectors and cameras has received less attention. This leaves unresolved several practical issues that must be addressed to achieve the simplicity of use required for real-world applications. In this work we discuss several important components of a real-world geometric calibration procedure used in our laboratory to calibrate surface imaging systems composed of many projectors and cameras. We specifically discuss the design of the calibration object and the image processing pipeline used to analyze it in the acquired images. We also provide quantitative calibration results in the form of reprojection errors and compare them to those of classic approaches such as Zhang's calibration method.
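As an illustration of the reprojection error metric mentioned in this abstract, here is a minimal sketch assuming an ideal pinhole model with no lens distortion. The function names `project` and `rms_reprojection_error` and all numeric values are hypothetical and are not taken from the paper's calibration pipeline.

```python
import numpy as np

def project(K, R, t, points_3d):
    """Project Nx3 world points to pixels with an ideal pinhole model.

    K is the 3x3 intrinsic matrix, R the 3x3 rotation and t the
    translation (world -> device). Lens distortion is ignored here,
    which is a simplifying assumption of this sketch.
    """
    cam = points_3d @ R.T + t            # world -> camera coordinates
    uvw = cam @ K.T                      # apply intrinsics
    return uvw[:, :2] / uvw[:, 2:3]      # perspective division

def rms_reprojection_error(K, R, t, points_3d, observed_2d):
    """Root-mean-square pixel distance between projections and detections."""
    residuals = project(K, R, t, points_3d) - observed_2d
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))

# Hypothetical values: a device looking down +Z at four target points.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 5.0])
points_3d = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                      [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]])
# Simulate detections that are each off by (3, 4) pixels.
observed_2d = project(K, R, t, points_3d) + np.array([3.0, 4.0])
error_px = rms_reprojection_error(K, R, t, points_3d, observed_2d)
```

The same residual applies to a projector when it is modeled as an inverse camera, with the projected pattern coordinates taking the role of the detected pixel positions.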

LapisGS: Layered Progressive 3D Gaussian Splatting for Adaptive Streaming

Aug 27, 2024

Abstract: The rise of Extended Reality (XR) requires efficient streaming of 3D online worlds, challenging current 3DGS representations to adapt to bandwidth-constrained environments. This paper proposes LapisGS, a layered 3DGS that supports adaptive streaming and progressive rendering. Our method constructs a layered structure for cumulative representation, incorporates dynamic opacity optimization to maintain visual fidelity, and utilizes occupancy maps to efficiently manage Gaussian splats. The proposed model offers a progressive representation that supports continuous rendering quality adapted for bandwidth-aware streaming. Extensive experiments validate the effectiveness of our approach in balancing visual fidelity with the compactness of the model, with up to 50.71% improvement in SSIM, 286.53% improvement in LPIPS, and 318.41% reduction in model size, and show its potential for bandwidth-adapted 3D streaming and rendering applications.
