Quoc-Anh Bui

ROI-GS: Interest-based Local Quality 3D Gaussian Splatting

Oct 02, 2025

Abstract: We tackle the challenge of efficiently reconstructing 3D scenes with high detail on objects of interest. Existing 3D Gaussian Splatting (3DGS) methods allocate resources uniformly across the scene, which limits the fine detail available in Regions of Interest (ROIs) and inflates model size. We propose ROI-GS, an object-aware framework that enhances local details through object-guided camera selection, targeted object training, and seamless integration of high-fidelity object-of-interest reconstructions into the global scene. Our method prioritizes higher-resolution details on chosen objects while maintaining real-time performance. Experiments show that ROI-GS significantly improves local quality (up to 2.96 dB PSNR) while reducing overall model size by $\approx 17\%$ of the baseline and achieving faster training for a scene with a single object of interest, outperforming existing methods.
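To put the reported PSNR gain in context, the sketch below uses the standard PSNR definition, $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, and converts a dB gain into the corresponding reduction in mean squared error. The function names and the flat-list pixel representation are illustrative assumptions, not part of ROI-GS.

```python
import math

def psnr(reference, test, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(test) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixel values.
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

def mse_ratio_for_gain(gain_db):
    """Factor by which MSE is multiplied when PSNR rises by gain_db."""
    return 10.0 ** (-gain_db / 10.0)

if __name__ == "__main__":
    # A 2.96 dB gain corresponds to roughly halving the MSE.
    print(round(mse_ratio_for_gain(2.96), 3))
```

Under this definition, a gain of 2.96 dB corresponds to an MSE roughly 0.51 times that of the baseline in the evaluated region.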

ROI-NeRFs: Hi-Fi Visualization of Objects of Interest within a Scene by NeRFs Composition

Feb 18, 2025

Abstract: Efficient and accurate 3D reconstruction is essential for applications in cultural heritage. This study addresses the challenge of visualizing objects within large-scale scenes at a high level of detail (LOD) using Neural Radiance Fields (NeRFs). The aim is to improve the visual fidelity of chosen objects while keeping computation efficient by focusing detail only on relevant content. The proposed ROI-NeRFs framework divides the scene into a Scene NeRF, which represents the overall scene at moderate detail, and multiple ROI NeRFs that focus on user-defined objects of interest. During the decomposition phase, an object-focused camera selection module automatically groups the relevant cameras for training each NeRF. In the composition phase, a Ray-level Compositional Rendering technique combines information from the Scene NeRF and the ROI NeRFs, allowing several objects to be composited and rendered simultaneously. Quantitative and qualitative experiments conducted on two real-world datasets, including one of a complex eighteenth-century cultural heritage room, demonstrate superior performance compared to baseline methods, improving LOD for object regions and minimizing artifacts without significantly increasing inference time.
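The abstract does not specify how the object-focused camera selection module works. As a rough illustration of the general idea only, the sketch below keeps a camera if it sees at least a minimum number of corners of an object's bounding box under a simple pinhole model. The camera dictionary layout (`R`, `t`, `f`, `width`, `height`), the function names, and the visibility criterion are all hypothetical assumptions, not the authors' method.

```python
def sees_point(camera, point):
    """Return True if a world-space point projects inside the camera image."""
    R, t = camera["R"], camera["t"]
    # World -> camera coordinates: x_cam = R @ x_world + t (assumed convention).
    x, y, z = (
        sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)
    )
    if z <= 0:
        return False  # point is behind the camera
    # Pinhole projection with the principal point at the image center.
    u = camera["f"] * x / z + camera["width"] / 2
    v = camera["f"] * y / z + camera["height"] / 2
    return 0 <= u < camera["width"] and 0 <= v < camera["height"]

def select_cameras(cameras, box_corners, min_visible=1):
    """Indices of cameras that see at least min_visible bounding-box corners."""
    return [
        i
        for i, cam in enumerate(cameras)
        if sum(sees_point(cam, p) for p in box_corners) >= min_visible
    ]

if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    facing_away = [[-1, 0, 0], [0, 1, 0], [0, 0, -1]]  # rotated 180 degrees about y
    base = {"t": [0, 0, 0], "f": 100.0, "width": 200, "height": 200}
    cameras = [dict(base, R=identity), dict(base, R=facing_away)]
    # Corners of a unit cube centered at (0, 0, 5).
    corners = [
        (dx, dy, 5 + dz)
        for dx in (-0.5, 0.5)
        for dy in (-0.5, 0.5)
        for dz in (-0.5, 0.5)
    ]
    print(select_cameras(cameras, corners))
```

A practical module would likely also weigh occlusion, viewing distance, and how much of the image the object covers; those are left out here to keep the sketch short.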

Image Translation Based Nuclei Segmentation for Immunohistochemistry Images

Aug 12, 2022

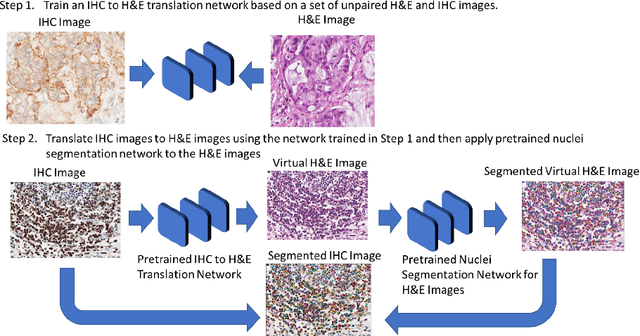

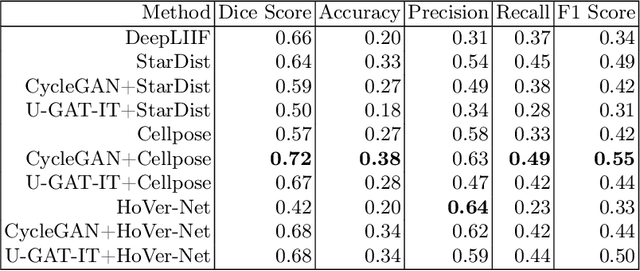

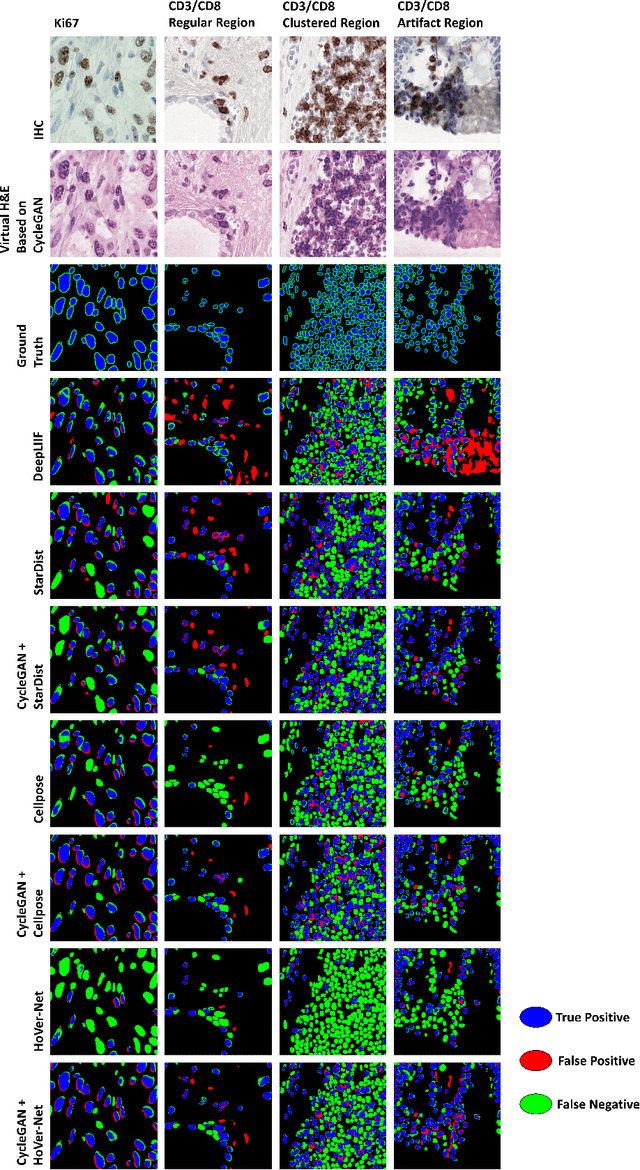

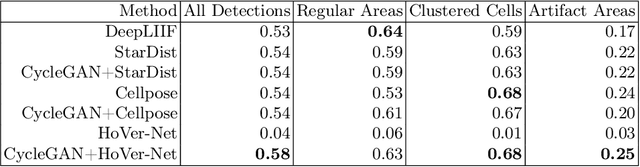

Abstract: Numerous deep-learning-based methods have been developed for nuclei segmentation in H&E images and have achieved close to human performance. However, applying such methods directly to another image modality, such as Immunohistochemistry (IHC) images, may not achieve satisfactory performance. We therefore developed a Generative Adversarial Network (GAN) based approach that translates an IHC image to an H&E image while preserving nuclei location and morphology, and then applies pre-trained nuclei segmentation models to the virtual H&E image. Using two public IHC image datasets, we demonstrated that the proposed methods work better than several baseline methods, including direct application of state-of-the-art nuclei segmentation methods trained on H&E images, such as Cellpose and HoVer-Net, and a generative method, DeepLIIF.
