Simone D'Agostino

Synaptic metaplasticity with multi-level memristive devices

Jun 21, 2023

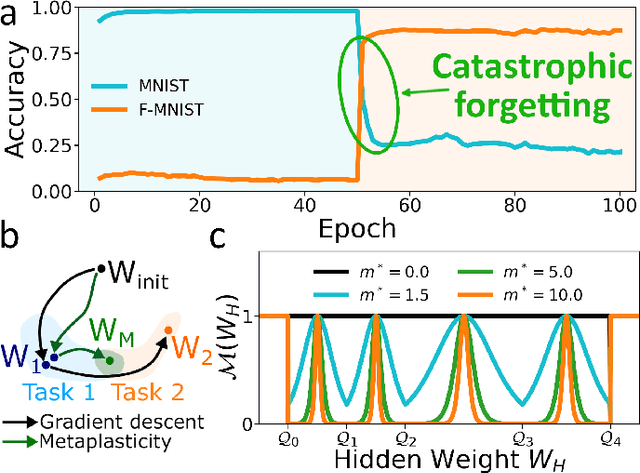

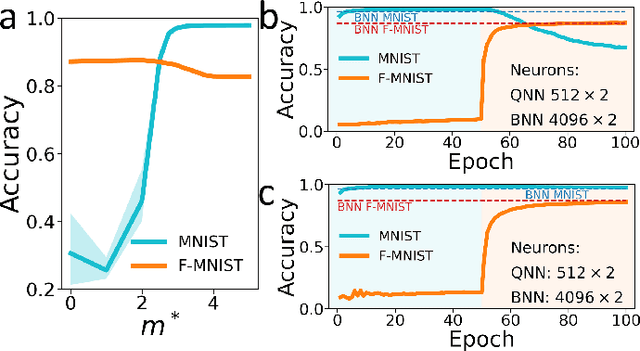

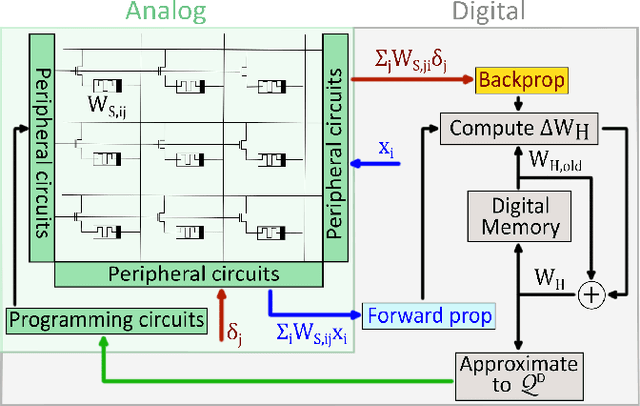

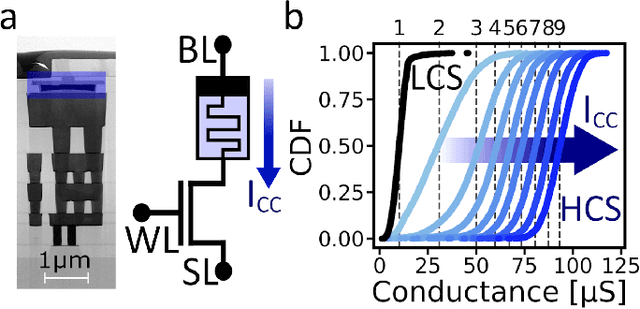

Abstract: Deep learning has made remarkable progress in various tasks, surpassing human performance in some cases. However, one drawback of neural networks is catastrophic forgetting, where a network trained on one task forgets the solution when learning a new one. To address this issue, recent works have proposed solutions based on Binarized Neural Networks (BNNs) incorporating metaplasticity. In this work, we extend this solution to quantized neural networks (QNNs) and present a memristor-based hardware solution for implementing metaplasticity during both inference and training. We propose a hardware architecture that integrates quantized weights in memristor devices programmed in an analog multi-level fashion with a digital processing unit for high-precision metaplastic storage. We validated our approach using a combined software framework and a memristor-based crossbar array for in-memory computing fabricated in 130 nm CMOS technology. Our experimental results show that a two-layer perceptron achieves 97% and 86% accuracy on consecutive training of MNIST and Fashion-MNIST, equal to the software baseline. This result demonstrates immunity to catastrophic forgetting and the resilience of the proposed solution to analog device imperfections. Moreover, our architecture is compatible with the limited endurance of memristors and has a 15x reduction in memory

Dendritic Computation through Exploiting Resistive Memory as both Delays and Weights

May 11, 2023

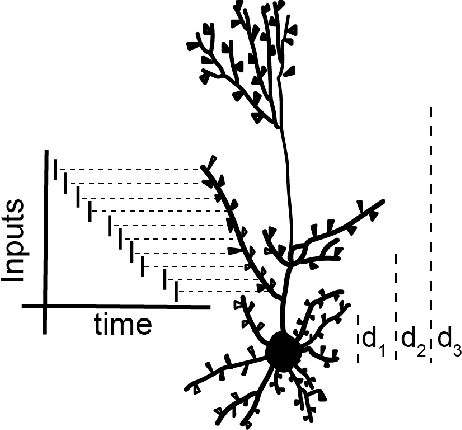

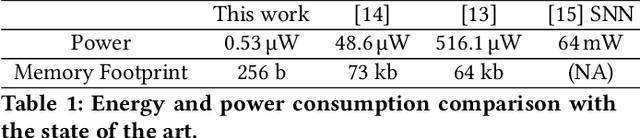

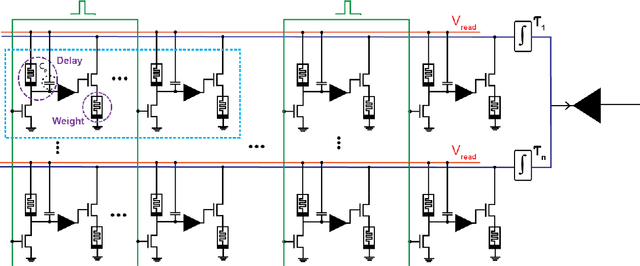

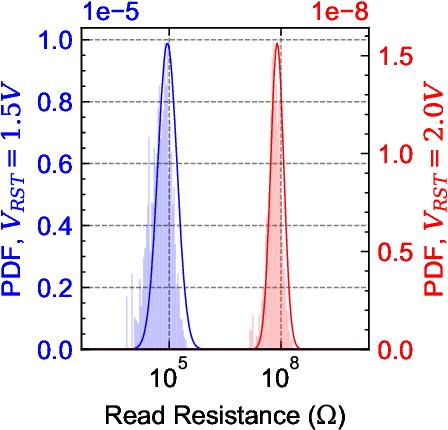

Abstract: Biological neurons can detect complex spatio-temporal features in spiking patterns via synapses spread across their dendritic branches. This is achieved by modulating the efficacy of the individual synapses, and by exploiting the temporal delays of their response to input spikes, which depend on their position on the dendrite. Inspired by this mechanism, we propose a neuromorphic hardware architecture equipped with multiscale dendrites, each of which has synapses with tunable weight and delay elements. Weights and delays are both implemented using Resistive Random Access Memory (RRAM). We exploit the variability in the high resistance state of RRAM to implement a distribution of delays in the millisecond range, enabling spatio-temporal detection of sensory signals. We demonstrate the validity of the approach with an RRAM-aware simulation of a heartbeat anomaly detection task. In particular, we show that, by incorporating delays directly into the network, the network's power and memory footprint can be reduced by up to 100x compared to equivalent state-of-the-art spiking recurrent networks with no delays.
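The idea of turning device variability into a spread of delays can be illustrated with a minimal sketch. It is not the authors' circuit or simulation: it assumes each delay is an RC time constant, that high-resistance-state values follow a log-normal distribution, and that the median resistance (100 MΩ), the spread `sigma`, and the capacitance (10 pF) take the hypothetical values shown. The function name `sample_delays` is likewise made up for illustration.

```python
import math
import random


def sample_delays(n, median_resistance_ohm=1e8, sigma=0.5,
                  capacitance_f=1e-11, seed=0):
    """Return n delays in seconds, one per synapse.

    Assumptions (not taken from the paper):
      - each delay is modeled as an RC time constant, delay = R * C;
      - HRS resistances are log-normally distributed around the median;
      - all numeric defaults are illustrative placeholders.
    """
    rng = random.Random(seed)             # local RNG so results are repeatable
    mu = math.log(median_resistance_ohm)  # log-normal median is exp(mu)
    resistances = [rng.lognormvariate(mu, sigma) for _ in range(n)]
    return [r * capacitance_f for r in resistances]


if __name__ == "__main__":
    delays = sorted(sample_delays(1000))
    median_ms = delays[len(delays) // 2] * 1e3
    print(f"median delay: {median_ms:.2f} ms")
    print(f"range: {delays[0] * 1e3:.2f} ms to {delays[-1] * 1e3:.2f} ms")
```

With these placeholder values the median delay sits near 1 ms (100 MΩ × 10 pF), and the device-to-device spread in resistance translates directly into a spread of delays around that value.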
