Sidra Nasir

ArrhythmiaVision: Resource-Conscious Deep Learning Models with Visual Explanations for ECG Arrhythmia Classification

Apr 30, 2025

Abstract:Cardiac arrhythmias are a leading cause of life-threatening cardiac events, highlighting the urgent need for accurate and timely detection. Electrocardiography (ECG) remains the clinical gold standard for arrhythmia diagnosis; however, manual interpretation is time-consuming, dependent on clinical expertise, and prone to human error. Although deep learning has advanced automated ECG analysis, many existing models abstract away the signal's intrinsic temporal and morphological features, lack interpretability, and are computationally intensive, hindering their deployment on resource-constrained platforms. In this work, we propose two novel lightweight 1D convolutional neural networks, ArrhythmiNet V1 and V2, optimized for efficient, real-time arrhythmia classification on edge devices. Inspired by MobileNet's depthwise separable convolutional design, these models maintain memory footprints of just 302.18 KB and 157.76 KB, respectively, while achieving classification accuracies of 0.99 (V1) and 0.98 (V2) on the MIT-BIH Arrhythmia Dataset across five classes: Normal Sinus Rhythm, Left Bundle Branch Block, Right Bundle Branch Block, Atrial Premature Contraction, and Premature Ventricular Contraction. In order to ensure clinical transparency and relevance, we integrate Shapley Additive Explanations and Gradient-weighted Class Activation Mapping, enabling both local and global interpretability. These techniques highlight physiologically meaningful patterns such as the QRS complex and T-wave that contribute to the model's predictions. We also discuss performance-efficiency trade-offs and address current limitations related to dataset diversity and generalizability. Overall, our findings demonstrate the feasibility of combining interpretability, predictive accuracy, and computational efficiency in practical, wearable, and embedded ECG monitoring systems.
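To illustrate why a MobileNet-style depthwise separable design can shrink a 1D convolutional model, the sketch below compares parameter counts for a standard 1D convolution and its depthwise separable counterpart. The layer sizes (64 input channels, 128 output channels, kernel width 7) are hypothetical values chosen for illustration and are not taken from ArrhythmiNet V1 or V2.

```python
def conv1d_params(c_in, c_out, kernel, bias=True):
    """Parameters in a standard 1D convolution layer."""
    return c_in * c_out * kernel + (c_out if bias else 0)


def separable_conv1d_params(c_in, c_out, kernel, bias=True):
    """Parameters in a depthwise separable 1D convolution.

    Depthwise step: one kernel per input channel.
    Pointwise step: a 1x1 convolution that mixes channels.
    """
    depthwise = c_in * kernel + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


# Hypothetical layer sizes, not the paper's actual configuration.
standard = conv1d_params(64, 128, 7)
separable = separable_conv1d_params(64, 128, 7)
print(standard, separable, round(standard / separable, 2))
```

For this assumed layer, the separable form needs roughly one sixth of the parameters, which is the kind of saving that makes sub-megabyte footprints plausible on edge devices.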

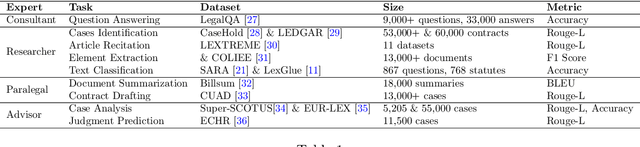

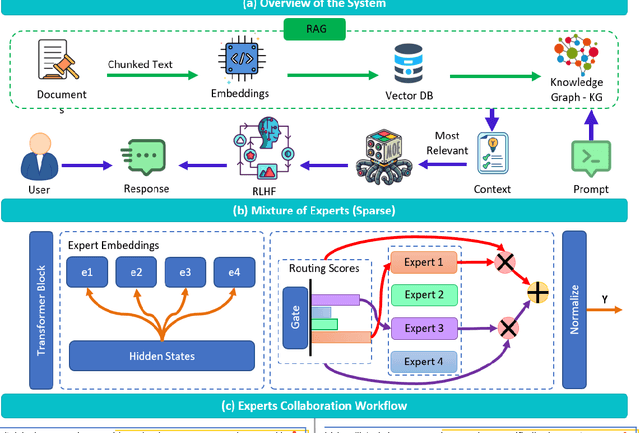

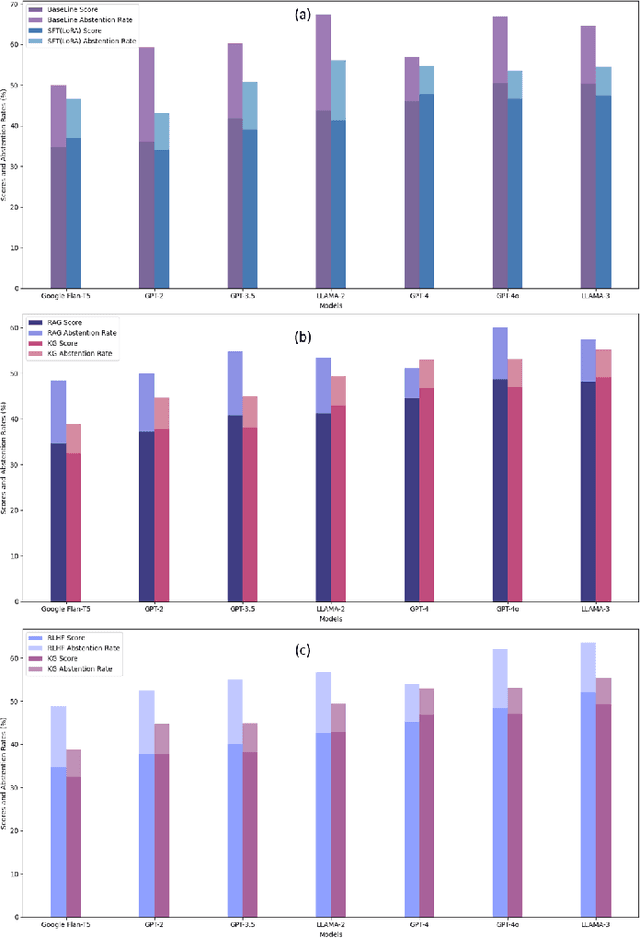

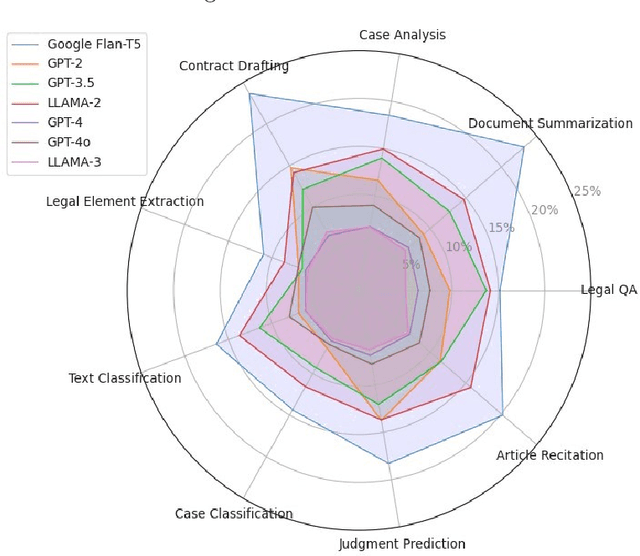

A Comprehensive Framework for Reliable Legal AI: Combining Specialized Expert Systems and Adaptive Refinement

Dec 29, 2024

Abstract:This article discusses the evolving role of artificial intelligence (AI) in the legal profession, focusing on its potential to streamline tasks such as document review, research, and contract drafting. However, challenges persist, particularly the occurrence of "hallucinations" in AI models, where they generate inaccurate or misleading information, undermining their reliability in legal contexts. To address this, the article proposes a novel framework combining a mixture of expert systems with a knowledge-based architecture to improve the precision and contextual relevance of AI-driven legal services. This framework utilizes specialized modules, each focusing on specific legal areas, and incorporates structured operational guidelines to enhance decision-making. Additionally, it leverages advanced AI techniques like Retrieval-Augmented Generation (RAG), Knowledge Graphs (KG), and Reinforcement Learning from Human Feedback (RLHF) to improve the system's accuracy. The proposed approach demonstrates significant improvements over existing AI models, showcasing enhanced performance in legal tasks and offering a scalable solution to provide more accessible and affordable legal services. The article also outlines the methodology, system architecture, and promising directions for future research in AI applications for the legal sector.

Breast Cancer Diagnosis: A Comprehensive Exploration of Explainable Artificial Intelligence (XAI) Techniques

Jun 01, 2024

Abstract:Breast cancer (BC) stands as one of the most common malignancies affecting women worldwide, necessitating advancements in diagnostic methodologies for better clinical outcomes. This article provides a comprehensive exploration of the application of Explainable Artificial Intelligence (XAI) techniques in the detection and diagnosis of breast cancer. As Artificial Intelligence (AI) technologies continue to permeate the healthcare sector, particularly in oncology, the need for transparent and interpretable models becomes imperative to enhance clinical decision-making and patient care. This review discusses the integration of various XAI approaches, such as SHAP, LIME, Grad-CAM, and others, with machine learning and deep learning models utilized in breast cancer detection and classification. By investigating the modalities of breast cancer datasets, including mammograms, ultrasounds and their processing with AI, the paper highlights how XAI can lead to more accurate diagnoses and personalized treatment plans. It also examines the challenges in implementing these techniques and the importance of developing standardized metrics for evaluating XAI's effectiveness in clinical settings. Through detailed analysis and discussion, this article aims to highlight the potential of XAI in bridging the gap between complex AI models and practical healthcare applications, thereby fostering trust and understanding among medical professionals and improving patient outcomes.

Enhancing Breast Cancer Diagnosis in Mammography: Evaluation and Integration of Convolutional Neural Networks and Explainable AI

Apr 09, 2024

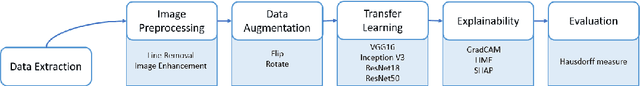

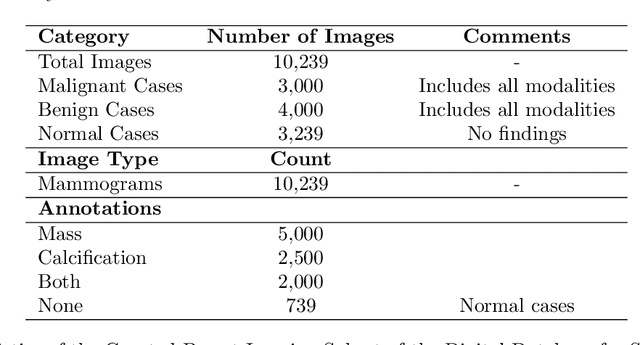

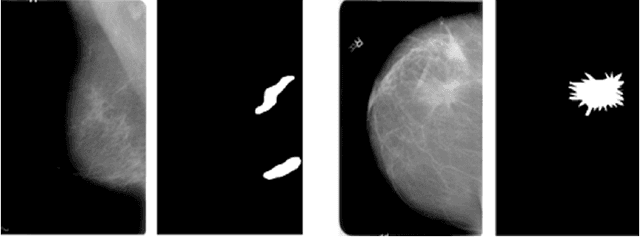

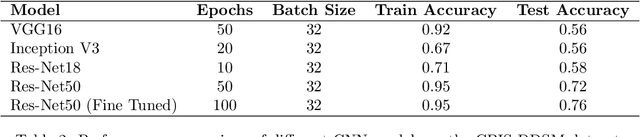

Abstract:The study introduces an integrated framework combining Convolutional Neural Networks (CNNs) and Explainable Artificial Intelligence (XAI) for the enhanced diagnosis of breast cancer using the CBIS-DDSM dataset. Utilizing a fine-tuned ResNet50 architecture, our investigation not only provides effective differentiation of mammographic images into benign and malignant categories but also addresses the opaque "black-box" nature of deep learning models by employing XAI methodologies, namely Grad-CAM, LIME, and SHAP, to interpret CNN decision-making processes for healthcare professionals. Our methodology encompasses an elaborate data preprocessing pipeline, advanced data augmentation techniques to counteract dataset limitations, and transfer learning with pre-trained networks such as VGG-16, DenseNet, and ResNet. A focal point of our study is the evaluation of XAI's effectiveness in interpreting model predictions, highlighted by utilising the Hausdorff measure to quantitatively assess the alignment between AI-generated explanations and expert annotations. This approach plays a critical role for XAI in promoting trustworthiness and ethical fairness in AI-assisted diagnostics. The findings from our research illustrate the effective collaboration between CNNs and XAI in advancing diagnostic methods for breast cancer, thereby facilitating a more seamless integration of advanced AI technologies within clinical settings. By enhancing the interpretability of AI-driven decisions, this work lays the groundwork for improved collaboration between AI systems and medical practitioners, ultimately enriching patient care. Furthermore, the implications of our research extend well beyond the current methodologies, advocating for subsequent inquiries into the integration of multimodal data and the refinement of AI explanations to satisfy the needs of clinical practice.
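As a rough illustration of how a Hausdorff measure can score the agreement between an explanation region and an expert annotation, the sketch below computes the symmetric Hausdorff distance between two small sets of pixel coordinates. The point sets are invented for the example, and the study's actual procedure for extracting regions from Grad-CAM, LIME, or SHAP outputs is not described in the abstract, so this should be read as a generic sketch of the metric only.

```python
import math


def directed_hausdorff(a, b):
    """Largest distance from any point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Hypothetical (row, col) pixel coordinates, invented for illustration.
explanation_pixels = [(0, 0), (0, 1), (1, 0)]
annotation_pixels = [(0, 0), (0, 1), (3, 4)]

print(hausdorff(explanation_pixels, annotation_pixels))
```

A smaller value means every point of one region lies close to some point of the other; a single distant outlier, such as (3, 4) here, dominates the score.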

Ethical Framework for Harnessing the Power of AI in Healthcare and Beyond

Aug 31, 2023

Abstract:In the past decade, the deployment of deep learning (Artificial Intelligence (AI)) methods has become pervasive across a spectrum of real-world applications, often in safety-critical contexts. This comprehensive research article rigorously investigates the ethical dimensions intricately linked to the rapid evolution of AI technologies, with a particular focus on the healthcare domain. Delving deeply, it explores a multitude of facets including transparency, adept data management, human oversight, educational imperatives, and international collaboration within the realm of AI advancement. Central to this article is the proposition of a conscientious AI framework, meticulously crafted to accentuate values of transparency, equity, answerability, and a human-centric orientation. The second contribution of the article is the in-depth and thorough discussion of the limitations inherent to AI systems. It astutely identifies potential biases and the intricate challenges of navigating multifaceted contexts. Lastly, the article unequivocally accentuates the pressing need for globally standardized AI ethics principles and frameworks. Simultaneously, it aptly illustrates the adaptability of the ethical framework proposed herein, positioned skillfully to surmount emergent challenges.
