Samita Bai

SBAN: A Framework & Multi-Dimensional Dataset for Large Language Model Pre-Training and Software Code Mining

Oct 21, 2025

Abstract: This paper introduces SBAN (Source code, Binary, Assembly, and Natural Language Description), a large-scale, multi-dimensional dataset designed to advance the pre-training and evaluation of large language models (LLMs) for software code analysis. SBAN comprises more than 3 million samples (2.9 million benign and 672,000 malware), each represented across four complementary layers: binary code, assembly instructions, natural language descriptions, and source code. This unique multimodal structure enables research on cross-representation learning, semantic understanding of software, and automated malware detection. Beyond security applications, SBAN supports broader tasks such as code translation, code explanation, and other software mining tasks involving heterogeneous data. It is particularly suited for scalable training of deep models, including transformers and other LLM architectures. By bridging low-level machine representations and high-level human semantics, SBAN provides a robust foundation for building intelligent systems that reason about code. We believe that this dataset opens new opportunities for mining software behavior, improving security analytics, and enhancing LLM capabilities in pre-training and fine-tuning tasks for software code mining.

FlexiDataGen: An Adaptive LLM Framework for Dynamic Semantic Dataset Generation in Sensitive Domains

Oct 21, 2025

Abstract: Dataset availability and quality remain critical challenges in machine learning, especially in domains where data are scarce, expensive to acquire, or constrained by privacy regulations. Fields such as healthcare, biomedical research, and cybersecurity frequently encounter high data acquisition costs, limited access to annotated data, and the rarity or sensitivity of key events. These issues, collectively referred to as the dataset challenge, hinder the development of accurate and generalizable machine learning models in such high-stakes domains. To address this, we introduce FlexiDataGen, an adaptive large language model (LLM) framework designed for dynamic semantic dataset generation in sensitive domains. FlexiDataGen autonomously synthesizes rich, semantically coherent, and linguistically diverse datasets tailored to specialized fields. The framework integrates four core components: (1) syntactic-semantic analysis, (2) retrieval-augmented generation, (3) dynamic element injection, and (4) iterative paraphrasing with semantic validation. Together, these components ensure the generation of high-quality, domain-relevant data. Experimental results show that FlexiDataGen effectively alleviates data shortages and annotation bottlenecks, enabling scalable and accurate machine learning model development.

Large Language Model (LLM) for Software Security: Code Analysis, Malware Analysis, Reverse Engineering

Apr 07, 2025

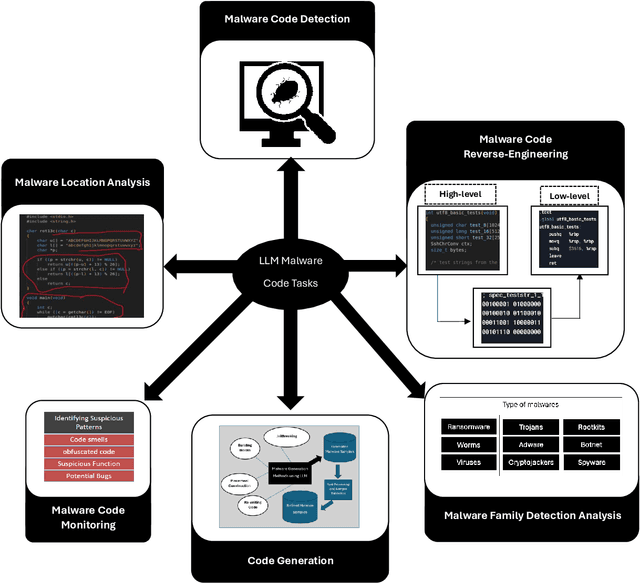

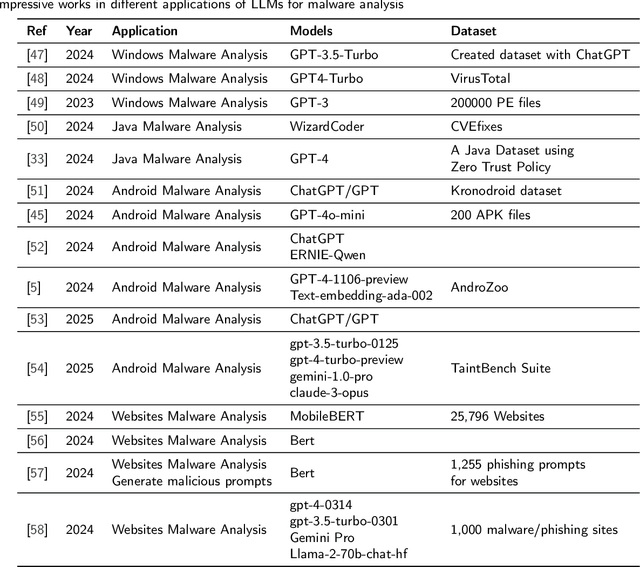

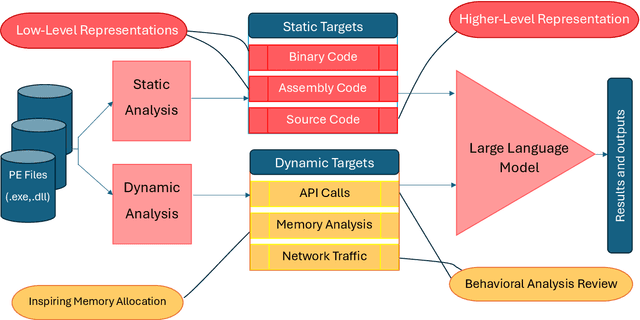

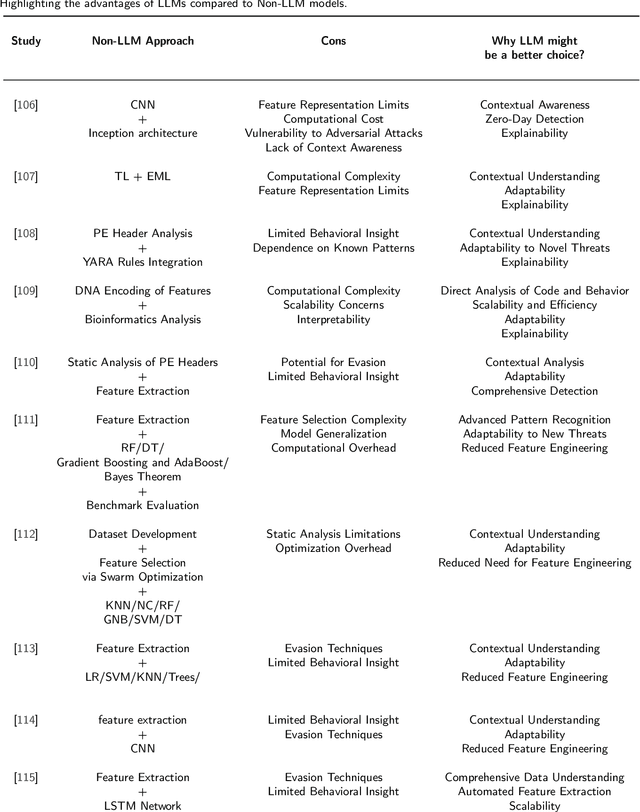

Abstract: Large Language Models (LLMs) have recently emerged as powerful tools in cybersecurity, offering advanced capabilities in malware detection, generation, and real-time monitoring. Numerous studies have explored their application in cybersecurity, demonstrating their effectiveness in identifying novel malware variants, analyzing malicious code structures, and enhancing automated threat analysis. Several transformer-based architectures and LLM-driven models have been proposed to improve malware analysis, leveraging semantic and structural insights to recognize malicious intent more accurately. This study presents a comprehensive review of LLM-based approaches in malware code analysis, summarizing recent advancements, trends, and methodologies. We examine notable scholarly works to map the research landscape, identify key challenges, and highlight emerging innovations in LLM-driven cybersecurity. Additionally, we emphasize the role of static analysis in malware detection, introduce notable datasets and specialized LLM models, and discuss essential datasets supporting automated malware research. This study serves as a valuable resource for researchers and cybersecurity professionals, offering insights into LLM-powered malware detection and defence strategies while outlining future directions for strengthening cybersecurity resilience.

A Comprehensive Framework for Reliable Legal AI: Combining Specialized Expert Systems and Adaptive Refinement

Dec 29, 2024

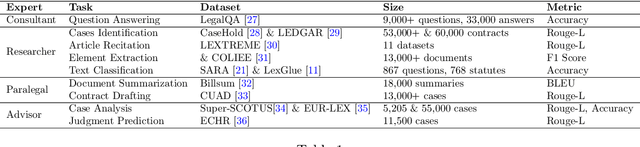

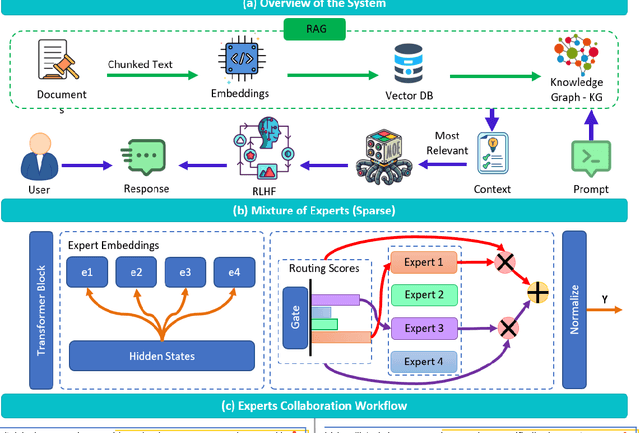

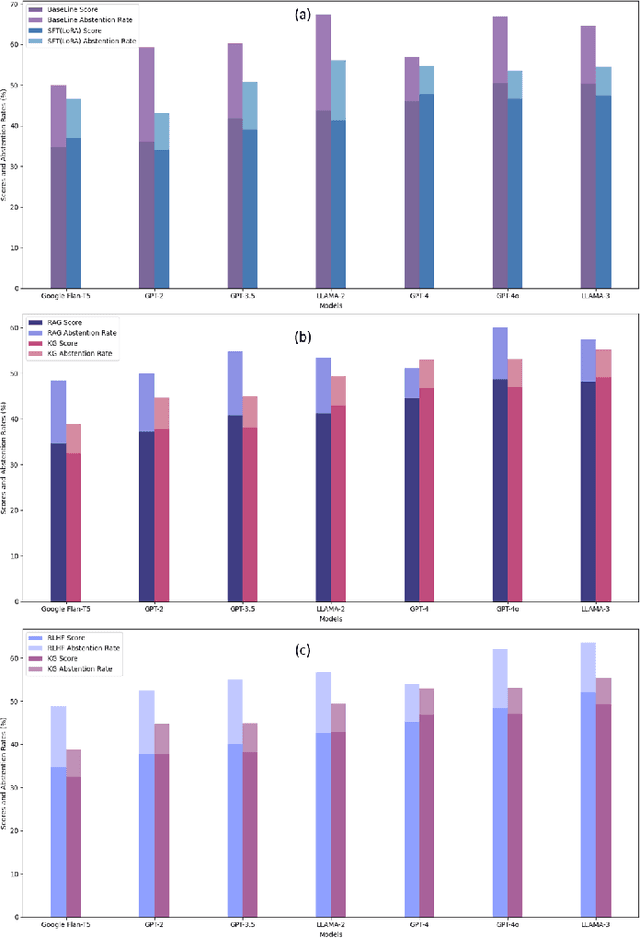

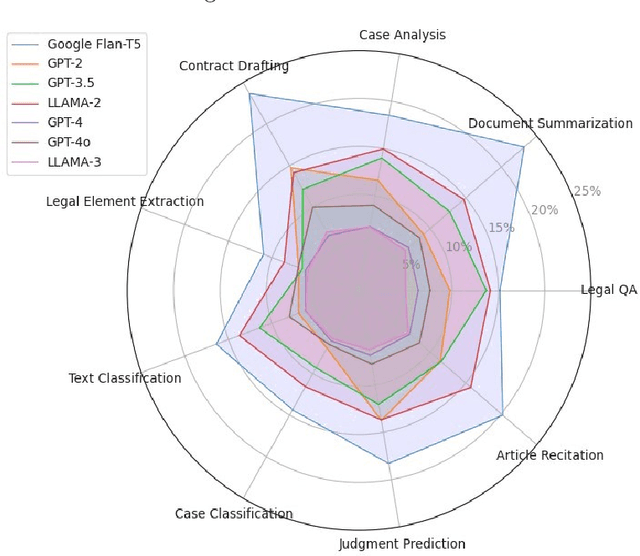

Abstract: This article discusses the evolving role of artificial intelligence (AI) in the legal profession, focusing on its potential to streamline tasks such as document review, research, and contract drafting. However, challenges persist, particularly the occurrence of "hallucinations" in AI models, where they generate inaccurate or misleading information, undermining their reliability in legal contexts. To address this, the article proposes a novel framework combining a mixture of expert systems with a knowledge-based architecture to improve the precision and contextual relevance of AI-driven legal services. This framework utilizes specialized modules, each focusing on specific legal areas, and incorporates structured operational guidelines to enhance decision-making. Additionally, it leverages advanced AI techniques like Retrieval-Augmented Generation (RAG), Knowledge Graphs (KG), and Reinforcement Learning from Human Feedback (RLHF) to improve the system's accuracy. The proposed approach demonstrates significant improvements over existing AI models, showcasing enhanced performance in legal tasks and offering a scalable solution to provide more accessible and affordable legal services. The article also outlines the methodology, system architecture, and promising directions for future research in AI applications for the legal sector.

Breast Cancer Diagnosis: A Comprehensive Exploration of Explainable Artificial Intelligence (XAI) Techniques

Jun 01, 2024

Abstract: Breast cancer (BC) stands as one of the most common malignancies affecting women worldwide, necessitating advancements in diagnostic methodologies for better clinical outcomes. This article provides a comprehensive exploration of the application of Explainable Artificial Intelligence (XAI) techniques in the detection and diagnosis of breast cancer. As Artificial Intelligence (AI) technologies continue to permeate the healthcare sector, particularly in oncology, the need for transparent and interpretable models becomes imperative to enhance clinical decision-making and patient care. This review discusses the integration of various XAI approaches, such as SHAP, LIME, Grad-CAM, and others, with machine learning and deep learning models utilized in breast cancer detection and classification. By investigating the modalities of breast cancer datasets, including mammograms, ultrasounds and their processing with AI, the paper highlights how XAI can lead to more accurate diagnoses and personalized treatment plans. It also examines the challenges in implementing these techniques and the importance of developing standardized metrics for evaluating XAI's effectiveness in clinical settings. Through detailed analysis and discussion, this article aims to highlight the potential of XAI in bridging the gap between complex AI models and practical healthcare applications, thereby fostering trust and understanding among medical professionals and improving patient outcomes.

Ethical Framework for Harnessing the Power of AI in Healthcare and Beyond

Aug 31, 2023

Abstract: In the past decade, the deployment of deep learning (Artificial Intelligence (AI)) methods has become pervasive across a spectrum of real-world applications, often in safety-critical contexts. This comprehensive research article rigorously investigates the ethical dimensions intricately linked to the rapid evolution of AI technologies, with a particular focus on the healthcare domain. Delving deeply, it explores a multitude of facets including transparency, adept data management, human oversight, educational imperatives, and international collaboration within the realm of AI advancement. Central to this article is the proposition of a conscientious AI framework, meticulously crafted to accentuate values of transparency, equity, answerability, and a human-centric orientation. The second contribution of the article is the in-depth and thorough discussion of the limitations inherent to AI systems. It astutely identifies potential biases and the intricate challenges of navigating multifaceted contexts. Lastly, the article unequivocally accentuates the pressing need for globally standardized AI ethics principles and frameworks. Simultaneously, it aptly illustrates the adaptability of the ethical framework proposed herein, positioned skillfully to surmount emergent challenges.
