Sichao Huang

Planning Irregular Object Packing via Hierarchical Reinforcement Learning

Nov 17, 2022

Abstract: Object packing by autonomous robots is an important challenge in warehouses and the logistics industry. Most conventional data-driven packing planning approaches focus on regular cuboid packing; they are usually heuristic, which limits their practical use in realistic applications with everyday objects. In this paper, we propose a deep hierarchical reinforcement learning approach to simultaneously plan packing sequence and placement for irregular object packing. Specifically, the top manager network infers the packing sequence from six principal view heightmaps of all objects, and then the bottom worker network receives heightmaps of the next object to predict the placement position and orientation. The two networks are trained hierarchically in a self-supervised Q-Learning framework, where the rewards are provided by the packing results based on the top height, object volume and placement stability in the box. The framework repeats sequence and placement planning iteratively until all objects have been packed into the box or no space remains for unpacked items. We compare our approach with existing robotic packing methods for irregular objects in a physics simulator. Experiments show that our approach can pack more objects at lower time cost than the state-of-the-art packing methods for irregular objects. We also implement our packing plan with a robotic manipulator to show the generalization ability in the real world.
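The iterative manager/worker loop described in the abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names (`plan_packing`, `largest_first`, `next_free_offset`), the one-dimensional toy box, and the hand-written selection rules stand in for the learned manager and worker networks, which in the paper operate on heightmaps. Retrying other candidates when one object does not fit is also a choice of this sketch, not something the abstract specifies.

```python
from typing import Callable, List, Optional, Tuple


def plan_packing(
    objects: List[str],
    choose_object: Callable[[List[str]], str],
    choose_placement: Callable[[str, List[Tuple[str, int]]], Optional[int]],
) -> List[Tuple[str, int]]:
    """Alternate sequence and placement planning until done or stuck.

    choose_object plays the role of the manager (which object next);
    choose_placement plays the role of the worker (where it goes, or
    None if it does not fit). Both are hypothetical stand-ins.
    """
    remaining = list(objects)
    plan: List[Tuple[str, int]] = []
    while remaining:
        candidates = list(remaining)
        placed = False
        while candidates and not placed:
            obj = choose_object(candidates)
            placement = choose_placement(obj, plan)
            if placement is None:
                # This object cannot be placed; let the manager pick another.
                candidates.remove(obj)
            else:
                plan.append((obj, placement))
                remaining.remove(obj)
                placed = True
        if not placed:
            # No remaining object fits: no space is left in the box.
            break
    return plan


# Toy 1-D problem (assumed data): object widths and a box of length 9.
WIDTHS = {"a": 3, "b": 5, "c": 4}
CAPACITY = 9


def largest_first(candidates: List[str]) -> str:
    """Stand-in manager: pick the widest candidate."""
    return max(candidates, key=lambda name: WIDTHS[name])


def next_free_offset(obj: str, plan: List[Tuple[str, int]]) -> Optional[int]:
    """Stand-in worker: place at the first free offset if the object fits."""
    used = sum(WIDTHS[name] for name, _ in plan)
    return used if used + WIDTHS[obj] <= CAPACITY else None


demo_plan = plan_packing(["a", "b", "c"], largest_first, next_free_offset)
print(demo_plan)
```

In this toy run, `b` and `c` fill the box and `a` is left unpacked, which corresponds to the "no space remains" stopping condition.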

GE-Grasp: Efficient Target-Oriented Grasping in Dense Clutter

Jul 25, 2022

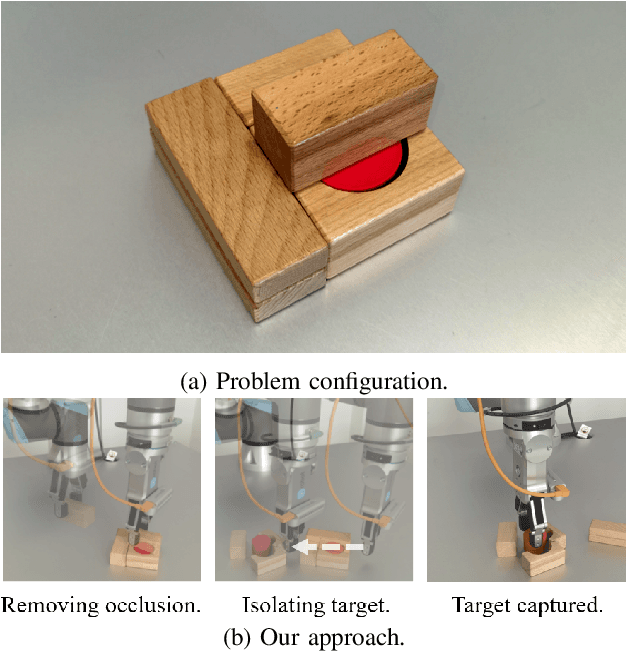

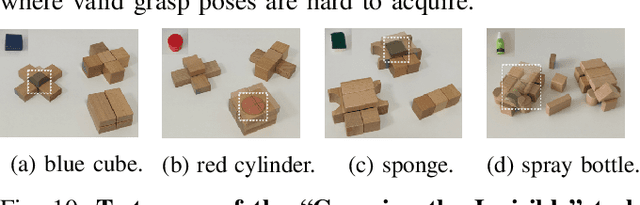

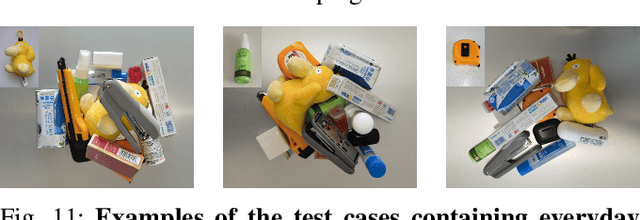

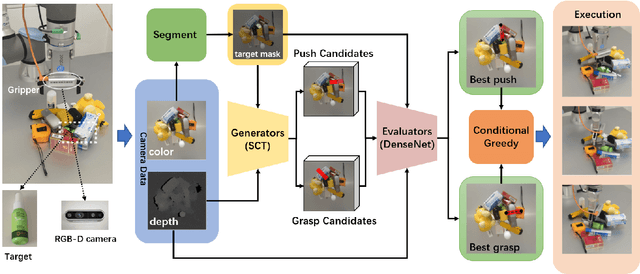

Abstract: Grasping in dense clutter is a fundamental skill for autonomous robots. However, the crowdedness and occlusions in cluttered scenes make it significantly difficult to generate valid, collision-free grasp poses, which results in low efficiency and high failure rates. To address these issues, we present a generic framework called GE-Grasp for robotic motion planning in dense clutter, where we leverage diverse action primitives for occluded object removal and present the generator-evaluator architecture to avoid spatial collisions. As a result, GE-Grasp is capable of grasping objects in dense clutter efficiently with promising success rates. Specifically, we define three action primitives: target-oriented grasping for target capturing, and pushing and nontarget-oriented grasping to reduce the crowdedness and occlusions. The generators provide various action candidates based on the spatial information. The evaluators then assess the candidates of the selected action primitive, and the optimal action is executed by the robot. Extensive experiments in simulated and real-world environments show that our approach outperforms the state-of-the-art methods for grasping in clutter with respect to motion efficiency and success rates. Moreover, we achieve performance in the real world comparable to that in the simulation environment, which indicates the strong generalization ability of GE-Grasp. Supplementary material is available at: https://github.com/CaptainWuDaoKou/GE-Grasp.
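As a rough illustration of the generator-evaluator idea in the abstract, the sketch below has generators propose candidates per action primitive and an evaluator score them, with the highest-scoring action selected. This is a simplified reading under stated assumptions: the names (`select_action`, `toy_generators`, `toy_scores`), the integer candidate ids, and the hand-set scores are all hypothetical, and the abstract does not say that candidates from all primitives are scored jointly as done here.

```python
from typing import Callable, Dict, List, Tuple

Action = Tuple[str, int]  # (primitive name, candidate id); hypothetical encoding


def select_action(
    generators: Dict[str, Callable[[], List[int]]],
    evaluate: Callable[[str, int], float],
) -> Action:
    """Return the candidate action with the highest evaluator score."""
    best_action = None
    best_score = float("-inf")
    for primitive, generate in generators.items():
        for candidate in generate():
            score = evaluate(primitive, candidate)
            if score > best_score:
                best_action = (primitive, candidate)
                best_score = score
    if best_action is None:
        raise ValueError("no action candidates were generated")
    return best_action


# Assumed toy data: three primitives named after those in the abstract.
toy_generators: Dict[str, Callable[[], List[int]]] = {
    "target_grasp": lambda: [0, 1],
    "push": lambda: [0],
    "nontarget_grasp": lambda: [0],
}

# Assumed scores; a real evaluator would be a learned model.
toy_scores: Dict[Action, float] = {
    ("target_grasp", 0): 0.2,
    ("target_grasp", 1): 0.7,
    ("push", 0): 0.5,
    ("nontarget_grasp", 0): 0.4,
}

chosen = select_action(toy_generators, lambda p, c: toy_scores[(p, c)])
print(chosen)
```

Separating proposal from scoring keeps each generator free to use primitive-specific spatial cues while a single selection rule decides what the robot executes.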
