Sibo Dong

ViSTA: Visual Storytelling using Multi-modal Adapters for Text-to-Image Diffusion Models

Jun 13, 2025

Abstract: Text-to-image diffusion models have achieved remarkable success, yet generating coherent image sequences for visual storytelling remains challenging. A key challenge is effectively leveraging all previous text-image pairs, referred to as history text-image pairs, which provide contextual information for maintaining consistency across frames. Existing auto-regressive methods condition on all past image-text pairs but require extensive training, while training-free subject-specific approaches ensure consistency but lack adaptability to narrative prompts. To address these limitations, we propose ViSTA, a multi-modal history adapter for text-to-image diffusion models. It consists of (1) a multi-modal history fusion module to extract relevant history features and (2) a history adapter to condition the generation on the extracted relevant features. We also introduce a salient history selection strategy during inference, where the most salient history text-image pair is selected, improving the quality of the conditioning. Furthermore, we propose to employ TIFA, a Visual Question Answering-based metric, to assess text-image alignment in visual storytelling, providing a more targeted and interpretable assessment of generated images. Evaluated on the StorySalon and FlintStonesSV datasets, our proposed ViSTA model generates images that are not only consistent across frames but also well aligned with the narrative text descriptions.

D-Feat Occlusions: Diffusion Features for Robustness to Partial Visual Occlusions in Object Recognition

Apr 08, 2025

Abstract: Applications of diffusion models for visual tasks have been quite noteworthy. This paper aims to make classification models more robust to occlusions in object recognition by proposing a pipeline that utilizes a frozen diffusion model. Diffusion features have demonstrated success in image generation and image completion while understanding image context. Occlusion can be posed as an image completion problem by deeming the pixels of the occluder to be "missing." We hypothesize that such features can help hallucinate object visual features behind occluding objects, and hence we propose using them to make models more robust to occlusion. We design experiments to include input-based augmentations as well as feature-based augmentations. Input-based augmentations involve finetuning on images where the occluder pixels are inpainted, and feature-based augmentations involve augmenting classification features with intermediate diffusion features. We demonstrate that our proposed use of diffusion-based features results in models that are more robust to partial object occlusions for both Transformers and ConvNets on ImageNet with simulated occlusions. We also propose a dataset that encompasses real-world occlusions and demonstrate that our method is more robust to partial object occlusions on it as well.

SEINE: SEgment-based Indexing for NEural information retrieval

Nov 27, 2023

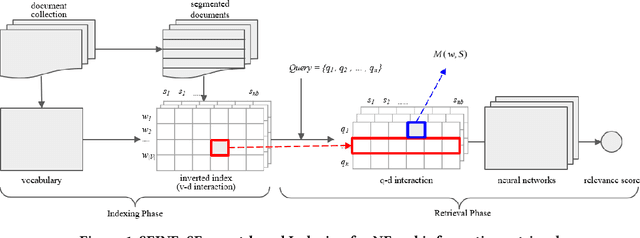

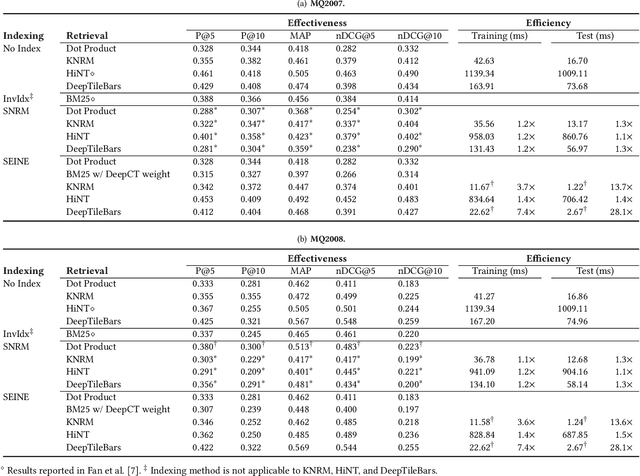

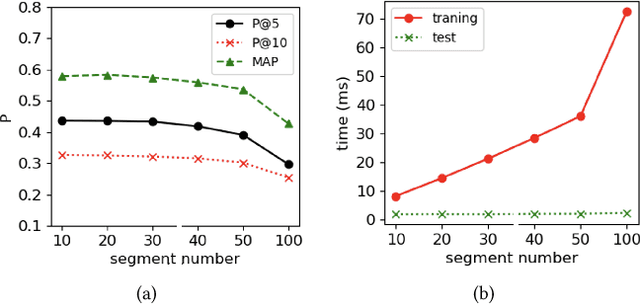

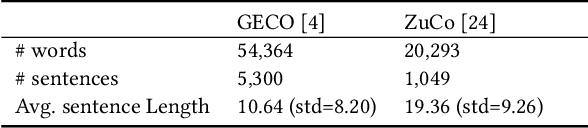

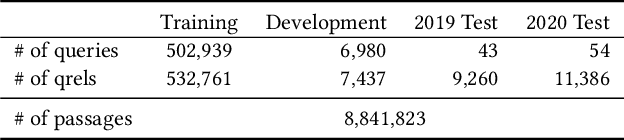

Abstract: Many early neural Information Retrieval (NeurIR) methods are re-rankers that rely on a traditional first-stage retriever because of expensive query-time computations. Recently, representation-based retrievers have gained much attention. They learn query and document representations separately, making it possible to pre-compute document representations offline and reduce the workload at query time. Both dense and sparse representation-based retrievers have been explored. However, these methods focus on finding the representation that best represents a text (aka metric learning), and the actual retrieval function responsible for similarity matching between query and document is kept to a minimum by using a dot product. One drawback is that, unlike a traditional term-level inverted index, the index formed by these embeddings cannot be easily re-used by another retrieval method. Another drawback is that keeping the interaction to a minimum hurts retrieval effectiveness. In contrast, interaction-based retrievers are known for their better retrieval effectiveness. In this paper, we propose a novel SEgment-based Neural Indexing method, SEINE, which provides a general indexing framework that can flexibly support a variety of interaction-based neural retrieval methods. We emphasize a careful decomposition of common components in existing neural retrieval methods and propose to use a segment-level inverted index to store the atomic query-document interaction values. Experiments on the LETOR MQ2007 and MQ2008 datasets show that our indexing method can accelerate multiple neural retrieval methods by up to 28 times without sacrificing much effectiveness.
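
The idea of a segment-level inverted index can be illustrated with a minimal Python sketch. This is not the SEINE implementation: the function names (`split_segments`, `build_index`, `score`), the whitespace tokenizer, the even split into segments, and the use of per-segment term frequency as the stored "interaction value" are all assumptions made for illustration. The abstract does not specify which values SEINE stores or how segments are formed.

```python
from collections import defaultdict


def split_segments(tokens, num_segments):
    """Split a token list into at most num_segments contiguous segments."""
    size = max(1, -(-len(tokens) // num_segments))  # ceiling division
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


def build_index(docs, num_segments=2):
    """Map term -> {doc_id: [value per segment]}.

    The stored value is the term frequency inside the segment, a stand-in
    (assumption) for the interaction values a neural retriever would use.
    """
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        segments = split_segments(text.lower().split(), num_segments)
        for seg_id, segment in enumerate(segments):
            for term in segment:
                values = index[term].setdefault(doc_id, [0.0] * len(segments))
                values[seg_id] += 1.0
    return index


def score(index, query):
    """Sum the precomputed segment values of every query term, per document."""
    scores = defaultdict(float)
    for term in query.lower().split():
        for doc_id, values in index.get(term, {}).items():
            scores[doc_id] += sum(values)
    # Highest score first; ties broken by document id.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


docs = {
    "d1": "neural retrieval with neural index",
    "d2": "inverted index for retrieval",
}
index = build_index(docs, num_segments=2)
ranking = score(index, "neural index")
```

Because the per-segment values are computed offline, query-time work reduces to lookups and an aggregation step; a different retrieval method could swap in its own aggregation over the same stored values.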

GazBy: Gaze-Based BERT Model to Incorporate Human Attention in Neural Information Retrieval

Jul 04, 2022

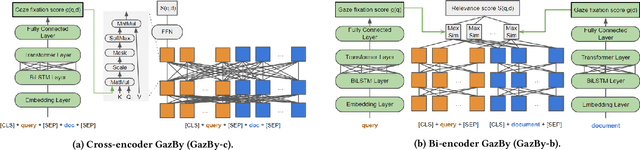

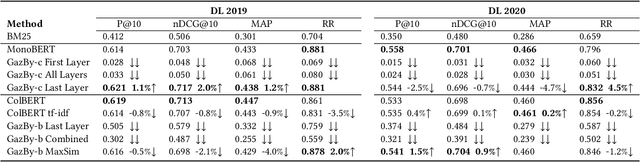

Abstract: This paper investigates whether human gaze signals can be leveraged to improve state-of-the-art search engine performance and how to incorporate this new input signal, marked by human attention, into existing neural retrieval models. We propose GazBy (**Gaz**e-based **B**ert model for document relevanc**y**), a light-weight joint model that integrates human gaze fixation estimation into transformer models to predict document relevance, incorporating more nuanced information about cognitive processing into information retrieval (IR). We evaluate our model on the Text Retrieval Conference (TREC) Deep Learning (DL) 2019 and 2020 Tracks. Our experiments show encouraging results and illustrate the effective and ineffective entry points for using human gaze to help transformer-based neural retrievers. With the rise of virtual reality (VR) and augmented reality (AR), human gaze data will become more available. We hope this work serves as a first step toward using gaze signals in modern neural search engines.
