Siavash Alemzadeh

Adaptive Traffic Control with Deep Reinforcement Learning: Towards State-of-the-art and Beyond

Jul 21, 2020

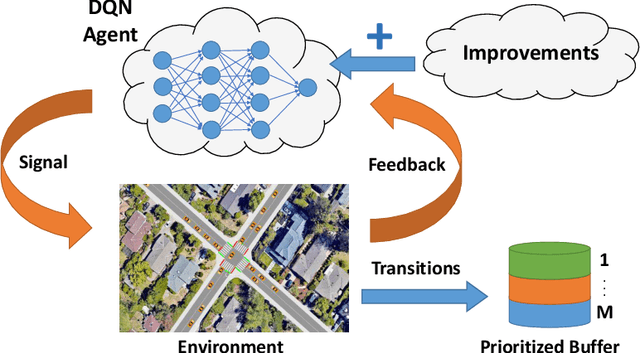

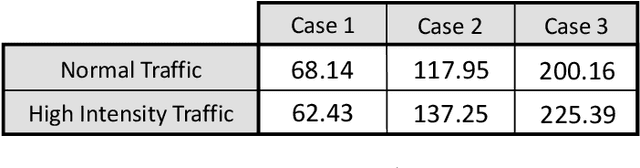

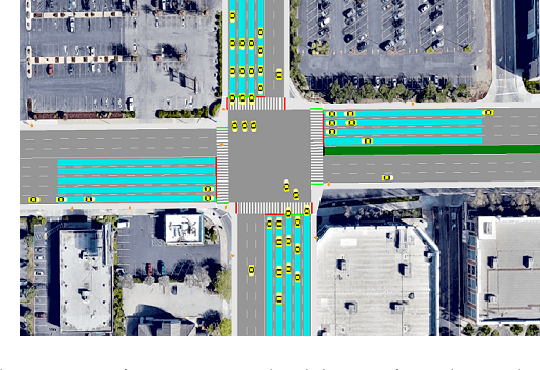

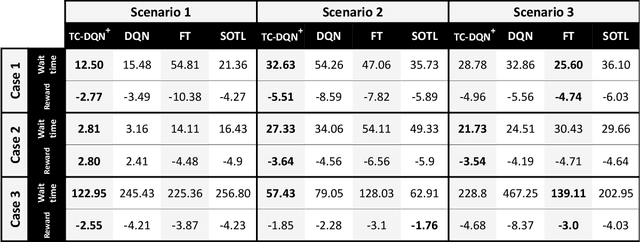

Abstract: In this work, we study adaptive data-guided traffic planning and control using Reinforcement Learning (RL). We shift from the plain use of classic methods towards the state of the art in the deep RL community. We embed several recent techniques in our algorithm that improve the original Deep Q-Networks (DQN) for discrete control and discuss the traffic-related interpretations that follow. We propose a novel DQN-based algorithm for Traffic Control (called TC-DQN+) as a tool for fast and more reliable traffic decision-making. We introduce a new form of reward function, which is further discussed using illustrative examples with comparisons to traditional traffic control methods.

Deep Learning-based Resource Allocation for Infrastructure Resilience

Jul 12, 2020

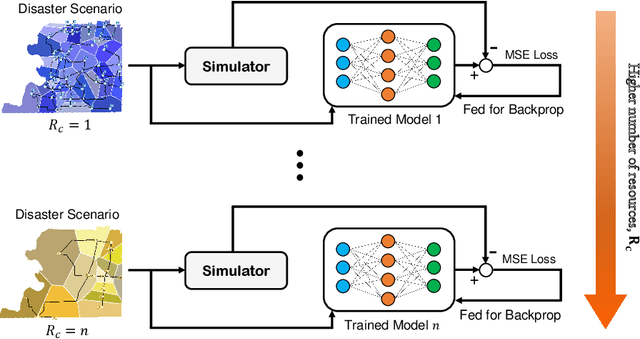

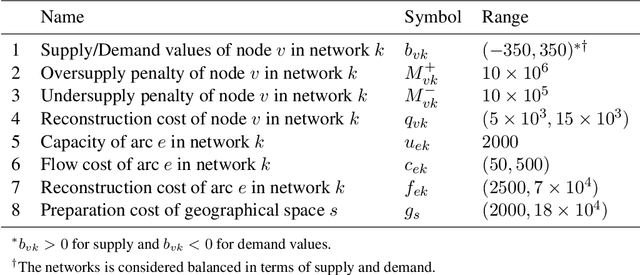

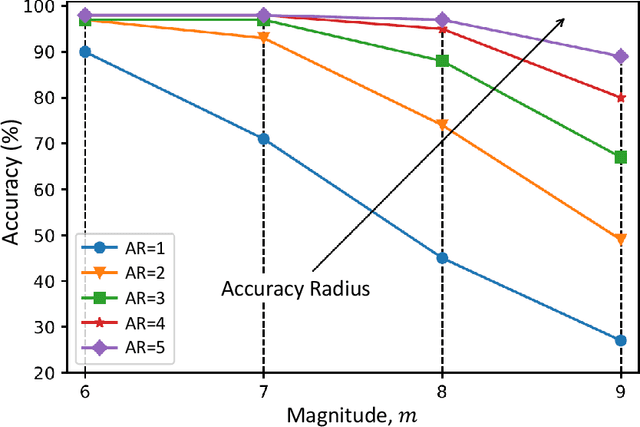

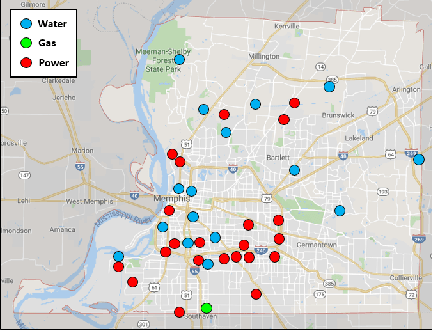

Abstract: From an optimization point of view, resource allocation is one of the cornerstones of research for addressing limiting factors commonly arising in applications such as power outages and traffic jams. In this paper, we take a data-driven approach to estimate an optimal nodal restoration sequence for immediate recovery of infrastructure networks after natural disasters such as earthquakes. We generate data from td-INDP, a high-fidelity simulator of optimal restoration strategies for interdependent networks, and employ deep neural networks to approximate those strategies. Although the underlying problem is NP-complete, the restoration sequences obtained by our method are observed to be nearly optimal. In addition, by training multiple models (the so-called estimators) for a variety of resource availability levels, our proposed method balances a trade-off between resource utilization and restoration time. Decision-makers can use our trained models to allocate resources more efficiently after contingencies and, in turn, improve community resilience. Besides their predictive power, such trained estimators unravel the effect of interdependencies among different nodal functionalities in the restoration strategies. We showcase our methodology on the real-world interdependent infrastructure of Shelby County, TN.

Online Regulation of Unstable LTI Systems from a Single Trajectory

Jun 09, 2020

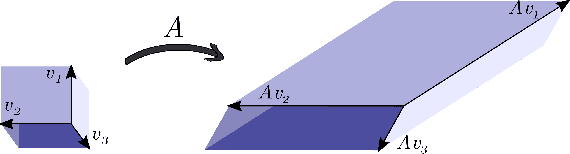

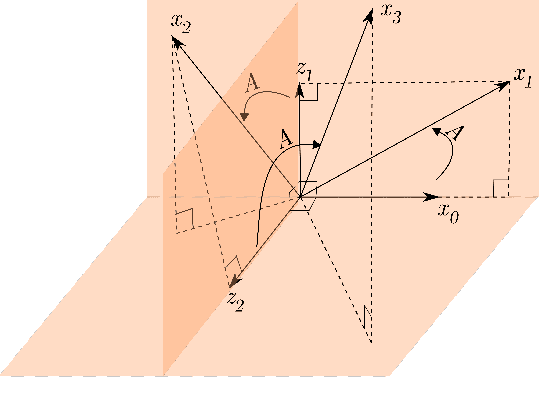

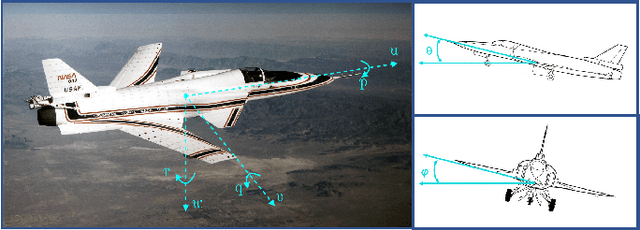

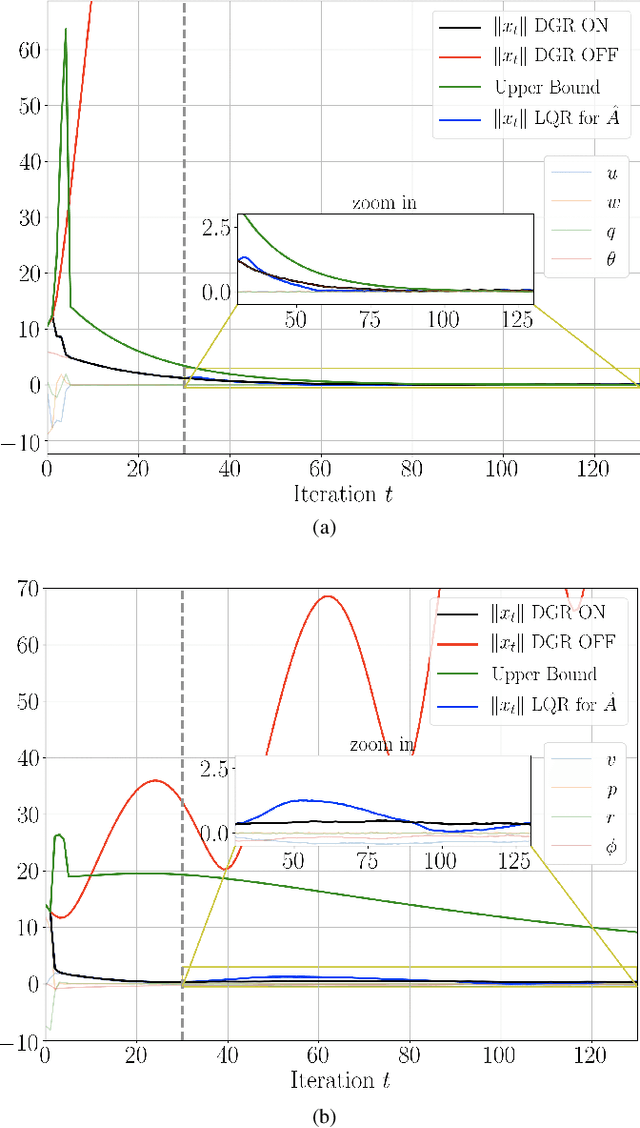

Abstract: Recently, data-driven methods for control of dynamic systems have received considerable attention in system theory and machine learning, as they provide a mechanism for feedback synthesis from observed time-series data. However, learning, say through direct policy updates, often requires assumptions such as knowing a priori that the initial policy (gain) is stabilizing, e.g., when the open-loop system is stable. In this paper, we examine online regulation of (possibly unstable) partially unknown linear systems with no a priori assumptions on the initial controller. First, we introduce and characterize the notion of "regularizability" for linear systems, which gauges the capacity of a system to be regulated in finite time, in contrast to its asymptotic behavior (commonly characterized by stabilizability/controllability). Next, having access only to the input matrix, we propose the Data-Guided Regulation (DGR) synthesis that, as its name suggests, regulates the underlying states while also generating informative data that can subsequently be used for data-driven stabilization or system identification (sysID). The analysis is also related, in spirit, to the spectrum and the "instability number" of the underlying linear system, a novel geometric property studied in this work. We further elucidate our results by considering special structures for system parameters, as well as by boosting the performance of the algorithm via a rank-one matrix update that uses the discrete nature of data collection in the problem setup. Finally, we demonstrate the utility of the proposed approach via an example involving direct (online) regulation of the X-29 aircraft.
