Shuyang Lin

FOAM: A General Frequency-Optimized Anti-Overlapping Framework for Overlapping Object Perception

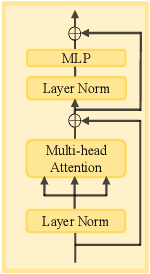

Jun 16, 2025

Abstract: Overlapping object perception aims to decouple the randomly overlapping foreground-background features, extracting foreground features while suppressing background features, which holds significant application value in fields such as security screening and medical auxiliary diagnosis. Despite some research efforts to tackle the challenge of overlapping object perception, most solutions are confined to the spatial domain. Through frequency domain analysis, we observe that the degradation of contours and textures due to the overlapping phenomenon can be intuitively reflected in the magnitude spectrum. Based on this observation, we propose a general Frequency-Optimized Anti-Overlapping Framework (FOAM) to assist the model in extracting more texture and contour information, thereby enhancing the ability for anti-overlapping object perception. Specifically, we design the Frequency Spatial Transformer Block (FSTB), which can simultaneously extract features from both the frequency and spatial domains, helping the network capture more texture features from the foreground. In addition, we introduce the Hierarchical De-Corrupting (HDC) mechanism, which aligns adjacent features in the separately constructed base branch and corruption branch using a specially designed consistent loss during the training phase. This mechanism suppresses the response to irrelevant background features of FSTBs, thereby improving the perception of foreground contour. We conduct extensive experiments to validate the effectiveness and generalization of the proposed FOAM, which further improves the accuracy of state-of-the-art models on four datasets, specifically for the three overlapping object perception tasks: Prohibited Item Detection, Prohibited Item Segmentation, and Pneumonia Detection. The code will be open source once the paper is accepted.
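The observation that contour degradation shows up in the magnitude spectrum can be illustrated with a toy 1D signal. The sketch below is not the FOAM implementation: it uses a circular box blur as a stand-in for overlap-induced degradation (an assumption), and the helper names `magnitude_spectrum`, `high_freq_energy`, and `box_blur` are hypothetical. It only shows that softening an edge removes energy from the high-frequency bins while leaving the DC component unchanged.

```python
import cmath


def magnitude_spectrum(signal):
    """Return |DFT(signal)| using a naive O(n^2) discrete Fourier transform."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                for t, x in enumerate(signal)))
        for k in range(n)
    ]


def high_freq_energy(signal, cutoff):
    """Sum squared magnitudes over bins k with cutoff <= k <= n - cutoff."""
    n = len(signal)
    mags = magnitude_spectrum(signal)
    return sum(m * m for k, m in enumerate(mags) if cutoff <= k <= n - cutoff)


def box_blur(signal, radius=1):
    """Circular moving average; an assumed stand-in for contour degradation."""
    n = len(signal)
    width = 2 * radius + 1
    return [
        sum(signal[(i + d) % n] for d in range(-radius, radius + 1)) / width
        for i in range(n)
    ]


sharp = [0.0] * 8 + [1.0] * 8      # a clean step edge (a "contour")
blurred = box_blur(sharp)          # the same edge after degradation

# Fraction of high-frequency energy that survives the blur.
ratio = high_freq_energy(blurred, 4) / high_freq_energy(sharp, 4)
print(f"high-frequency energy kept after blur: {ratio:.3f}")
```

A real pipeline would apply a 2D FFT to image features; the 1D case is used here only to keep the example dependency-free.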

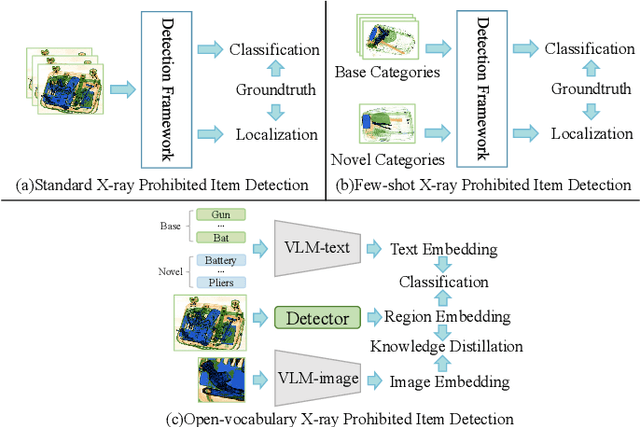

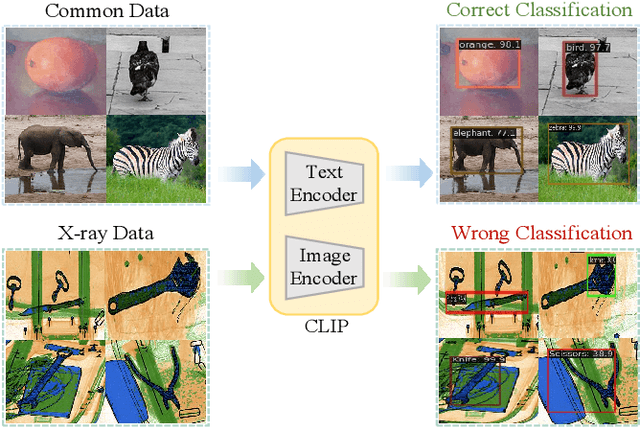

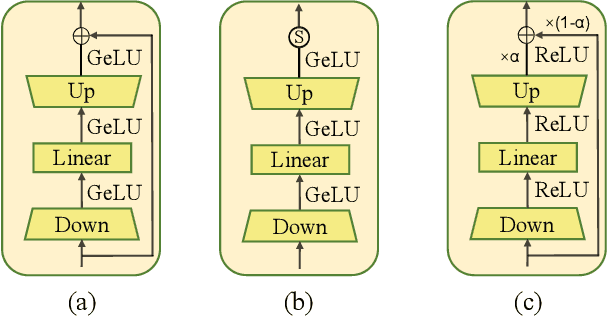

Open-Vocabulary X-ray Prohibited Item Detection via Fine-tuning CLIP

Jun 16, 2024

Abstract: X-ray prohibited item detection is an essential component of security checks, and the categories of prohibited items are continuously increasing in accordance with the latest laws. Previous works all focus on closed-set scenarios, which can only recognize the known categories used for training and often require time-consuming and labor-intensive annotation when learning novel categories, resulting in limited real-world applicability. Although the success of vision-language models (e.g., CLIP) provides a new perspective for open-set X-ray prohibited item detection, directly applying CLIP to the X-ray domain leads to a sharp performance drop due to the domain shift between X-ray data and the general data used for pre-training CLIP. To address these challenges, we introduce the distillation-based open-vocabulary object detection (OVOD) task into the X-ray security inspection domain by extending CLIP to learn visual representations in the X-ray domain, aiming to detect novel prohibited item categories beyond the base categories on which the detector is trained. Specifically, we propose an X-ray feature adapter and apply it to CLIP within the OVOD framework to develop the OVXD model. The X-ray feature adapter contains three adapter submodules with a bottleneck architecture; it is simple, yet it efficiently integrates new knowledge of the X-ray domain with the original knowledge, further bridging the domain gap and promoting alignment between X-ray images and textual concepts. Extensive experiments conducted on the PIXray and PIDray datasets demonstrate that the proposed method performs favorably against other baseline OVOD methods in detecting novel categories in the X-ray scenario. It outperforms the previous best results by 15.2 AP50 and 1.5 AP50 on PIXray and PIDray, achieving 21.0 AP50 and 27.8 AP50, respectively.
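A bottleneck adapter, in its generic form, projects a feature down to a small hidden size, applies a nonlinearity, projects back up, and adds the result to the input. The sketch below shows that generic pattern only; the abstract does not specify the layer sizes, activation, initialization, or where the three submodules attach to CLIP, so the class `BottleneckAdapter`, the ReLU, and the zero-initialized up-projection are all assumptions made for illustration.

```python
import random


def matvec(weights, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


class BottleneckAdapter:
    """Generic residual bottleneck adapter (hypothetical, not the OVXD code)."""

    def __init__(self, dim, hidden, seed=0):
        rng = random.Random(seed)
        # Down-projection: dim -> hidden, small random weights.
        self.down = [[rng.gauss(0.0, 0.02) for _ in range(dim)]
                     for _ in range(hidden)]
        # Up-projection: hidden -> dim, zero-initialized (an assumed choice)
        # so the adapter starts as an identity map over the frozen features.
        self.up = [[0.0] * hidden for _ in range(dim)]

    def __call__(self, x):
        h = [max(0.0, v) for v in matvec(self.down, x)]   # ReLU bottleneck
        delta = matvec(self.up, h)                        # learned correction
        return [xi + di for xi, di in zip(x, delta)]      # residual connection


adapter = BottleneckAdapter(dim=8, hidden=2)
feature = [1.0] * 8
adapted = adapter(feature)   # equals `feature` until `up` is trained
```

Starting from an identity map is one common way to add domain knowledge without disturbing pre-trained features at the beginning of fine-tuning; whether OVXD does this is not stated in the abstract.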

AO-DETR: Anti-Overlapping DETR for X-Ray Prohibited Items Detection

Mar 07, 2024

Abstract: Prohibited item detection in X-ray images is one of the most essential and effective methods employed in security inspection scenarios. Considering the significant overlapping phenomenon in X-ray prohibited item images, we propose an Anti-Overlapping DETR (AO-DETR) based on one of the state-of-the-art general object detectors, DINO. Specifically, to address the feature coupling issue caused by overlapping phenomena, we introduce the Category-Specific One-to-One Assignment (CSA) strategy to constrain category-specific object queries in predicting prohibited items of fixed categories, which can enhance their ability to extract features specific to prohibited items of a particular category from the overlapping foreground-background features. To address the edge blurring problem caused by overlapping phenomena, we propose the Look Forward Densely (LFD) scheme, which improves the localization accuracy of reference boxes in mid-to-high-level decoder layers and enhances the final layer's ability to locate blurry edges. Similar to DINO, our AO-DETR provides two different versions with distinct backbones, tailored to meet diverse application requirements. Extensive experiments on the PIXray and OPIXray datasets demonstrate that the proposed method surpasses state-of-the-art object detectors, indicating its potential applications in the field of prohibited item detection. The source code will be released at https://github.com/Limingyuan001/AO-DETR-test.
