Shuwei Xing

Virtual Fluoroscopy for Interventional Guidance using Magnetic Tracking

May 20, 2025

Abstract:Purpose: In conventional fluoroscopy-guided interventions, the 2D projective nature of X-ray imaging limits depth perception and leads to prolonged radiation exposure. Virtual fluoroscopy, combined with spatially tracked surgical instruments, is a promising strategy to mitigate these limitations. While magnetic tracking shows unique advantages, particularly in tracking flexible instruments, it remains under-explored due to interference from ferromagnetic materials in the C-arm room. This work proposes a virtual fluoroscopy workflow that integrates magnetic tracking and demonstrates its clinical efficacy. Methods: An automatic virtual fluoroscopy workflow was developed using a radiolucent tabletop field generator prototype. Specifically, we developed a fluoro-CT registration approach with automatic 2D-3D shared landmark correspondence to establish the C-arm-patient relationship, along with a general C-arm modelling approach to calculate desired poses and generate corresponding virtual fluoroscopic images. Results: Testing on a dataset with views ranging from RAO 90 degrees to LAO 90 degrees, simulated fluoroscopic images showed visually imperceptible differences from the real ones, achieving a mean target projection distance error of 1.55 mm. An endoleak phantom insertion experiment highlighted the effectiveness of simulating multiplanar views with real-time instrument overlays, achieving a mean needle tip error of 3.42 mm. Conclusions: Results demonstrated the efficacy of virtual fluoroscopy integrated with magnetic tracking, improving depth perception during navigation. The broad capture range of virtual fluoroscopy showed promise in improving users' understanding of X-ray imaging principles, facilitating more efficient image acquisition.
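As an illustration of what a projection distance error measures, the following minimal sketch projects 3D targets onto a detector plane under an idealized pinhole model and averages the 2D distance between the estimated and reference projections. The function names, the source-at-origin geometry, and the `sdd_mm` parameter are assumptions made for this example; the abstract does not specify the authors' C-arm model or evaluation code.

```python
import math

def project_pinhole(point_mm, sdd_mm):
    """Project a 3D point (in an assumed C-arm frame with the X-ray source
    at the origin and the detector plane at z = sdd_mm) onto the detector.
    Returns detector-plane coordinates in mm."""
    x, y, z = point_mm
    if z <= 0:
        raise ValueError("point must lie in front of the source (z > 0)")
    return (sdd_mm * x / z, sdd_mm * y / z)

def mean_projection_distance(points_est, points_ref, sdd_mm):
    """Mean 2D distance (mm) between projections of corresponding 3D points,
    e.g. targets mapped by an estimated pose versus a reference pose."""
    if len(points_est) != len(points_ref) or not points_est:
        raise ValueError("need two non-empty point lists of equal length")
    total = 0.0
    for p_est, p_ref in zip(points_est, points_ref):
        u_e, v_e = project_pinhole(p_est, sdd_mm)
        u_r, v_r = project_pinhole(p_ref, sdd_mm)
        total += math.hypot(u_e - u_r, v_e - v_r)
    return total / len(points_est)
```

Because of the perspective division, a fixed 3D offset produces a larger detector-plane error for points closer to the source, so values of this kind are only comparable between setups with similar geometry.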

Towards Seamless Integration of Magnetic Tracking into Fluoroscopy-guided Interventions

Nov 12, 2024

Abstract:The 2D projective nature of X-ray radiography presents significant limitations in fluoroscopy-guided interventions, particularly the loss of depth perception and prolonged radiation exposure. Integrating magnetic trackers into these workflows is promising; however, it remains challenging and under-explored in current research and practice. To address this, we employed a radiolucent magnetic field generator (FG) prototype as a foundational step towards seamless magnetic tracking (MT) integration. A two-layer FG mounting frame was designed for compatibility with various C-arm X-ray systems, ensuring smooth installation and optimal tracking accuracy. To overcome technical challenges, including accurate C-arm pose estimation, robust fluoro-CT registration, and 3D navigation, we proposed the incorporation of external aluminum fiducials without disrupting conventional workflows. Experimental evaluation showed no clinically significant impact of the aluminum fiducials and the C-arm on MT accuracy. Our fluoro-CT registration demonstrated high accuracy (mean projection distance approximately 0.7 mm), robustness (wide capture range), and generalizability across local and public datasets. In a phantom targeting experiment, needle insertion error was between 2 mm and 3 mm, with real-time guidance using enhanced 2D and 3D navigation. Overall, our results demonstrated the efficacy and clinical applicability of the MT-assisted approach. To the best of our knowledge, this is the first study to integrate a radiolucent FG into a fluoroscopy-guided workflow.

Deep Regression 2D-3D Ultrasound Registration for Liver Motion Correction in Focal Tumor Thermal Ablation

Oct 03, 2024

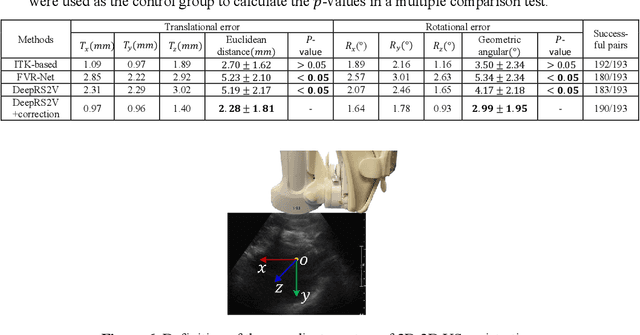

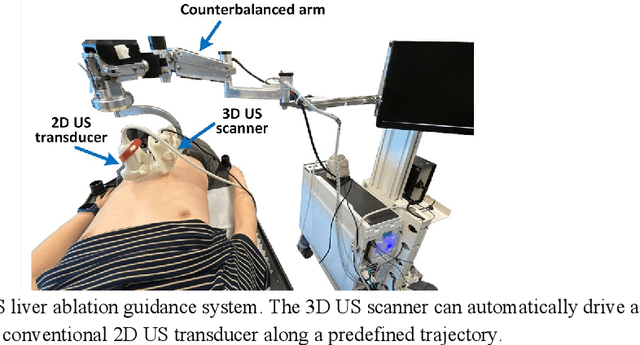

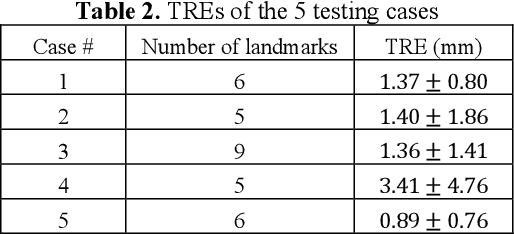

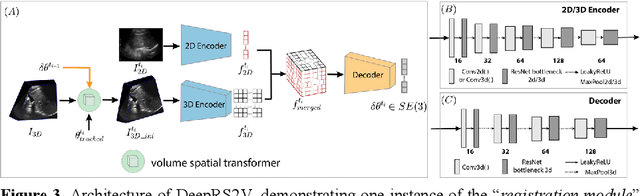

Abstract:Liver tumor ablation procedures require accurate placement of the needle applicator at the tumor centroid. The lower-cost and real-time nature of ultrasound (US) has advantages over computed tomography (CT) for applicator guidance; however, in some patients, liver tumors may be occult on US, and tumor mimics can make lesion identification challenging. Image registration techniques can aid in interpreting anatomical details and identifying tumors, but their clinical application has been hindered by the tradeoff between alignment accuracy and runtime performance, particularly when compensating for liver motion due to patient breathing or movement. Therefore, we propose a 2D-3D US registration approach to enable intra-procedural alignment that mitigates errors caused by liver motion. Specifically, our approach can correlate imbalanced 2D and 3D US image features and uses continuous 6D rotation representations to enhance the model's training stability. The dataset was divided into 2388, 196 and 193 image pairs for training, validation and testing, respectively. Our approach achieved a mean Euclidean distance error of 2.28 mm $\pm$ 1.81 mm and a mean geodesic angular error of 2.99$^{\circ}$ $\pm$ 1.95$^{\circ}$, with a runtime of 0.22 seconds per 2D-3D US image pair. These results demonstrate that our approach can achieve accurate alignment and clinically acceptable runtime, indicating potential for clinical translation.
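The two rotation-related quantities named in the abstract can be sketched as follows. This assumes the widely used construction in which a 6D vector is read as two 3D vectors and orthonormalized by Gram-Schmidt to form the first two columns of a rotation matrix, and it computes the geodesic angle from the trace of the relative rotation. The function names are hypothetical, and the authors' network and loss are not reproduced here.

```python
import math

def _normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / n for x in v]

def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def rotation_from_6d(r6):
    """Map a 6D vector (two stacked 3D vectors) to a 3x3 rotation matrix.
    Gram-Schmidt yields orthonormal b1, b2; b3 = b1 x b2 completes a
    right-handed basis. The basis vectors are stored as matrix columns."""
    a1, a2 = list(r6[:3]), list(r6[3:6])
    b1 = _normalize(a1)
    dot = sum(x * y for x, y in zip(b1, a2))
    b2 = _normalize([y - dot * x for x, y in zip(b1, a2)])
    b3 = _cross(b1, b2)
    return [[b1[i], b2[i], b3[i]] for i in range(3)]

def geodesic_angle_deg(rot_a, rot_b):
    """Angle (degrees) of the relative rotation rot_a^T rot_b.
    trace(rot_a^T rot_b) equals the element-wise product sum."""
    trace = sum(rot_a[i][j] * rot_b[i][j] for i in range(3) for j in range(3))
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # guard rounding
    return math.degrees(math.acos(cos_theta))
```

For example, `rotation_from_6d([1, 0, 0, 0, 1, 0])` gives the identity, and the geodesic angle between it and `rotation_from_6d([0, 1, 0, -1, 0, 0])` (a quarter turn about the z-axis) is 90 degrees. Any 6D input whose two halves are not parallel maps to a valid rotation, which is the property that makes this parameterization convenient as a regression target.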

GRU-TV: Time- and velocity-aware GRU for patient representation on multivariate clinical time-series data

May 04, 2022

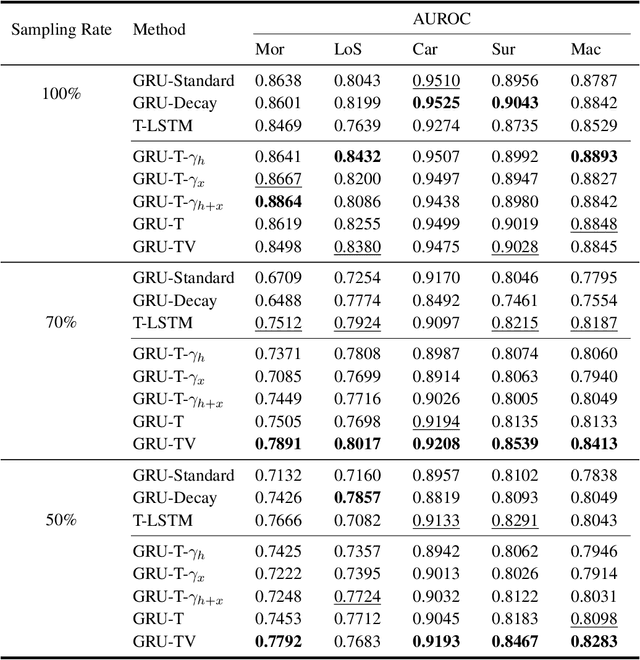

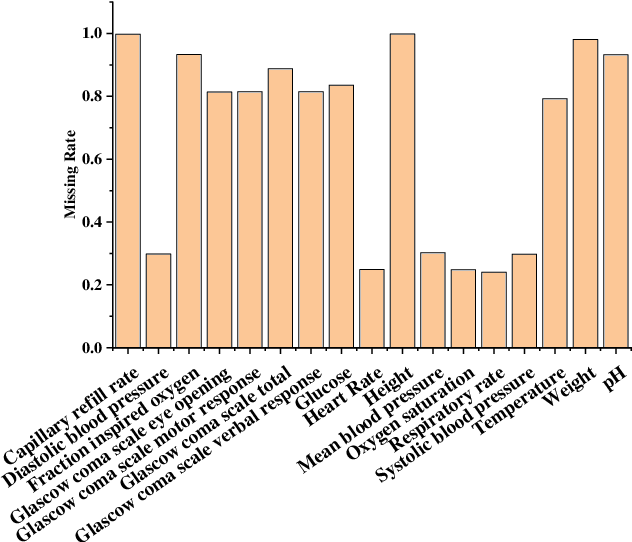

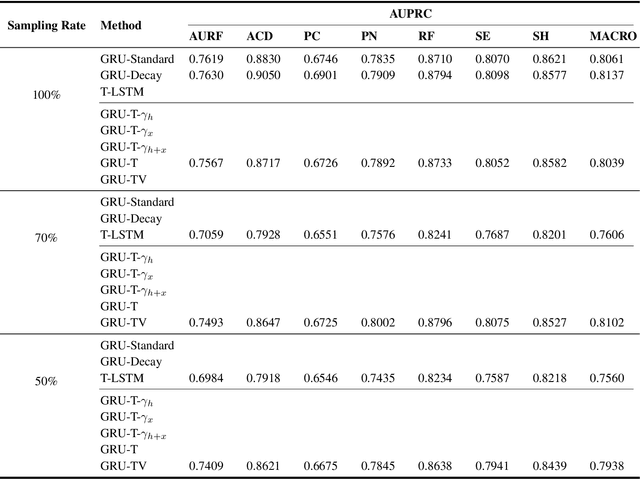

Abstract:Electronic health records (EHRs) provide a rich repository to track a patient's health status. EHRs seek to fully document the patient's physiological status, and include data that are high-dimensional, heterogeneous, and multimodal. The significant differences in the sampling frequency of clinical variables can result in high missing rates and uneven time intervals between adjacent records in the multivariate clinical time-series data extracted from EHRs. Current studies using clinical time-series data for patient characterization view the patient's physiological status as a discrete process described by sporadically collected values, while the dynamics of a patient's physiological status are time-continuous. In addition, the recurrent neural network (RNN) models widely used for patient representation learning lack the perception of time intervals and velocity, which limits the ability of the model to represent the physiological status of the patient. In this paper, we propose an improved gated recurrent unit (GRU), namely time- and velocity-aware GRU (GRU-TV), for patient representation learning of clinical multivariate time-series data in a time-continuous manner. In the proposed GRU-TV, neural ordinary differential equations (ODEs) and a velocity perception mechanism are used to perceive the time interval between records in the time-series data and the rate of change of the patient's physiological status, respectively. Experimental results on two real-world clinical EHR datasets (PhysioNet2012, MIMIC-III) show that GRU-TV achieves state-of-the-art performance in computer-aided diagnosis (CAD) tasks, and is more advantageous in processing sampled data.
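To make the two quantities in this abstract concrete (the time interval between records and the rate of change), here is a small sketch that computes them directly on one irregularly sampled variable with missing entries. This is not the GRU-TV mechanism, which the abstract describes as operating through neural ODEs and a velocity perception mechanism inside the model; the function name and the finite-difference estimate are assumptions used only for illustration.

```python
def intervals_and_velocities(times, values):
    """For one clinical variable, return (time, dt, velocity) for every
    observed value after the first, skipping missing entries (None).
    dt is the gap since the previous observed value; velocity is the
    finite-difference rate of change over that gap.
    Assumes `times` is strictly increasing."""
    out = []
    last_t = last_v = None
    for t, v in zip(times, values):
        if v is None:  # missing measurement at this timestamp
            continue
        if last_t is not None:
            dt = t - last_t
            out.append((t, dt, (v - last_v) / dt))
        last_t, last_v = t, v
    return out
```

With `times = [0.0, 1.0, 1.5, 4.0]` and `values = [80.0, None, 83.0, 78.0]`, the two observed transitions span gaps of 1.5 and 2.5 time units, which shows how a missing record widens the interval that a time-aware model has to account for.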
