Shujun Bi

Error bound of local minima and KL property of exponent 1/2 for squared F-norm regularized factorization

Nov 11, 2019

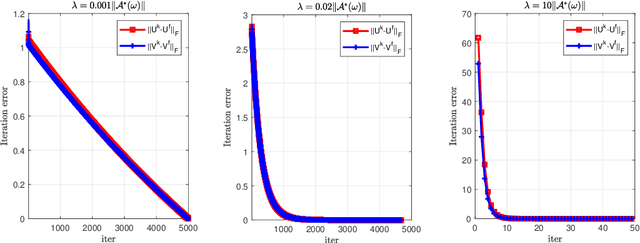

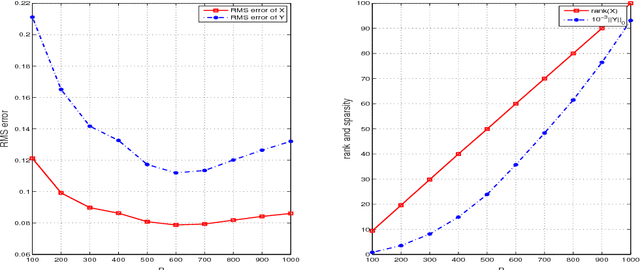

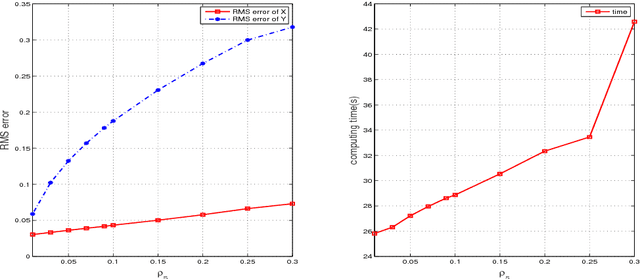

Abstract: This paper is concerned with the squared F(robenius)-norm regularized factorization form of noisy low-rank matrix recovery problems. Under a suitable assumption on the restricted condition number of the Hessian matrix of the loss function, we establish an error bound to the true matrix for those local minima whose rank does not exceed the rank of the true matrix. Then, for the least squares loss function, we establish the KL property of exponent 1/2 for the F-norm regularized factorization function over its global minimum set under a restricted strong convexity assumption. These theoretical findings are also confirmed by applying an accelerated alternating minimization method to the F-norm regularized factorization problem.
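
As a rough illustration of the kind of problem the abstract describes, the sketch below minimizes a squared F-norm regularized factorization objective by alternating exact block updates. It rests on several assumptions not taken from the paper: the loss is the fully observed least squares loss $\frac{1}{2}\|UV^\top - M\|_F^2$, the method is plain (not accelerated) alternating minimization, and the names `objective`, `alt_min`, `lam`, and the test data are hypothetical.

```python
import numpy as np

def objective(U, V, M, lam):
    """0.5*||U V^T - M||_F^2 + 0.5*lam*(||U||_F^2 + ||V||_F^2)."""
    resid = U @ V.T - M
    return 0.5 * np.sum(resid**2) + 0.5 * lam * (np.sum(U**2) + np.sum(V**2))

def alt_min(M, r, lam, iters=50, seed=0):
    """Plain alternating minimization (an assumed stand-in, not the paper's method)."""
    rng = np.random.default_rng(seed)
    n, m = M.shape
    U = rng.standard_normal((n, r))
    V = rng.standard_normal((m, r))
    I = np.eye(r)
    history = [objective(U, V, M, lam)]
    for _ in range(iters):
        # Exact minimizer over U with V fixed solves U (V^T V + lam I) = M V.
        U = np.linalg.solve(V.T @ V + lam * I, (M @ V).T).T
        # Exact minimizer over V with U fixed solves V (U^T U + lam I) = M^T U.
        V = np.linalg.solve(U.T @ U + lam * I, (M.T @ U).T).T
        history.append(objective(U, V, M, lam))
    return U, V, history

# Hypothetical test problem: a noisy rank-2 matrix.
rng = np.random.default_rng(1)
X_true = rng.standard_normal((30, 2)) @ rng.standard_normal((2, 20))
M = X_true + 0.01 * rng.standard_normal((30, 20))
U, V, history = alt_min(M, r=2, lam=0.1)
rel_err = np.linalg.norm(U @ V.T - X_true) / np.linalg.norm(X_true)
print(f"final objective {history[-1]:.4f}, relative error {rel_err:.4f}")
```

Because each block update is an exact minimization of a strongly convex subproblem, the recorded objective values cannot increase from one iteration to the next, which gives a simple sanity check on the implementation.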

KL property of exponent $1/2$ of $\ell_{2,0}$-norm and DC regularized factorizations for low-rank matrix recovery

Aug 24, 2019

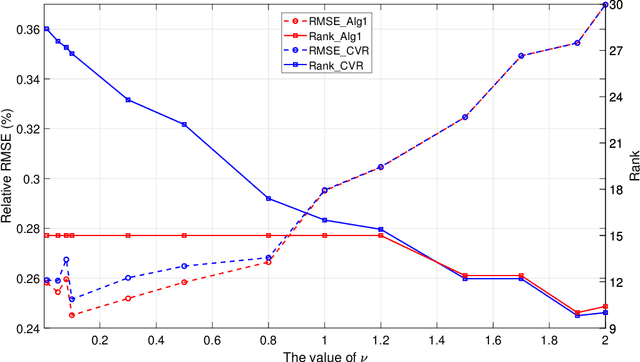

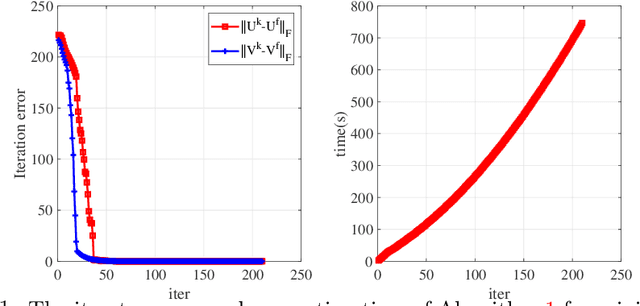

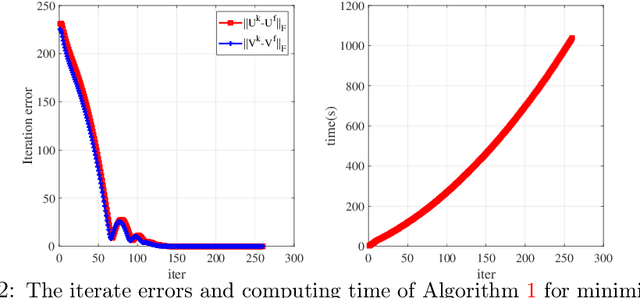

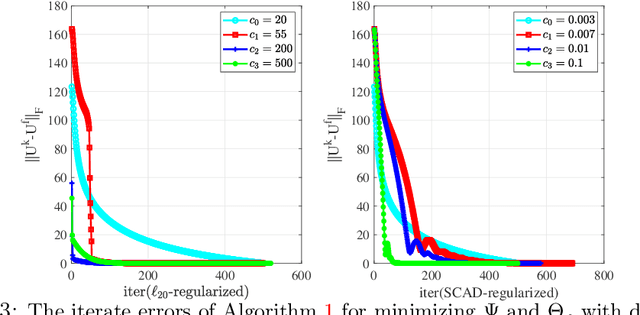

Abstract: This paper is concerned with the factorization form of the rank regularized loss minimization problem. To cater for the scenario in which only a coarse estimate of the rank of the true matrix is available, an $\ell_{2,0}$-norm regularization term is added to the factored loss function to reduce the rank adaptively; to account for the ambiguities in the factorization, a balanced term is then introduced. For the least squares loss, under a restricted condition number assumption on the sampling operator, we establish the KL property of exponent $1/2$ of the nonsmooth factored composite function and its equivalent DC reformulations on the set of their global minimizers. We also confirm the theoretical findings by applying a proximal linearized alternating minimization method to the regularized factorizations.
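
To make the $\ell_{2,0}$-norm concrete: it counts the nonzero columns of a factor, and its proximal mapping is a column-wise hard threshold, which is the kind of step a proximal linearized method would use. The sketch below is a minimal illustration under that reading; the function names `l20_norm` and `prox_l20` and the sample matrix are hypothetical and not taken from the paper.

```python
import numpy as np

def l20_norm(U):
    """Number of nonzero columns of U."""
    return int(np.sum(np.linalg.norm(U, axis=0) > 0))

def prox_l20(Z, lam):
    """A minimizer of 0.5*||U - Z||_F^2 + lam*||U||_{2,0} over U.

    The problem separates by column: keeping column z_j costs lam,
    zeroing it costs 0.5*||z_j||^2, so keep z_j iff ||z_j|| > sqrt(2*lam).
    """
    keep = np.linalg.norm(Z, axis=0) > np.sqrt(2.0 * lam)
    return Z * keep  # broadcasting zeroes out the dropped columns

# Hypothetical input: column norms are 5, about 0.14, and 2.
Z = np.array([[3.0, 0.1, 0.0],
              [4.0, 0.1, 2.0]])
P = prox_l20(Z, lam=0.5)  # threshold sqrt(2*0.5) = 1 drops the middle column
print(P, l20_norm(P))
```

Since a zero column of $U$ contributes nothing to $UV^\top$, the number of nonzero columns bounds the rank of the product, which is how this penalty can lower the rank when the initial rank estimate is too large.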

Equivalent Lipschitz surrogates for zero-norm and rank optimization problems

Apr 30, 2018

Abstract: This paper proposes a mechanism to produce equivalent Lipschitz surrogates for zero-norm and rank optimization problems by means of the global exact penalty for their equivalent mathematical programs with an equilibrium constraint (MPECs). Specifically, we reformulate these combinatorial problems as equivalent MPECs via the variational characterization of the zero-norm and rank function, show that their penalized problems, obtained by moving the equilibrium constraint into the objective, are global exact penalties, and obtain the equivalent Lipschitz surrogates by eliminating the dual variable in the global exact penalty. These surrogates, which include the popular SCAD function in statistics, are also differences of two convex functions (D.C.) if the function and constraint set involved in the zero-norm and rank optimization problems are convex. We illustrate an application by designing a multi-stage convex relaxation approach to the rank plus zero-norm regularized minimization problem.
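
The D.C. structure mentioned for SCAD can be checked directly. The sketch below uses the standard SCAD penalty from the statistics literature with parameters `lam` and `a > 2`, which may differ in scaling from the surrogate derived in the paper, and writes it as $\lambda|t|$ minus a convex function. The names `scad` and `scad_concave_part` are hypothetical.

```python
def scad(t, lam, a=3.7):
    """Standard SCAD penalty (requires a > 2)."""
    t = abs(t)
    if t <= lam:
        return lam * t
    if t <= a * lam:
        return (2 * a * lam * t - t * t - lam * lam) / (2 * (a - 1))
    return lam * lam * (a + 1) / 2

def scad_concave_part(t, lam, a=3.7):
    """Convex function h with scad(t) = lam*|t| - h(t)."""
    t = abs(t)
    if t <= lam:
        return 0.0
    if t <= a * lam:
        return (t - lam) ** 2 / (2 * (a - 1))
    return lam * t - lam * lam * (a + 1) / 2

# Check the decomposition at points in each of the three regimes.
for t in (-5.0, -2.0, 0.0, 0.5, 1.0, 2.0, 3.7, 10.0):
    gap = scad(t, 1.0) - (1.0 * abs(t) - scad_concave_part(t, 1.0))
    print(t, scad(t, 1.0), abs(gap) < 1e-12)
```

The subtracted function has a nondecreasing derivative (0, then $(t-\lambda)/(a-1)$, then $\lambda$ for $t \ge 0$), so it is convex, and the penalty is a difference of two convex functions.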
