Shoichi Naito

Ricoh Company, Ltd, Tohoku University, RIKEN

Flee the Flaw: Annotating the Underlying Logic of Fallacious Arguments Through Templates and Slot-filling

Jun 18, 2024

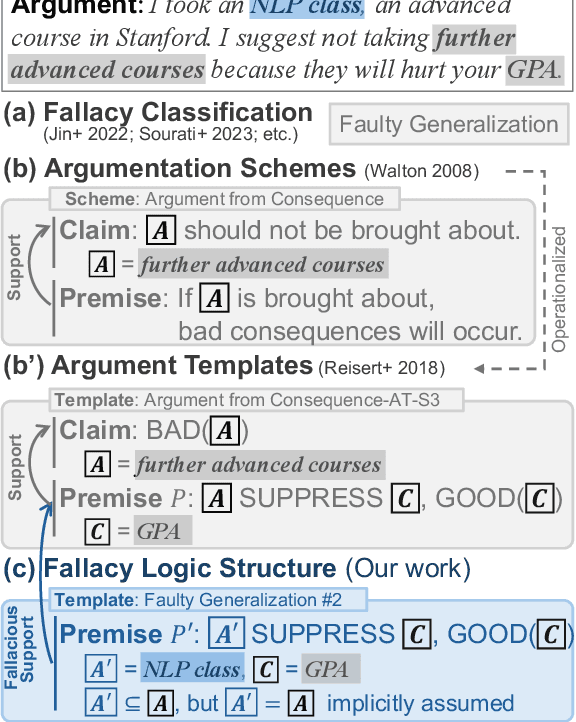

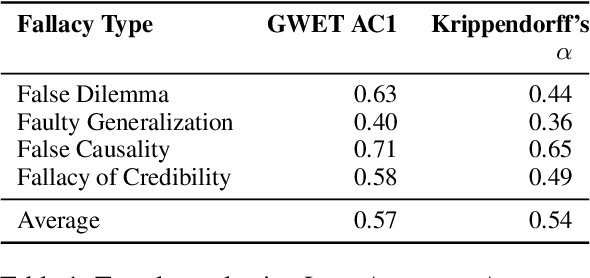

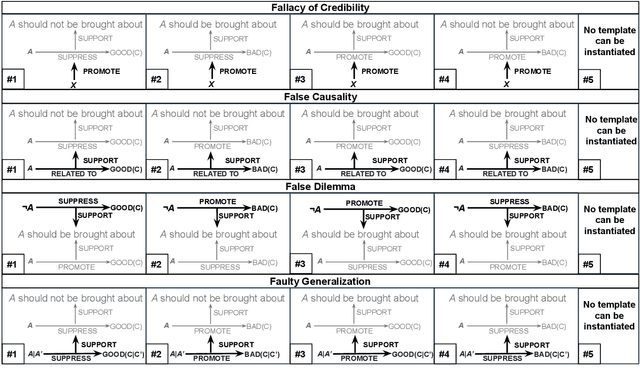

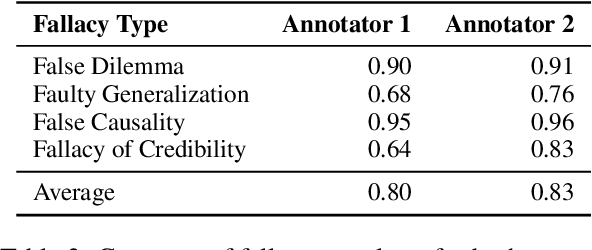

Abstract: Prior research in computational argumentation has mainly focused on scoring the quality of arguments, with less attention on explicating logical errors. In this work, we introduce four sets of explainable templates for common informal logical fallacies designed to explicate a fallacy's implicit logic. Using our templates, we conduct an annotation study on 400 fallacious arguments taken from the LOGIC dataset and achieve a high agreement score (Krippendorff's alpha of 0.54) and reasonable coverage (0.83). Finally, we conduct an experiment for detecting the structure of fallacies and discover that state-of-the-art language models struggle with detecting fallacy templates (0.47 accuracy). To facilitate research on fallacies, we make our dataset and guidelines publicly available.

Teach Me How to Improve My Argumentation Skills: A Survey on Feedback in Argumentation

Jul 28, 2023

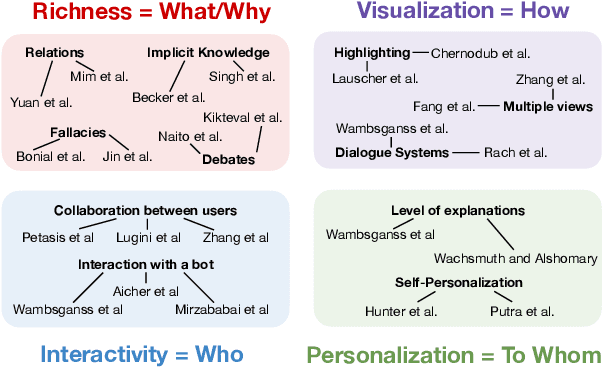

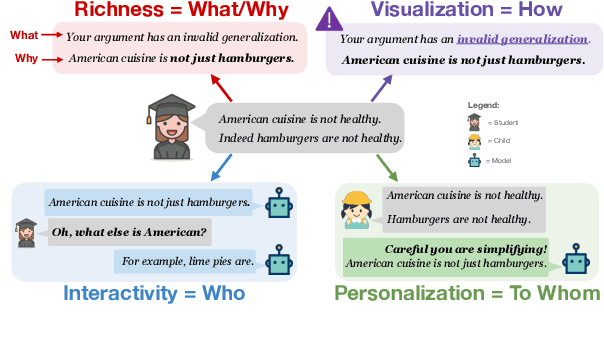

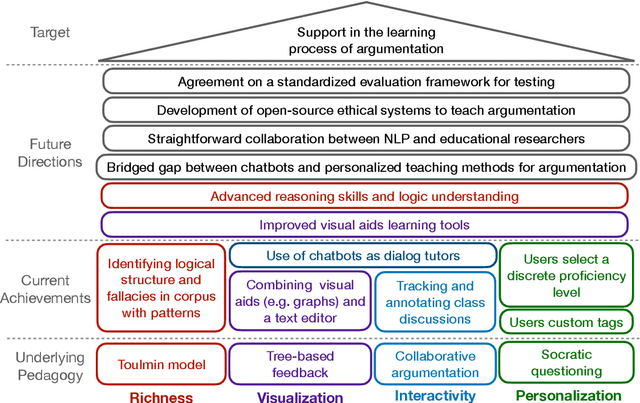

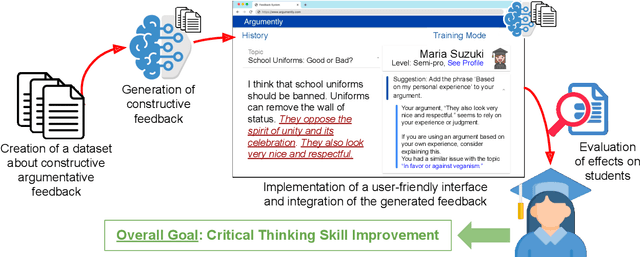

Abstract: The use of argumentation in education has been shown to improve critical thinking skills for end-users such as students, and computational models for argumentation have been developed to assist in this process. Although these models are useful for evaluating the quality of an argument, they often cannot explain why a particular argument is considered poor, which makes it difficult to provide constructive feedback that helps users strengthen their critical thinking skills. In this survey, we explore the different dimensions of feedback (Richness, Visualization, Interactivity, and Personalization) provided by current computational models for argumentation, and the possibility of enhancing the explanatory power of such models, ultimately helping learners improve their critical thinking skills.

LPAttack: A Feasible Annotation Scheme for Capturing Logic Pattern of Attacks in Arguments

Apr 04, 2022

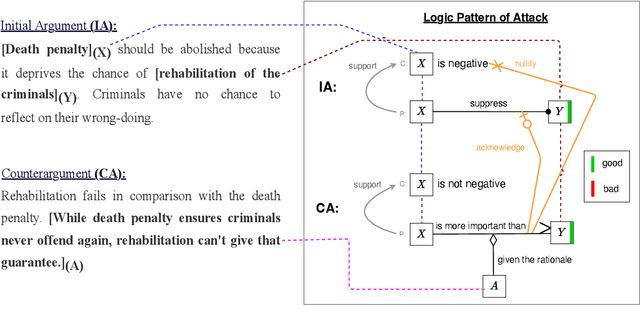

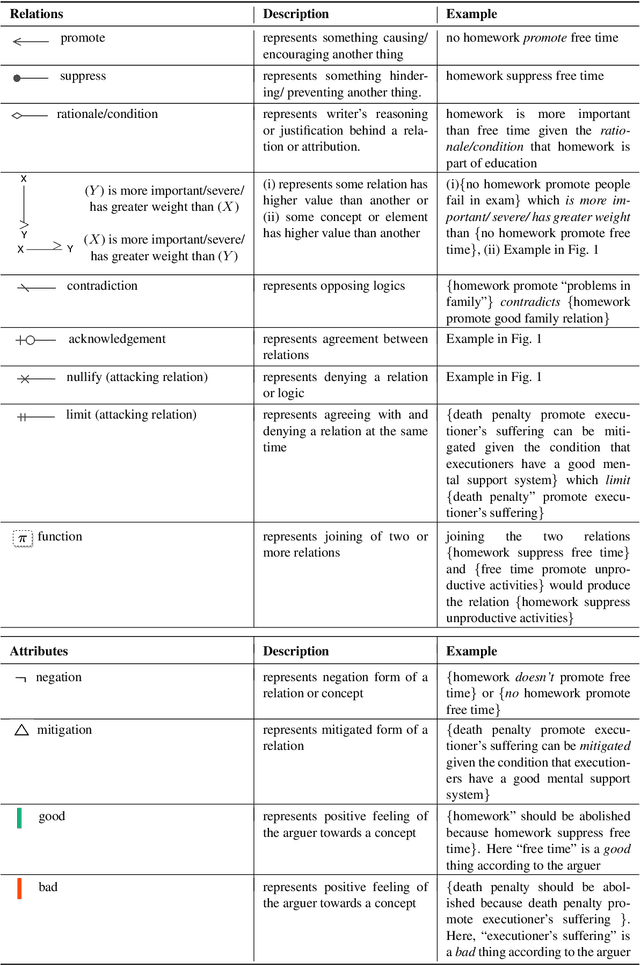

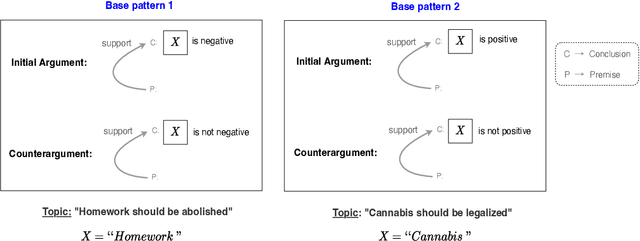

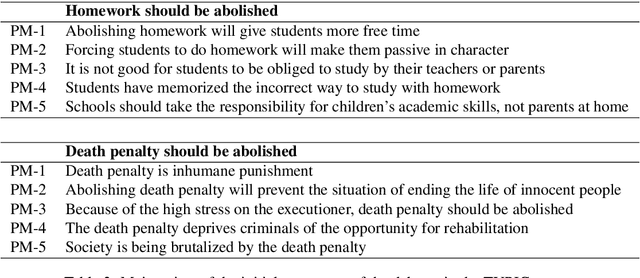

Abstract: In argumentative discourse, persuasion is often achieved by refuting or attacking others' arguments. Attacking is not always straightforward and often comprises complex rhetorical moves, such that arguers might agree with one logic of an argument while attacking another. Moreover, an arguer might neither deny nor agree with any logic of an argument, and instead ignore them and attack the main stance of the argument by providing new logics and presupposing that the new logics have more value or importance than the logics present in the attacked argument. However, no existing studies in computational argumentation capture such complex rhetorical moves in attacks or the presuppositions or value judgements in them. To address this gap, we introduce LPAttack, a novel annotation scheme that captures the common modes and complex rhetorical moves in attacks along with the implicit presuppositions and value judgements in them. Our annotation study shows moderate inter-annotator agreement, indicating that human annotation for the proposed scheme is feasible. We publicly release our annotated corpus and the annotation guidelines.

TYPIC: A Corpus of Template-Based Diagnostic Comments on Argumentation

Jan 18, 2022

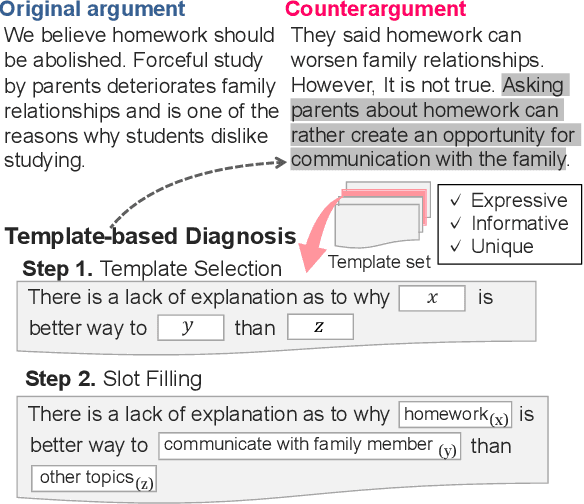

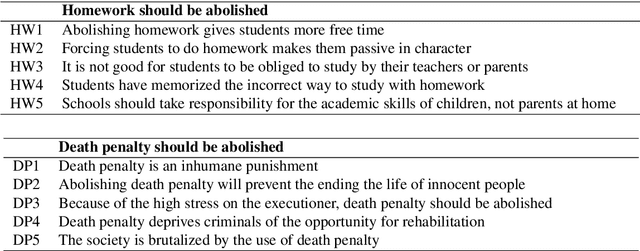

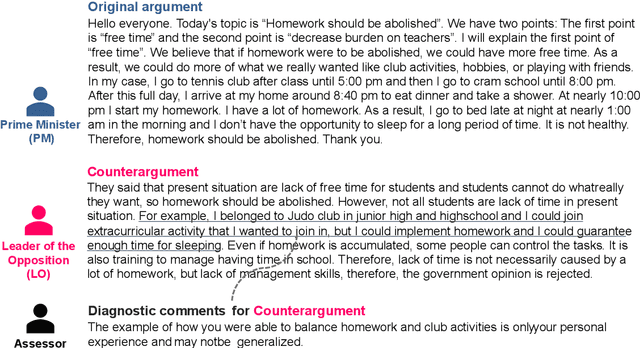

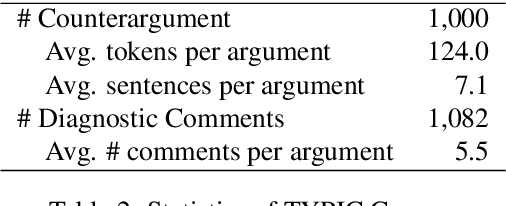

Abstract: Providing feedback on learners' argumentation is essential for the development of critical thinking skills, but it takes a lot of time and effort. To reduce the burden on teachers, we aim to automate the process of giving feedback, especially diagnostic comments that point out the weaknesses inherent in the argumentation. It is advisable to give specific diagnostic comments so that learners can recognize the diagnosis without misunderstanding. However, it is not obvious how the task of providing specific diagnostic comments should be formulated. We present a formulation of the task as template selection and slot filling to make automatic evaluation easier and the behavior of the model more tractable. The key to the formulation is the possibility of creating a template set that is sufficient for practical use. In this paper, we define three criteria that a template set should satisfy: expressiveness, informativeness, and uniqueness, and verify the feasibility of creating a template set that satisfies these criteria as a first trial. We show that it is feasible through an annotation study that converts diagnostic comments given in text into a template format. The corpus used in the annotation study is publicly available.
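
As a rough illustration of the "template selection and slot filling" formulation mentioned in the abstract, the sketch below represents a diagnostic comment as a fixed template plus named slots. The `Template` class, the `no_evidence` identifier, and the template wording are all hypothetical and are not taken from the TYPIC corpus or its guidelines.

```python
from dataclasses import dataclass
from string import Formatter


@dataclass(frozen=True)
class Template:
    """A diagnostic-comment template with named slots (hypothetical structure)."""
    template_id: str
    text: str  # str.format-style placeholders, e.g. "{claim}"

    def slots(self) -> list[str]:
        # Collect the placeholder names that appear in the template text.
        return [name for _, name, _, _ in Formatter().parse(self.text) if name]

    def fill(self, **values: str) -> str:
        # Refuse to produce a comment while any slot is still empty.
        missing = [s for s in self.slots() if s not in values]
        if missing:
            raise ValueError(f"unfilled slots: {missing}")
        return self.text.format(**values)


# Hypothetical template; the wording is invented for this example.
NO_EVIDENCE = Template(
    template_id="no_evidence",
    text="The claim that {claim} is not supported by any evidence.",
)

# "Selection" picks the template; "slot filling" supplies the span from the essay.
comment = NO_EVIDENCE.fill(claim="homework lowers grades")
print(comment)
```

Under this framing, a model's output can be compared against a reference by checking the chosen `template_id` and the slot values separately, which is one plausible reading of why the abstract describes evaluation as easier.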
