Shiqi Fang

Spatiotemporal Density Correction of Multivariate Global Climate Model Projections using Deep Learning

Dec 07, 2024

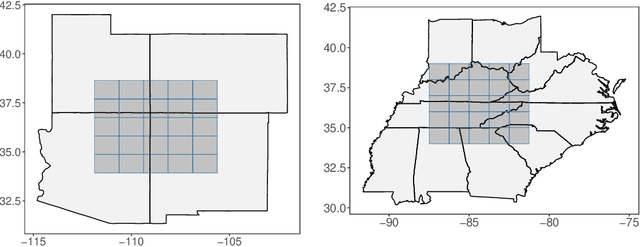

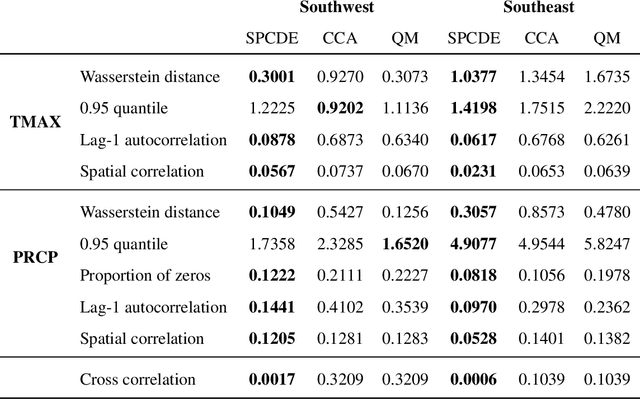

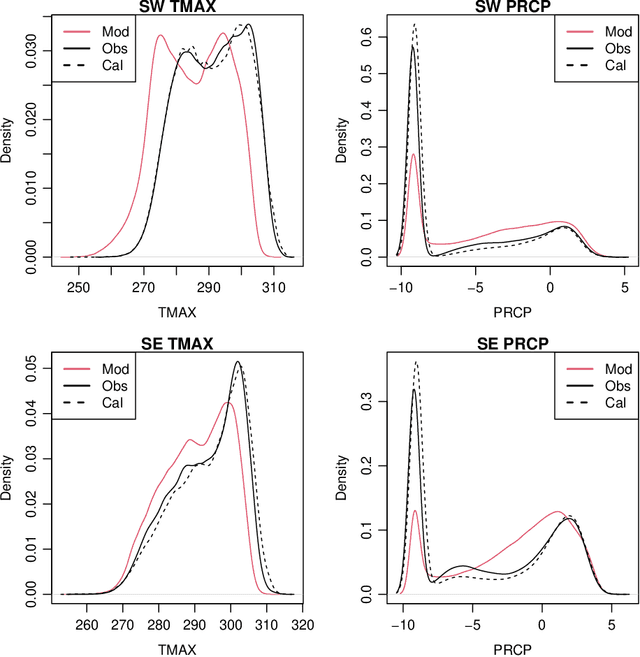

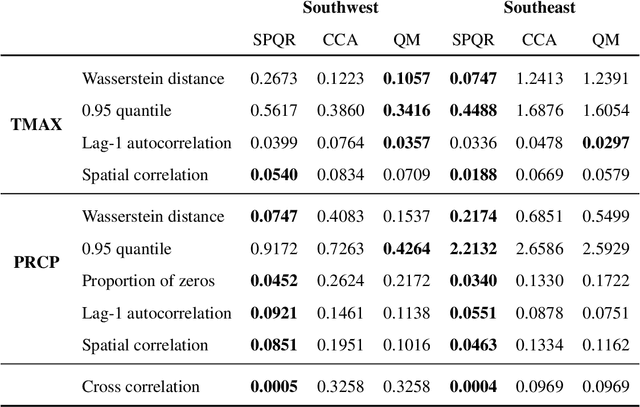

Abstract: Global Climate Models (GCMs) are numerical models that simulate complex physical processes within the Earth's climate system and are essential for understanding and predicting climate change. However, GCMs suffer from systematic biases due to simplifications made to the underlying physical processes. GCM output therefore needs to be bias corrected before it can be used for future climate projections. Most common bias correction methods, however, cannot preserve spatial, temporal, or inter-variable dependencies. We propose a new semi-parametric conditional density estimation (SPCDE) method for density correction of the joint distribution of daily precipitation and maximum temperature data obtained from gridded GCM spatial fields. The Vecchia approximation is employed to preserve dependencies in the observed field during the density correction process, which is carried out using semi-parametric quantile regression. The ability to calibrate joint distributions of GCM projections has potential advantages not only in estimating extremes, but also in better estimating compound hazards, like heat waves and drought, under potential climate change. An illustration on historical data from 1951-2014 over two 5x5 spatial grids in the US indicates that SPCDE can preserve key marginal and joint distribution properties of precipitation and maximum temperature, and that predictions obtained using SPCDE are better calibrated than predictions from asynchronous quantile mapping and canonical correlation analysis, two commonly used bias correction approaches.
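For readers unfamiliar with the quantile-mapping baseline named in the abstract, the following is a minimal sketch of generic empirical quantile mapping for a single variable at a single grid cell. It is not SPCDE and not necessarily the exact asynchronous quantile mapping variant used in the paper. The function names (`fractional_rank`, `quantile`, `quantile_map`) and the toy numbers are hypothetical, chosen only for illustration. The model and observed samples are sorted independently, so no pairing in time is assumed.

```python
from bisect import bisect_right


def fractional_rank(sorted_sample, x):
    """Interpolated rank of x among the order statistics, clamped to [0, n-1]."""
    n = len(sorted_sample)
    if x <= sorted_sample[0]:
        return 0.0
    if x >= sorted_sample[-1]:
        return float(n - 1)
    hi = bisect_right(sorted_sample, x)  # sorted_sample[hi-1] <= x < sorted_sample[hi]
    lo = hi - 1
    return lo + (x - sorted_sample[lo]) / (sorted_sample[hi] - sorted_sample[lo])


def quantile(sorted_sample, p):
    """Linearly interpolated quantile of a sorted sample for p in [0, 1]."""
    n = len(sorted_sample)
    pos = p * (n - 1)
    lo = min(int(pos), n - 1)
    hi = min(lo + 1, n - 1)
    frac = pos - lo
    return sorted_sample[lo] * (1.0 - frac) + sorted_sample[hi] * frac


def quantile_map(model_hist, obs_hist, model_values):
    """Map each model value to the observed value at the same quantile level.

    model_hist and obs_hist are historical samples (each with at least two
    values). They are sorted separately, so they need not be paired in time.
    Values outside the historical model range are clamped to the observed
    minimum or maximum.
    """
    if len(model_hist) < 2 or len(obs_hist) < 2:
        raise ValueError("need at least two values in each historical sample")
    m = sorted(model_hist)
    o = sorted(obs_hist)
    corrected = []
    for v in model_values:
        p = fractional_rank(m, v) / (len(m) - 1)  # quantile level under the model
        corrected.append(quantile(o, p))          # same level under observations
    return corrected


# Toy example with made-up numbers: the model runs too cold and too narrow.
model_hist = [3.0, 1.0, 2.0, 5.0, 4.0]
obs_hist = [10.0, 20.0, 30.0, 40.0, 50.0]
print(quantile_map(model_hist, obs_hist, [1.0, 2.5, 5.0]))
```

Because each variable and grid cell is corrected on its own, a mapping of this kind matches marginal distributions but does nothing to preserve the spatial, temporal, or inter-variable dependencies that the abstract identifies as the gap SPCDE is meant to address.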

Peer-induced Fairness: A Causal Approach for Algorithmic Fairness Auditing

Aug 16, 2024

Abstract: With the EU AI Act effective from 1 August 2024, high-risk applications like credit scoring must adhere to stringent transparency and quality standards, including algorithmic fairness evaluations. Consequently, developing tools for auditing algorithmic fairness has become crucial. This paper addresses a key question: how can we scientifically audit algorithmic fairness? It is vital to determine whether adverse decisions result from algorithmic discrimination or the subjects' inherent limitations. We introduce a novel auditing framework, "peer-induced fairness", leveraging counterfactual fairness and advanced causal inference techniques within credit approval systems. Our approach assesses fairness at the individual level through peer comparisons, independent of specific AI methodologies. It effectively tackles challenges like data scarcity and imbalance, common in traditional models, particularly in credit approval. Model-agnostic and flexible, the framework functions as both a self-audit tool for stakeholders and an external audit tool for regulators, offering ease of integration. It also meets the EU AI Act's transparency requirements by providing clear feedback on whether adverse decisions stem from personal capabilities or discrimination. We demonstrate the framework's usefulness by applying it to SME credit approval, revealing significant bias: 41.51% of micro-firms face discrimination compared to non-micro firms. These findings highlight the framework's potential for diverse AI applications.

Peer-induced Fairness: A Causal Approach to Reveal Algorithmic Unfairness in Credit Approval

Aug 05, 2024

Abstract: This paper introduces a novel framework, "peer-induced fairness", to scientifically audit algorithmic fairness. It addresses a critical but often overlooked issue: distinguishing between adverse outcomes due to algorithmic discrimination and those resulting from individuals' insufficient capabilities. By utilizing counterfactual fairness and advanced causal inference techniques, such as the Single World Intervention Graph, this model-agnostic approach evaluates fairness at the individual level through peer comparisons and hypothesis testing. It also tackles challenges like data scarcity and imbalance, offering a flexible, plug-and-play self-audit tool for stakeholders and an external audit tool for regulators, while providing explainable feedback for those affected by unfavorable decisions.
