Shihang Qiu

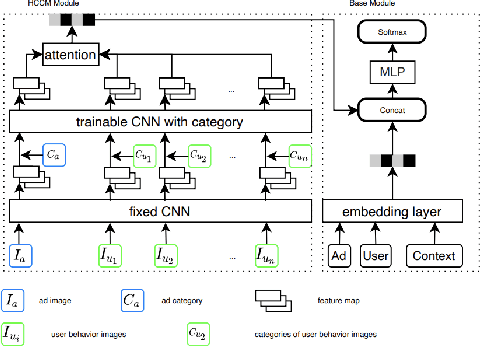

Hybrid CNN Based Attention with Category Prior for User Image Behavior Modeling

May 05, 2022

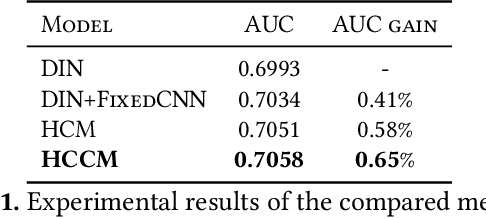

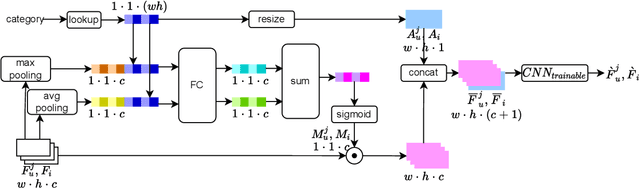

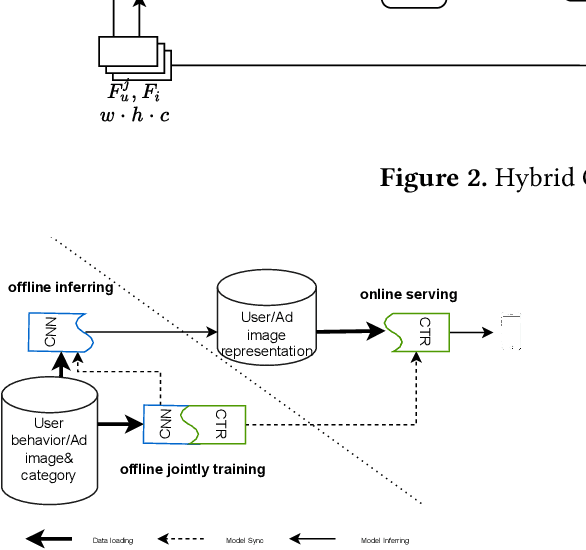

Abstract:User historical behaviors are proved useful for Click Through Rate (CTR) prediction in online advertising system. In Meituan, one of the largest e-commerce platform in China, an item is typically displayed with its image and whether a user clicks the item or not is usually influenced by its image, which implies that user's image behaviors are helpful for understanding user's visual preference and improving the accuracy of CTR prediction. Existing user image behavior models typically use a two-stage architecture, which extracts visual embeddings of images through off-the-shelf Convolutional Neural Networks (CNNs) in the first stage, and then jointly trains a CTR model with those visual embeddings and non-visual features. We find that the two-stage architecture is sub-optimal for CTR prediction. Meanwhile, precisely labeled categories in online ad systems contain abundant visual prior information, which can enhance the modeling of user image behaviors. However, off-the-shelf CNNs without category prior may extract category unrelated features, limiting CNN's expression ability. To address the two issues, we propose a hybrid CNN based attention module, unifying user's image behaviors and category prior, for CTR prediction. Our approach achieves significant improvements in both online and offline experiments on a billion scale real serving dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge