Shicong Ma

A Miniature Vision-Based Localization System for Indoor Blimps

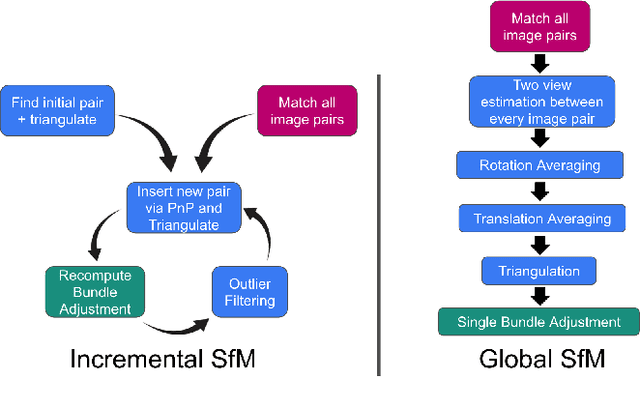

Aug 13, 2024

Abstract: With increasing attention paid to blimp research, I hope to build an indoor blimp to interact with humans. To begin with, I propose developing a visual localization system to enable blimps to localize themselves in an indoor environment autonomously. This system initially reconstructs an indoor environment by employing Structure from Motion with SuperPoint visual features. Next, with the previously built sparse point cloud map, the system generates camera poses by continuously employing pose estimation on matched visual features observed from the map. In this project, the blimp only serves as a reference mobile platform that constrains the weight of the perception system. The perception system contains one monocular camera and a WiFi adaptor to capture and transmit visual data to a ground PC station where the algorithms will be executed. The success of this project will transform remote-controlled indoor blimps into autonomous indoor blimps, which can be utilized for applications such as surveillance, advertisement, and indoor mapping.

FAST-LIO, Then Bayesian ICP, Then GTSFM

Oct 05, 2022

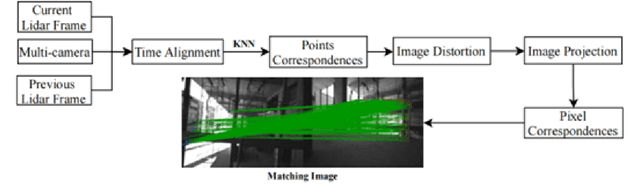

Abstract: For the Hilti Challenge 2022, we created two systems, one building upon the other. The first system is FL2BIPS, which utilizes the iEKF algorithm FAST-LIO2 and Bayesian ICP PoseSLAM, whereas the second system is GTSFM, a structure from motion pipeline with factor graph backend optimization powered by GTSAM.
