Jerred Chen

Image as an IMU: Estimating Camera Motion from a Single Motion-Blurred Image

Mar 21, 2025

Abstract: In many robotics and VR/AR applications, fast camera motions produce severe motion blur that causes existing camera pose estimation methods to fail. In this work, we propose a novel framework that leverages motion blur as a rich cue for motion estimation rather than treating it as an unwanted artifact. Our approach predicts a dense motion flow field and a monocular depth map directly from a single motion-blurred image. We then recover the instantaneous camera velocity by solving a linear least squares problem under the small motion assumption. In essence, our method produces an IMU-like measurement that robustly captures fast and aggressive camera movements. To train our model, we construct a large-scale dataset with realistic synthetic motion blur derived from ScanNet++v2, and we further refine the model by training end-to-end on real data using our fully differentiable pipeline. Extensive evaluations on real-world benchmarks demonstrate that our method achieves state-of-the-art angular and translational velocity estimates, outperforming current methods such as MASt3R and COLMAP.
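The least squares step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the classical instantaneous motion field model in normalized image coordinates (focal length 1), where the flow at each pixel is linear in the camera's translational and angular velocity once depth is known. The abstract does not spell out the exact formulation used in the paper, so the sign conventions and the function names `motion_field_matrix` and `estimate_velocity` below are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def motion_field_matrix(x, y, depth):
    """Stack the 2N x 6 matrix that maps (v, omega) to flow.

    Assumed model (classical rigid motion field, normalized coordinates):
      u = (-vx + x*vz)/Z + x*y*wx - (1 + x^2)*wy + y*wz
      v = (-vy + y*vz)/Z + (1 + y^2)*wx - x*y*wy - x*wz
    """
    inv_z = 1.0 / depth
    zeros = np.zeros_like(x)
    rows_u = np.stack([-inv_z, zeros, x * inv_z, x * y, -(1.0 + x**2), y], axis=1)
    rows_v = np.stack([zeros, -inv_z, y * inv_z, 1.0 + y**2, -x * y, -x], axis=1)
    return np.concatenate([rows_u, rows_v], axis=0)


def estimate_velocity(x, y, depth, flow_u, flow_v):
    """Solve A [v; omega] = flow in the least squares sense.

    Returns (translational velocity, angular velocity), each of shape (3,).
    """
    A = motion_field_matrix(x, y, depth)
    b = np.concatenate([flow_u, flow_v])
    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution[:3], solution[3:]
```

In this sketch, `x`, `y`, `depth`, `flow_u`, and `flow_v` are flat arrays with one entry per pixel, and the flow is expressed per unit time. A real pipeline would additionally need to convert pixel coordinates with the camera intrinsics and scale the predicted flow by the exposure time.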

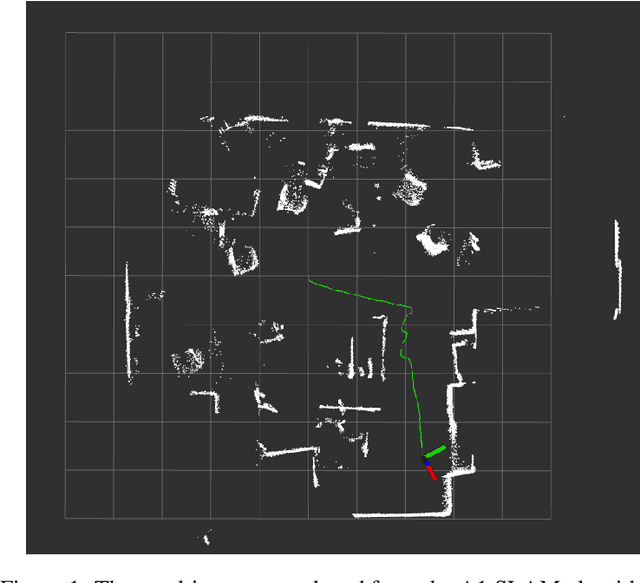

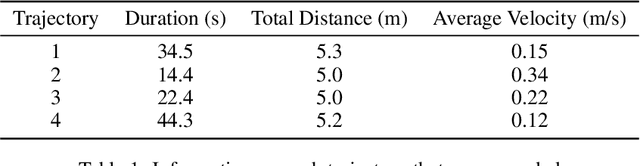

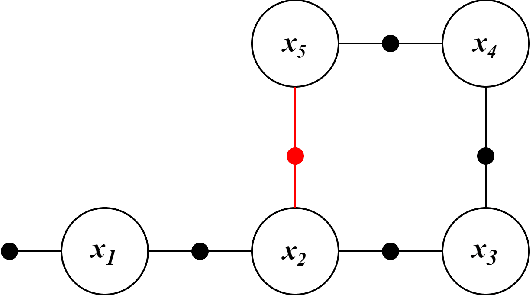

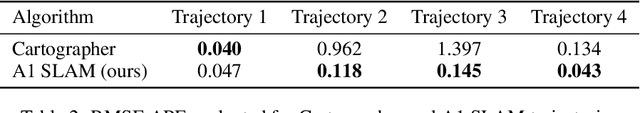

A1 SLAM: Quadruped SLAM using the A1's Onboard Sensors

Nov 26, 2022

Abstract: Quadrupeds have attracted interest in the past few years due to their versatility in navigating various terrain and their utility in several applications. For quadrupeds to navigate without a predefined map, they must rely on SLAM approaches to localize and build a map of the environment. Despite the surge of interest and research development in SLAM and quadrupeds, there has yet to be an open-source package that capitalizes on the onboard sensors of an affordable quadruped. This motivates the A1 SLAM package, an open-source ROS package that provides the Unitree A1 quadruped with real-time, high-performing SLAM capabilities using the default sensors shipped with the robot. A1 SLAM solves the PoseSLAM problem using the factor graph paradigm to optimize for the poses throughout the trajectory. A major design feature of the algorithm is a sliding window of fully connected LiDAR odometry factors. A1 SLAM has been benchmarked against Google's Cartographer and has shown superior performance, especially on trajectories with aggressive motion.
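To make the "sliding window of fully connected LiDAR odometry factors" concrete, the sketch below enumerates which pose pairs would be linked under one plausible reading: every pair of poses that fall within the same window of consecutive poses gets an odometry factor. The window size and the helper name `sliding_window_pairs` are hypothetical, and the abstract does not state how A1 SLAM defines its window, so this is an assumption rather than the package's actual logic.

```python
def sliding_window_pairs(num_poses, window_size):
    """List pose index pairs (i, j), i < j, that share a window.

    Assumption: a window covers `window_size` consecutive poses, and
    every pair inside a window is connected (fully connected window).
    """
    pairs = []
    for j in range(1, num_poses):
        # Connect the new pose j to each earlier pose still in its window.
        for i in range(max(0, j - window_size + 1), j):
            pairs.append((i, j))
    return pairs


# Example: 4 poses, window of 3 consecutive poses.
print(sliding_window_pairs(4, 3))
# prints [(0, 1), (0, 2), (1, 2), (1, 3), (2, 3)]
```

In a factor graph backend, each listed pair would carry a relative-pose measurement between the two scans, so a pose is constrained by several earlier poses instead of only its immediate predecessor.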

FAST-LIO, Then Bayesian ICP, Then GTSFM

Oct 05, 2022

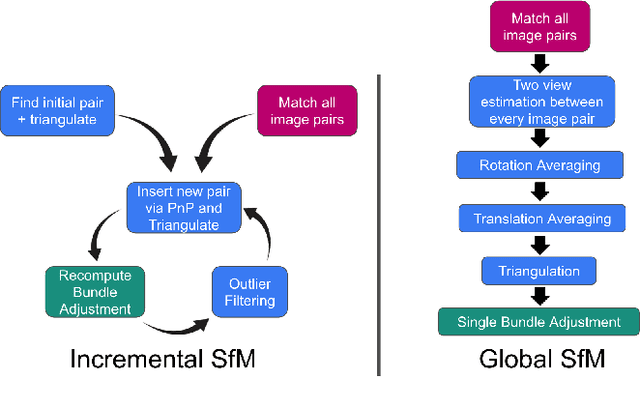

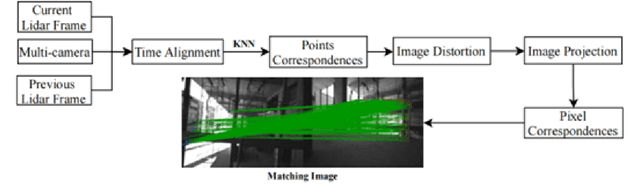

Abstract: For the Hilti Challenge 2022, we created two systems, one building upon the other. The first system is FL2BIPS, which utilizes the iEKF algorithm FAST-LIO2 and Bayesian ICP PoseSLAM, whereas the second system is GTSFM, a structure from motion pipeline with factor graph backend optimization powered by GTSAM.
