Sheng-Yeh Chen

Kenneth

Deep Exposure Fusion with Deghosting via Homography Estimation and Attention Learning

Apr 20, 2020

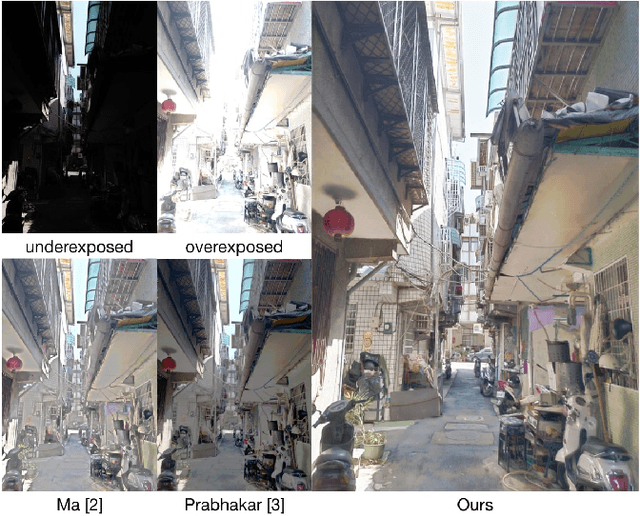

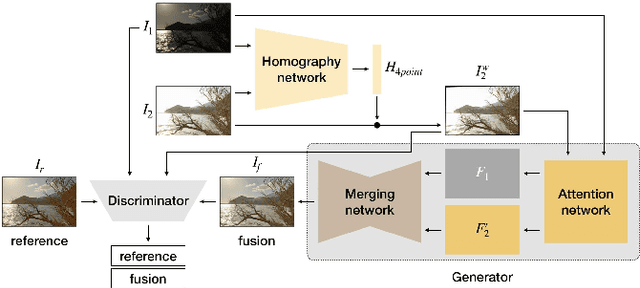

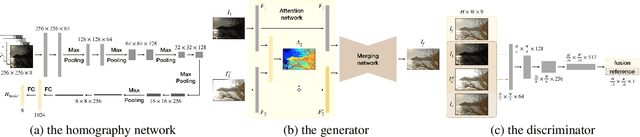

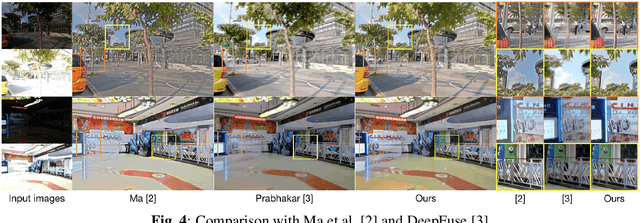

Abstract: Modern cameras have limited dynamic range and often produce images with saturated or dark regions when using a single exposure. Although the problem can be addressed by taking multiple images with different exposures, exposure fusion methods must deal with ghosting artifacts and detail loss caused by camera motion or moving objects. This paper proposes a deep network for exposure fusion. To reduce potential ghosting, our network takes only two images, an underexposed image and an overexposed one. It integrates homography estimation to compensate for camera motion, an attention mechanism to correct remaining misalignment and moving pixels, and adversarial learning to alleviate other remaining artifacts. Experiments on real-world photos taken with handheld mobile phones show that the proposed method can generate high-quality images with faithful detail and vivid color rendition in both dark and bright areas.

EmotionLines: An Emotion Corpus of Multi-Party Conversations

May 30, 2018

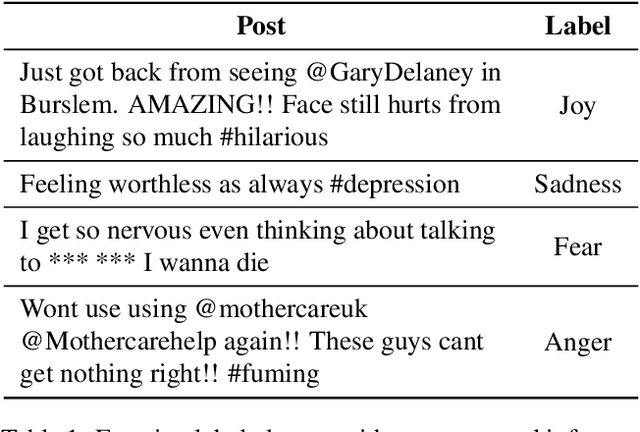

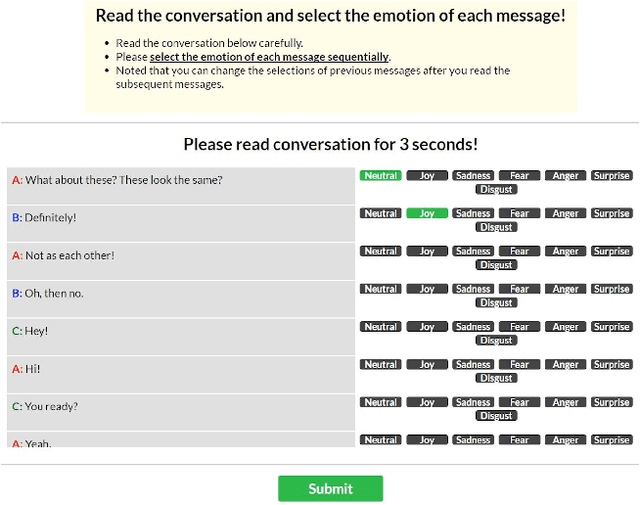

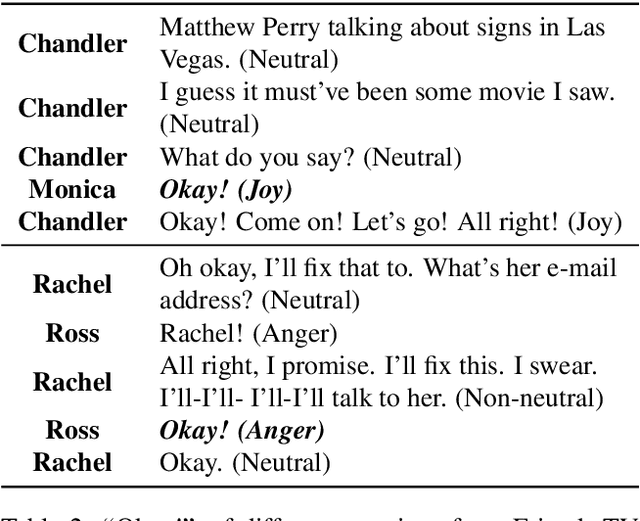

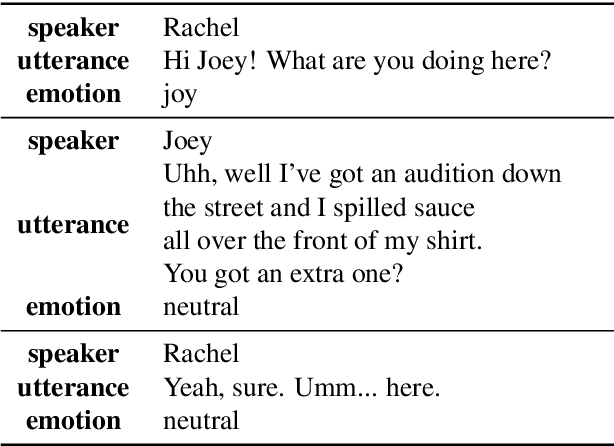

Abstract: Feeling emotion is a critical characteristic that distinguishes people from machines. Among all the multi-modal resources for emotion detection, textual datasets contain the least information beyond semantics, and hence are widely adopted for testing developed systems. However, most textual emotion datasets provide emotion labels only for individual words, sentences, or documents, which makes it challenging to discuss the contextual flow of emotions. In this paper, we introduce EmotionLines, the first dataset with emotion labels on all utterances in each dialogue based only on their textual content. Dialogues in EmotionLines are collected from Friends TV scripts and private Facebook Messenger dialogues. Each utterance is then labeled by 5 Amazon MTurkers with one of seven emotions: Ekman's six basic emotions plus neutral. A total of 29,245 utterances from 2,000 dialogues are labeled in EmotionLines. We also provide several strong baselines for emotion detection models on EmotionLines.
