Sheng Hua

Sparse Optimization for Green Edge AI Inference

Mar 13, 2020

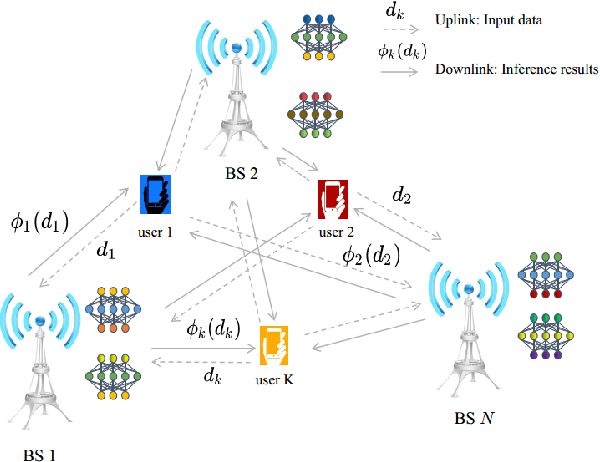

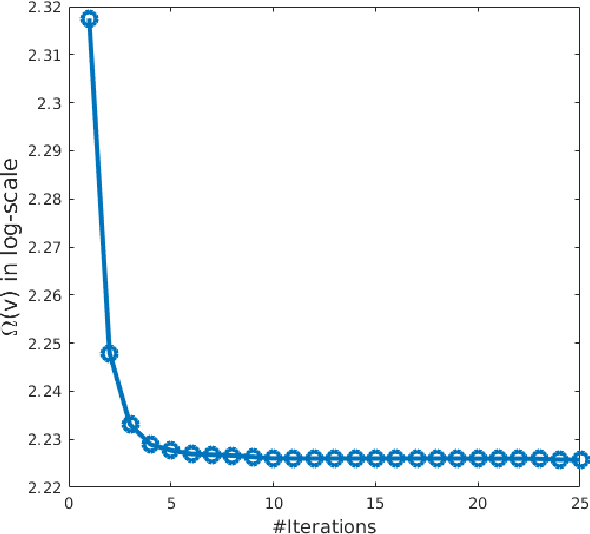

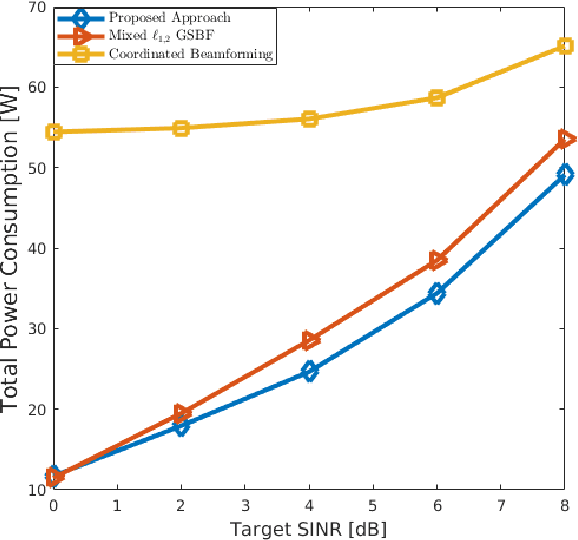

Abstract: With the rapid upsurge of deep learning tasks at the network edge, effective edge artificial intelligence (AI) inference becomes critical for providing low-latency intelligent services to mobile users by leveraging edge computing capability. In such scenarios, energy efficiency becomes a primary concern. In this paper, we present a joint inference task selection and downlink beamforming strategy that achieves energy-efficient edge AI inference by minimizing the overall power consumption, which consists of both computation and transmission power, yielding a mixed combinatorial optimization problem. By exploiting the inherent connection between the set of selected tasks and the group sparsity structure of the transmit beamforming vector, we reformulate the optimization as a group sparse beamforming problem. To solve this challenging problem, we propose a three-stage approach based on the log-sum function. By adopting the log-sum function to enhance group sparsity, a proximal iteratively reweighted algorithm is developed. Furthermore, we establish a global convergence analysis and provide the ergodic worst-case convergence rate for this algorithm. Simulation results demonstrate the effectiveness of the proposed approach for improving energy efficiency in edge AI inference systems.
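To make the idea of a log-sum penalty with proximal reweighting concrete, the following is a minimal sketch on a toy real-valued least-squares problem. It is not the paper's beamforming formulation or its three-stage approach: the objective, the function names (`group_soft_threshold`, `reweighted_group_prox`), the parameters `lam` and `eps`, the least-squares initialization, and the toy data are all assumptions chosen for illustration. Each iteration takes a gradient step on the smooth term, then applies block soft-thresholding with per-group weights given by the derivative of the log-sum function at the current iterate.

```python
import numpy as np

def group_soft_threshold(v, tau):
    """Proximal operator of tau * ||v||_2 (block soft-thresholding)."""
    norm = np.linalg.norm(v)
    if norm <= tau:
        return np.zeros_like(v)
    return (1.0 - tau / norm) * v

def reweighted_group_prox(A, b, groups, lam=0.5, eps=1e-3, iters=200):
    """Illustrative solver (an assumption, not the paper's algorithm) for
        0.5 * ||A x - b||^2 + lam * sum_g log(1 + ||x_g||_2 / eps).
    The log-sum term is majorized by a weighted group norm whose weights
    are refreshed from the current iterate at every step."""
    # Start from the least-squares solution: x = 0 would be a poor start,
    # because its weights 1/eps are large enough to keep every group at zero.
    x = np.linalg.lstsq(A, b, rcond=None)[0]
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1/L, L = Lipschitz constant of the gradient
    for _ in range(iters):
        z = x - step * (A.T @ (A @ x - b))  # gradient step on the smooth term
        x_new = np.empty_like(x)
        for g in groups:
            # Derivative of log(1 + t/eps) at t = ||x_g||_2 is 1 / (t + eps).
            w = 1.0 / (np.linalg.norm(x[g]) + eps)
            x_new[g] = group_soft_threshold(z[g], step * lam * w)
        x = x_new
    return x

# Toy data (hypothetical): 4 groups of 3 coefficients, groups 0 and 2 active.
rng = np.random.default_rng(0)
groups = [np.arange(3 * i, 3 * i + 3) for i in range(4)]
x_true = np.zeros(12)
x_true[groups[0]] = [1.5, -2.0, 1.0]
x_true[groups[2]] = [2.0, 1.0, -1.5]
A = rng.standard_normal((30, 12))
b = A @ x_true + 0.01 * rng.standard_normal(30)

x_hat = reweighted_group_prox(A, b, groups)
active = [bool(np.linalg.norm(x_hat[g]) > 1e-8) for g in groups]
print("active groups:", active)
```

Groups with small norm receive large weights and are driven exactly to zero, while groups with large norm are only lightly shrunk. This is the sense in which the log-sum function enhances group sparsity relative to an unweighted group norm penalty. In the paper's setting, a zeroed group corresponds to a task that is not selected, which is the connection the abstract draws between task selection and group sparsity.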
