Shathushan Sivashangaran

Exploration Without Maps via Zero-Shot Out-of-Distribution Deep Reinforcement Learning

Feb 07, 2024

Abstract: Operation of Autonomous Mobile Robots (AMRs) of all forms, including wheeled ground vehicles, quadrupeds and humanoids, in dynamically changing, GPS-denied environments without a-priori maps, exclusively using onboard sensors, is an unsolved problem that has the potential to transform the economy and vastly improve humanity's capabilities through improvements to agriculture, manufacturing, disaster response, military and space exploration. Conventional AMR automation approaches are modularized into perception, motion planning and control, which is computationally inefficient and requires explicit feature extraction and engineering that inhibits generalization and deployment at scale. Few works have focused on real-world end-to-end approaches that directly map sensor inputs to control outputs. Supervised Deep Learning (DL) requires a large amount of well-curated training data that is time-consuming and labor-intensive to collect and label, while Deep Reinforcement Learning (DRL) suffers from sample inefficiency and the difficulty of bridging the simulation-to-reality gap. This paper presents a novel method to efficiently train DRL for robust end-to-end AMR exploration in a constrained environment at physical limits in simulation, transferred zero-shot to the real world. The representation learned in a compact parameter space with 2 fully connected layers of 64 nodes each is demonstrated to exhibit emergent behavior for out-of-distribution generalization to navigation in new environments that include unstructured terrain without maps, and to dynamic obstacle avoidance. The learned policy outperforms conventional navigation algorithms while consuming a fraction of the computation resources, enabling execution on a range of AMR forms with varying embedded computer payloads.
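To give a sense of how small a policy with 2 fully connected layers of 64 nodes is, the following is a minimal sketch of such a network in plain Python. It is not the authors' implementation. The observation size (`OBS_DIM = 10`), the action size (`ACT_DIM = 2`), the `tanh` activations and the weight initialization are all assumptions chosen for illustration, since the abstract does not specify them.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for one fully connected layer."""
    scale = 1.0 / math.sqrt(n_in)  # simple uniform initialization (assumption)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(x, layer, activation):
    """Apply one fully connected layer followed by an activation."""
    weights, biases = layer
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def build_policy(obs_dim, act_dim, hidden=64, seed=0):
    """Two hidden layers of `hidden` nodes each, plus an output layer."""
    rng = random.Random(seed)
    sizes = [obs_dim, hidden, hidden, act_dim]
    return [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]


def act(policy, obs):
    """Map a sensor observation directly to control outputs in [-1, 1]."""
    h = obs
    for layer in policy[:-1]:
        h = dense(h, layer, math.tanh)      # hidden activation (assumption)
    return dense(h, policy[-1], math.tanh)  # bounded output (assumption)


def count_parameters(policy):
    """Total number of weights and biases in the network."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in policy)


OBS_DIM = 10  # hypothetical number of sensor readings
ACT_DIM = 2   # hypothetical number of control outputs

policy = build_policy(OBS_DIM, ACT_DIM)
action = act(policy, [1.0] * OBS_DIM)
n_params = count_parameters(policy)
```

Under these assumed input and output sizes the network has 4,994 parameters, which illustrates why a policy of this shape can run on modest embedded computers.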

AutoVRL: A High Fidelity Autonomous Ground Vehicle Simulator for Sim-to-Real Deep Reinforcement Learning

Apr 22, 2023

Abstract: Deep Reinforcement Learning (DRL) enables cognitive Autonomous Ground Vehicle (AGV) navigation utilizing raw sensor data without a-priori maps or GPS, which is a necessity in hazardous, information poor environments such as regions where natural disasters occur, and extraterrestrial planets. The substantial training time required to learn an optimal DRL policy, which can be days or weeks for complex tasks, is a major hurdle to real-world implementation in AGV applications. Training entails repeated collisions with the surrounding environment over an extended time period, dependent on the complexity of the task, to reinforce positive exploratory, application specific behavior that is expensive, and time consuming in the real-world. Effectively bridging the simulation to real-world gap is a requisite for successful implementation of DRL in complex AGV applications, enabling learning of cost-effective policies. We present AutoVRL, an open-source high fidelity simulator built upon the Bullet physics engine utilizing OpenAI Gym and Stable Baselines3 in PyTorch to train AGV DRL agents for sim-to-real policy transfer. AutoVRL is equipped with sensor implementations of GPS, IMU, LiDAR and camera, actuators for AGV control, and realistic environments, with extensibility for new environments and AGV models. The simulator provides access to state-of-the-art DRL algorithms, utilizing a Python interface for simple algorithm and environment customization, and simulation execution.

Deep Reinforcement Learning for Autonomous Ground Vehicle Exploration Without A-Priori Maps

Jan 10, 2023

Abstract: Autonomous Ground Vehicles (AGVs) are essential tools for a wide range of applications stemming from their ability to operate in hazardous environments with minimal human operator input. Efficient and effective motion planning is paramount for successful operation of AGVs. Conventional motion planning algorithms are dependent on prior knowledge of environment characteristics and offer limited utility in information poor, dynamically altering environments such as areas where emergency hazards like fire and earthquake occur, and unexplored subterranean environments such as tunnels and lava tubes on Mars. We propose a Deep Reinforcement Learning (DRL) framework for intelligent AGV exploration without a-priori maps utilizing Actor-Critic DRL algorithms to learn policies in continuous and high-dimensional action spaces, required for robotics applications. The DRL architecture comprises feedforward neural networks for the critic and actor representations in which the actor network strategizes linear and angular velocity control actions given current state inputs, that are evaluated by the critic network which learns and estimates Q-values to maximize an accumulated reward. Three off-policy DRL algorithms, DDPG, TD3 and SAC, are trained and compared in two environments of varying complexity, and further evaluated in a third with no prior training or knowledge of map characteristics. The agent is shown to learn optimal policies at the end of each training period to chart quick, efficient and collision-free exploration trajectories, and is extensible, capable of adapting to an unknown environment with no changes to network architecture or hyperparameters.
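The three algorithms compared above differ mainly in how the critic's learning target is formed. The sketch below shows the textbook one-step targets for DDPG, TD3 and SAC, and is not code from the paper. The function names, the discount factor `gamma` and the entropy coefficient `alpha` are illustrative assumptions. The next-state Q-values are taken as plain numbers that would, in practice, come from target critic networks evaluated at the target actor's (or, for SAC, the current policy's) action.

```python
def ddpg_target(reward, done, next_q, gamma=0.99):
    """DDPG: bootstrap from a single target critic's estimate."""
    return reward + gamma * (1.0 - done) * next_q


def td3_target(reward, done, next_q1, next_q2, gamma=0.99):
    """TD3: take the minimum of two target critics to curb overestimation.

    The smoothing noise TD3 adds to the target action is assumed to have
    been applied already when next_q1 and next_q2 were computed.
    """
    return reward + gamma * (1.0 - done) * min(next_q1, next_q2)


def sac_target(reward, done, next_q1, next_q2, next_log_prob,
               alpha=0.2, gamma=0.99):
    """SAC: clipped double-Q value plus an entropy bonus."""
    soft_value = min(next_q1, next_q2) - alpha * next_log_prob
    return reward + gamma * (1.0 - done) * soft_value


# Example transition with hypothetical numbers.
y_ddpg = ddpg_target(reward=1.0, done=0.0, next_q=10.0, gamma=0.9)
y_td3 = td3_target(reward=1.0, done=0.0, next_q1=10.0, next_q2=8.0, gamma=0.9)
y_sac = sac_target(reward=1.0, done=0.0, next_q1=10.0, next_q2=8.0,
                   next_log_prob=-1.0, alpha=0.5, gamma=0.9)
```

In each case the critic is regressed toward the target, and the actor is updated to choose the linear and angular velocity commands that the critic rates most highly.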

XTENTH-CAR: A Proportionally Scaled Experimental Vehicle Platform for Connected Autonomy and All-Terrain Research

Dec 03, 2022

Abstract: Connected Autonomous Vehicles (CAVs) are key components of the Intelligent Transportation System (ITS), and all-terrain Autonomous Ground Vehicles (AGVs) are indispensable tools for a wide range of applications such as disaster response, automated mining, agriculture, military operations, search and rescue missions, and planetary exploration. Experimental validation is a requisite for CAV and AGV research, but requires a large, safe experimental environment when using full-size vehicles which is time-consuming and expensive. To address these challenges, we developed XTENTH-CAR (eXperimental one-TENTH scaled vehicle platform for Connected autonomy and All-terrain Research), an open-source, cost-effective proportionally one-tenth scaled experimental vehicle platform governed by the same physics as a full-size on-road vehicle. XTENTH-CAR is equipped with the best-in-class NVIDIA Jetson AGX Orin System on Module (SOM), stereo camera, 2D LiDAR and open-source Electronic Speed Controller (ESC) with drivers written in the new Robot Operating System (ROS 2) to facilitate experimental CAV and AGV perception, motion planning and control research, that incorporate state-of-the-art computationally expensive algorithms such as Deep Reinforcement Learning (DRL). XTENTH-CAR is designed for compact experimental environments, and aims to increase the accessibility of experimental CAV and AGV research with low upfront costs, and complete Autonomous Vehicle (AV) hardware and software architectures similar to the full-sized X-CAR experimental vehicle platform, enabling efficient cross-platform development between small-scale and full-scale vehicles.

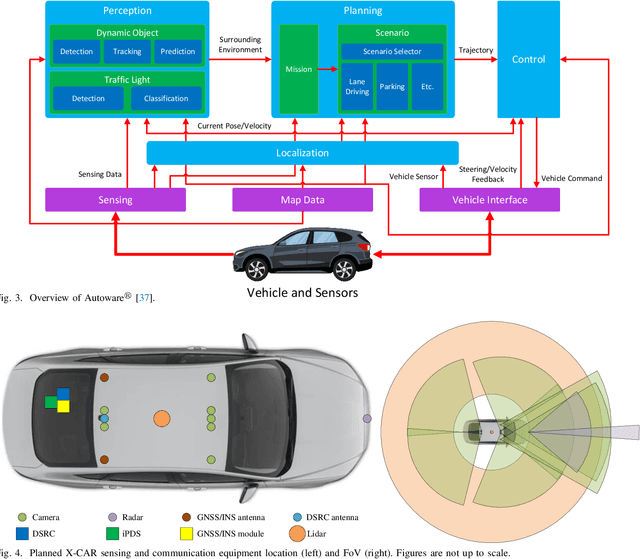

X-CAR: An Experimental Vehicle Platform for Connected Autonomy Research Powered by CARMA

Apr 06, 2022

Abstract: Autonomous vehicles promise a future with a safer, cleaner, more efficient, and more reliable transportation system. However, the current approach to autonomy has focused on building small, disparate intelligences that are closed off to the rest of the world. Vehicle connectivity has been proposed as a solution, relying on a vision of the future where a mix of connected autonomous and human-driven vehicles populate the road. Developed by the U.S. Department of Transportation Federal Highway Administration as a reusable, extensible platform for controlling connected autonomous vehicles, the CARMA Platform is one of the technologies enabling this connected future. Nevertheless, the adoption of the CARMA Platform has been slow, with a contributing factor being the limited, expensive, and somewhat old vehicle configurations that are officially supported. To alleviate this problem, we propose X-CAR (eXperimental vehicle platform for Connected Autonomy Research). By implementing the CARMA Platform on more affordable, high quality hardware, X-CAR aims to increase the versatility of the CARMA Platform and facilitate its adoption for research and development of connected driving automation.