Shariq Bashir

Introducing Partial Matching Approach in Association Rules for Better Treatment of Missing Values

Apr 21, 2009

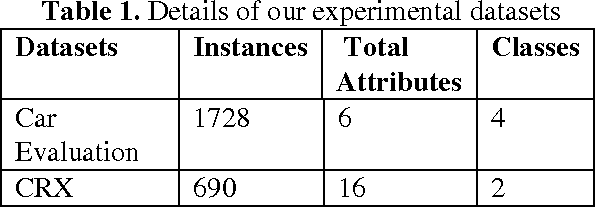

Abstract: Handling missing values in training datasets, whether for constructing learning models or for extracting useful information, is considered an important research task in data mining and knowledge discovery in databases. In recent years, many techniques have been proposed for imputing missing values by considering the relationships between the attributes of the observation with the missing value and those of the other observations in the training dataset. The main deficiency of such techniques is that they depend on a single approach and do not combine multiple approaches, which is why they are less accurate. To improve the accuracy of missing value imputation, in this paper we introduce a novel partial matching concept in association rule mining, which shows better results than the full matching concept described in our previous work. Our imputation technique combines the partial matching concept in association rules with the k-nearest neighbor approach. Because this is a hybrid technique, its accuracy is much better than that of techniques that depend on a single approach. To check the efficiency of our technique, we also provide detailed experimental results on a number of benchmark datasets, which show better results than previous approaches.
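The abstract does not spell out how partial matching and the k-nearest neighbor step interact, so the following is only an illustrative sketch of one way such a hybrid could work. Everything in it is an assumption made for illustration and not the paper's algorithm: the rule format `(antecedent, value, confidence)`, the `min_match` threshold, the scoring by matched fraction times confidence, and the Hamming-distance fallback.

```python
from collections import Counter

def rule_match_fraction(antecedent, record):
    """Fraction of a rule's antecedent (assumed non-empty) satisfied by the record."""
    hits = sum(1 for attr, val in antecedent.items() if record.get(attr) == val)
    return hits / len(antecedent)

def impute(record, target, rules, complete_rows, k=3, min_match=0.5):
    """Impute record[target] from partially matching rules, else by k-NN vote.

    rules: list of (antecedent_dict, predicted_value, confidence) -- hypothetical format.
    min_match=1.0 reduces to full matching; lower values allow partial matches.
    """
    best_value, best_score = None, 0.0
    for antecedent, value, confidence in rules:
        frac = rule_match_fraction(antecedent, record)
        score = frac * confidence  # assumed scoring: weight confidence by match quality
        if frac >= min_match and score > best_score:
            best_value, best_score = value, score
    if best_value is not None:
        return best_value

    # Fallback: majority vote among the k rows closest in Hamming distance,
    # computed over the attributes that are observed in the record.
    def distance(row):
        return sum(
            1
            for attr, val in record.items()
            if attr != target and val is not None and row.get(attr) != val
        )

    nearest = sorted(complete_rows, key=distance)[:k]
    return Counter(row[target] for row in nearest).most_common(1)[0][0]
```

With `min_match=1.0` a rule must match completely before it is used, which corresponds to full matching; lowering the threshold lets a rule contribute even when only part of its antecedent agrees with the record.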

Using Association Rules for Better Treatment of Missing Values

Apr 21, 2009

Abstract: The quality of training data for knowledge discovery in databases (KDD) and data mining depends on many factors, but the handling of missing values is considered crucial to overall data quality. Real-world datasets today contain missing values due to human and operational error, hardware malfunction, and many other factors. The quality of the knowledge extracted, and of learning and decision making, depends directly on the quality of the training data. Given the importance of handling missing values in KDD and data mining tasks, in this paper we propose a novel Hybrid Missing values Imputation Technique (HMiT) that uses association rule mining in hybrid combination with the k-nearest neighbor approach. To check the effectiveness of HMiT, we also report detailed experimental results on real-world datasets. Our results suggest that HMiT is not only more accurate but also takes less processing time than the current best missing value imputation technique based on the k-nearest neighbor approach.

Fast Algorithms for Mining Interesting Frequent Itemsets without Minimum Support

Apr 21, 2009

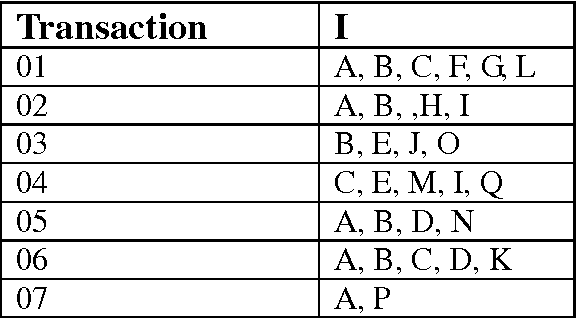

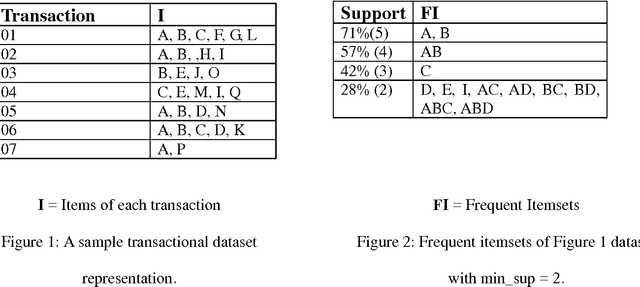

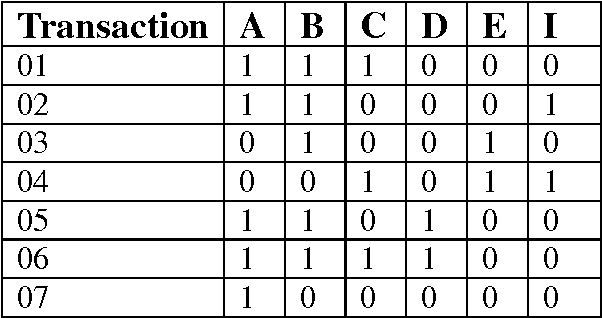

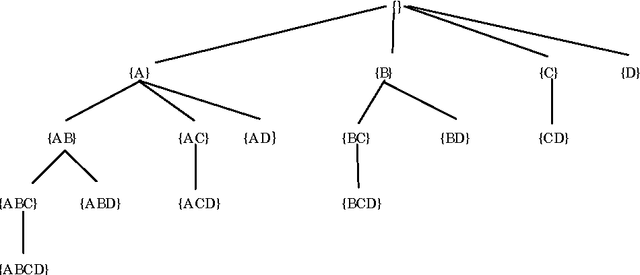

Abstract: Real-world datasets are sparse, dirty, and contain hundreds of items. In such situations, discovering interesting rules (results) with the traditional frequent itemset mining approach, which requires a user-defined support threshold as input, is not appropriate: without domain knowledge, setting the support threshold too large or too small can produce, respectively, nothing or a large number of redundant, uninteresting results. Recently, a novel approach of mining only the N-most/Top-K interesting frequent itemsets has been proposed, which discovers the top N interesting results without any user-defined support threshold. However, mining interesting frequent itemsets without a minimum support threshold is more costly in terms of itemset search space exploration and processing. The efficiency of such mining therefore depends heavily on three main factors: (1) the database representation approach used for itemset frequency counting, (2) the projection of relevant transactions to lower-level nodes of the search space, and (3) the algorithm implementation technique. To improve the efficiency of the mining process, in this paper we present two novel algorithms, N-MostMiner and Top-K-Miner, built on a bit-vector representation that is very efficient for itemset frequency counting and transaction projection. In addition, we present several efficient implementation techniques for N-MostMiner and Top-K-Miner drawn from our implementation experience. Our experimental results on benchmark datasets suggest that N-MostMiner and Top-K-Miner are very efficient in terms of processing time compared with the current best algorithms, BOMO and TFP.
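To make the idea of threshold-free mining with bit-vectors concrete, here is a minimal sketch. It is a generic depth-first top-K search, not N-MostMiner or Top-K-Miner themselves. It assumes each item is stored as a Python integer whose bit `t` is set when transaction `t` contains the item, so that the support of an itemset is the population count of the AND of its items' vectors. The function names are hypothetical.

```python
import heapq

def build_bitvectors(transactions):
    """Map each item to an int whose bit t is set if transaction t contains it."""
    vectors = {}
    for tid, items in enumerate(transactions):
        for item in items:
            vectors[item] = vectors.get(item, 0) | (1 << tid)
    return vectors

def support(bits):
    """Number of transactions represented by a bit-vector."""
    return bin(bits).count("1")

def top_k_itemsets(transactions, k):
    """Return the k highest-support itemsets as (itemset, support) pairs."""
    vectors = build_bitvectors(transactions)
    # Deterministic order: most frequent items first, ties broken by item name.
    items = sorted(vectors, key=lambda i: (-support(vectors[i]), i))
    heap = []  # min-heap of (support, itemset); the root is the weakest kept result

    def threshold():
        # The support threshold rises on its own once k results are held.
        return heap[0][0] if len(heap) == k else 1

    def extend(prefix, prefix_bits, start):
        for idx in range(start, len(items)):
            bits = prefix_bits & vectors[items[idx]]
            sup = support(bits)
            if sup < threshold():
                continue  # no superset can have higher support, so prune
            itemset = prefix + (items[idx],)
            if len(heap) < k:
                heapq.heappush(heap, (sup, itemset))
            elif sup > heap[0][0]:
                heapq.heapreplace(heap, (sup, itemset))
            extend(itemset, bits, idx + 1)

    extend((), (1 << len(transactions)) - 1, 0)
    return sorted(((s, sup) for sup, s in heap), key=lambda p: (-p[1], p[0]))
```

The user supplies only `k`. The internal threshold starts at 1 and tightens as better itemsets are found, which is what lets the search prune without a user-chosen minimum support.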

Ramp: Fast Frequent Itemset Mining with Efficient Bit-Vector Projection Technique

Apr 21, 2009

Abstract: Mining frequent itemsets using the bit-vector representation approach is very efficient for dense datasets, but highly inefficient for sparse datasets because of the lack of an efficient bit-vector projection technique. In this paper we present a novel, efficient bit-vector projection technique for both sparse and dense datasets. To check the efficiency of our bit-vector projection technique, we present a new frequent itemset mining algorithm, Ramp (Real Algorithm for Mining Patterns), built upon it. The performance of Ramp is compared with the current best (all, maximal and closed) frequent itemset mining algorithms on benchmark datasets. Experimental results on sparse and dense datasets show that mining frequent itemsets using Ramp is faster than the current best algorithms, which shows the effectiveness of our bit-vector projection idea. We also present FastLMFI, a new approach to local maximal frequent itemset propagation and maximal itemset superset checking, built upon our PBR bit-vector projection technique. Our computational experiments suggest that itemset maximality checking using FastLMFI is faster and more efficient than the previous well-known progressive focusing approach.
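The abstract does not describe how the projection is performed, so the sketch below shows only the general notion of projecting bit-vectors onto a prefix, not the paper's technique. It re-indexes each item's vector onto just the transactions that contain the prefix, so that deeper search nodes work on shorter vectors. The function name `project` and the use of Python integers as bit-vectors are assumptions for illustration.

```python
def project(prefix_bits, item_vectors):
    """Re-index item bit-vectors onto the transactions set in prefix_bits.

    Returns (projected_vectors, width), where width is the number of
    transactions that contain the prefix. Items that never co-occur
    with the prefix are dropped.
    """
    # Transaction ids covered by the prefix, in increasing order.
    tids = [t for t in range(prefix_bits.bit_length()) if (prefix_bits >> t) & 1]
    projected = {}
    for item, bits in item_vectors.items():
        compact = 0
        for new_pos, tid in enumerate(tids):
            if (bits >> tid) & 1:
                compact |= 1 << new_pos
        if compact:
            projected[item] = compact
    return projected, len(tids)
```

On a sparse dataset a prefix typically covers few transactions, so the projected vectors are much shorter than the originals, while the support of any extension of the prefix is unchanged.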

HybridMiner: Mining Maximal Frequent Itemsets Using Hybrid Database Representation Approach

Apr 21, 2009

Abstract: In this paper we present a novel hybrid database representation approach (array-based layout and vertical bitmap layout) for mining complete Maximal Frequent Itemsets (MFI) on sparse and large datasets. Our work is novel in terms of scalability, item search order, and its two projection techniques, horizontal and vertical. We also present a maximal itemset mining algorithm that uses this hybrid database representation approach. Experimental results on real and sparse benchmark datasets show that our approach is better than previous state-of-the-art maximal algorithms.

FastLMFI: An Efficient Approach for Local Maximal Patterns Propagation and Maximal Patterns Superset Checking

Apr 21, 2009

Abstract: Maximal frequent pattern superset checking plays an important role in the efficient mining of complete Maximal Frequent Itemsets (MFI) and in maximal search space pruning. In this paper we present a new indexing approach, FastLMFI, for local maximal frequent pattern (itemset) propagation and maximal pattern superset checking. Experimental results on different sparse and dense datasets show that our work is better than the previous well-known progressive focusing technique. We have also integrated our superset checking approach with an existing state-of-the-art maximal itemset algorithm, Mafia, and compared our results with the current best maximal itemset algorithms, afopt-max and FP (zhu)-max. Our results outperform afopt-max and FP (zhu)-max on dense datasets (chess and mushroom) at almost all support thresholds, which shows the effectiveness of our approach.
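As a rough illustration of what index-based superset checking can look like, the sketch below keeps, for every item, a bitmask of the known maximal itemsets that contain it. A candidate has a known superset exactly when the AND of its items' masks is non-zero. This is a generic indexing idea with hypothetical names (`MaximalIndex`, `has_superset`, `add`), not the FastLMFI data structure, whose details the abstract does not give.

```python
class MaximalIndex:
    """Item-to-bitmask index over the maximal itemsets found so far."""

    def __init__(self):
        self.maximal = []    # stored itemsets, as frozensets
        self.item_mask = {}  # item -> bitmask of positions in self.maximal

    def has_superset(self, itemset):
        """True if some stored itemset contains every item of `itemset`."""
        mask = (1 << len(self.maximal)) - 1  # start with all stored itemsets
        for item in itemset:
            mask &= self.item_mask.get(item, 0)
            if not mask:
                return False  # no stored itemset contains all items seen so far
        return mask != 0

    def add(self, itemset):
        """Store `itemset` unless it is already covered; return True if stored.

        Assumption: candidates arrive in an order where supersets are seen
        before their subsets, so existing entries are never purged here.
        """
        if self.has_superset(itemset):
            return False
        pos = len(self.maximal)
        self.maximal.append(frozenset(itemset))
        for item in itemset:
            self.item_mask[item] = self.item_mask.get(item, 0) | (1 << pos)
        return True
```

Each check costs one mask intersection per item in the candidate rather than one subset comparison per stored itemset, which is why this kind of index is attractive when the number of maximal itemsets grows large.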
