Shangao Lin

Multi-Channel Attentive Feature Fusion for Radio Frequency Fingerprinting

Mar 19, 2023

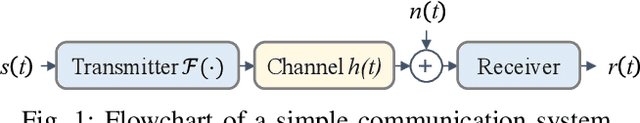

Abstract: Radio frequency fingerprinting (RFF) is a promising device authentication technique for securing the Internet of Things. It exploits the intrinsic and unique hardware impairments of transmitters for RF device identification. In real-world communication systems, hardware impairments across transmitters are subtle and therefore difficult to model explicitly. Recently, owing to the superior performance of deep learning (DL)-based classification models on real-world datasets, DL networks have been explored for RFF. Most existing DL-based RFF models use a single representation of radio signals as the input. A multi-channel input model can leverage information from different representations of radio signals and improve the identification accuracy of the RF fingerprint. In this work, we propose a novel multi-channel attentive feature fusion (McAFF) method for RFF. It utilizes multi-channel neural features extracted from multiple representations of radio signals, including IQ samples, carrier frequency offset, fast Fourier transform coefficients and short-time Fourier transform coefficients, for better RF fingerprint identification. The features extracted from different channels are fused adaptively using a shared attention module, in which the weights of the neural features from multiple channels are learned while training the McAFF model. In addition, we design a signal identification module using a convolution-based ResNeXt block to map the fused features to device identities. To evaluate the identification performance of the proposed method, we construct a WiFi dataset, named WFDI, using commercial WiFi end-devices as the transmitters and a Universal Software Radio Peripheral (USRP) as the receiver. ...
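The adaptive fusion step described in the abstract can be illustrated with a minimal sketch. It is not the McAFF implementation: the function names `softmax` and `attentive_fusion`, the dot-product scoring, and the fixed `shared_query` vector are all assumptions made for illustration, standing in for an attention module whose parameters would be learned during training.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attentive_fusion(channel_features, shared_query):
    """Fuse per-channel feature vectors into one vector.

    channel_features: dict of channel name -> feature vector (list of floats),
        e.g. one vector each for IQ, CFO, FFT and STFT inputs.
    shared_query: hypothetical scoring vector shared by all channels; in a
        trained model this would be a learned parameter, not a constant.
    Returns (weights per channel, fused feature vector).
    """
    names = list(channel_features)
    # One scalar score per channel: dot product with the shared query.
    scores = [
        sum(f * q for f, q in zip(channel_features[name], shared_query))
        for name in names
    ]
    weights = softmax(scores)
    dim = len(shared_query)
    # Weighted sum of the channel vectors, element by element.
    fused = [
        sum(w * channel_features[name][i] for w, name in zip(weights, names))
        for i in range(dim)
    ]
    return dict(zip(names, weights)), fused


# Toy feature vectors (made-up numbers, for illustration only).
features = {
    "iq": [0.2, 0.1, 0.4],
    "cfo": [0.9, 0.3, 0.1],
    "fft": [0.5, 0.5, 0.5],
    "stft": [0.1, 0.8, 0.2],
}
weights, fused = attentive_fusion(features, shared_query=[1.0, 0.5, -0.5])
```

Because the same scoring vector is applied to every channel, the weights are directly comparable across channels and always sum to one, so a channel with a higher score contributes more to the fused vector.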

Learning of Frequency-Time Attention Mechanism for Automatic Modulation Recognition

Nov 05, 2021

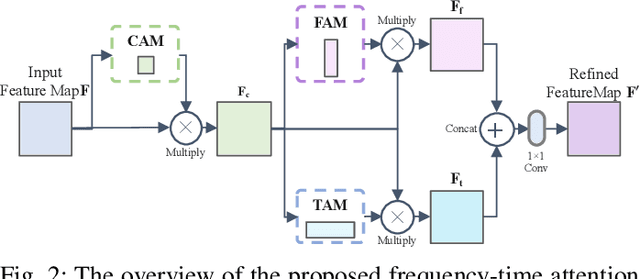

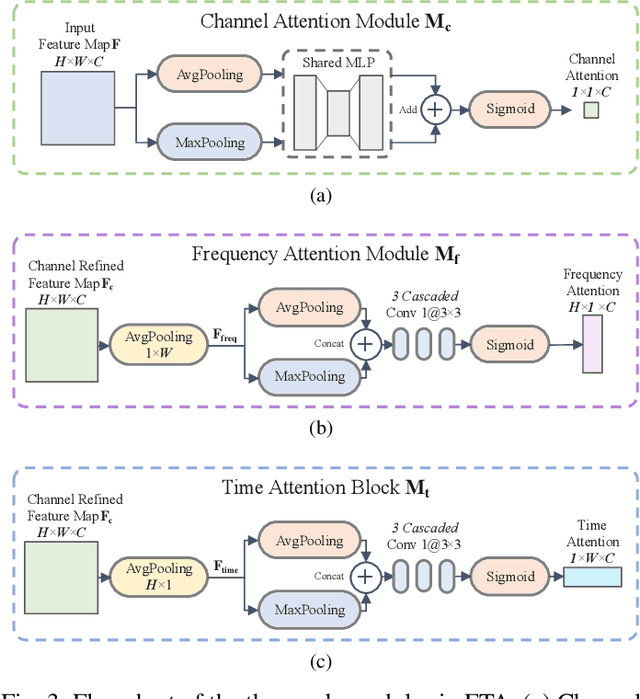

Abstract: Recent learning-based image classification and speech recognition approaches make extensive use of attention mechanisms to achieve state-of-the-art recognition power, which demonstrates the effectiveness of attention mechanisms. Motivated by the fact that the frequency and time information of modulated radio signals is crucial for modulation mode recognition, this paper proposes a frequency-time attention mechanism for a convolutional neural network (CNN)-based modulation recognition framework. The proposed frequency-time attention module is designed to learn which channel, frequency and time information is more meaningful in the CNN for modulation recognition. We analyze the effectiveness of the proposed frequency-time attention mechanism and compare the proposed method with two existing learning-based methods. Experiments on an open-source modulation recognition dataset show that the recognition performance of the proposed framework is better than that of the framework without frequency-time attention and that of the existing learning-based methods.
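The idea of reweighting a feature map along its frequency and time axes can be sketched as follows. This is a simplified illustration under stated assumptions, not the module proposed in the paper: the function name `frequency_time_attention` is hypothetical, the gates come from plain average pooling followed by a sigmoid, and there are no learned parameters or channel attention.

```python
import math


def sigmoid(x):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def frequency_time_attention(feature_map):
    """Rescale a 2-D feature map (rows = frequency bins, columns = time steps).

    Assumption: each gate is the sigmoid of an average-pooled activation.
    A trained module would compute the gates with learned layers instead.
    """
    n_freq = len(feature_map)
    n_time = len(feature_map[0])
    # Frequency gate: pool each row over time.
    freq_gate = [sigmoid(sum(row) / n_time) for row in feature_map]
    # Time gate: pool each column over frequency.
    time_gate = [
        sigmoid(sum(feature_map[f][t] for f in range(n_freq)) / n_freq)
        for t in range(n_time)
    ]
    # Apply both gates to every element of the map.
    return [
        [feature_map[f][t] * freq_gate[f] * time_gate[t] for t in range(n_time)]
        for f in range(n_freq)
    ]


# Toy 2 x 3 map (made-up activations, for illustration only).
toy_map = [
    [1.0, 0.0, 2.0],
    [0.5, 0.5, 0.5],
]
attended = frequency_time_attention(toy_map)
```

Both gates lie strictly between 0 and 1, so the output keeps the shape of the input and only attenuates activations, more strongly in the rows and columns whose pooled activation is low.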
