Shahane Tigranyan

Multi-Track Multimodal Learning on iMiGUE: Micro-Gesture and Emotion Recognition

Dec 29, 2025

Abstract: Micro-gesture recognition and behavior-based emotion prediction are both highly challenging tasks that require modeling subtle, fine-grained human behaviors, primarily leveraging video and skeletal pose data. In this work, we present two multimodal frameworks designed to tackle both problems on the iMiGUE dataset. For micro-gesture classification, we explore the complementary strengths of RGB and 3D pose-based representations to capture nuanced spatio-temporal patterns. To comprehensively represent gestures, video and skeletal embeddings are extracted using MViTv2-S and 2s-AGCN, respectively. They are then integrated through a Cross-Modal Token Fusion module to combine spatial and pose information. For emotion recognition, our framework extends to behavior-based emotion prediction, a binary classification task identifying emotional states based on visual cues. We leverage facial and contextual embeddings extracted using SwinFace and MViTv2-S models and fuse them through an InterFusion module designed to capture emotional expressions and body gestures. Experiments conducted on the iMiGUE dataset, within the scope of the MiGA 2025 Challenge, demonstrate the robust performance and accuracy of our method in the behavior-based emotion prediction task, where our approach secured 2nd place.

Trial and Trust: Addressing Byzantine Attacks with Comprehensive Defense Strategy

May 12, 2025

Abstract: Recent advancements in machine learning have improved performance while also increasing computational demands. While federated and distributed setups address these issues, their structure is vulnerable to malicious influences. In this paper, we address a specific threat, Byzantine attacks, where compromised clients inject adversarial updates to derail global convergence. We combine the trust scores concept with trial function methodology to dynamically filter outliers. Our methods address the critical limitations of previous approaches, allowing functionality even when Byzantine nodes are in the majority. Moreover, our algorithms adapt to widely used scaled methods like Adam and RMSProp, as well as practical scenarios, including local training and partial participation. We validate the robustness of our methods by conducting extensive experiments on both synthetic and real ECG data collected from medical institutions. Furthermore, we provide a broad theoretical analysis of our algorithms and their extensions to the aforementioned practical setups. The convergence guarantees of our methods are comparable to those of classical algorithms developed without Byzantine interference.
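The idea of scoring client updates against a trusted trial function can be illustrated with a toy sketch. This is not the paper's algorithm: the function name `trust_weighted_step`, the scoring rule (the decrease in trial loss from applying one client's update alone), and the plain gradient step are all assumptions chosen for illustration.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def trust_weighted_step(
    params: Vector,
    client_grads: Sequence[Vector],
    trial_loss: Callable[[Vector], float],
    lr: float = 0.1,
) -> Vector:
    """One server step that weights client updates by a trust score.

    Hypothetical scoring rule: a client's score is how much its update,
    applied alone, lowers a loss evaluated on trusted server-side data.
    """
    base = trial_loss(params)
    scores = []
    for grad in client_grads:
        candidate = [p - lr * g for p, g in zip(params, grad)]
        # Updates that do not lower the trial loss get zero trust.
        scores.append(max(0.0, base - trial_loss(candidate)))

    total = sum(scores)
    if total == 0.0:
        # No client helped on the trial data, so skip this round.
        return list(params)

    weights = [s / total for s in scores]
    aggregated = [
        sum(w * grad[i] for w, grad in zip(weights, client_grads))
        for i in range(len(params))
    ]
    return [p - lr * a for p, a in zip(params, aggregated)]
```

Because each update is judged on its own against the trial loss rather than against the other clients, a single honest client can still drive progress in this sketch when most clients are adversarial, which is the setting the abstract highlights.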

Self-Trained Model for ECG Complex Delineation

Jun 04, 2024

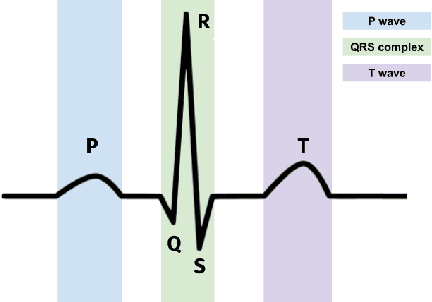

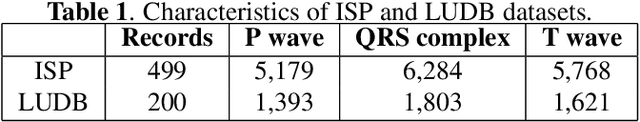

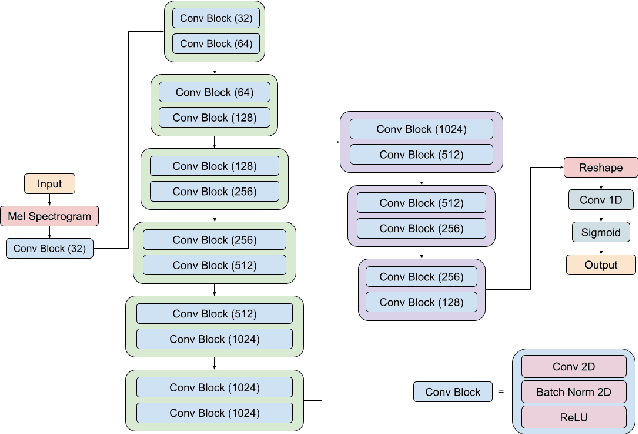

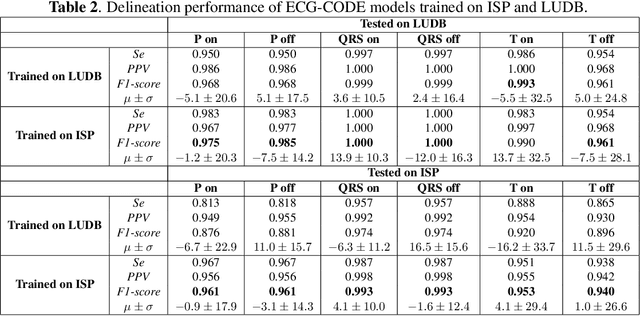

Abstract: Electrocardiogram (ECG) delineation plays a crucial role in assisting cardiologists with accurate diagnoses. Prior research studies have explored various methods, including the application of deep learning techniques, to achieve precise delineation. However, existing approaches face limitations primarily related to dataset size and robustness. In this paper, we introduce a dataset for ECG delineation and propose a novel self-trained method aimed at leveraging a vast amount of unlabeled ECG data. Our approach involves the pseudolabeling of unlabeled data using a neural network trained on our dataset. Subsequently, we train the model on the newly labeled samples to enhance the quality of delineation. We conduct experiments demonstrating that our dataset is a valuable resource for training robust models and that our proposed self-trained method improves the prediction quality of ECG delineation.
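The pseudolabel-then-retrain loop described in the abstract can be sketched generically. The sketch below is a minimal illustration, not the authors' pipeline: the names `self_train` and `fit_centroids`, the confidence threshold, and the toy one-dimensional nearest-centroid classifier standing in for the delineation network are all assumptions.

```python
from typing import Callable, List, Sequence, Tuple

# A model maps one sample to (predicted label, confidence in [0, 1]).
Model = Callable[[float], Tuple[int, float]]


def fit_centroids(xs: Sequence[float], ys: Sequence[int]) -> Model:
    """Toy stand-in for a neural network: 1D nearest-centroid classifier.

    Assumes at least two distinct classes are present in ys.
    """
    labels = list(ys)
    centroids = {
        c: sum(x for x, y in zip(xs, labels) if y == c) / labels.count(c)
        for c in set(labels)
    }

    def predict(x: float) -> Tuple[int, float]:
        dists = sorted((abs(x - m), c) for c, m in centroids.items())
        (d_near, label), (d_next, _) = dists[0], dists[1]
        total = d_near + d_next
        # Confidence grows as the sample moves toward its nearest centroid.
        conf = 1.0 if total == 0 else d_next / total
        return label, conf

    return predict


def self_train(
    fit: Callable[[Sequence[float], Sequence[int]], Model],
    labeled_x: Sequence[float],
    labeled_y: Sequence[int],
    unlabeled_x: Sequence[float],
    threshold: float = 0.75,  # assumed filter; not stated in the abstract
    rounds: int = 1,
) -> Model:
    """Train on labeled data, pseudolabel unlabeled data, then retrain."""
    model = fit(labeled_x, labeled_y)
    for _ in range(rounds):
        xs: List[float] = list(labeled_x)
        ys: List[int] = list(labeled_y)
        for x in unlabeled_x:
            label, conf = model(x)
            if conf >= threshold:
                # Keep only pseudolabels the current model is confident about.
                xs.append(x)
                ys.append(label)
        model = fit(xs, ys)
    return model
```

A call such as `self_train(fit_centroids, [0.0, 10.0], [0, 1], [1.0, 2.0, 8.0, 9.0, 5.0])` retrains on the confidently pseudolabeled points and leaves out the ambiguous sample at 5.0.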
