Shafa Balaram

Consistency-Based Semi-supervised Evidential Active Learning for Diagnostic Radiograph Classification

Sep 05, 2022

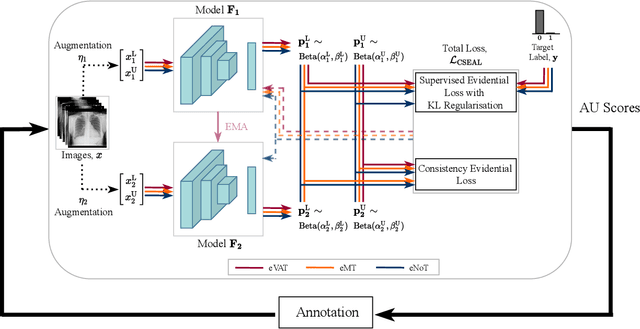

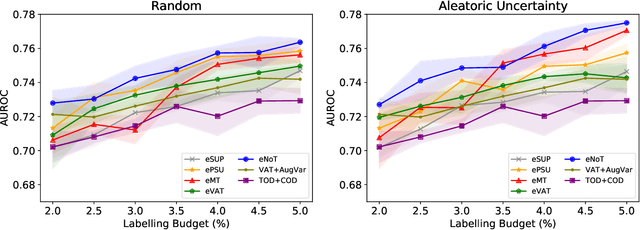

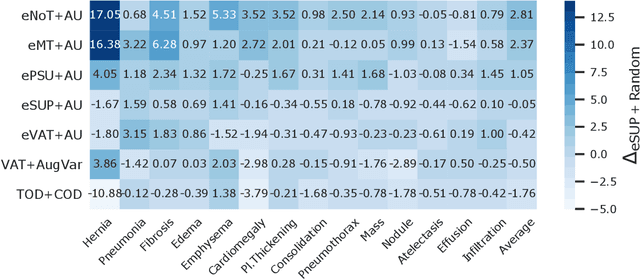

Abstract: Deep learning approaches achieve state-of-the-art performance for classifying radiology images, but rely on large labelled datasets that require resource-intensive annotation by specialists. Both semi-supervised learning and active learning can be utilised to mitigate this annotation burden. However, there is limited work on combining the advantages of semi-supervised and active learning approaches for multi-label medical image classification. Here, we introduce a novel Consistency-based Semi-supervised Evidential Active Learning framework (CSEAL). Specifically, we leverage predictive uncertainty based on theories of evidence and subjective logic to develop an end-to-end integrated approach that combines consistency-based semi-supervised learning with uncertainty-based active learning. We apply our approach to enhance four leading consistency-based semi-supervised learning methods: Pseudo-labelling, Virtual Adversarial Training, Mean Teacher and NoTeacher. Extensive evaluations on multi-label Chest X-Ray classification tasks demonstrate that CSEAL achieves substantive performance improvements over two leading semi-supervised active learning baselines. Further, a class-wise breakdown of results shows that our approach can substantially improve accuracy on rarer abnormalities with fewer labelled samples.
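
As a rough illustration of the evidential uncertainty mentioned in the abstract, the sketch below computes a subjective-logic binomial opinion for each label from non-negative evidence (Beta parameters α = e + 1, strength S = Σα, uncertainty u = K/S with K = 2) and then ranks unlabelled images by uncertainty for annotation. The function names, the mean-over-labels aggregation and the top-k query rule are hypothetical choices made for illustration; this is a minimal sketch under those assumptions, not CSEAL's published procedure.

```python
from typing import List, Sequence, Tuple


def binomial_opinion(e_pos: float, e_neg: float) -> Tuple[float, float, float]:
    """Subjective-logic binomial opinion for one label from non-negative evidence.

    Returns (belief_positive, belief_negative, uncertainty); the three sum to 1.
    """
    if e_pos < 0 or e_neg < 0:
        raise ValueError("evidence must be non-negative")
    # Beta (K = 2 Dirichlet) parameters alpha_k = e_k + 1; strength S = sum of alphas.
    strength = (e_pos + 1.0) + (e_neg + 1.0)
    # Beliefs are evidence / S; uncertainty is K / S with K = 2 outcomes.
    return e_pos / strength, e_neg / strength, 2.0 / strength


def image_uncertainty(evidence: Sequence[Tuple[float, float]]) -> float:
    """Hypothetical aggregation: mean per-label uncertainty of a multi-label image."""
    us = [binomial_opinion(p, n)[2] for p, n in evidence]
    return sum(us) / len(us)


def select_for_labelling(pool: Sequence[Sequence[Tuple[float, float]]], budget: int) -> List[int]:
    """Hypothetical query rule: indices of the `budget` most uncertain unlabelled images."""
    scores = [image_uncertainty(ev) for ev in pool]
    ranked = sorted(range(len(pool)), key=lambda i: scores[i], reverse=True)
    return ranked[:budget]
```

For example, an image with evidence (9, 0) and (0, 9) on its two labels has uncertainty 2/11 per label, whereas one with evidence (0.5, 0.5) and (1, 0) has 2/3 per label, so the latter is queried first. Low total evidence, rather than a near-0.5 probability alone, is what drives the uncertainty score here.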

Semi-supervised classification of radiology images with NoTeacher: A Teacher that is not Mean

Aug 10, 2021

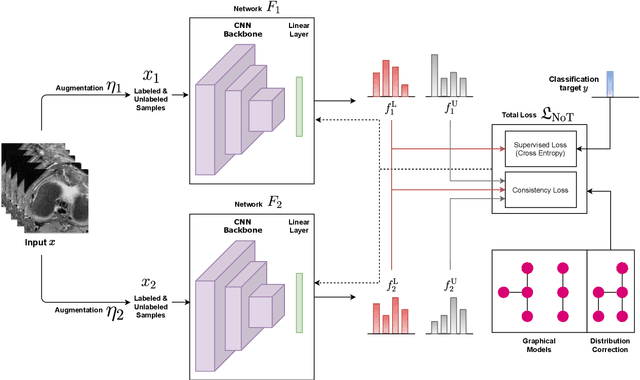

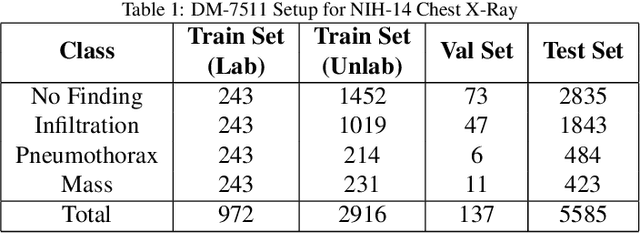

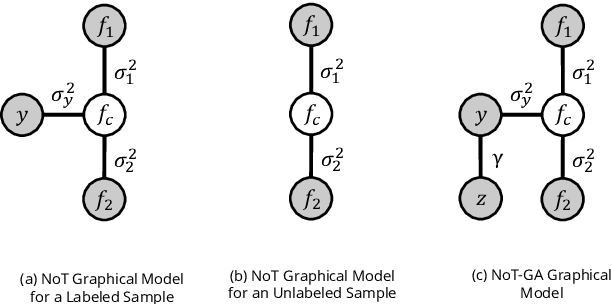

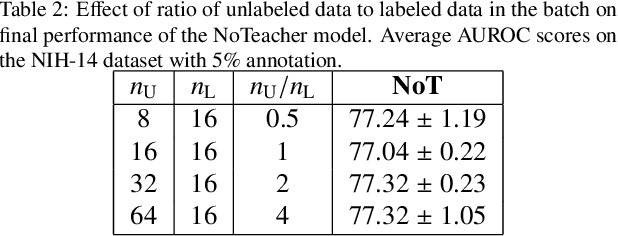

Abstract: Deep learning models achieve strong performance for radiology image classification, but their practical application is bottlenecked by the need for large labeled training datasets. Semi-supervised learning (SSL) approaches leverage small labeled datasets alongside larger unlabeled datasets and offer potential for reducing labeling cost. In this work, we introduce NoTeacher, a novel consistency-based SSL framework which incorporates probabilistic graphical models. Unlike Mean Teacher, which maintains a teacher network updated via a temporal ensemble, NoTeacher employs two independent networks, thereby eliminating the need for a teacher network. We demonstrate how NoTeacher can be customized to handle a range of challenges in radiology image classification. Specifically, we describe adaptations for scenarios with 2D and 3D inputs, uni- and multi-label classification, and class distribution mismatch between labeled and unlabeled portions of the training data. In realistic empirical evaluations on three public benchmark datasets spanning the workhorse modalities of radiology (X-Ray, CT, MRI), we show that NoTeacher achieves over 90-95% of the fully supervised AUROC with less than 5-15% labeling budget. Further, NoTeacher outperforms established SSL methods with minimal hyperparameter tuning, and has implications as a principled and practical option for semi-supervised learning in radiology applications.
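
To make the contrast in the abstract concrete, the sketch below shows the two ingredients in their simplest generic form: the exponential-moving-average (temporal ensemble) weight update that Mean Teacher uses to maintain its teacher, and a plain mean-squared consistency term between the predictions of two networks on the same unlabelled input. NoTeacher's actual objective is derived from a probabilistic graphical model that the abstract does not spell out, so the MSE term here is only a simplified stand-in; weights are flat lists of floats and the function names are hypothetical, chosen for illustration.

```python
from typing import List, Sequence


def ema_update(teacher: Sequence[float], student: Sequence[float], decay: float = 0.99) -> List[float]:
    """Mean Teacher-style temporal ensemble: teacher <- decay * teacher + (1 - decay) * student."""
    if not 0.0 <= decay <= 1.0:
        raise ValueError("decay must lie in [0, 1]")
    if len(teacher) != len(student):
        raise ValueError("weight vectors must have equal length")
    return [decay * t + (1.0 - decay) * s for t, s in zip(teacher, student)]


def consistency_mse(p_a: Sequence[float], p_b: Sequence[float]) -> float:
    """Simplified stand-in consistency term: mean squared difference between
    two networks' predicted probabilities on the same unlabelled input."""
    if len(p_a) != len(p_b) or not p_a:
        raise ValueError("prediction vectors must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(p_a, p_b)) / len(p_a)
```

With a teacher, only the student receives gradients and the teacher trails it through `ema_update`; in a two-network setup, both networks are trained and a term like `consistency_mse` pulls their predictions on unlabelled data towards each other, so no separate teacher needs to be maintained.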