Seyed Pooya Shariatpanahi

Subgoal Discovery Using a Free Energy Paradigm and State Aggregations

Dec 21, 2024

Abstract: Reinforcement learning (RL) plays a major role in solving complex sequential decision-making tasks. Hierarchical and goal-conditioned RL are promising methods for dealing with two major problems in RL, namely sample inefficiency and difficulties in reward shaping. These methods tackle the mentioned problems by decomposing a task into simpler subtasks and temporally abstracting a task in the action space. One of the key components for task decomposition of these methods is subgoal discovery. We can use the subgoal states to define hierarchies of actions and also use them in decomposing complex tasks. Under the assumption that subgoal states are more unpredictable, we propose a free energy paradigm to discover them. This is achieved by using free energy to select between two spaces, the main space and an aggregation space. The *model changes* from neighboring states to a given state show the unpredictability of that state, and they are therefore used in this paper for subgoal discovery. Our empirical results on navigation tasks like grid-world environments show that our proposed method can be applied for subgoal discovery without prior knowledge of the task. Our proposed method is also robust to the stochasticity of environments.

Semi-Supervised Learning Approach for Efficient Resource Allocation with Network Slicing in O-RAN

Jan 16, 2024

Abstract: The Open Radio Access Network (O-RAN) technology has emerged as a promising solution for network operators, providing them with an open and favorable environment. Ensuring effective coordination of x-applications (xAPPs) is crucial to enhance flexibility and optimize network performance within the O-RAN. In this paper, we introduce an innovative approach to the resource allocation problem, aiming to coordinate multiple independent xAPPs for network slicing and resource allocation in O-RAN. Our proposed method focuses on maximizing the weighted throughput among user equipments (UE), as well as allocating physical resource blocks (PRBs). We prioritize two service types, namely enhanced Mobile Broadband and Ultra Reliable Low Latency Communication. To achieve this, we have designed two xAPPs: a power control xAPP for each UE and a PRB allocation xAPP. The proposed method consists of a two-part training phase, where the first part uses supervised learning with a Variational Autoencoder trained to regress the power transmission as well as the user association and PRB allocation decisions, and the second part uses unsupervised learning with a contrastive loss approach to improve the generalization and robustness of the model. We evaluate the performance of our proposed method by comparing its results to those obtained from an exhaustive search algorithm, deep Q-network algorithm, and by reporting performance metrics for the regression task. We also evaluate the proposed model's performance in different scenarios among the service types. The results show that the proposed method is a more efficient and effective solution for network slicing problems compared to state-of-the-art methods.

Reinforcement Learning with Subspaces using Free Energy Paradigm

Dec 13, 2020

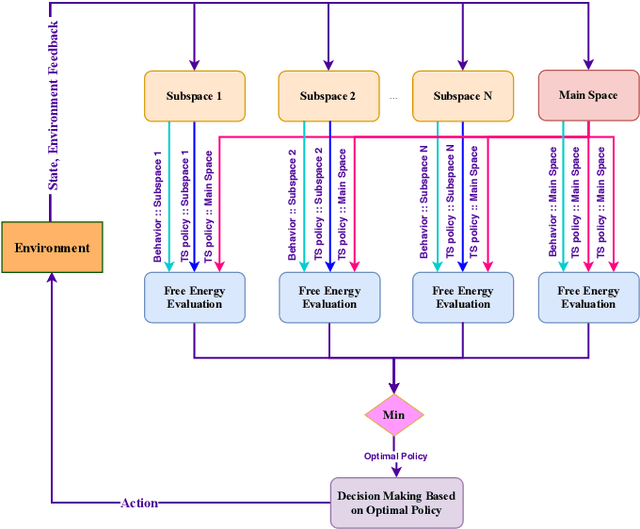

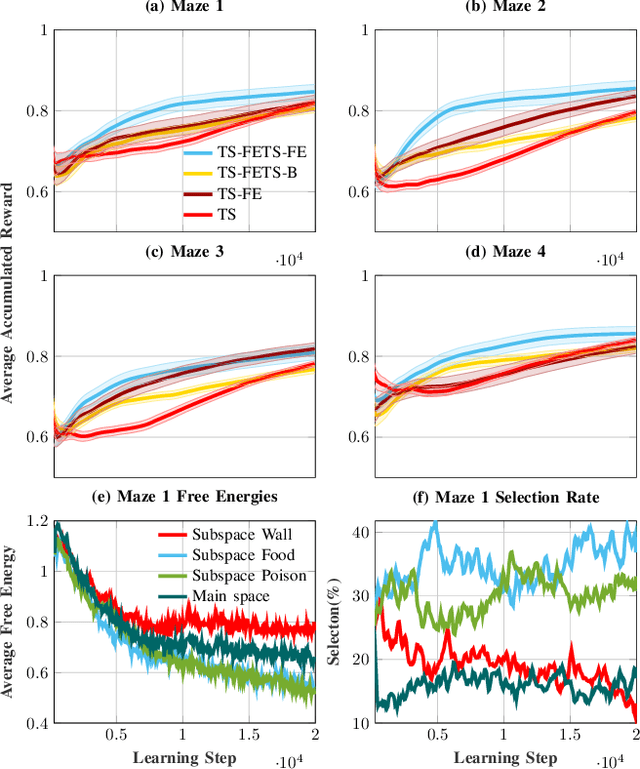

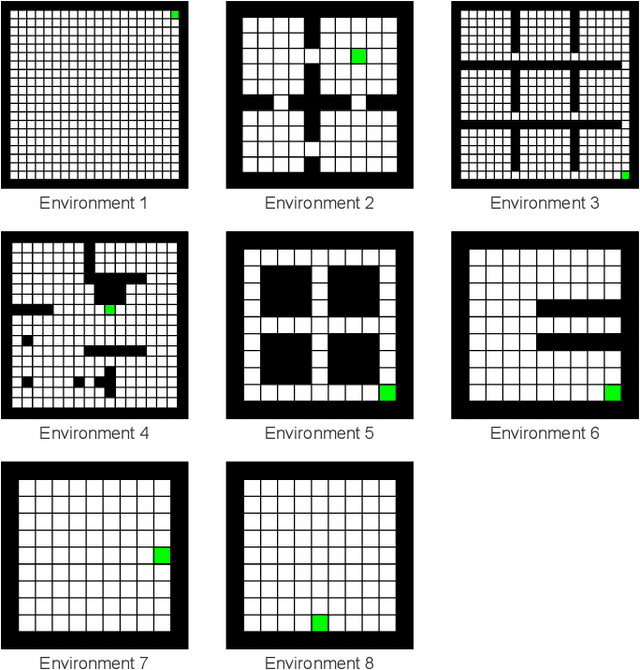

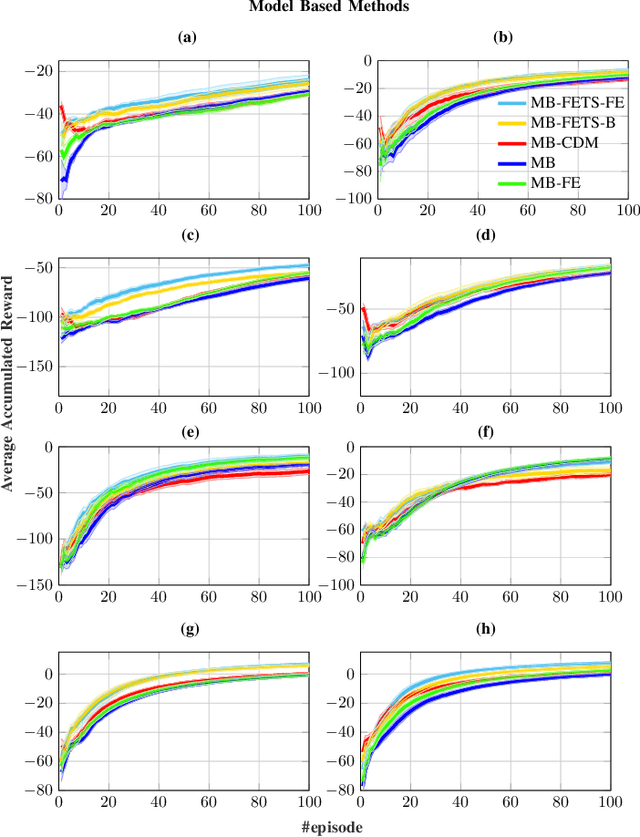

Abstract: In large-scale problems, standard reinforcement learning algorithms suffer from slow learning speed. In this paper, we follow the framework of using subspaces to tackle this problem. We propose a free-energy minimization framework for selecting the subspaces and integrate the policy of the state-space into the subspaces. Our proposed free-energy minimization framework rests upon Thompson sampling policy and behavioral policy of subspaces and the state-space. It is therefore applicable to a variety of tasks, discrete or continuous state space, model-free and model-based tasks. Through a set of experiments, we show that this general framework highly improves the learning speed. We also provide a convergence proof.
