Reinforcement Learning with Subspaces using Free Energy Paradigm


Dec 13, 2020

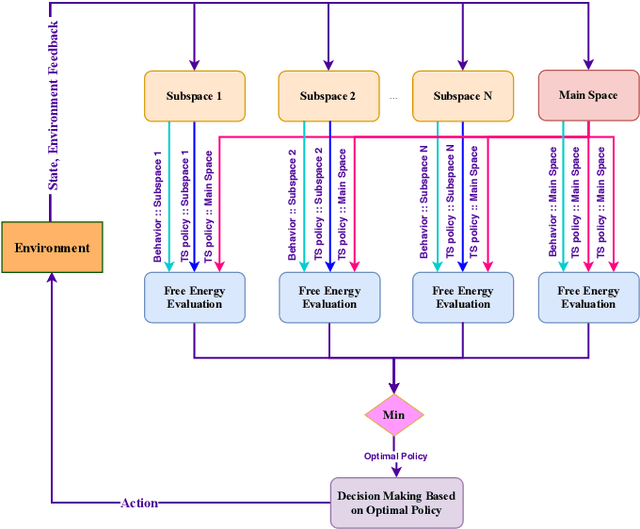

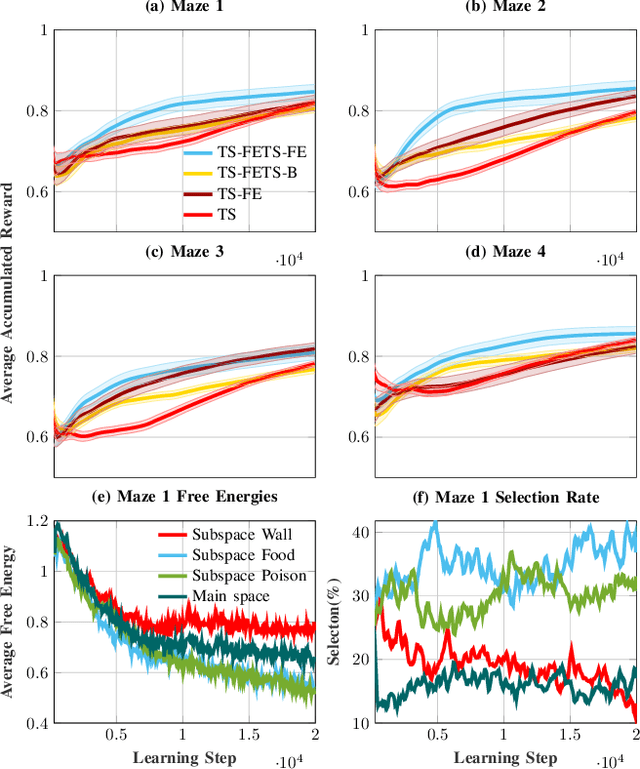

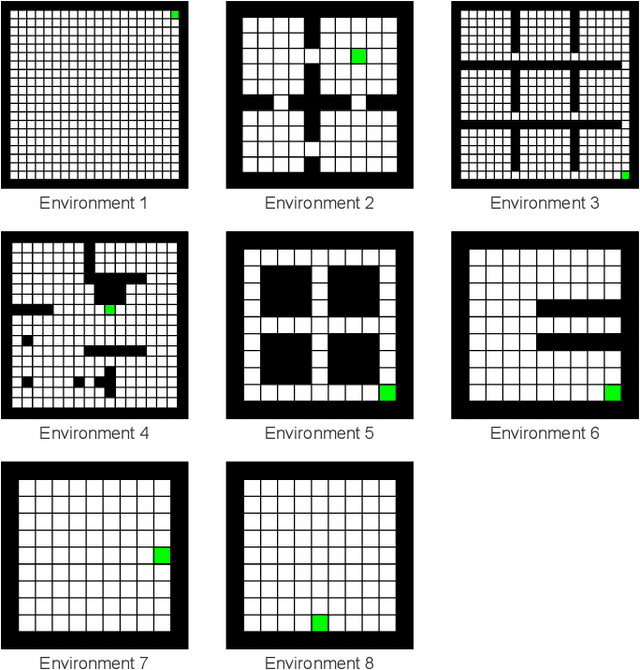

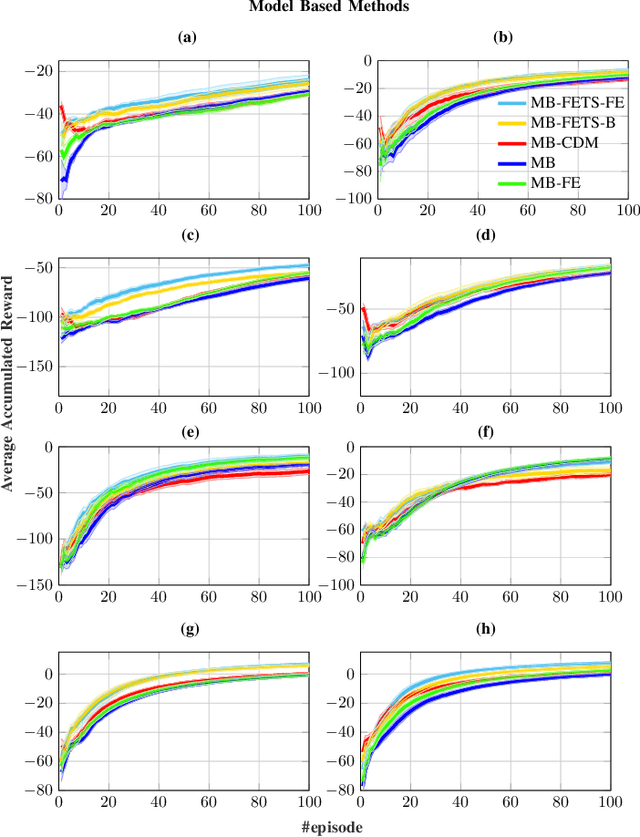

In large-scale problems, standard reinforcement learning algorithms suffer from slow learning. In this paper, we follow the framework of using subspaces to tackle this problem. We propose a free-energy minimization framework for selecting the subspaces, and we integrate the policy of the state space into the subspaces. The proposed framework rests upon the Thompson sampling policy and the behavioral policies of the subspaces and the state space. It is therefore applicable to a variety of tasks: discrete or continuous state spaces, and model-free or model-based settings. Through a set of experiments, we show that this general framework substantially improves learning speed. We also provide a convergence proof.
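The abstract does not give the exact free-energy objective. The following is only a minimal sketch under stated assumptions, not the authors' method. It assumes the standard bounded-rationality free energy F = -(1/β) log Σ_a ρ(a) exp(β Q(a)), which is the minimum over policies π of E_π[-Q] + (1/β) KL(π ‖ ρ). It also assumes that the prior ρ for each "view" (the full state space or a subspace) is a Thompson sampling policy estimated from hypothetical Gaussian posteriors over Q-values, and that the view with the lowest free energy is the one selected. All function names (`thompson_policy`, `free_energy`, `boltzmann_policy`, `select_view`) and the parameter `beta` are hypothetical.

```python
import numpy as np


def thompson_policy(q_mean, q_std, n_samples=2000, rng=None):
    """Monte Carlo estimate of P(action a is optimal) under independent
    Gaussian posteriors N(q_mean[a], q_std[a]**2). The posterior model is an
    assumption made for this sketch."""
    rng = np.random.default_rng(0) if rng is None else rng
    samples = rng.normal(q_mean, q_std, size=(n_samples, len(q_mean)))
    counts = np.bincount(samples.argmax(axis=1), minlength=len(q_mean))
    # Add-one smoothing keeps every action's prior probability above zero.
    return (counts + 1.0) / (n_samples + len(q_mean))


def free_energy(q_values, prior, beta):
    """F = -(1/beta) * log sum_a prior[a] * exp(beta * q[a]), computed with
    the log-sum-exp trick for numerical stability."""
    z = beta * np.asarray(q_values, dtype=float)
    m = z.max()
    return -(m + np.log(np.sum(prior * np.exp(z - m)))) / beta


def boltzmann_policy(q_values, prior, beta):
    """The policy that attains the free energy: pi(a) ∝ prior[a] * exp(beta * q[a])."""
    z = beta * np.asarray(q_values, dtype=float)
    w = prior * np.exp(z - z.max())
    return w / w.sum()


def select_view(views, beta):
    """views maps a view name to (q_mean, q_std) for the current state.
    Returns the name of the view with the lowest free energy, that free
    energy, and the corresponding behavioral policy."""
    best_name, best_f, best_pi = None, np.inf, None
    for name, (q_mean, q_std) in views.items():
        prior = thompson_policy(np.asarray(q_mean), np.asarray(q_std))
        f = free_energy(q_mean, prior, beta)
        if f < best_f:
            best_name, best_f, best_pi = name, f, boltzmann_policy(q_mean, prior, beta)
    return best_name, best_f, best_pi


if __name__ == "__main__":
    views = {
        "state_space": (np.array([1.0, 0.0]), np.array([0.1, 0.1])),
        "subspace": (np.array([0.2, 0.1]), np.array([0.1, 0.1])),
    }
    name, f, pi = select_view(views, beta=5.0)
    print(name, round(f, 3), pi)
```

In this toy setting, a view whose Thompson prior is confident about a high-value action gets a lower free energy. Note that comparing raw Q-values across views only makes sense if they are on a common scale, which is another assumption of the sketch.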

* 12 pages, preprint
