Seyed Amir Hossein Hosseini

Scan-Specific MRI Reconstruction using Zero-Shot Physics-Guided Deep Learning

Feb 15, 2021

Abstract: Physics-guided deep learning (PG-DL) has emerged as a powerful tool for accelerated MRI reconstruction, but it often requires a database of fully-sampled measurements for training. Recent self-supervised and unsupervised learning approaches enable training without fully-sampled data. However, a database of undersampled measurements may not be available in many scenarios, especially for scans involving contrast or recently developed sequences, necessitating new methodology for scan-specific PG-DL reconstructions. A main challenge in developing scan-specific PG-DL methods is their large number of parameters, which makes them prone to over-fitting. Moreover, database-trained models may not generalize to unseen measurements that differ in terms of SNR, image contrast or sampling pattern. In this work, we propose a zero-shot self-supervised learning approach to perform scan-specific PG-DL reconstruction to tackle these issues. The proposed approach splits the available measurements for each scan into three disjoint sets. Two of these sets are used to enforce data consistency and define the loss during training, while the last set is used to establish an early stopping criterion. When models pre-trained on a database are available, we show that the proposed approach can be adapted as scan-specific fine-tuning via transfer learning to further improve reconstruction quality.
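The three-way split described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the uniform random selection, the fractions `frac_loss` and `frac_val`, and the patience-based stopping rule in `should_stop` are all assumptions made for illustration, since the abstract only states that three disjoint sets are used for data consistency, loss, and early stopping.

```python
import numpy as np

def split_three_way(mask, frac_loss=0.2, frac_val=0.2, seed=0):
    """Partition acquired k-space locations into three disjoint boolean masks.

    Returns (dc_mask, loss_mask, val_mask). The fractions and the uniform
    random selection are illustrative assumptions, not values from the paper.
    """
    mask = np.asarray(mask, dtype=bool)
    rng = np.random.default_rng(seed)
    acquired = np.flatnonzero(mask)      # flat indices of sampled points
    rng.shuffle(acquired)                # random order, in place
    n_val = int(round(frac_val * acquired.size))
    n_loss = int(round(frac_loss * acquired.size))

    def to_mask(idx):
        m = np.zeros(mask.size, dtype=bool)
        m[idx] = True
        return m.reshape(mask.shape)

    val_mask = to_mask(acquired[:n_val])
    loss_mask = to_mask(acquired[n_val:n_val + n_loss])
    dc_mask = to_mask(acquired[n_val + n_loss:])
    return dc_mask, loss_mask, val_mask

def should_stop(val_losses, patience=5):
    """Hypothetical early-stopping rule: stop once the validation loss has
    not improved on its earlier best for `patience` consecutive epochs."""
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    return min(val_losses[-patience:]) >= best_before

# Toy undersampling mask (about 25% of a 64x64 grid is "acquired").
toy_mask = np.random.default_rng(1).random((64, 64)) < 0.25
dc_mask, loss_mask, val_mask = split_three_way(toy_mask)
```

During training, only `dc_mask` and `loss_mask` would enter the network and the loss; the error on `val_mask` would be tracked per epoch and passed to a rule such as `should_stop`.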

Improved Supervised Training of Physics-Guided Deep Learning Image Reconstruction with Multi-Masking

Oct 26, 2020

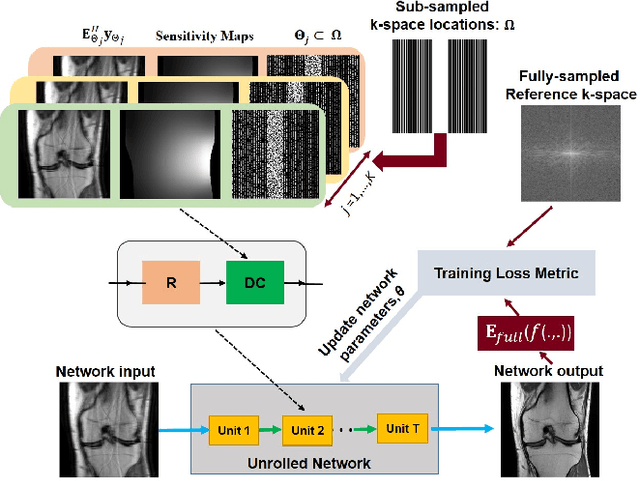

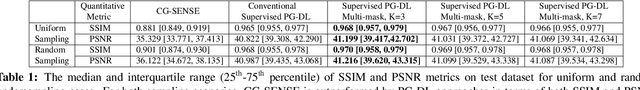

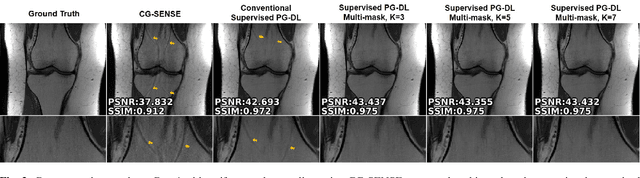

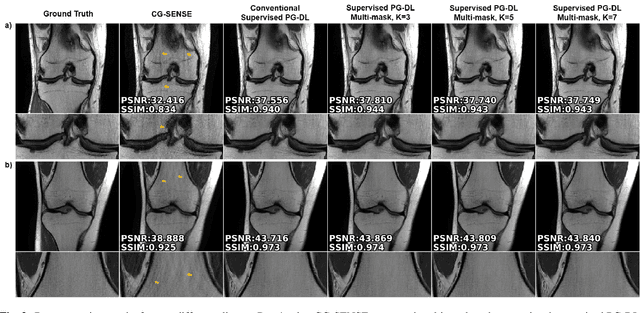

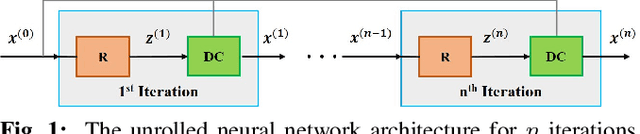

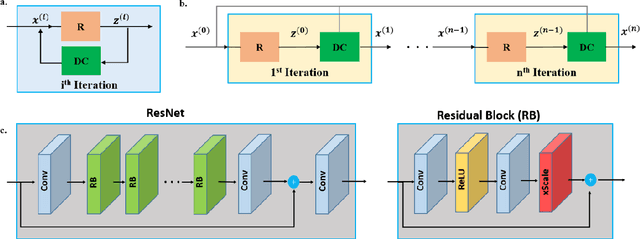

Abstract: Physics-guided deep learning (PG-DL) via algorithm unrolling has received significant interest for improved image reconstruction, including MRI applications. These methods unroll an iterative optimization algorithm into a series of regularizer and data consistency units. The unrolled networks are typically trained end-to-end using a supervised approach. Current supervised PG-DL approaches use all of the available sub-sampled measurements in their data consistency units. Thus, the network learns to fit the rest of the measurements. In this study, we propose to improve the performance and robustness of supervised training by introducing randomness: only a retrospectively selected subset of the available measurements is used in the data consistency units. The process is repeated multiple times using different random masks during training for further enhancement. Results on knee MRI show that the proposed multi-mask supervised PG-DL enhances reconstruction performance compared to conventional supervised PG-DL approaches.

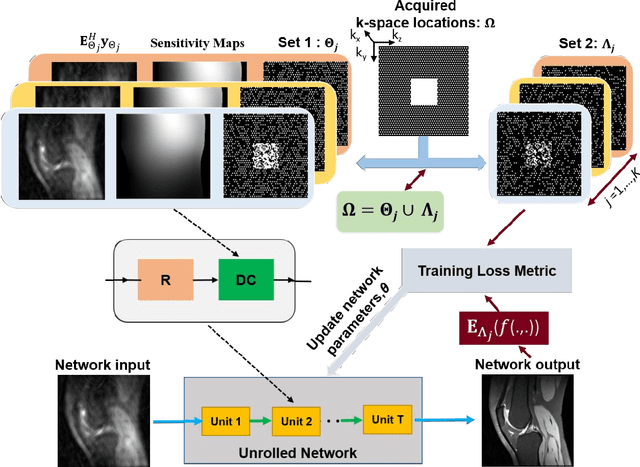

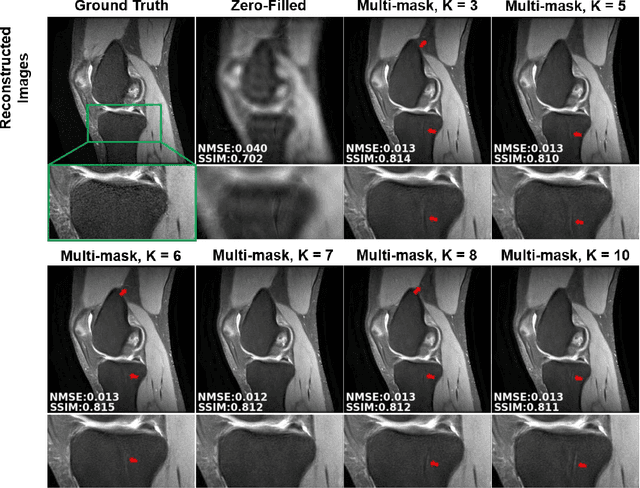

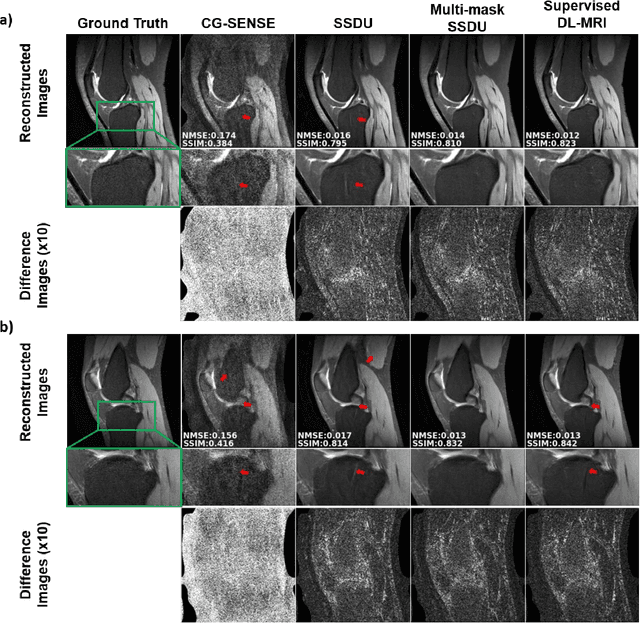

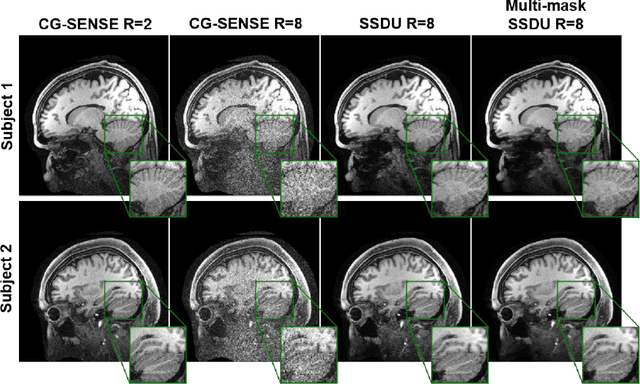

Multi-Mask Self-Supervised Learning for Physics-Guided Neural Networks in Highly Accelerated MRI

Aug 13, 2020

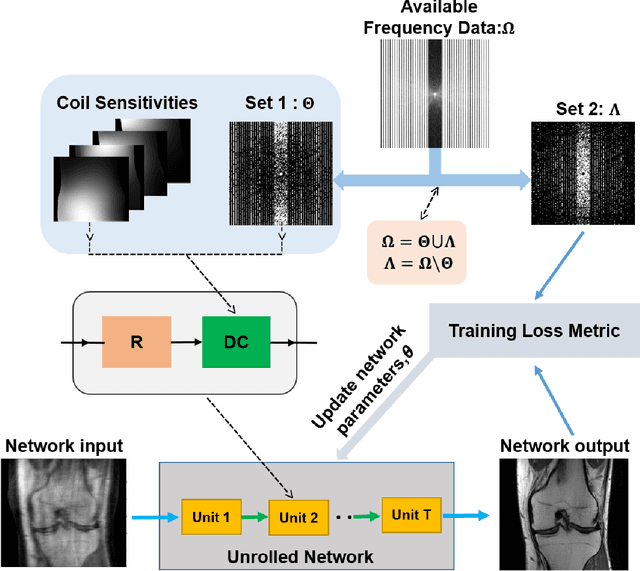

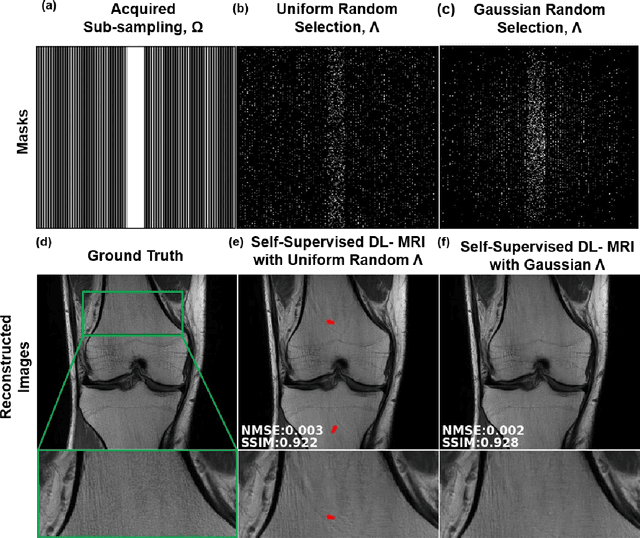

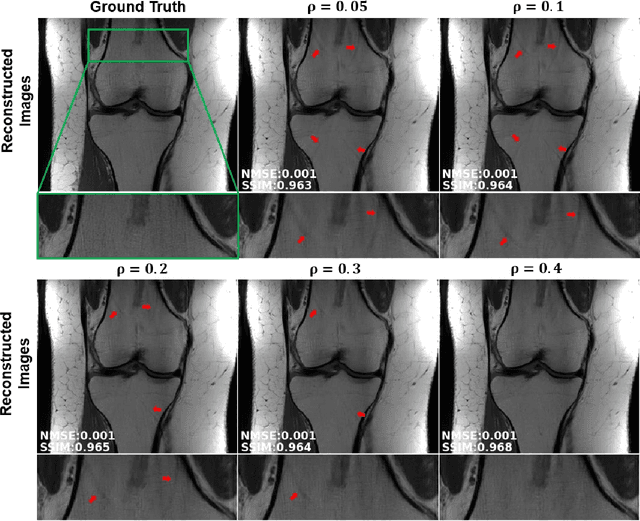

Abstract: Purpose: To develop an improved self-supervised learning strategy that efficiently uses the acquired data for training a physics-guided reconstruction network without a database of fully-sampled data. Methods: Current self-supervised learning for physics-guided reconstruction networks splits the acquired undersampled data into two disjoint sets, where one is used for data consistency (DC) in the unrolled network and the other to define the training loss. The proposed multi-mask self-supervised learning via data undersampling (SSDU) splits the acquired measurements into multiple pairs of disjoint sets for each training sample, using one set of each pair for the DC units and the other for defining the loss, thereby using the undersampled data more efficiently. Multi-mask SSDU is applied to fully-sampled 3D knee and prospectively undersampled 3D brain MRI datasets, which are retrospectively subsampled to acceleration rate (R)=8, and compared to CG-SENSE and single-mask SSDU DL-MRI, as well as supervised DL-MRI when fully-sampled data is available. Results: Results on knee MRI show that the proposed multi-mask SSDU outperforms SSDU and performs closely with supervised DL-MRI, while significantly outperforming CG-SENSE. A clinical reader study further ranks the multi-mask SSDU higher than supervised DL-MRI in terms of SNR and aliasing artifacts. Results on brain MRI show that multi-mask SSDU achieves better reconstruction quality compared to SSDU and CG-SENSE. The reader study demonstrates that multi-mask SSDU at R=8 significantly improves reconstruction compared to single-mask SSDU at R=8, as well as CG-SENSE at R=2. Conclusion: The proposed multi-mask SSDU approach enables improved training of physics-guided neural networks without fully-sampled data, by enabling efficient use of the undersampled data with multiple masks.
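As a rough illustration of the multi-mask idea, the sketch below draws several (DC, loss) mask pairs from one acquired sampling pattern. The function name `multi_mask_pairs`, the uniform random selection, and the values of `num_masks` and `loss_frac` are assumptions for this example; the abstract does not specify how the masks are drawn.

```python
import numpy as np

def multi_mask_pairs(mask, num_masks=4, loss_frac=0.4, seed=0):
    """Return `num_masks` pairs (dc_mask, loss_mask) for one training sample.

    Within each pair the two masks are disjoint and together cover exactly
    the acquired locations in `mask`. Different pairs may overlap each other.
    """
    mask = np.asarray(mask, dtype=bool)
    rng = np.random.default_rng(seed)
    acquired = np.flatnonzero(mask)
    n_loss = int(round(loss_frac * acquired.size))
    pairs = []
    for _ in range(num_masks):
        # Hold out a fresh random subset of acquired points for the loss.
        loss_idx = rng.choice(acquired, size=n_loss, replace=False)
        loss_mask = np.zeros(mask.size, dtype=bool)
        loss_mask[loss_idx] = True
        loss_mask = loss_mask.reshape(mask.shape)
        dc_mask = mask & ~loss_mask      # remaining points feed the DC units
        pairs.append((dc_mask, loss_mask))
    return pairs

# Toy sampling pattern standing in for an undersampled acquisition.
toy_mask = np.random.default_rng(2).random((32, 32)) < 0.3
pairs = multi_mask_pairs(toy_mask, num_masks=3)
```

Each pair would be treated as a separate training instance for the same scan, so the same acquired data contributes to both the DC units and the loss under different partitions.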

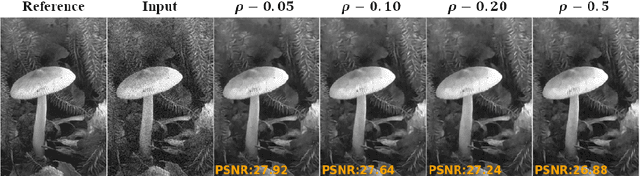

Noise2Inpaint: Learning Referenceless Denoising by Inpainting Unrolling

Jun 16, 2020

Abstract: Deep learning based image denoising methods have recently become popular due to their improved performance. Traditionally, these methods are trained in a supervised manner, requiring a set of noisy input and clean target image pairs. More recently, self-supervised approaches have been proposed to learn denoising from noisy images only, without requiring clean ground truth during training. Succinctly, these methods assume that an image pixel is correlated with its neighboring pixels, while the noise is independent. In this work, building on these approaches and recent methods from image reconstruction, we introduce Noise2Inpaint (N2I), a training approach that recasts the denoising problem into a regularized image inpainting framework. This allows us to use an objective function that can incorporate different statistical properties of the noise as needed. We use algorithm unrolling to unroll an iterative optimization for solving this objective function and train the unrolled network end-to-end. The training is self-supervised without requiring clean target images, where pixels in the noisy image are split into two disjoint sets. One of these is used to impose data fidelity in the unrolled network, while the other one defines the loss. We demonstrate that N2I performs successful denoising on real-world datasets, while preserving details better than its self-supervised counterpart Noise2Void.

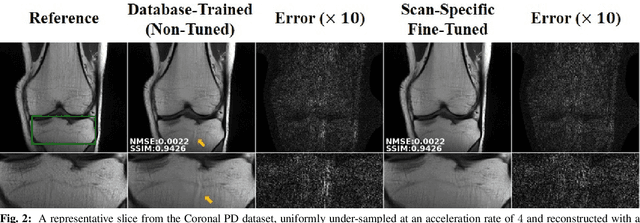

High-Fidelity Accelerated MRI Reconstruction by Scan-Specific Fine-Tuning of Physics-Based Neural Networks

May 12, 2020

Abstract: Long scan duration remains a challenge for high-resolution MRI. Deep learning has emerged as a powerful means for accelerated MRI reconstruction by providing data-driven regularizers that are directly learned from data. These data-driven priors typically remain unchanged for future data in the testing phase once they are learned during training. In this study, we propose to use a transfer learning approach to fine-tune these regularizers for new subjects using a self-supervision approach. While the proposed approach can compromise the extremely fast reconstruction time of deep learning MRI methods, our results on knee MRI indicate that such adaptation can substantially reduce the remaining artifacts in reconstructed images. In addition, the proposed approach has the potential to reduce the risks of generalization to rare pathological conditions, which may be unavailable in the training data.

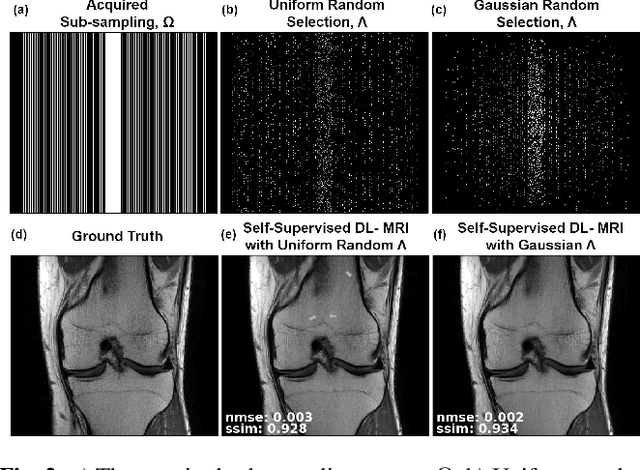

Self-Supervised Learning of Physics-Based Reconstruction Neural Networks without Fully-Sampled Reference Data

Dec 16, 2019

Abstract: Purpose: To develop a strategy for training a physics-driven MRI reconstruction neural network without a database of fully-sampled datasets. Theory and Methods: Self-supervised learning via data under-sampling (SSDU) for physics-based deep learning (DL) reconstruction partitions available measurements into two sets, one of which is used in the data consistency units in the unrolled network and the other is used to define the loss for training. The proposed training without fully-sampled data is compared to fully-supervised training with ground-truth data, as well as conventional compressed sensing and parallel imaging methods using the publicly available fastMRI knee database. The same physics-based neural network is used for both proposed SSDU and supervised training. The SSDU training is also applied to prospectively 2-fold accelerated high-resolution brain datasets at different acceleration rates, and compared to parallel imaging. Results: Results on five different knee sequences at an acceleration rate of 4 show that the proposed self-supervised approach performs closely with supervised learning, while significantly outperforming conventional compressed sensing and parallel imaging, as characterized by quantitative metrics and a clinical reader study. The results on prospectively sub-sampled brain datasets, where supervised learning cannot be employed due to lack of ground-truth reference, show that the proposed self-supervised approach successfully performs reconstruction at high acceleration rates (4, 6 and 8). Image readings indicate improved visual reconstruction quality with the proposed approach compared to parallel imaging at acquisition acceleration. Conclusion: The proposed SSDU approach allows training of physics-based DL-MRI reconstruction without fully-sampled data, while achieving comparable results with supervised DL-MRI trained on fully-sampled data.

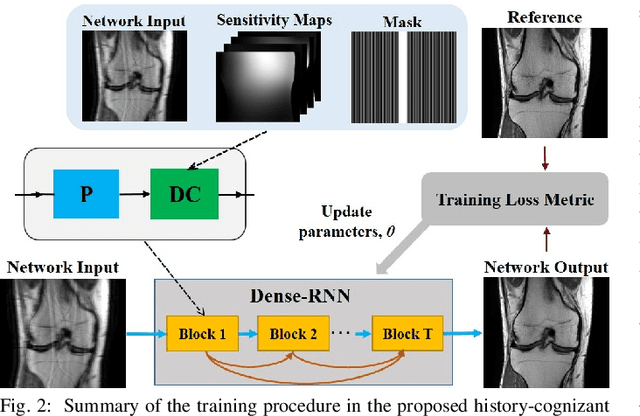

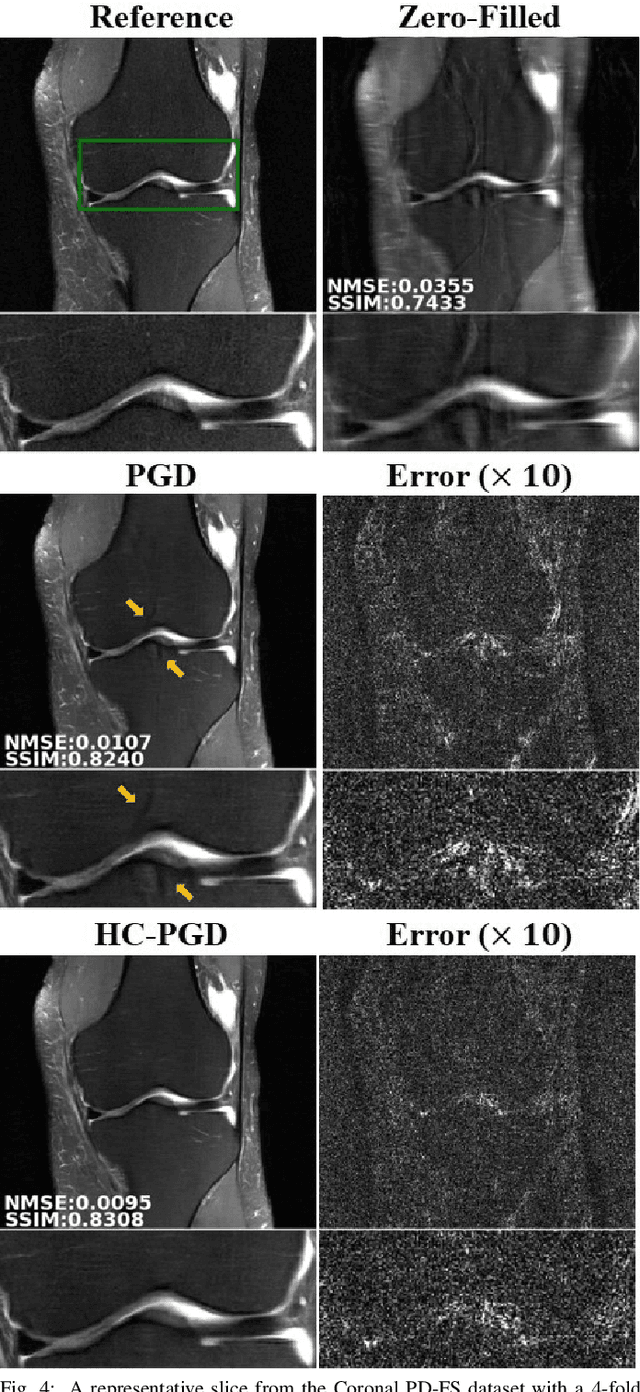

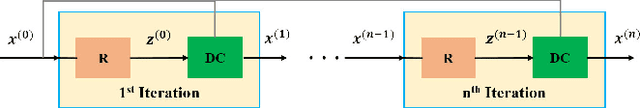

Dense Recurrent Neural Networks for Inverse Problems: History-Cognizant Unrolling of Optimization Algorithms

Dec 16, 2019

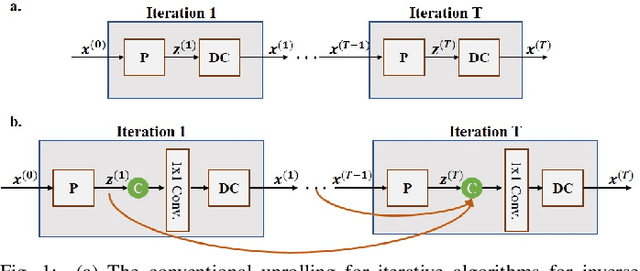

Abstract: Inverse problems in medical imaging applications incorporate domain-specific knowledge about the forward encoding operator in a regularized reconstruction framework. Recently, physics-driven deep learning (DL) methods have been proposed to use neural networks for data-driven regularization. These methods unroll iterative optimization algorithms to solve the inverse problem objective function, by alternating between domain-specific data consistency and data-driven regularization via neural networks. The whole unrolled network is then trained end-to-end to learn the parameters of the network. Due to the simplicity of data consistency updates with gradient descent steps, proximal gradient descent (PGD) is a common approach to unroll physics-driven DL reconstruction methods. However, PGD methods have slow convergence rates, necessitating a higher number of unrolled iterations, leading to memory issues in training and slower reconstruction times in testing. Inspired by efficient variants of PGD methods that use a history of the previous iterates, we propose a history-cognizant unrolling of the optimization algorithm with dense connections across iterations for improved performance. In our approach, the gradient descent steps are calculated at a trainable combination of the outputs of all the previous regularization units. We also apply this idea to unrolling variable splitting methods with quadratic relaxation. Our results in reconstruction of the fastMRI knee dataset show that the proposed history-cognizant approach reduces residual aliasing artifacts compared to its conventional unrolled counterpart without requiring extra computational power or increasing reconstruction time.
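The history-cognizant update can be sketched on a toy linear inverse problem. This is only an illustration under stated assumptions: soft-thresholding stands in for the learned regularization network, the combination weights are fixed inputs rather than trained parameters, the zero initialization is counted as part of the history, and the names `history_cognizant_pgd` and `soft_threshold` are hypothetical.

```python
import numpy as np

def soft_threshold(x, tau):
    """Stand-in for a learned regularization unit (assumption)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def history_cognizant_pgd(A, y, num_iters=10, tau=0.01, weights=None):
    """Unrolled PGD in which each gradient step is taken at a weighted
    combination of all previous regularizer outputs.

    weights[k] must have length k + 1 (one weight per stored iterate).
    If None, a uniform average is used as a placeholder for trained weights.
    A one-hot weight on the latest iterate recovers conventional PGD.
    """
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1 / (spectral norm squared)
    history = [np.zeros(A.shape[1])]         # initial estimate
    for k in range(num_iters):
        if weights is None:
            w = np.full(len(history), 1.0 / len(history))
        else:
            w = weights[k]
        x_comb = sum(wi * xi for wi, xi in zip(w, history))
        # Gradient descent step on 0.5 * ||A x - y||^2 (data consistency).
        z = x_comb - step * (A.T @ (A @ x_comb - y))
        history.append(soft_threshold(z, tau))
    return history[-1]

# Toy problem: underdetermined system with a sparse ground truth.
rng = np.random.default_rng(0)
A = rng.standard_normal((20, 40))
x_true = np.zeros(40)
x_true[[3, 17, 29]] = [1.0, -2.0, 0.5]
y = A @ x_true
x_hist = history_cognizant_pgd(A, y, num_iters=8)
```

In a trained network the weights would be learned jointly with the regularizer parameters; the fixed weights here only show where the dense connections across iterations enter the update.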

Self-Supervised Physics-Based Deep Learning MRI Reconstruction Without Fully-Sampled Data

Oct 21, 2019

Abstract: Deep learning (DL) has emerged as a tool for improving accelerated MRI reconstruction. A common strategy among DL methods is the physics-based approach, where a regularized iterative algorithm alternating between data consistency and a regularizer is unrolled for a finite number of iterations. This unrolled network is then trained end-to-end in a supervised manner, using fully-sampled data as ground truth for the network output. However, in a number of scenarios, it is difficult to obtain fully-sampled datasets, due to physiological constraints such as organ motion or physical constraints such as signal decay. In this work, we tackle this issue and propose a self-supervised learning strategy that enables physics-based DL reconstruction without fully-sampled data. Our approach is to divide the acquired sub-sampled points for each scan into training and validation subsets. During training, data consistency is enforced over the training subset, while the validation subset is used to define the loss function. Results show that the proposed self-supervised learning method successfully reconstructs images without fully-sampled data, performing similarly to the supervised approach that is trained with fully-sampled references. This has implications for physics-based inverse problem approaches in other settings, where fully-sampled data is not available or not possible to acquire.
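A loss defined only on held-out k-space points can be sketched as follows. The single-coil orthonormal FFT forward model and the normalized l2 error are simplifying assumptions for illustration; the abstract does not state the loss function or the encoding operator, and the name `masked_kspace_loss` is hypothetical.

```python
import numpy as np

def masked_kspace_loss(image_estimate, kspace_acquired, loss_mask):
    """Normalized l2 error between predicted and acquired k-space,
    evaluated only at the held-out locations in `loss_mask`."""
    kspace_estimate = np.fft.fft2(image_estimate, norm="ortho")
    diff = (kspace_estimate - kspace_acquired)[loss_mask]
    return np.linalg.norm(diff) / np.linalg.norm(kspace_acquired[loss_mask])

# Toy check: a perfect estimate gives (near) zero loss, an all-zero
# estimate gives a normalized loss of one.
rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32))
kspace = np.fft.fft2(image, norm="ortho")
loss_mask = rng.random((32, 32)) < 0.1
loss_true = masked_kspace_loss(image, kspace, loss_mask)
loss_zero = masked_kspace_loss(np.zeros_like(image), kspace, loss_mask)
```

Because the network never sees the held-out points through its data consistency units, a low value of this loss indicates that the reconstruction predicts unseen measurements rather than merely reproducing its inputs.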
