Sergey Pavlov

Weakly Supervised Fine Tuning Approach for Brain Tumor Segmentation Problem

Nov 06, 2019

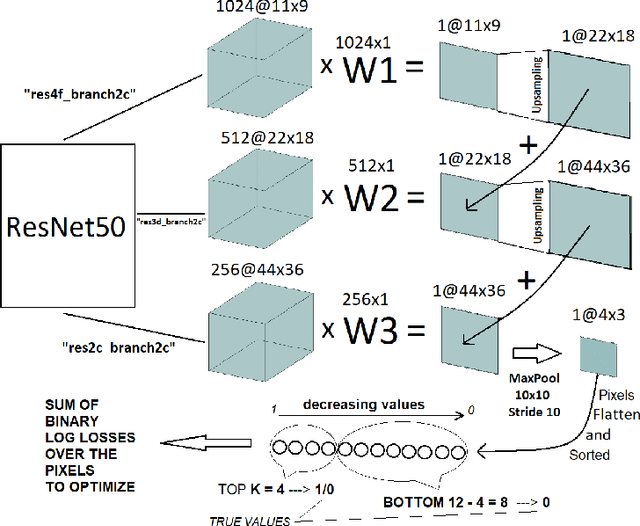

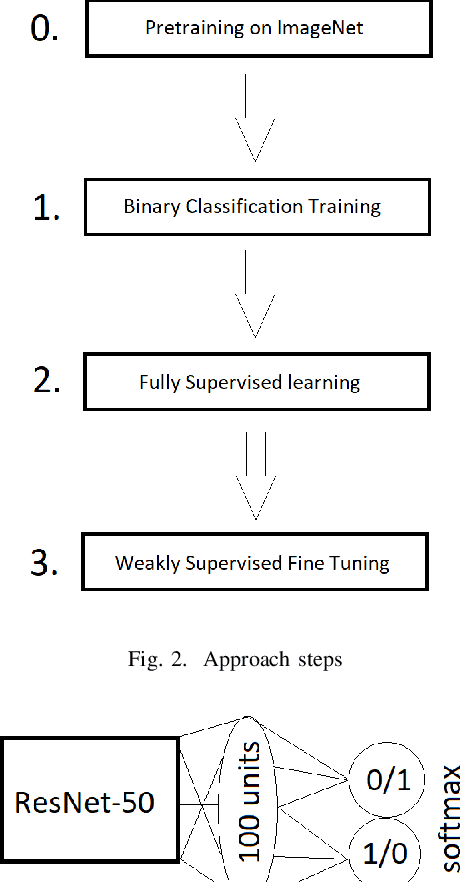

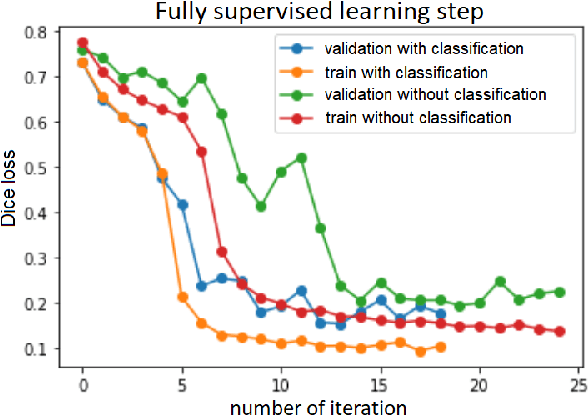

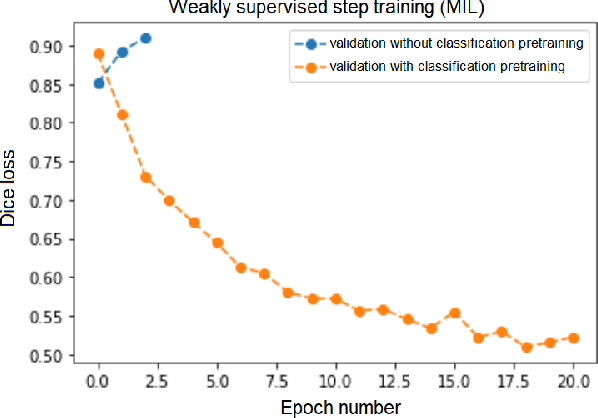

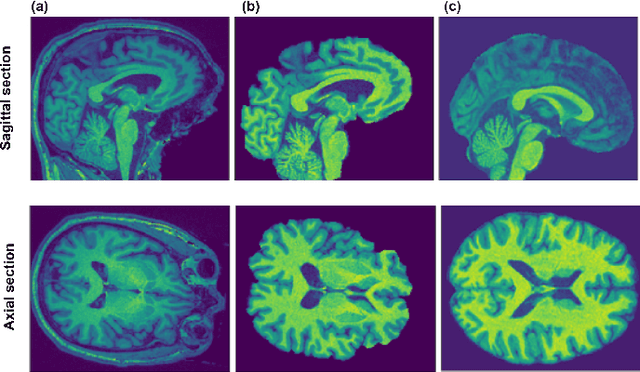

Abstract: Segmentation of tumors in brain MRI images is a challenging task: most recent methods demand large volumes of data with pixel-level annotations, which are generally costly to obtain. In contrast, image-level annotations, where only the presence of a lesion is marked, are generally cheap, are generated in far larger volumes than pixel-level labels, and contain less labeling noise. In the context of brain tumor segmentation, both pixel-level and image-level annotations are commonly available; thus, a natural question arises whether a segmentation procedure could take advantage of both. In the present work we: 1) propose a learning-based framework that allows simultaneous usage of both pixel- and image-level annotations in MRI images to learn a segmentation model for brain tumors; 2) study the influence of the relative amounts of pixel- and image-level annotations on the quality of brain tumor segmentation; 3) compare our approach to the traditional fully supervised approach and show that the performance of our method in terms of segmentation quality may be competitive.

3D Deformable Convolutions for MRI classification

Nov 05, 2019

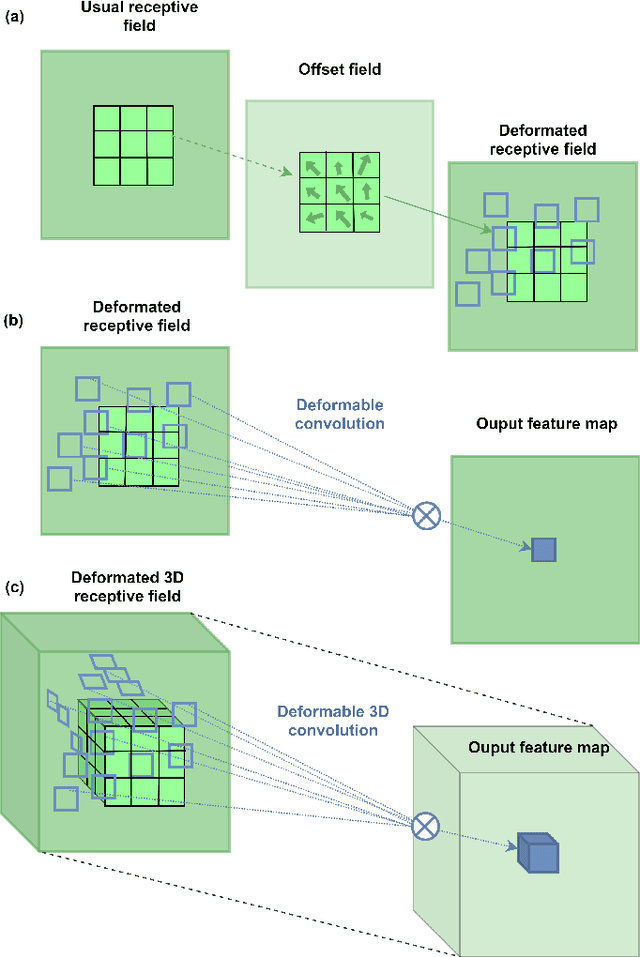

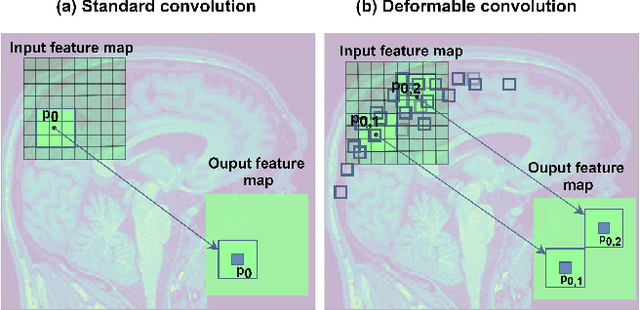

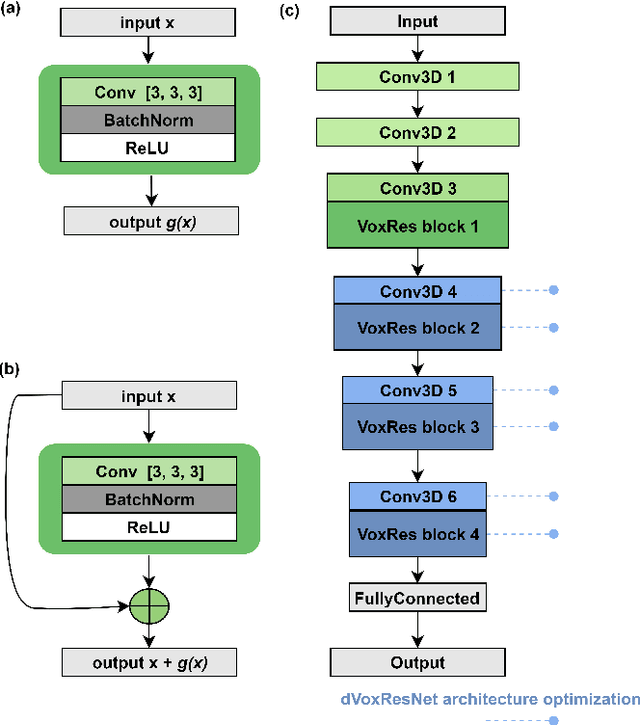

Abstract: Deep convolutional neural networks have proved to be a powerful tool for MRI analysis. In the current work, we explore the potential of deformable convolutional layers in deep neural networks for MRI data classification. We propose new 3D deformable convolutions (d-convolutions), implement them in the VoxResNet architecture, and apply them to structural MRI data classification. We show that 3D d-convolutions outperform standard ones and are effective for unprocessed 3D MR images, being robust to particular geometrical properties of the data. The dVoxResNet architecture, proposed here for the first time, exhibits high potential for use in MRI data classification.
