Sergei Petrov

Incorporating Token Usage into Prompting Strategy Evaluation

May 20, 2025

Abstract: In recent years, large language models have demonstrated remarkable performance across diverse tasks. However, their task effectiveness is heavily dependent on the prompting strategy used to elicit output, which can vary widely in both performance and token usage. While task performance is often used to determine prompting strategy success, we argue that efficiency--balancing performance and token usage--can be a more practical metric for real-world utility. To enable this, we propose Big-$O_{tok}$, a theoretical framework for describing the token usage growth of prompting strategies, and analyze Token Cost, an empirical measure of tokens per performance. We apply these to several common prompting strategies and find that increased token usage leads to drastically diminishing performance returns. Our results validate the Big-$O_{tok}$ analyses and reinforce the need for efficiency-aware evaluations.
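The abstract describes Token Cost only as "tokens per performance". A minimal sketch of that ratio follows, assuming performance is an accuracy in [0, 1] and token usage is a mean token count per query. The `StrategyResult` type, the `token_cost` function, and every number below are hypothetical illustrations, not the paper's definitions or results.

```python
from dataclasses import dataclass


@dataclass
class StrategyResult:
    """Hypothetical record for one prompting strategy (not from the paper)."""
    name: str
    avg_tokens: float  # assumed: mean tokens consumed per query
    accuracy: float    # assumed: task performance in [0, 1]


def token_cost(result: StrategyResult) -> float:
    """Tokens spent per unit of performance; lower means more efficient."""
    if result.accuracy <= 0:
        # A strategy that never succeeds has unbounded cost per unit of performance.
        return float("inf")
    return result.avg_tokens / result.accuracy


# Invented numbers, for illustration only.
results = [
    StrategyResult("direct", avg_tokens=100.0, accuracy=0.50),
    StrategyResult("step-by-step", avg_tokens=400.0, accuracy=0.80),
]

# Rank strategies from most to least token-efficient.
ranked = sorted(results, key=token_cost)
for r in ranked:
    print(f"{r.name}: {token_cost(r):.1f} tokens per unit accuracy")
```

With these made-up inputs the more accurate strategy still ranks second, because its accuracy gain is smaller than its growth in token usage.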

Soft-Sensing ConFormer: A Curriculum Learning-based Convolutional Transformer

Nov 12, 2021

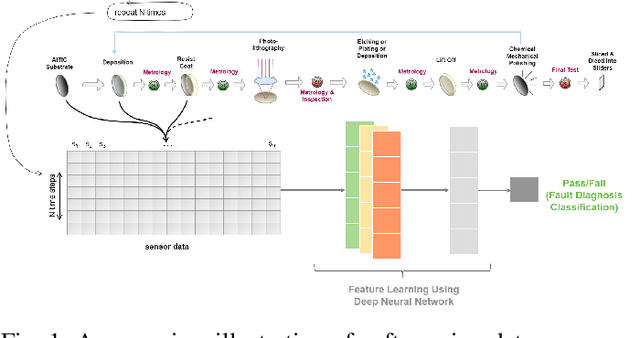

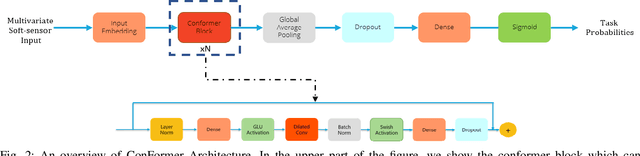

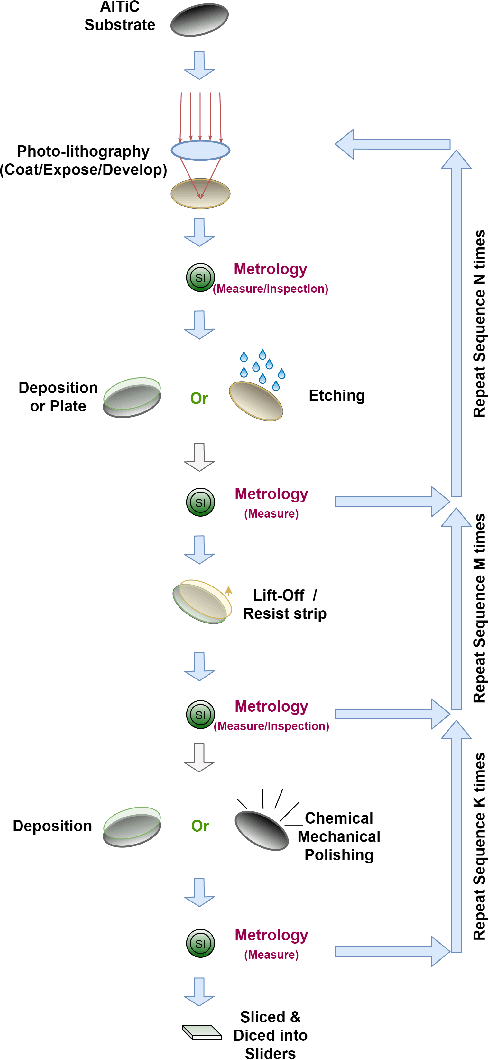

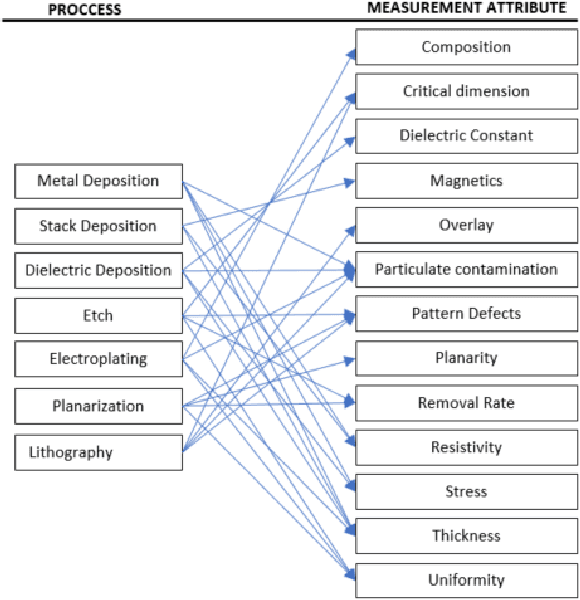

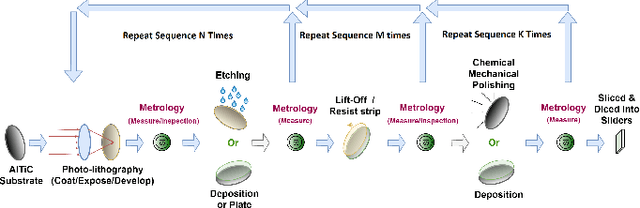

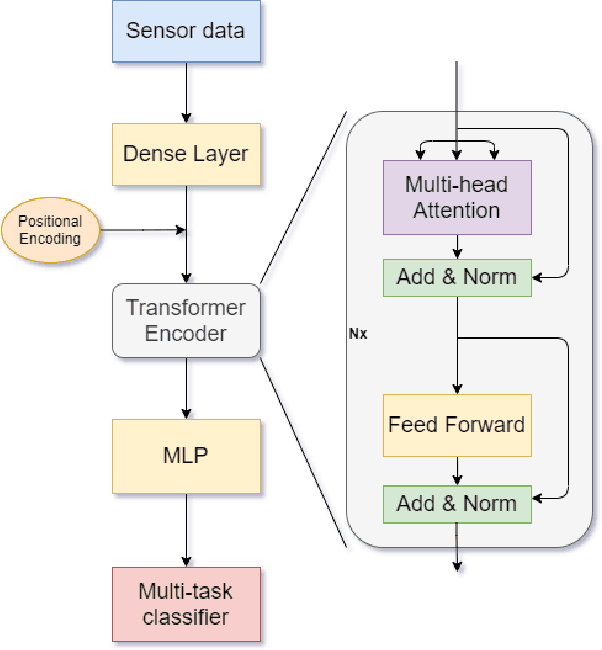

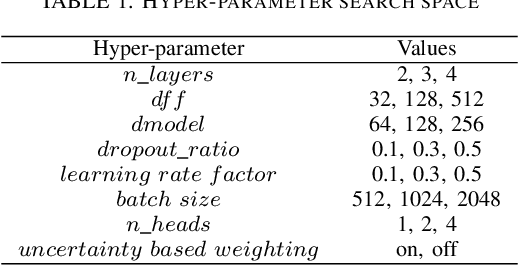

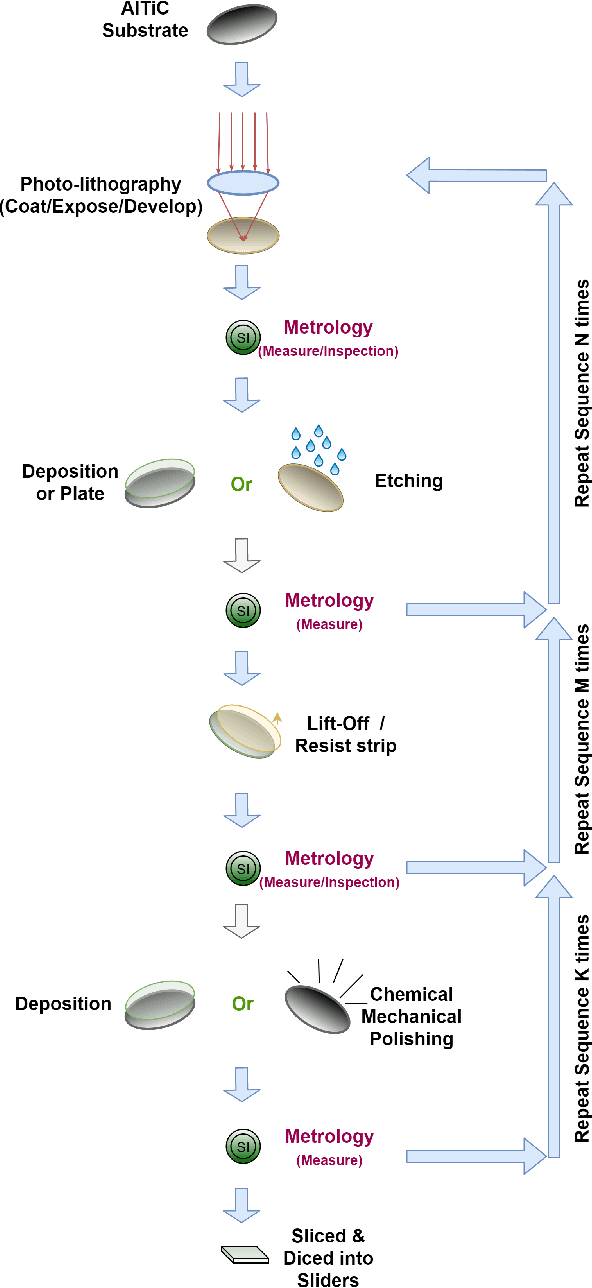

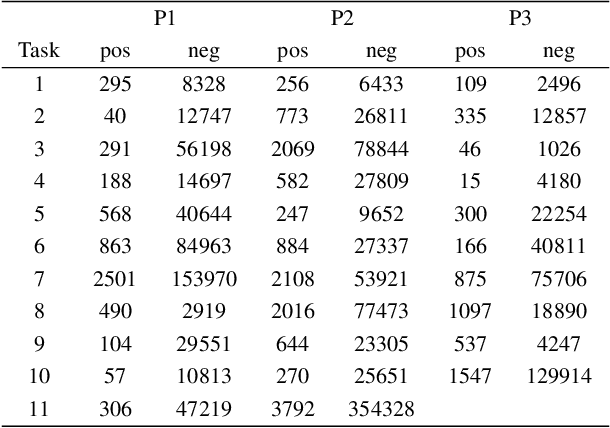

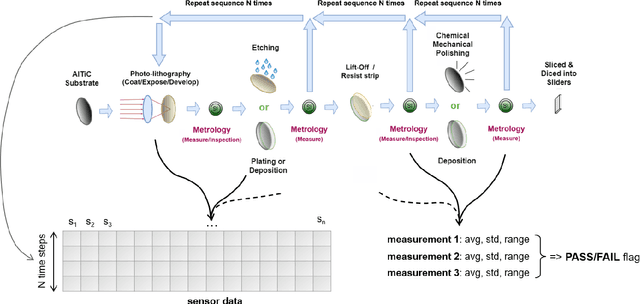

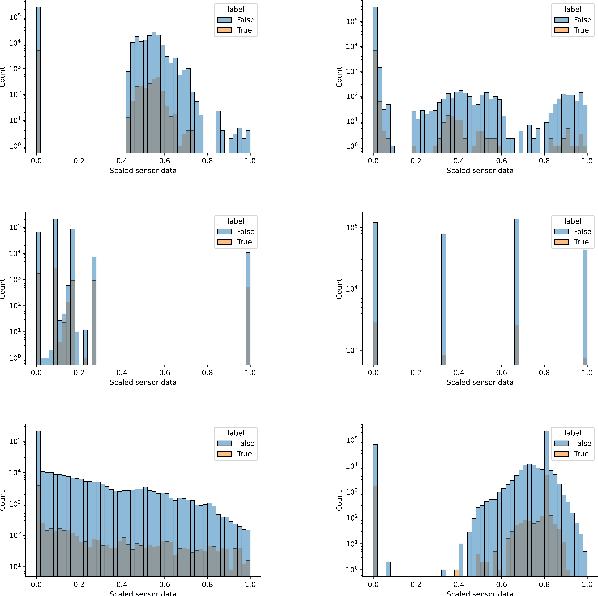

Abstract: Over the last few decades, modern industries have investigated several cost-effective methodologies to improve the productivity and yield of semiconductor manufacturing. Data-driven soft sensors play an essential role in real-time monitoring and control, and they have provided a competitive edge when augmented with deep learning approaches for wafer fault diagnostics. Despite the success of deep learning methods across various domains, they tend to perform poorly on multivariate soft-sensing data. To mitigate this, we propose a soft-sensing ConFormer (CONvolutional transFORMER) for the wafer fault-diagnostic classification task. It consists primarily of multi-head convolution modules, which combine the fast, lightweight operations of convolutions with the ability to learn robust representations through a multi-head design, as in transformers. Another key issue is that traditional learning paradigms tend to perform poorly on noisy and highly imbalanced soft-sensing data. To address this, we augment our soft-sensing ConFormer model with a curriculum learning-based loss function, which learns easy samples in the early phase of training and difficult ones later. To further demonstrate the utility of our proposed architecture, we performed extensive experiments on various toolsets of Seagate Technology's wafer manufacturing process, which are shared openly along with this work. To the best of our knowledge, this is the first time that a curriculum learning-based soft-sensing ConFormer architecture has been proposed for soft-sensing data, and our results show strong promise for future use in the soft-sensing research domain.

GraSSNet: Graph Soft Sensing Neural Networks

Nov 12, 2021

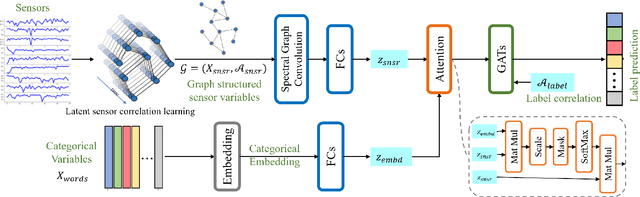

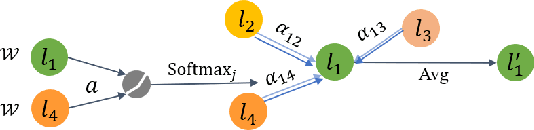

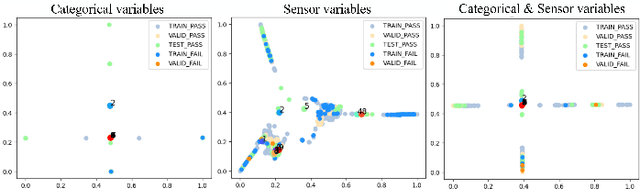

Abstract: In the era of big data, data-driven classification has become an essential method in smart manufacturing to guide production and optimize inspection. The industrial data obtained in practice is usually time-series data collected by soft sensors, and it is highly nonlinear, nonstationary, imbalanced, and noisy. Most existing soft-sensing machine learning models focus on capturing either intra-series temporal dependencies or pre-defined inter-series correlations, while ignoring the correlation between labels, even though each instance is associated with multiple labels simultaneously. In this paper, we propose a novel graph-based soft-sensing neural network (GraSSNet) for multivariate time-series classification of noisy and highly imbalanced soft-sensing data. The proposed GraSSNet is able to 1) capture the inter-series and intra-series dependencies jointly in the spectral domain; 2) exploit the label correlations by superimposing a label graph built from statistical co-occurrence information; 3) learn features with an attention mechanism from both the textual and numerical domains; and 4) leverage unlabeled data and mitigate data imbalance through semi-supervised learning. Comparative studies with other commonly used classifiers are carried out on Seagate soft-sensing data, and the experimental results validate the competitive performance of our proposed method.
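The abstract mentions a label graph built from statistical co-occurrence information but gives no construction details. One common way to build such a graph is from conditional co-occurrence frequencies, sketched below. The function name `label_cooccurrence_graph`, the conditional-probability weighting, and the toy label matrix are all assumptions for illustration and may differ from what GraSSNet actually does.

```python
def label_cooccurrence_graph(labels):
    """Build a directed label graph from a multi-label indicator matrix.

    labels: list of rows, each a list of 0/1 flags (one flag per label).
    Returns graph[i][j], an estimate of P(label j present | label i present),
    with a zero diagonal. This weighting is an assumed choice, not the paper's.
    """
    n_labels = len(labels[0])
    counts = [[0] * n_labels for _ in range(n_labels)]
    for row in labels:
        active = [i for i, flag in enumerate(row) if flag]
        # Count every ordered pair of labels that appear together in this sample.
        for i in active:
            for j in active:
                counts[i][j] += 1

    graph = [[0.0] * n_labels for _ in range(n_labels)]
    for i in range(n_labels):
        total = counts[i][i]  # number of samples in which label i occurs
        for j in range(n_labels):
            if i != j and total > 0:
                graph[i][j] = counts[i][j] / total
    return graph


# Toy multi-label data (invented): 4 samples, 3 labels.
toy_labels = [
    [1, 1, 0],
    [1, 0, 0],
    [0, 1, 1],
    [1, 1, 0],
]
graph = label_cooccurrence_graph(toy_labels)
```

The resulting matrix is asymmetric: a rare label that always appears alongside a frequent one gets a strong outgoing edge, while the reverse edge stays weak.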

Soft Sensing Transformer: Hundreds of Sensors are Worth a Single Word

Nov 10, 2021

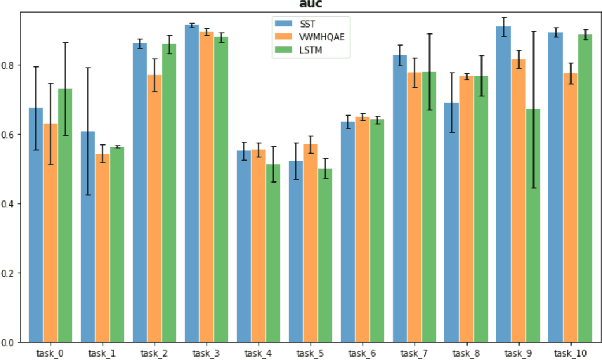

Abstract: With the rapid development of AI technology in recent years, there have been many studies applying deep learning models to soft sensing. However, while the models have become more complex, the data sets remain limited: researchers are fitting million-parameter models with hundreds of data samples, which is insufficient to exercise the effectiveness of their models, so the models often fail to perform when deployed in industrial applications. To solve this long-standing problem, we are providing large-scale, high-dimensional time-series manufacturing sensor data from Seagate Technology to the public. We demonstrate the challenges and effectiveness of modeling industrial big data with a Soft Sensing Transformer model on these data sets. The transformer is used because it has outperformed state-of-the-art techniques in natural language processing, and it has since also performed well when applied directly to computer vision without the introduction of image-specific inductive biases. We observe the similarity between sentence structure and sensor readings, and we process the multivariate sensor readings in a time series in the same manner as sentences in natural language. The high-dimensional time-series data is formatted into the same shape as embedded sentences and fed into the transformer model. The results show that the transformer model outperforms the benchmark models in the soft-sensing field, which are based on auto-encoder and long short-term memory (LSTM) models. To the best of our knowledge, we are the first team in academia or industry to benchmark the performance of the original transformer model on large-scale numerical soft-sensing data.
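The idea of formatting sensor time series into the shape of embedded sentences can be illustrated with a small sketch: each time step's vector of sensor readings plays the role of a word embedding, and each series plays the role of a sentence. The padding, truncation, and mask below are assumptions borrowed from common NLP preprocessing; the abstract does not say how the authors handle variable-length series, and `to_sentence_batch` is a hypothetical helper.

```python
def to_sentence_batch(series_list, max_len, pad_value=0.0):
    """Shape variable-length sensor series like a batch of embedded sentences.

    series_list: list of series; each series is a list of time steps, and each
                 time step is a list of sensor readings (the "word" vector).
    Returns (batch, mask): batch has shape [n_series][max_len][n_sensors];
    mask marks real time steps with 1 and padded ones with 0.
    """
    n_sensors = len(series_list[0][0])
    batch, mask = [], []
    for series in series_list:
        # Truncate long series, then copy each step so the input is not mutated.
        steps = [list(step) for step in series[:max_len]]
        real = len(steps)
        # Pad short series with constant vectors, as a tokenizer pads sentences.
        steps += [[pad_value] * n_sensors for _ in range(max_len - real)]
        batch.append(steps)
        mask.append([1] * real + [0] * (max_len - real))
    return batch, mask


# Toy input (invented): two series with 2 sensors each, of lengths 2 and 1.
toy_series = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[5.0, 6.0]],
]
batch, mask = to_sentence_batch(toy_series, max_len=2)
```

A transformer encoder could then consume `batch` as it would a batch of token embeddings, using `mask` to ignore the padded steps.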

IEEE BigData 2021 Cup: Soft Sensing at Scale

Sep 07, 2021

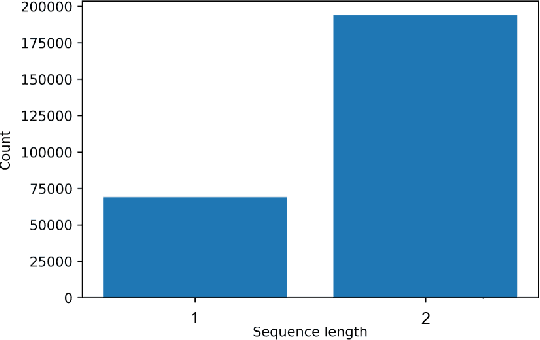

Abstract: IEEE BigData 2021 Cup: Soft Sensing at Scale is a data mining competition organized by Seagate Technology in association with the IEEE BigData 2021 conference. The scope of this challenge is to tackle the task of classifying soft-sensing data with machine learning techniques. In this paper, we go into the details of the challenge and describe the data set provided to participants. We define the metrics of interest and the baseline models, and we describe approaches we found meaningful, which may be a good starting point for further analysis. We discuss the results obtained with our approaches and give insights into the potential challenges participants may run into. Students, researchers, and anyone interested in working on a major industrial problem are welcome to participate in the challenge!
