Seongwoo Moon

VLA-R: Vision-Language Action Retrieval toward Open-World End-to-End Autonomous Driving

Nov 16, 2025

Abstract:Exploring open-world situations in an end-to-end manner is a promising yet challenging task due to the need for strong generalization capabilities. In particular, end-to-end autonomous driving in unstructured outdoor environments often encounters conditions that were unfamiliar during training. In this work, we present Vision-Language Action Retrieval (VLA-R), an open-world end-to-end autonomous driving (OW-E2EAD) framework that integrates open-world perception with a novel vision-action retrieval paradigm. We leverage a frozen vision-language model for open-world detection and segmentation to obtain multi-scale, prompt-guided, and interpretable perception features without domain-specific tuning. A Q-Former bottleneck aggregates fine-grained visual representations with language-aligned visual features, bridging perception and action domains. To learn transferable driving behaviors, we introduce a vision-action contrastive learning scheme that aligns vision-language and action embeddings for effective open-world reasoning and action retrieval. Our experiments on a real-world robotic platform demonstrate strong generalization and exploratory performance in unstructured, unseen environments, even with limited data. Demo videos are provided in the supplementary material.
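The abstract does not spell out the contrastive objective or the retrieval step, so the following is only a minimal sketch of the general idea, assuming a symmetric InfoNCE loss over paired vision and action embeddings and nearest-neighbour retrieval by cosine similarity. The function names, the temperature value, and the toy 2-D embeddings are illustrative assumptions, not the authors' implementation.

```python
import math

def normalize(v):
    """Scale a vector to unit length (assumes a non-zero vector)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def cosine_similarity(a, b):
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))

def info_nce(vision_batch, action_batch, temperature=0.1):
    """Symmetric InfoNCE: the i-th vision embedding is the positive
    for the i-th action embedding; all other pairs are negatives."""
    logits = [[cosine_similarity(v, a) / temperature for a in action_batch]
              for v in vision_batch]

    def cross_entropy_diag(rows):
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)  # subtract the max for numerical stability
            log_z = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_z - row[i]
        return total / len(rows)

    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_diag(logits) + cross_entropy_diag(transposed))

def retrieve_action(vision_embedding, action_bank):
    """Return the index of the stored action embedding closest to the query."""
    scores = [cosine_similarity(vision_embedding, a) for a in action_bank]
    return max(range(len(scores)), key=scores.__getitem__)

# Hypothetical toy data: two vision embeddings and their paired actions.
vision = [[1.0, 0.0], [0.0, 1.0]]
actions = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = info_nce(vision, actions)
misaligned_loss = info_nce(vision, actions[::-1])
```

In this toy setup the loss is low when each vision embedding sits next to its own action embedding and high when the pairs are swapped, which is the property that makes similarity-based retrieval meaningful after training.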

Words to Wheels: Vision-Based Autonomous Driving Understanding Human Language Instructions Using Foundation Models

Oct 14, 2024

Abstract:This paper introduces an innovative application of foundation models, enabling Unmanned Ground Vehicles (UGVs) equipped with an RGB-D camera to navigate to designated destinations based on human language instructions. Unlike learning-based methods, this approach does not require prior training but instead leverages existing foundation models, thus facilitating generalization to novel environments. A large language model (LLM) first transforms the human language instructions into a 'cognitive route description', a detailed navigation route expressed in human language. The vehicle then decomposes this description into landmarks and navigation maneuvers. The vehicle also determines elevation costs and identifies navigability levels of different regions through a terrain segmentation model, GANav, trained on open datasets. Semantic elevation costs, which take both elevation and navigability levels into account, are estimated and provided to the Model Predictive Path Integral (MPPI) planner, responsible for local path planning. Concurrently, the vehicle searches for target landmarks using foundation models, including YOLO-World and EfficientViT-SAM. Ultimately, the vehicle executes the navigation commands to reach the designated destination, the final landmark. Our experiments demonstrate that this application successfully guides UGVs to their destinations following human language instructions in novel environments, such as unfamiliar terrain or urban settings.
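To illustrate how an MPPI planner consumes a cost function, here is a minimal sketch of the standard MPPI update on a toy 1-D problem. Everything in it is an assumption for illustration: the quadratic `rollout_cost` stands in for the paper's semantic elevation cost (which the abstract does not define), and the sample count, noise level, and temperature `lam` are arbitrary choices.

```python
import math
import random

def rollout_cost(x0, controls, goal=1.0, dt=0.1):
    """Toy 1-D rollout: integrate velocity commands and sum the squared
    distance to the goal. A real planner would add terrain costs here."""
    x, cost = x0, 0.0
    for u in controls:
        x += u * dt
        cost += (x - goal) ** 2
    return cost

def mppi_step(x0, nominal, cost_fn, rng, num_samples=64, noise_std=0.5, lam=1.0):
    """One MPPI update: perturb the nominal control sequence, score each
    rollout, and average the perturbations with softmin weights."""
    horizon = len(nominal)
    noises = [[rng.gauss(0.0, noise_std) for _ in range(horizon)]
              for _ in range(num_samples)]
    costs = [cost_fn(x0, [u + e for u, e in zip(nominal, eps)]) for eps in noises]
    best = min(costs)  # subtract the minimum for numerical stability
    weights = [math.exp(-(c - best) / lam) for c in costs]
    total = sum(weights)
    return [u + sum(w * eps[t] for w, eps in zip(weights, noises)) / total
            for t, u in enumerate(nominal)]

def run_demo(iterations=30, horizon=10, seed=0):
    """Refine a zero-initialised control sequence and report the cost
    before and after optimisation."""
    rng = random.Random(seed)
    controls = [0.0] * horizon
    initial = rollout_cost(0.0, controls)
    for _ in range(iterations):
        controls = mppi_step(0.0, controls, rollout_cost, rng)
    return initial, rollout_cost(0.0, controls)
```

Calling `run_demo()` returns the rollout cost before and after refinement. In a receding-horizon deployment the planner would instead apply the first control, shift the sequence, and re-plan at every step; this sketch only repeats the optimisation from a fixed start state.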

Enhancing State Estimator for Autonomous Race Car: Leveraging Multi-modal System and Managing Computing Resources

Aug 14, 2023

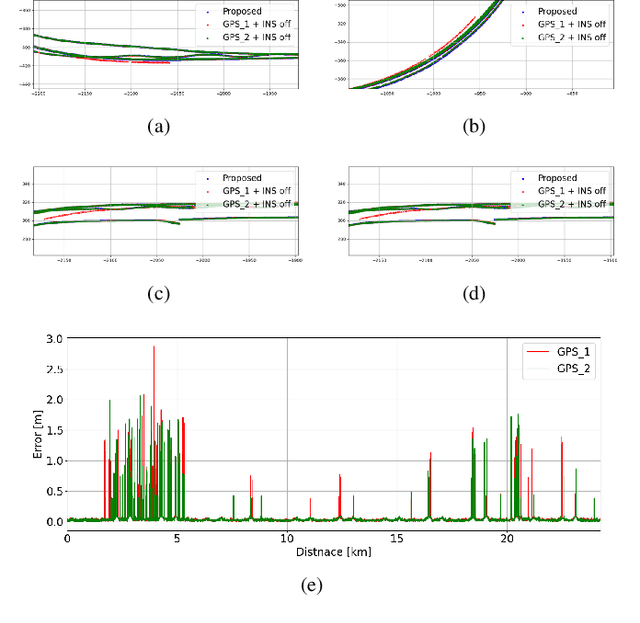

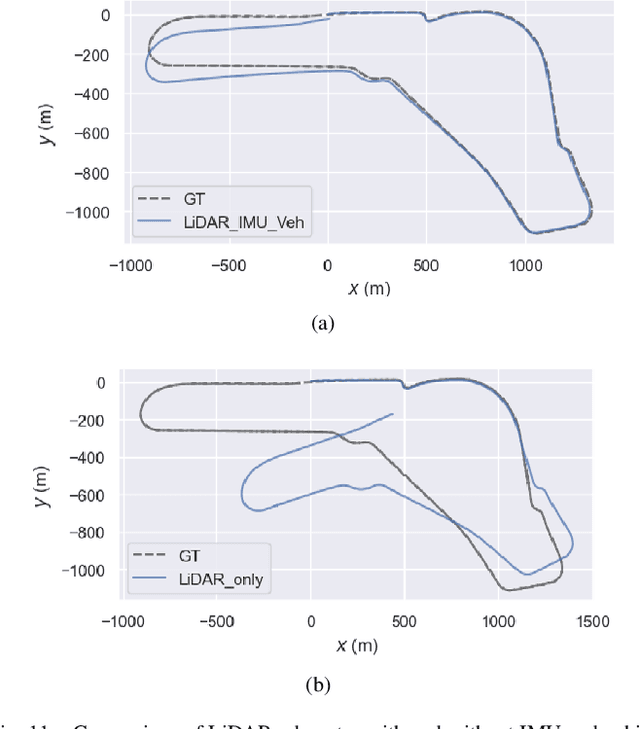

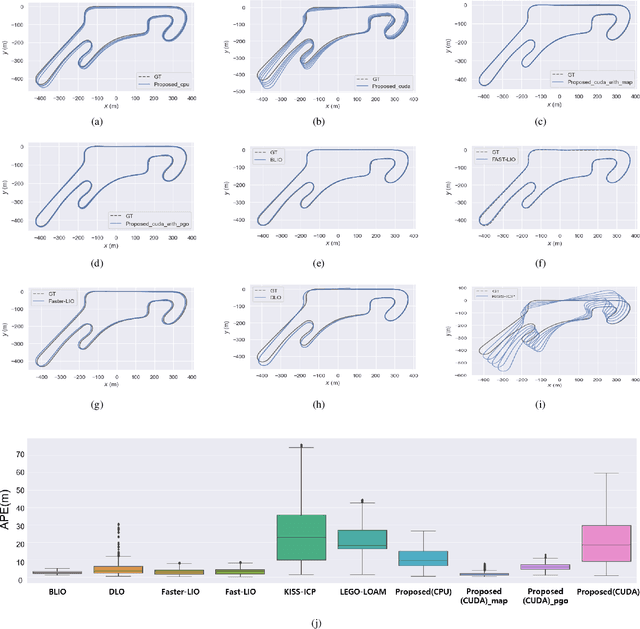

Abstract:This paper introduces an innovative approach to enhance the state estimator for high-speed autonomous race cars, addressing challenges related to unreliable measurements, localization failures, and computing resource management. The proposed robust localization system utilizes a Bayesian-based probabilistic approach to evaluate multimodal measurements, ensuring the use of credible data for accurate and reliable localization, even in harsh racing conditions. To tackle potential localization failures during intense racing, we present a resilient navigation system. This system enables the race car to continue track-following by leveraging direct perception information in planning and execution, ensuring continuous performance despite localization disruptions. Efficient computing resource management is critical to avoid overload and system failure. We optimize computing resources using an efficient LiDAR-based state estimation method. Leveraging CUDA programming and GPU acceleration, we perform nearest points search and covariance computation efficiently, overcoming CPU bottlenecks. Real-world and simulation tests validate the system's performance and resilience. The proposed approach successfully recovers from failures, effectively preventing accidents and ensuring race car safety.
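The abstract does not describe how measurement credibility is evaluated, so the sketch below shows one common technique in that spirit, not the authors' method: a scalar Kalman update that rejects a measurement when its squared Mahalanobis distance from the prediction exceeds a gate. The function name and the gate value of 9.0 (a 3-sigma bound in one dimension) are assumptions.

```python
def gated_update(mean, var, measurement, meas_var, gate=9.0):
    """Fuse a scalar measurement into a Gaussian state estimate, skipping
    it when the normalised innovation is implausibly large.

    Returns (new_mean, new_var, accepted)."""
    innovation = measurement - mean
    innovation_var = var + meas_var
    # Squared Mahalanobis distance of the measurement from the prediction.
    if innovation * innovation / innovation_var > gate:
        return mean, var, False  # treat as an outlier and keep the prior
    gain = var / innovation_var  # Kalman gain for a scalar state
    return mean + gain * innovation, (1.0 - gain) * var, True

# A plausible measurement is fused; a wildly inconsistent one is rejected.
fused = gated_update(0.0, 1.0, 1.0, 1.0)
rejected = gated_update(0.0, 1.0, 100.0, 1.0)
```

With several sensors, the same check can be applied to each measurement in turn, so that an unreliable source is ignored without stopping the estimator.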
